Hi SS, hello all,

Chinese Tianjic chip: competition?

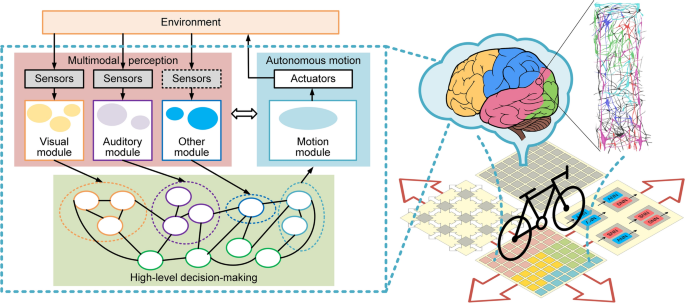

Looks like Tianjic uses a spiking neural network (SNN) for audio. It also uses a CNN (MAC-based) for video and an MLP for motion control.

A hybrid and scalable brain-inspired robotic platform | Scientific Reports (nature.com)

> For each module, we opened independent data paths, which were distinct in information representation, frequency and throughput. In the visual module, each frame of video was resized to a 70 × 70 gray image and fed into a CNN as multi-bit values, enabling rich environmental spatial information to be maintained with limited computing resources (Fig. 3a). In contrast, the raw audio stream was transformed into binary spike trains in the auditory module. After end-point detection [32, 33], the key frequency features were obtained by taking the Mel Frequency Cepstral Coefficient (MFCC) [34]. A Gaussian population [35] was used to encode each MFCC feature into spike trains as input to a three-layer fully-connected SNN (Fig. 3b). For motion control, sequential signals were first generated by functional cores to merge with the steering commands from other modules as the comprehensive target angle. Data from all sensors were then integrated via the MLP network for angle control (Fig. 3d). The designs and training of each network-based module are described in the Methods.
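For anyone wondering what "a Gaussian population was used to encode each MFCC feature into spike trains" means in practice, here's a rough sketch. The quoted text doesn't give the parameters, so everything below is an assumption for illustration only, not Tianjic's actual scheme: the number of neurons per feature, the receptive-field width, the number of time steps, the normalised value range, and the choice of Bernoulli rate coding (latency coding would be another option). The function names are made up.

```python
import math
import random


def gaussian_population_rates(x, x_min, x_max, n_neurons=8, width=1.0):
    """Response of n_neurons Gaussian receptive fields to a scalar x.

    Centres are evenly spaced over [x_min, x_max]; sigma is `width` times the
    centre spacing (an assumed choice). Requires n_neurons >= 2.
    """
    spacing = (x_max - x_min) / (n_neurons - 1)
    sigma = width * spacing
    centres = [x_min + i * spacing for i in range(n_neurons)]
    return [math.exp(-((x - c) ** 2) / (2 * sigma ** 2)) for c in centres]


def encode_feature(x, x_min, x_max, n_neurons=8, n_steps=20, rng=None):
    """Rate-code one feature: at each time step neuron i spikes (1) with
    probability equal to its Gaussian response, otherwise stays silent (0)."""
    if rng is None:
        rng = random.Random(0)
    rates = gaussian_population_rates(x, x_min, x_max, n_neurons)
    return [[1 if rng.random() < r else 0 for r in rates] for _ in range(n_steps)]


def encode_mfcc_frame(mfcc, x_min, x_max, n_neurons=8, n_steps=20, seed=0):
    """Encode every coefficient of one MFCC frame and concatenate the
    populations, giving n_steps binary vectors of length len(mfcc) * n_neurons."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    trains = [encode_feature(c, x_min, x_max, n_neurons, n_steps, rng) for c in mfcc]
    return [[bit for train in trains for bit in train[t]] for t in range(n_steps)]


if __name__ == "__main__":
    frame = [0.1, -0.7, 0.9]  # hypothetical, already-normalised MFCC values
    spikes = encode_mfcc_frame(frame, -1.0, 1.0, n_neurons=6, n_steps=10)
    for row in spikes:
        print("".join(map(str, row)))
```

Each row printed is one time step of 0/1 inputs to the SNN. The neurons whose centres sit nearest a coefficient's value fire most often, so a single binary channel per neuron still carries the value, just spread across a population and over time.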

Tianjic uses different network types for visual and audio input (a CNN for vision, an SNN for audio). Akida can handle both.

They use 1-bit (binary) spike trains for audio, which is very efficient but not so hot on accuracy. Akida can take inputs from 1-bit up to 8-bit (256 values).
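To put numbers on the 1-bit vs 8-bit point: n bits gives 2^n levels, so 8 bits gives 256. The sketch below is plain uniform quantization of a value in [0, 1], just generic arithmetic; it is an assumption-level illustration and says nothing about how Akida or Tianjic actually quantize their inputs. The helper names are hypothetical.

```python
def quantize(x: float, bits: int) -> float:
    """Round x in [0, 1] to the nearest of 2**bits evenly spaced levels."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")
    steps = 2 ** bits - 1  # number of intervals between the 2**bits levels
    return round(x * steps) / steps


def max_error(bits: int, samples: int = 10_001) -> float:
    """Worst-case absolute rounding error over a dense grid of inputs in [0, 1]."""
    return max(abs(quantize(i / (samples - 1), bits) - i / (samples - 1))
               for i in range(samples))


for bits in (1, 2, 4, 8):
    print(f"{bits}-bit: {2 ** bits:3d} levels, max error ~ {max_error(bits):.4f}")
```

The worst-case error is half a step, 0.5 / (2^n - 1): 0.5 for 1-bit versus roughly 0.002 for 8-bit. That's the accuracy cost of a single binary sample, though a spike train can claw some of it back by spreading information over many time steps.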

Tianjic needs a separate FPGA for preprocessing input signals. Akida has built-in preprocessing.

So, competition? - Yes.

Worried? - No.