BRN - NASA

- Thread starter TechGirl

Deleted member 118

Guest

Description:

OUSD (R&E) MODERNIZATION PRIORITY: General Warfighting Requirements (GWR); Microelectronics; Quantum Science

TECHNOLOGY AREA(S): Electronics

OBJECTIVE: Develop a novel smart visual image recognition system with intrinsically ultralow power consumption and latency, and with physics-based security and privacy.

DESCRIPTION: Image-based recognition generally requires a complicated technology stack: lenses to form images, optical sensors for optical-to-electrical conversion, and computer chips to perform the necessary digital computation. This process is serial in nature and is therefore slow and burdened by high power consumption; processing and recognizing a single image can take milliseconds and require milliwatts of power. Once an image is digitized, it is also vulnerable to cyber-attacks, putting users' security and privacy at risk. Furthermore, as the images to be surveilled and reconnoitered grow more complex over time, existing digital technologies will soon face serious bottlenecks in energy efficiency, latency, and security, because they require a sequential chain of analog sensing, analog-to-digital conversion, and digital computing.

It is the focus of this STTR topic to explore a more promising way to mitigate the latency and power consumption of legacy digital image recognition: processing visual data in the optical domain at the edge. This technology shifts the paradigm of conventional digital image processing by using analog instead of digital computing, so that analog sensing and computing can be merged into a single piece of physical hardware. In this approach, the original images never need to be digitized as an intermediate pre-processing step. Instead, incident light is processed directly by a physical medium. Examples include image recognition [Ref 1] and signal processing [Ref 2] that use the physics of wave dynamics. For example, the smart image sensors in [Ref 1] have judiciously designed internal structures made of air bubbles. These bubbles scatter the incident light to perform deep-learning-based neuromorphic computing. Without any digital processing, this passive sensor can guide the optical field to different locations depending on the identity of the object. The visual information of the scene is never converted to a digitized image, yet the object can still be identified. These image sensors are extremely energy efficient (a fraction of a microwatt) because the computing is performed passively, without active use of energy. Combined with photovoltaic cells, such a sensor could in theory compute without any energy consumption, expending a small amount of energy only when an image is recognized and an electronic signal must be delivered across the optical-digital interface. It is also extremely fast, with very low latency, because the computing is done in the optical domain. The latency is set by the propagation time of light through the device, which is no more than a few hundred nanoseconds.
Therefore, its energy consumption and latency are projected to improve on those of conventional digital image processing and recognition by up to six orders of magnitude (i.e., up to a 1,000,000-fold improvement). Furthermore, it has intrinsic, physics-based security and privacy because image recognition exploits the coherent properties of light. When these standalone devices are connected to networks, cyber hackers cannot gain access to the original images, because such images are never created in the digital domain at any point in the computation. Hence, this low-energy, low-latency image sensor system is well suited to 24/7 persistent target-recognition surveillance of any intended target.
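The size of the projected gain can be checked with rough arithmetic. The sketch below plugs in round figures taken from the ranges quoted in the topic: 1 ms and 1 mW for a conventional pipeline ("milliseconds" and "milliwatts"), and an assumed 300 ns and 0.5 µW for the optical sensor, standing in for "hundreds of nanoseconds" and "a fraction of a microwatt". These are illustrative assumptions, not measured values.

```python
import math

# Illustrative round numbers (assumptions, not measurements).
# Conventional pipeline: "milliseconds" and "milliwatts" per image.
CONV_LATENCY_S = 1e-3
CONV_POWER_W = 1e-3
# Optical sensor: "hundreds of nanoseconds" and "a fraction of a microwatt".
OPT_LATENCY_S = 300e-9
OPT_POWER_W = 0.5e-6

latency_gain = CONV_LATENCY_S / OPT_LATENCY_S
power_gain = CONV_POWER_W / OPT_POWER_W
# Energy per image is power multiplied by the time spent on that image.
energy_gain = (CONV_POWER_W * CONV_LATENCY_S) / (OPT_POWER_W * OPT_LATENCY_S)

for name, gain in (("latency", latency_gain), ("power", power_gain), ("energy per image", energy_gain)):
    print(f"{name:>16}: {gain:>12,.0f}x  (~{math.log10(gain):.1f} orders of magnitude)")
```

Under these assumptions, latency and power each improve by roughly three orders of magnitude, while their product, the energy spent per image, improves by roughly six to seven.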

In summary, these novel image recognition sensors, which use wave physics to perform passive computing that exploits the coherent properties of light, are a game changer for future image recognition. They could improve target recognition and identification in degraded visual environments such as heavy rain, smoke, and fog. This smart image recognition sensor, with its analog computing capability, is an unparalleled alternative to traditional imaging sensors and digital computing systems when ultralow power dissipation and latency, together with the higher security and reliability provided by the analog domain, are the most critical performance metrics of the system.
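To make "guide the optical field to different locations depending on the identity of the object" concrete, the sketch below models the structured medium as a fixed, linear complex transmission matrix. This is an assumption made here for illustration, not a description of the device in [Ref 1]. Coherent light from each pixel adds as complex amplitudes at each output location, a photodetector there measures intensity |E|², and the brightest detector names the class. The matrix is random, so the resulting label is meaningless; in a real sensor the internal structure would be designed (trained) so that each object class focuses light onto its own detector. The names `make_medium`, `propagate`, and `classify` are hypothetical.

```python
import cmath
import random


def make_medium(n_detectors: int, n_pixels: int, seed: int = 0) -> list[list[complex]]:
    """Hypothetical stand-in for the structured medium: a fixed complex
    transmission matrix (rows = detector locations, columns = input pixels).
    Random here; a real device would be designed/trained."""
    rng = random.Random(seed)
    return [
        [cmath.rect(rng.random(), rng.uniform(0.0, 2.0 * cmath.pi)) for _ in range(n_pixels)]
        for _ in range(n_detectors)
    ]


def propagate(medium: list[list[complex]], field: list[complex]) -> list[complex]:
    """Coherent propagation: each output field is a weighted sum of input amplitudes."""
    return [sum(t * e for t, e in zip(row, field)) for row in medium]


def classify(medium: list[list[complex]], image: list[float]) -> tuple[int, list[float]]:
    """Return the index of the brightest detector and all detected intensities."""
    field = [complex(pixel) for pixel in image]  # monochrome amplitudes, uniform phase
    intensities = [abs(e) ** 2 for e in propagate(medium, field)]  # detectors see |E|^2
    best = max(range(len(intensities)), key=intensities.__getitem__)
    return best, intensities


medium = make_medium(n_detectors=10, n_pixels=16)
image = [1.0 if i % 5 == 0 else 0.0 for i in range(16)]
label, intensities = classify(medium, image)
print(label, [round(v, 3) for v in intensities])
```

Under incoherent illumination the cross terms between pixels average out, so each detector would instead see a sum of |t|² times each pixel's intensity rather than the squared magnitude of a coherent sum.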

PHASE I: Design, develop, and demonstrate the feasibility of an image recognition device based on a structured optical medium. The proof-of-concept demonstration should reach over 90% accuracy for arbitrary monochrome images under both coherent and incoherent illumination. The computing time should be less than 10 µs, the throughput should exceed 100,000 pictures per second, and the projected power consumption should be less than 1 mW. The Phase I effort will include prototype plans to be developed under Phase II.

PHASE II: Design image recognition devices for general images, including color images in the visible band or multiband images in the near-infrared (near-IR). Accuracy should reach 90% for objects in ImageNet. Throughput should exceed 10 million pictures per second, with a computation time of 100 ns and a power consumption below 0.1 mW. Experimentally demonstrate a working prototype that recognizes barcodes, handwritten digits, and other general symbolic characters. The device should be no larger than current digital-camera-based imaging systems.
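The Phase I and Phase II numbers can be cross-checked with simple arithmetic. The sketch below (the `PhaseSpec` class is hypothetical) treats the stated milliwatt limits as power, since mW is a unit of power, and derives two quantities the topic does not state directly: the throughput implied by processing images one at a time, and the energy budget per image at the required throughput.

```python
from dataclasses import dataclass


@dataclass
class PhaseSpec:
    name: str
    compute_time_s: float    # maximum computing time per image
    throughput_per_s: float  # minimum images per second
    power_w: float           # maximum power draw

    def max_throughput_if_serial(self) -> float:
        """Images per second achievable if images are processed one after another."""
        return 1.0 / self.compute_time_s

    def energy_per_image_j(self) -> float:
        """Energy budget per image: power divided by images per second."""
        return self.power_w / self.throughput_per_s


PHASES = [
    PhaseSpec("Phase I", compute_time_s=10e-6, throughput_per_s=100_000, power_w=1e-3),
    PhaseSpec("Phase II", compute_time_s=100e-9, throughput_per_s=10_000_000, power_w=0.1e-3),
]

for p in PHASES:
    print(f"{p.name}: serial limit {p.max_throughput_if_serial():,.0f} images/s, "
          f"energy per image {p.energy_per_image_j():.1e} J")
```

The stated figures are mutually consistent: 10 µs per image corresponds to 100,000 images per second, and 100 ns per image to 10 million per second. The implied budgets are about 10 nJ per image in Phase I and about 10 pJ per image in Phase II.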

PHASE III DUAL USE APPLICATIONS: Fabricate, test, and finalize the technology based on the design and demonstration results developed during Phase II, and transition the technology with finalized specifications for DoD applications in persistent target-recognition surveillance and in improved target recognition and identification in degraded visual environments such as heavy rain, smoke, and fog.

The commercial sector can also benefit from this crucial, game-changing technology in high-speed image and facial recognition. Commercialize the hardware and the deep-learning-based image recognition sensor for law enforcement, marine navigation, commercial aviation enhanced vision, medical applications, and industrial manufacturing processes.

Deleted member 118

Guest

5 ways AI can help NASA's Artemis Mission

In this article, we’ll explore a few ways in which AI can help NASA with its goal of returning to the Moon.

Deleted member 118

Guest

NASA wants a 100x upgrade for space computers

Does it hope to run Doom on the Moon or something?

Deleted member 118

Guest

Hmmmm

OUSD (R&E) MODERNIZATION PRIORITY: Biotechnology; Space; Nuclear

TECHNOLOGY AREA(S): Nuclear; Sensors; Space Platform

The technology within this topic is restricted under the International Traffic in Arms Regulation (ITAR), 22 CFR Parts 120-130, which controls the export and import of defense-related material and services, including export of sensitive technical data, or the Export Administration Regulation (EAR), 15 CFR Parts 730-774, which controls dual use items. Offerors must disclose any proposed use of foreign nationals (FNs), their country(ies) of origin, the type of visa or work permit possessed, and the statement of work (SOW) tasks intended for accomplishment by the FN(s) in accordance with the Announcement. Offerors are advised that foreign nationals proposed to perform on this topic may be restricted due to the technical data under US Export Control Laws. Please direct questions to the Air Force SBIR/STTR HelpDesk: usaf.team@afsbirsttr.us.

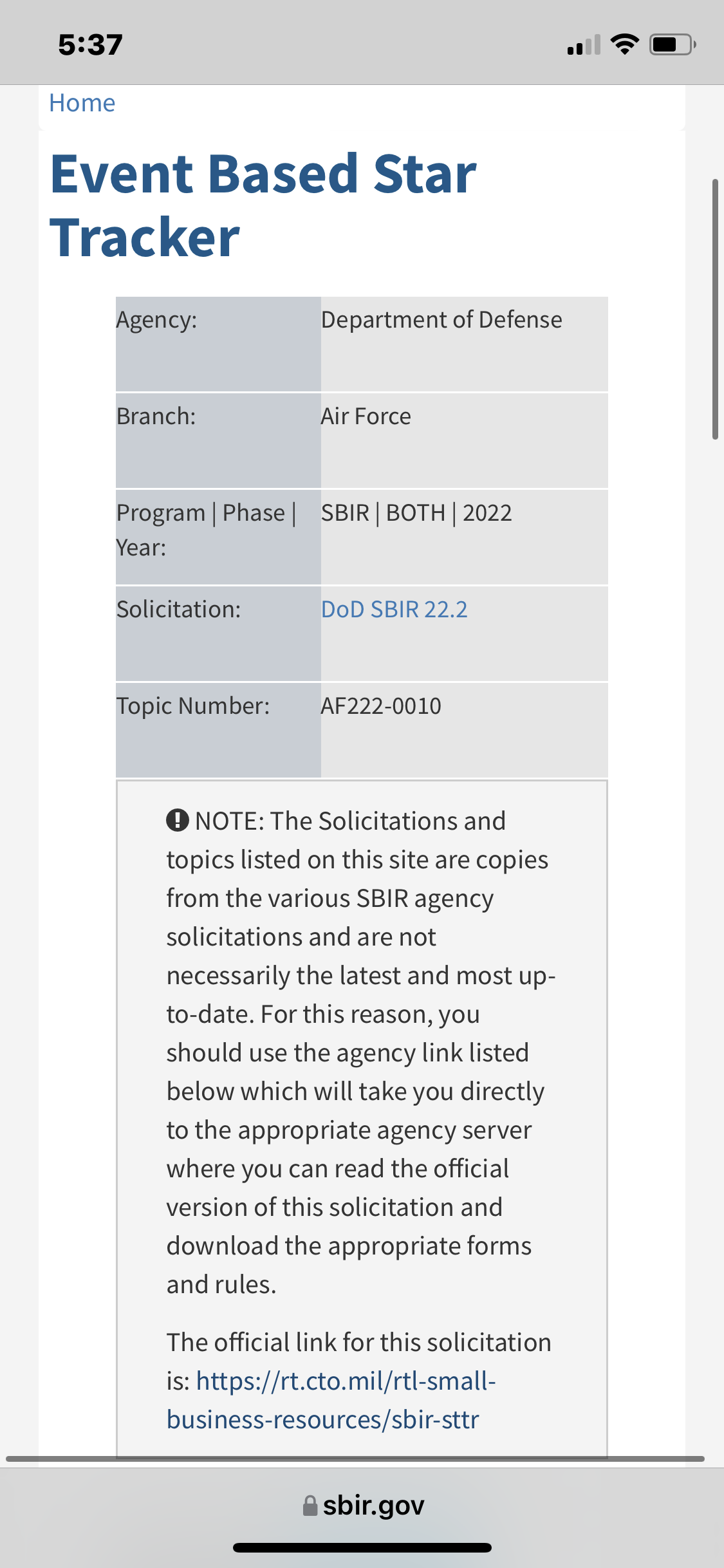

OBJECTIVE: Develop a low-SWaP (size, weight, and power), low-cost, high-angular-rate star tracker for satellite and nuclear enterprise applications.

DESCRIPTION: Existing star-tracking attitude sensors for small satellites and rocket applications are limited in their ability to operate above an angular rate of approximately 3-5 degrees/second, which renders them useless both for high-spin satellite (i.e., lost-in-space) applications and for spinning rocket bodies. Recent advances in neuromorphic (a.k.a. event-based) sensors have dramatically improved their overall performance [2], which allows them to be considered for these high-angular-rate applications [1]. In addition, the difference between a traditional frame-based camera and an event-based camera is simply a matter of how the sensor is read out, which should allow electronic switching between event-based (i.e., high angular rate) and frame (i.e., low angular rate) modes within the star tracker. Additional advantages inherent in an event-based sensor include high temporal resolution (µs) and high dynamic range (140 dB), which could allow multiple modes of continuous attitude determination (i.e., star tracker, sun sensor, earth limb sensor) within a single small, low-cost sensor package. All technology solutions that meet the topic objective are solicited in this call; however, neuromorphic sensors appear ideally suited to meet the technical objectives and should therefore be considered in the solution trade space. The scope of this effort is to first analyze the capability of event-based sensors to meet a high-angular-rate star tracker application, define the trade space for the technical solution against the satellite and nuclear enterprise requirements, develop a working prototype and test it against the requirements, and finally, in Phase III, move to initial production of a commercial star tracker unit.
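Why a 3-5 degrees/second limit matters can be seen from how far a star's image moves during one exposure. The sketch below assumes a hypothetical sensor with a 20° field of view spread across 1024 pixels and a 100 ms frame exposure (illustrative values, not from the topic), and computes the smear per frame and the time a star dwells on each pixel. Smear spreads the star's light over many pixels, which weakens detection and centroiding in a frame camera, while the per-pixel dwell time can be compared with the microsecond temporal resolution quoted for event-based sensors.

```python
def pixel_ifov_deg(fov_deg: float, pixels_across: int) -> float:
    """Angular size of one pixel, assuming the FOV is spread evenly across the array."""
    return fov_deg / pixels_across


def smear_pixels(rate_deg_s: float, exposure_s: float, ifov_deg: float) -> float:
    """Number of pixels a star image sweeps across during one exposure."""
    return rate_deg_s * exposure_s / ifov_deg


def pixel_dwell_s(rate_deg_s: float, ifov_deg: float) -> float:
    """Time a star image spends crossing a single pixel."""
    return ifov_deg / rate_deg_s


# Hypothetical sensor (illustrative values, not from the topic).
FOV_DEG = 20.0     # full field of view
PIXELS = 1024      # pixels across that field
EXPOSURE_S = 0.1   # frame-mode exposure time

ifov = pixel_ifov_deg(FOV_DEG, PIXELS)
for rate in (1.0, 5.0, 30.0):
    print(f"{rate:5.1f} deg/s: smear {smear_pixels(rate, EXPOSURE_S, ifov):6.1f} px per frame, "
          f"dwell {pixel_dwell_s(rate, ifov) * 1e6:8.1f} us per pixel")
```

With these assumptions, at 5 degrees/second a star smears across about 25 pixels per frame yet still dwells almost 4 ms on each pixel, thousands of times longer than a microsecond timestamp.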

PHASE I: Acquire an existing state-of-the-art COTS neuromorphic (a.k.a. event-based) sensor, or modify an existing star-tracking sensor as appropriate. Perform analysis and testing of the event-based sensor to determine its feasibility for high-angular-rate star tracking in satellite and nuclear enterprise applications.

PHASE II: Develop a prototype event-based high-angular-rate star tracker. Ideally, this prototype will be able to operate in event-based mode and to switch back and forth to standard (i.e., frame) mode. Explore and document the technical trade space (maximum angular rate, minimum detection threshold, associated algorithm development, etc.) and the potential military/commercial applications of the prototype device.
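The dual-mode idea rests on the description's point that frame and event cameras differ mainly in how the sensor is read out. The sketch below emulates that difference in software under a simplified model (an assumption, not any vendor's sensor): a pixel emits a timestamped event with a polarity whenever its log-intensity has changed by at least a threshold since its last event, and a hypothetical `read_out` switch returns either raw frames or events. Real event sensors generate events asynchronously in the pixel circuitry rather than by differencing frames.

```python
import math

# (timestamp in seconds, x, y, polarity: +1 brighter / -1 dimmer)
Event = tuple[float, int, int, int]


def frames_to_events(frames, timestamps, threshold=0.2, eps=1e-6) -> list[Event]:
    """Simplified event-camera emulation from intensity frames (lists of rows).

    A pixel fires at most one event per frame when its log-intensity differs
    from its stored reference by at least `threshold`; the reference then resets.
    """
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: list[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, v in enumerate(row):
                level = math.log(v + eps)
                delta = level - ref[y][x]
                if abs(delta) >= threshold:
                    events.append((t, x, y, 1 if delta > 0 else -1))
                    ref[y][x] = level
    return events


def read_out(frames, timestamps, mode="frame"):
    """Hypothetical mode switch: frames for low angular rates, events for high rates."""
    if mode == "frame":
        return frames
    if mode == "event":
        return frames_to_events(frames, timestamps)
    raise ValueError(f"unknown mode: {mode!r}")


# A single bright "star" moving one pixel to the right between two frames.
frames = [[[1.0, 0.0, 0.0]], [[0.0, 1.0, 0.0]]]
print(read_out(frames, [0.0, 1e-3], mode="event"))
# -> [(0.001, 0, 0, -1), (0.001, 1, 0, 1)]
```

Only the two pixels the star left and entered produce output; the unchanged pixel stays silent, which is why event readout scales with scene motion rather than with frame size.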

PHASE III DUAL USE APPLICATIONS: Phase III efforts will focus on transitioning the developed high-angular-rate attitude sensor technology to a working commercial and/or military solution. Potential applications include commercial and military satellites, as well as missiles.

Deleted member 118

Guest

REMOTE DETECTION TECHNOLOGIES | SBIR.gov

b. Networked Edge Sensing

Advances in neuromorphic engineering [1] and event-based sensing have demonstrated new paradigms for remote sensing science. This is in part due to increased computation and analysis onboard the sensor (or 'at the edge'). Additionally, these technologies can reduce size, weight, and power requirements.

Deleted member 118

Guest

Space-Based Sensing at the Tactical Edge | SBIR.gov

Deleted member 118

Guest

Visual Semantics, Inc. (dba Third Insight) and its non-profit partner, the Institute for Human and Machine Cognition (IHMC) based in Pensacola, FL, are pleased to submit the attached Phase I STTR proposal: “DARPA-USAF Integration: Collaboration and Secure Tasking for Multi-Agent Swarms”. This effort sits within the “Information Technology” focus area to address adversary effort disruption, autonomous systems teaming, reasoning and intelligence, and human and autonomy systems trust and interaction. Third Insight and IHMC have developed complementary approaches for DARPA and the USAF to understand how multiple UAVs may collaborate in a decentralized manner. Third Insight is working closely with AFRL, AFIMSC, and the National Guard under three ongoing USAF Phase II SBIRs to provide the DOD with an AI-driven software plug-in that imparts COTS Unmanned Aerial Vehicles (UAVs) with autonomous decision-making, navigation, and planning capabilities. While such capabilities are typically intended for GPS-denied and/or contested environments, the AI’s ability to build Situational Awareness at the edge is bearing its own fruit. Perhaps most exciting is our team’s realization that multiple UAVs can share their Situational Awareness and then collaboratively reason about it. Our non-profit partner, Dr. Matthew Johnson at IHMC, is currently leading two DARPA projects (CREATE and ASIST) that focus on different aspects of autonomous systems teaming and human-machine coordination. IHMC has developed high-fidelity, 3D simulated versions of “Capture-the-Flag” to study the impact of collaboration on successful teaming. In the simplest case, UAVs on each team select from a pre-defined “playlist” of behaviors to defend the team’s flag, take offensive action, etc., based on their perceived state in the world. The approach taken by DARPA is state-of-the-art, and we expect similar approaches to be taken by the two USAF Vanguard Programs Skyborg and Golden Horde. 
The proposed STTR seeks to integrate teaming concepts from both the DARPA and USAF projects. Project goals are to assess the feasibility of combining both approaches so agents can:

- Build and share representations of dynamic threats (both to the agent and to the group)
- Communicate with neighboring teammates to highlight tactical and strategic advantages
- Balance individual goals and behaviors against those of the group
- Capture new knowledge, best practices, and learnings and share them with the team

Machine-machine cooperation will be a key enabler for Skyborg and Golden Horde. Our STTR efforts will seek to identify customers inside AFSOC and AFIMSC, as well as the National Guard, which has a critical need for collaborative ISR and tracking in support of Disaster Response and Homeland Security. Lt Col Alex "Stoiky" Goldberg (TXANG), who is the TPOC for two of Third Insight's SBIR Phase IIs, has provided a letter of support on behalf of this effort to highlight the project's urgency.
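The "playlist" approach described above, in which each UAV picks a pre-defined behavior based on its perceived state, and the idea of teammates pooling their Situational Awareness can be illustrated with a toy rule-based sketch. Everything here (the `Report` fields, the three plays, and the fusion rule) is a hypothetical simplification for illustration, not the DARPA, IHMC, or Third Insight implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    """What one UAV perceives and shares (hypothetical fields)."""
    uav_id: str
    flag_threatened: bool  # sees an opponent near the team's flag
    enemy_flag_seen: bool  # sees the opponent's flag
    defending: bool        # currently running the defend play


PLAYLIST = ("defend_flag", "attack_enemy_flag", "patrol")


def fuse(reports: list[Report]) -> tuple[bool, bool, int]:
    """Pool shared situational awareness: any teammate's sighting counts for all."""
    threatened = any(r.flag_threatened for r in reports)
    enemy_seen = any(r.enemy_flag_seen for r in reports)
    defenders = sum(r.defending for r in reports)
    return threatened, enemy_seen, defenders


def select_play(reports: list[Report], min_defenders: int = 1) -> str:
    """Pick a play from PLAYLIST using the fused team picture."""
    threatened, enemy_seen, defenders = fuse(reports)
    if threatened and defenders < min_defenders:
        return "defend_flag"
    if enemy_seen:
        return "attack_enemy_flag"
    return "patrol"


team = [
    Report("uav-1", flag_threatened=True, enemy_flag_seen=False, defending=False),
    Report("uav-2", flag_threatened=False, enemy_flag_seen=True, defending=False),
]
print(select_play(team))  # -> defend_flag
```

Once uav-1 reports that it is defending, re-running `select_play` on the updated reports returns `attack_enemy_flag` for the next teammate, a simple form of balancing individual behavior against the group's needs.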

Deleted member 118

Guest

Been a while since I’ve found anything but a mention for brainchip

I know we are not power hungry, Rocket, but do we limit throughput?

If you don't have dreams, you can't have dreams come true!
