BRN - NASA

- Thread starter TechGirl

Deleted member 118

Guest

A few articles I’ve stumbled across, if anyone wants to browse through them.

stuart888

Regular

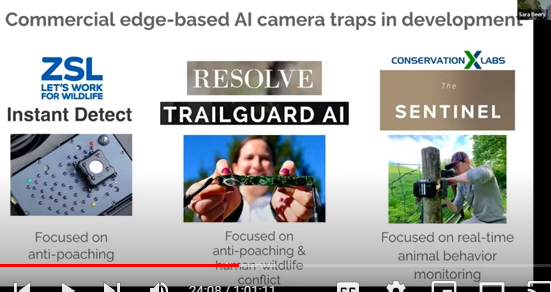

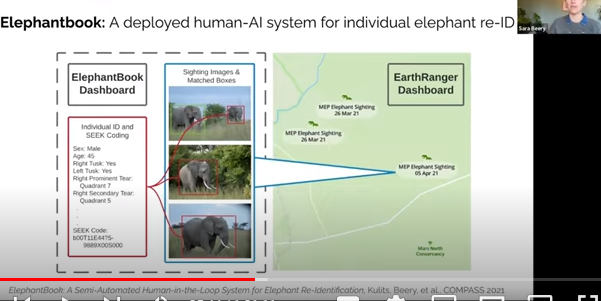

They cannot solve: Which Elephant is it?

BrainChip could give them a call. At about the 24-minute mark she talks about the solutions out there and their problems.

All the stuff BrainChip Akida SNN can do.

You should suggest that to Ms. Beery.

Deleted member 118

Guest

Future intelligent autonomous vehicles like the Orb/eVTOL/UAM (Electric Vertical Takeoff and Landing/Urban Air Mobility) vehicles will be able to “feel”, “think”, and “react” in real time by incorporating high-resolution state-sensing, awareness, and self-diagnostic capabilities. They will be able to sense and observe phenomena at unprecedented length and time scales, allowing for superior performance in complex dynamic environments, safer operation, reduced maintenance costs, and complete life-cycle management. Despite the importance of vehicle state sensing and awareness, however, the current state of the art is primitive as well as prohibitively heavy, expensive, and complex. Therefore, new Fly-by-Feel technologies are required for the next generation of intelligent aerospace structures that will use AI to sense environmental conditions and structural state, and effectively interpret the sensing data to achieve real-time state awareness and employ appropriate self-diagnostics under varying operational environments. Acellent is teaming with Stanford University, the USAF and The Boeing Company in this STTR project to develop a Fly-by-Feel (FBF) autonomous system that significantly enhances the agility of drones. The system integrates a nerve-like, stretchable, multimodal sensor network directly on the wings, together with AI-based state-sensing and health-diagnostic software, mimicking the biological sensory systems of birds. Once the network is integrated with the wings, the distributed sensor data will be collected and processed in real time through AI-based diagnostics to estimate flight state (lift, drag, flutter, angle of attack) and detect damage or failure of the component, so that the system can interface with the controller to significantly enhance the maneuverability and survivability of the vehicle. Phase 1 focused on manufacturing, integrating and testing the network in a laboratory environment. The Phase 2 program will mature the technology to TRL 5-6 by integrating it with a UAV and flight-testing the complete UAV in a flight regime using a wind tunnel.

Rise from the ashes

Regular

Can you break that down in layman's terms for me, mate?

Deleted member 118

Guest

Not a hope in hell. I just typed in TENN and I don’t even know what that is; I’ve just seen it posted since Akida 2000 has been discussed.

Rise from the ashes

Regular

All good, mate, my brain would probably overheat with an explanation anyway.

Cheers for posting.

Deleted member 118

Guest

Could this be NASA detailing Akida gen 2?

Maybe a tech-savvy person can confirm. @Diogenese

Multi-Scale Representation Learning | SBIR.gov

Deleted member 118

Guest

A new round of Phase 1 funding is available: it closes in 2 days and becomes available from June. There's plenty in there that could include Akida technology if you click through the focus areas.

SBIR 2023-I | SBIR.gov

Deleted member 118

Guest

Neuromorphic Sensor for Missile Defense | SBIR.gov

The proposal seeks to build and test a persistent wide field-of-view (FOV) infrared (IR) camera array for detecting and tracking dim objects. The optical design is based on the successful Tau PANDORA (visible) array systems, now in use for tracking low Earth orbit (LEO) and geostationary Earth orbit (GEO) satellites. In Phase I, Tau proposes to collect daytime shortwave IR (SWIR) imagery of satellites with a single camera and to analyze this data to demonstrate quantitatively the successful use of Convolutional Neural Networks (CNNs) to distinguish satellites from the clutter. Tau proposes to construct a prototype sensor array to demonstrate the feasibility of utilizing Neuromorphic Machine Learning (NML). The primary research in Phase II will be directed towards developing an effective training algorithm for Spiking Neural Networks (SNNs), an advanced neuromorphic network, while continuing on the present path of improving the Generation II neural networks previously investigated. Approved for Public Release | 22-MDA-11215 (27 Jul 22)
