BRN - NASA

- Thread starter TechGirl

- Start date

Deleted member 118

Guest

Looks like a use case for Prophesee in space

Deleted member 118

Guest

This is the last one I’ve had stored in my favorites for some time

Rise from the ashes

Regular

The next four people to begin the next chapter of spaceflight.

Rise from the ashes

Regular

Those two guys on the right look strangely similar?

Deadpool

Regular

I believe they maybe JK200SX

Rise from the ashes

Regular

I believe they maybe JK200SX

NASA discovers a massive asteroid heading towards the Earth

The asteroid is as wide as 21 buses parked end to end.

Deleted member 118

Guest

Took a while for an official acknowledgement from BRN, so I wonder what stage we are at.

Adapting SRT’s M1 Hardware Portal for Navy Facility Health Monitoring and Prioritization

Award Information

Agency: Department of Defense

Branch: Navy

Contract: N68335-21-C-0013

Agency Tracking Number: N202-099-1097

Amount: $239,831.00

Phase: Phase I

Program: SBIR

Solicitation Topic Code: N202-099

Solicitation Number: 20.2

Timeline

Solicitation Year: 2020

Award Year: 2021

Award Start Date (Proposal Award Date): 2020-10-07

Award End Date (Contract End Date): 2021-12-10

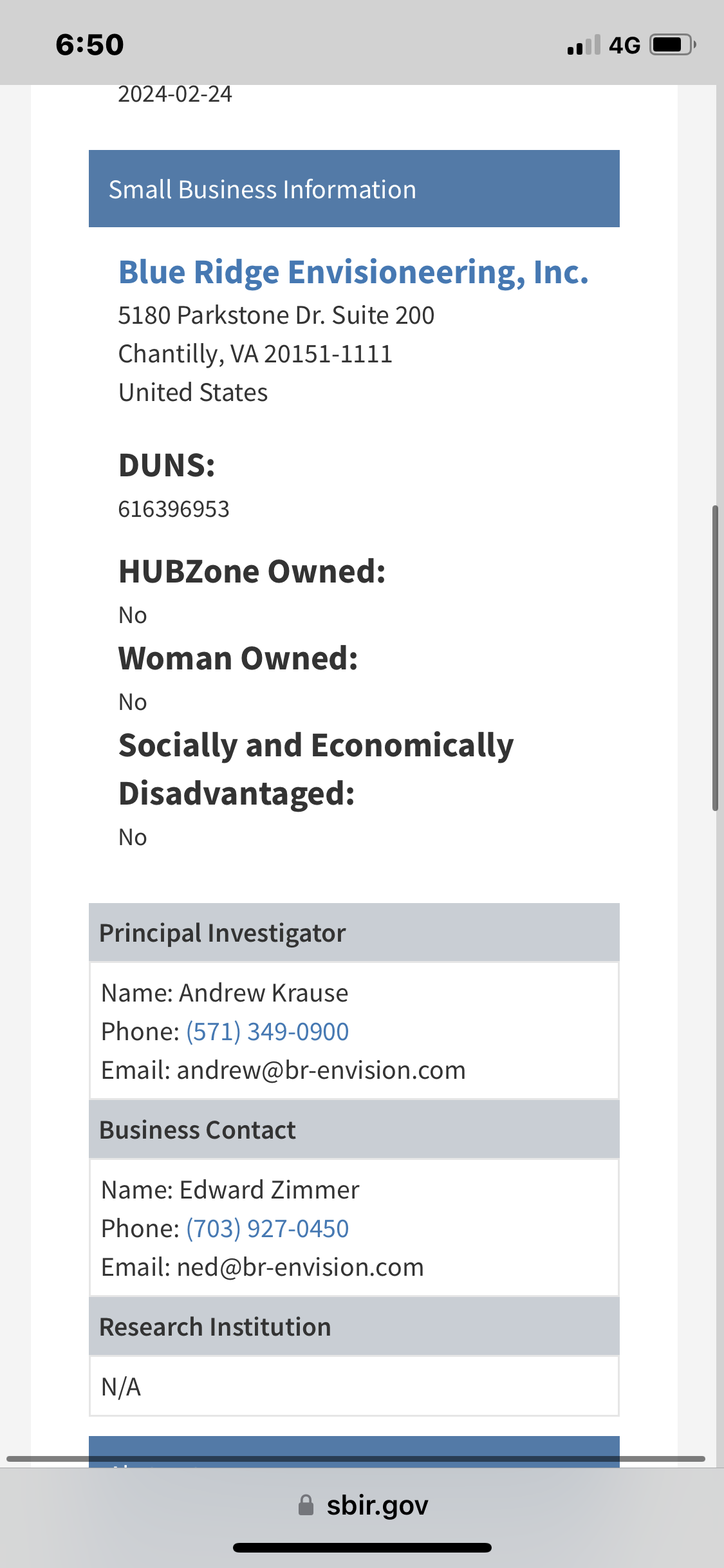

Small Business Information

Blue Ridge Envisioneering, Inc.

5180 Parkstone Dr. Suite 200

Chantilly, VA 20151-1111

United States

DUNS: 616396953

HUBZone Owned: No

Woman Owned: No

Socially and Economically Disadvantaged: No

Principal Investigator

Name: Jason Pualoa

Phone: (571) 349-0900

Email: andrew@br-envision.com

Business Contact

Name: Edward Zimmer

Phone: (703) 927-0450

Email: ned@br-envision.com

Research Institution

N/A

Abstract

Deep Neural Networks (DNN) have become a critical component of tactical applications, assisting the warfighter in interpreting and making decisions from vast and disparate sources of data. Whether image, signal or text data, remotely sensed or scraped from the web, cooperatively collected or intercepted, DNNs are the go-to tool for rapid processing of this information to extract relevant features and enable the automated execution of downstream applications. Deployment of DNNs in data centers, ground stations and other locations with extensive power infrastructure has become commonplace, but deployment at the edge, where the tactical user operates, is very difficult. Secure, reliable, high-bandwidth communications are a constrained resource for tactical applications, which limits the ability to route data collected at the edge back to a centralized processing location. Data must therefore be processed in real time at the point of ingest, which has its own challenges, as almost all DNNs are developed to run on power-hungry GPUs at wattages exceeding the practical capacity of solar power sources typically available at the edge. So what then is the future of advanced AI for the tactical end user where power and communications are in limited supply? Neuromorphic processors may provide the answer. Blue Ridge Envisioneering, Inc. (BRE) proposes the development of a systematic and methodical approach to deploying Deep Neural Network (DNN) architectures on neuromorphic hardware and evaluating their performance relative to a traditional GPU-based deployment. BRE will develop and document a process for benchmarking a DNN’s performance on a standard GPU, converting it to run on near-commercially available neuromorphic hardware, training and evaluating model accuracy for a range of available bit quantizations, characterizing the trade between power consumption and the various bit quantizations, and characterizing the trade between throughput/latency and the various bit quantizations.
This process will be demonstrated on a Deep Convolutional Neural Network trained to classify objects in SAR imagery from the Air Force Research Laboratory’s MSTAR open source dataset. The BrainChip Akida Event Domain Neural Processor development environment will be utilized for demonstration as it provides a simulated execution environment for running converted models under the discrete, low quantization constraints of neuromorphic hardware.
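
For anyone wondering what "evaluating model accuracy for a range of available bit quantizations" could look like in practice, here is a rough toy sketch. It is not BRE's process and does not use the BrainChip Akida toolchain: it assumes NumPy, a made-up linear classifier on synthetic data, and a hypothetical helper `quantize_uniform` that snaps weights onto a signed grid of a given bit width (2 bits or more).

```python
import numpy as np

def quantize_uniform(w, bits):
    """Symmetric uniform quantization of an array to a signed `bits`-bit grid (bits >= 2)."""
    levels = 2 ** (bits - 1) - 1           # e.g. 7 positive levels for 4 bits
    scale = np.max(np.abs(w)) / levels     # step size between adjacent grid values
    if scale == 0:
        return w.copy()
    return np.round(w / scale) * scale     # snap every weight to the nearest grid value

def accuracy(w, x, y):
    """Fraction of samples whose highest-scoring class matches the label."""
    return float(np.mean(np.argmax(x @ w, axis=1) == y))

def sweep(bit_widths=(8, 4, 2), seed=0):
    """Tabulate accuracy of a toy linear classifier for several weight bit widths."""
    rng = np.random.default_rng(seed)
    n_features, n_classes = 16, 3
    w_true = rng.normal(size=(n_features, n_classes))   # stands in for trained weights
    x = rng.normal(size=(600, n_features))              # synthetic inputs (assumption)
    y = np.argmax(x @ w_true, axis=1)                   # labels from the float model
    results = {"float": accuracy(w_true, x, y)}
    for bits in bit_widths:
        results[bits] = accuracy(quantize_uniform(w_true, bits), x, y)
    return results

if __name__ == "__main__":
    for name, acc in sweep().items():
        print(name, round(acc, 3))
```

A real study would repeat the same sweep while also logging power and latency on the target hardware, which this toy cannot show.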

Deleted member 118

Guest

Photonic AI Processor (PHASOR) | SBIR.gov

Photonic AI Processor (PHASOR)

Award Information

Agency:

Branch: Strategic Capabilities Office

Contract: HQ003422C0114

Agency Tracking Number: SCO2D-0088

Amount: $1,499,916.08

Phase:

Program: SBIR

Solicitation Topic Code: SCO213-003

Solicitation Number: 21.3

Timeline

Solicitation Year: 2021

Award Year: 2022

Award Start Date (Proposal Award Date): 2022-09-30

Award End Date (Contract End Date): 2024-09-29

Small Business Information

BASCOM HUNTER TECHNOLOGIES INC

5501 Bascom Way

Baton Rouge, LA 70809-3507

United States

DUNS: 964413657

HUBZone Owned: No

Woman Owned: No

Socially and Economically Disadvantaged: No

Principal Investigator

Name: Samuel Subbarao

Phone: (225) 283-2158

Email: subbarao@bascomhunter.com

Business Contact

Name: Stacey McCarthy

Phone: (269) 271-6966

Email: mccarthy@bascomhunter.com

Research Institution

N/A

Abstract

Bascom Hunter proposes a Photonic AI Processor (PHASOR) to deliver automatic target recognition (ATR) at a rate of 75,000 frames per second (FPS) using 4k resolution images. PHASOR will deliver these results in a 1U form factor using a combination of Neuromorphic Processors in the form of FPGAs and Photonic Integrated Circuits (PICs). In previous work, Bascom Hunter, partnering with Princeton University, demonstrated the performance of Matrix Vector Multiplication (MVM) using PICs. We were able to implement 2x10 photonic arrays. The microring resonator (MRR)-based design was able to achieve a matrix loading speed of 2 Gbps, an input vector loading speed of 24 Gbps and an energy consumption per multiply and accumulate (MAC) operation of 1 pJ/bit. With a 2x2 matrix, we have shown computational performance of 0.16 Tera Operations Per Second (TOPS) and a latency of 200 ps. The latency of 200 ps can be compared to Tensor Processing Unit (TPU) latency of 10 ms. In Phase II, we will be laying the groundwork for the PHASOR product. We will be utilizing the previously designed 2x10 photonic MVM to create a CNN for MNIST classification. Our proposed design can implement a 4x4 MVM kernel (utilizing two 2x10 PICs) with a modulated laser input at 10 GSps (Vector update speed) and an MRR update at 1 GSps (Matrix update speed). We expect the 4x4 kernel in Phase II to provide 150k FPS for the MNIST dataset when using a stride of 1.
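
A small fixed-size MVM unit can in principle serve a larger layer by tiling. The abstract does not say how PHASOR maps a network onto its 4x4 kernel, so the following is only a generic sketch under that assumption: `mvm_unit` is a hypothetical stand-in for one 4x4 hardware multiply, and `tiled_mvm` builds a bigger matrix-vector product out of such calls using NumPy.

```python
import numpy as np

TILE = 4  # size of the fixed MVM unit assumed in this sketch

def mvm_unit(block, vec):
    """Stand-in for one 4x4 hardware MVM: returns block @ vec."""
    assert block.shape == (TILE, TILE) and vec.shape == (TILE,)
    return block @ vec

def tiled_mvm(w, x):
    """Compute w @ x using only TILE x TILE matrix-vector products.

    Returns the result and the number of unit calls that were needed.
    """
    rows, cols = w.shape
    pad_r = (-rows) % TILE                      # zero rows needed to reach a multiple of TILE
    pad_c = (-cols) % TILE                      # zero columns needed likewise
    wp = np.pad(w, ((0, pad_r), (0, pad_c)))    # zero padding does not change the product
    xp = np.pad(x, (0, pad_c))
    out = np.zeros(wp.shape[0])
    calls = 0
    for i in range(0, wp.shape[0], TILE):
        for j in range(0, wp.shape[1], TILE):
            # accumulate the partial product contributed by this tile
            out[i:i + TILE] += mvm_unit(wp[i:i + TILE, j:j + TILE], xp[j:j + TILE])
            calls += 1
    return out[:rows], calls

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w, x = rng.normal(size=(10, 16)), rng.normal(size=16)
    y, calls = tiled_mvm(w, x)
    print(np.allclose(y, w @ x), calls)
```

In this toy, a 10x16 weight matrix takes 3 x 4 = 12 unit calls, which is why the per-call update rates quoted in the abstract matter so much for overall frame rate.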

Deleted member 118

Guest

TRACS is open, modular, makes decisions under uncertainty, and learns in a manner that the performance of the system is assured and improves over time. It deeply integrates natural language capabilities and one-shot learning from instructions, observations, and demonstrations

Rise from the ashes

Regular

Might want to dust off the hard hat tomorrow.

NASA discovers a massive asteroid heading towards the Earth

The asteroid is as wide as 21 buses parked end to end.

7news.com.au

Asteroid warning! NASA issues alert as 5 asteroids headed for Earth soon

As many as 5 asteroids are set to make close approaches to the Earth in the next few days, NASA has revealed. Know their speed, distance, and other details.

tech.hindustantimes.com

Deleted member 118

Guest

It was only a matter of time before more information became available via NASA

www.sbir.gov

Deep Neural Networks (DNN) have become a critical component of tactical applications, assisting the warfighter in interpreting and making decisions from vast and disparate sources of data. Whether image, signal or text data, remotely sensed or scraped from the web, cooperatively collected or intercepted, DNNs are the go-to tool for rapid processing of this information to extract relevant features and enable the automated execution of downstream applications. Deployment of DNNs in data centers, ground stations and other locations with extensive power infrastructure has become commonplace, but deployment at the edge, where the tactical user operates, is very difficult. Secure, reliable, high-bandwidth communications are a constrained resource for tactical applications, which limits the ability to route data collected at the edge back to a centralized processing location. Data must therefore be processed in real time at the point of ingest, which has its own challenges, as almost all DNNs are developed to run on power-hungry GPUs at wattages exceeding the practical capacity of solar power sources typically available at the edge. So what then is the future of advanced AI for the tactical end user where power and communications are in limited supply? Neuromorphic processors may provide the answer. Blue Ridge Envisioneering, Inc. (BRE) proposes the development of a systematic and methodical approach to deploying Deep Neural Network (DNN) architectures on neuromorphic hardware and evaluating their performance relative to a traditional GPU-based deployment. BRE will develop and document a process for benchmarking a DNN’s performance on a standard GPU, converting it to run on commercially available neuromorphic hardware, training and evaluating model accuracy for a range of available bit quantizations, characterizing the trade between power consumption and the various bit quantizations, and characterizing the trade between throughput/latency and the various bit quantizations.
This process will be demonstrated on a Deep Convolutional Neural Network trained to classify Electronic Warfare (EW) emitters in data collected by AFRL in 2011. The BrainChip Akida Event Domain Neural Processor development environment will be utilized for demonstration as it provides a simulated execution environment for running converted models under the discrete, low quantization constraints of neuromorphic hardware. In the option effort we pursue direct Spiking Neural Network (SNN) implementation and compare performance on the Akida hardware, and potentially other vendors’ hardware as well. We demonstrate the capability operating on real hardware in a relevant environment by conducting a data collection and demonstration activity at a U.S. test range with relevant EW emitters.

MENTAT | SBIR.gov

Frangipani

Top 20

Very interesting that NASA are pushing to develop (finalise) a VOC device, and I wonder if they are trying to utilise Akida in it.

Now that would be something else and maybe that’s where a few of our chips have gone.

Respiratory viral infection can sometimes lead to serious, possibly life-threatening complications. Highly contagious respiratory diseases cause significant disruptions to social and economic systems if spread is uncontrolled. Therefore, the rapid and precise identification of viral infection before entering crowded or vulnerable areas is essential for suppressing transmission effectively. Additionally, a device that is reconfigurable to address the next pandemic is highly desired. Screening for infection via exhaled breath analysis could provide a quick and simple method to find infectious carriers. This breath analyzer conceptualizes a rapid scanning device enabling the user to determine the presence of viral infection in an exhaled breath by analyzing volatile organic compound (VOC) concentrations. N5 Sensors will technically evaluate the feasibility of volatile organic compound (VOC) sensors for realizing Rapid Infection Screening via Exhalation (RISE) in a breathalyzer able to identify respiratory virus infected individuals, suitable for mass-testing scenarios. The proposed survey is expected to provide guidance on how to devise an integrated sensor system for initial screening at key checkpoints. The evaluation will be accomplished by performing a market survey and a research-level survey, and by consulting pioneering breath analyzer companies on 1) the breath analyzer platform, 2) VOC gas sensors, and 3) machine learning algorithms. The survey will progress through stepwise assessment, from initial database search, article screening and selection, to quality assessment and assortment. A comprehensive final report will be provided in which our findings and research strategy for Phase II are presented.

Triton Systems, Inc. will identify, design, and develop three noninvasive diagnostic tools to screen breath for the presence of communicable respiratory viral infections. The proposed screening technologies will be based on low-cost, high-throughput sensing modalities capable of detecting unique signatures of viral pathogens. After conducting a thorough and technical review of available targets, including volatile organic contaminants (VOCs), and suitable sensing platforms, Triton will develop sensor components into an integrated system with minimal form factor for use as personal health monitors or at travel checkpoints in highly trafficked areas. The proposed sensors will be easy to administer and widely deployable to maximize their benefit during seasonal epidemics and global pandemics involving communicable respiratory viral infections. Emphasis will be placed on an inexpensive platform with superlative sensitivity, selectivity, stability, and throughput. Wireless communication capabilities will enable the presentation and recording of the screening results in under five minutes at the site of use. Combined, the sensor components will be a widely deployable platform for detecting viral respiratory agents with pandemic potential, enhancing public health emergency readiness, and improving the transportation security infrastructure in the United States.

The COVID-19 pandemic is an example of human vulnerability to new communicable respiratory viral infections. Currently, most viral respiratory infections in humans are detected by sensing the presence of the pathogen's genetic material or proteins (i.e., antigens) in bodily fluids. Polymerase chain reaction (PCR)-based methods are the most commonly used to detect a pathogen's genetic material. Although samples can be collected outside the lab, it requires specialized laboratories and skilled technicians to collect samples, perform the tests, and analyze results. Furthermore, these tests require hours to days to process and provide results. Additionally, their sampling methods are generally invasive. Accelerating the development of new, near real time, inexpensive, user-friendly, non-invasive, accurate, and sensitive detection technologies will contribute significantly to the national and worldwide efforts to curb communicable respiratory viral infections, like the COVID-19 pandemic. During Phase I, Lynntech will use its extensive expertise in portable chemical and biochemical sensor development (including sensors for VOC detection) to select portable, fast, reliable sensors/detectors that could be used to detect VOC markers in exhaled breath and that are associated with infectious agents. During Phase II, Lynntech will develop prototypes of the candidate approach and conduct tests to demonstrate the device's capability in the detection of VOC markers of a viral infection.

Here is an update on NASA’s testing of VOC devices, in this case tailored to COVID-19 infections specifically - an abstract of a paper published on May 24, co-authored by eight researchers from the NASA Ames Research Center, two Stanford School of Medicine MDs and the founder and CEO of a Tennessee company called Variable, Inc., a repeat NASA subcontractor. Unfortunately accessing the full-text PDF requires either membership of the American Chemical Society or institutional log-in credentials - anyone?! Maybe the full-text PDF would give us a clue whether or not Akida was utilised. The section I put in bold print could also hint that it is still to come.

Anyway, the last sentence spells it out crystal clear that NASA is absolutely planning to commercialise those VOC devices here on earth and not only develop them for astronauts’ use in space:

“Additional clinical testing, design refinement, and a mass manufacturing approach are the main steps toward deploying this technology to rapidly screen for active infection in clinics and hospitals, public and commercial venues, or at home.”

Electronic Nose Development and Preliminary Human Breath Testing for Rapid, Non-Invasive COVID-19 Detection

- Jing Li*,

- Ami Hannon,

- George Yu,

- Luke A. Idziak,

- Adwait Sahasrabhojanee,

- Prasanthi Govindarajan,

- Yvonne A. Maldonado,

- Khoa Ngo,

- John P. Abdou,

- Nghia Mai, and

- Antonio J. Ricco*

Publication Date: May 24, 2023

https://doi.org/10.1021/acssensors.3c00367

© 2023 American Chemical Society

Abstract

We adapted an existing, spaceflight-proven, robust “electronic nose” (E-Nose) that uses an array of electrical resistivity-based nanosensors mimicking aspects of mammalian olfaction to conduct on-site, rapid screening for COVID-19 infection by measuring the pattern of sensor responses to volatile organic compounds (VOCs) in exhaled human breath. We built and tested multiple copies of a hand-held prototype E-Nose sensor system, composed of 64 chemically sensitive nanomaterial sensing elements tailored to COVID-19 VOC detection; data acquisition electronics; a smart tablet with software (App) for sensor control, data acquisition and display; and a sampling fixture to capture exhaled breath samples and deliver them to the sensor array inside the E-Nose. The sensing elements detect the combination of VOCs typical in breath at parts-per-billion (ppb) levels, with repeatability of 0.02% and reproducibility of 1.2%; the measurement electronics in the E-Nose provide measurement accuracy and signal-to-noise ratios comparable to benchtop instrumentation. Preliminary clinical testing at Stanford Medicine with 63 participants, their COVID-19-positive or COVID-19-negative status determined by concomitant RT-PCR, discriminated between these two categories of human breath with a 79% correct identification rate using “leave-one-out” training-and-analysis methods. Analyzing the E-Nose response in conjunction with body temperature and other non-invasive symptom screening using advanced machine learning methods, with a much larger database of responses from a wider swath of the population, is expected to provide more accurate on-the-spot answers. Additional clinical testing, design refinement, and a mass manufacturing approach are the main steps toward deploying this technology to rapidly screen for active infection in clinics and hospitals, public and commercial venues, or at home.
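
For readers unfamiliar with the “leave-one-out” method mentioned in the abstract: each participant is held out once, a model is trained on everyone else, and the held-out sample is then classified. The sketch below only illustrates that loop. It assumes NumPy, synthetic 64-channel data and a simple nearest-centroid classifier, none of which come from the paper, whose actual classifier and data are not described in the abstract.

```python
import numpy as np

def nearest_centroid_predict(train_x, train_y, sample):
    """Predict the class whose training mean is closest to `sample`."""
    classes = np.unique(train_y)
    centroids = np.array([train_x[train_y == c].mean(axis=0) for c in classes])
    dists = np.linalg.norm(centroids - sample, axis=1)
    return classes[np.argmin(dists)]

def leave_one_out_accuracy(x, y):
    """Hold out each sample once, train on the rest, and score the held-out prediction."""
    n = len(y)
    correct = 0
    for i in range(n):
        mask = np.arange(n) != i                 # everything except sample i
        pred = nearest_centroid_predict(x[mask], y[mask], x[i])
        correct += int(pred == y[i])
    return correct / n

def make_synthetic(n_per_class=30, n_sensors=64, shift=0.5, seed=0):
    """Synthetic stand-in for sensor-array responses (assumption, not real breath data)."""
    rng = np.random.default_rng(seed)
    neg = rng.normal(0.0, 1.0, size=(n_per_class, n_sensors))
    pos = rng.normal(shift, 1.0, size=(n_per_class, n_sensors))
    x = np.vstack([neg, pos])
    y = np.array([0] * n_per_class + [1] * n_per_class)
    return x, y

if __name__ == "__main__":
    x, y = make_synthetic()
    print(f"leave-one-out accuracy: {leave_one_out_accuracy(x, y):.2f}")
```

With only a few dozen participants, this kind of estimate gets the most out of a small dataset, which is probably why it was used for a preliminary study of 63 people.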

The NASA Ames Research Center seems to have had several subcontractors (see @Rocket577’s post, which I am replying to) for the development of VOC devices. The above paper, published on May 24, names yet another company, Variable, Inc., whose founder and CEO had worked for NASA before.

Variable Works with NASA to Rapidly Develop Covid-19 Detection Device

Photo: NASA/Ames Research Center/Dominic Hart

Variable, Inc. was awarded a NASA subcontract to prototype a NASA COVID-19 detection device called the E-Nose in 2020. Variable successfully produced the electronics and packaging for it along with a paired mobile app for the agency to test its nanosensor array technology developed by NASA’s Ames Research Center for the detection of volatile organic compounds (VOCs) in COVID-19 patients’ breath.

Variable is known around the world for their reliable and accurate color communication tools like the patented Color Muse® and Spectro 1 devices. The team has a specialized skill set in developing new sensor technology, building on a history of creating devices that could detect temperatures and complex gases among other components.

Variable founder and Chief Executive Officer, George Yu, Ph.D., got his start as a NASA subcontractor developing a device funded by the Department of Homeland Security that enabled smartphones to detect harmful gases using the agency’s nanosensor technology. The past project had a tight window for completion, and Yu earned a reputation for himself by rapidly producing a successful prototype. When NASA reached out to their workforce in April 2020 for innovative solutions to help in the fight against COVID-19, an Ames scientist, Dr. Jing Li, the principal investigator of the project at Ames, contacted Yu.

NASA was looking for a team that could build a device to detect a distinctive COVID-19 fingerprint from a list of VOCs in breath. When humans are sick, they release unique VOCs in their breath. This new device could run tests to determine what the VOC pattern from virus infection might be by using its specialized nanosensor array. Instruments with the capability to detect harmful gases are typically bulky and designed for laboratory use; however, the E-Nose is hand-held.

Scientists are still discovering new strains of COVID-19 and the outcome of this project is still largely unknown. However, Variable is committed to working with NASA and providing its resources and expertise to advance the agency’s nanosensor technology surrounding instant detection of the virus.

“We are incredibly honored to have this opportunity to work with NASA and feel strongly about this project because our nation and the world is in need,” said Yu. “If we are able to be of any help, we want to do our part with the abilities we can offer our country.”

About Variable, Inc.

Founded in 2012, Variable, Inc. is the global leader in mobile-paired spectrophotometers and colorimeters. Its patented technology enables users to accurately, affordably, and effortlessly communicate color within a wide variety of industries from paint and coatings to print and design to textiles and fashion and more. Variable produces devices such as the Color Muse, Color Muse SE, Spectro 1, and Spectro 1 Pro. Each is supported by Variable Cloud software and paired with custom apps for both iOS and Android. With these apps, users can scan and match to more than 500,000 different colors and items from house-hold brands around the world.

Deleted member 118

Guest

Neuromorphic Sensor for Missile Defense | SBIR.gov

The proposal seeks to build and test a persistent wide field-of-view (FOV) infrared (IR) camera array for detecting and tracking dim objects. The optical design is based on the successful Tau PANDORA (visible) array systems, now in use for tracking low earth orbit (LEO) and geostationary earth orbit (GEO) satellites. In Phase I, Tau proposed to collect daytime shortwave IR (SWIR) imagery of satellites with a single camera, and analyzed this data to demonstrate quantitatively the successful use of Convolutional Neural Networks (CNNs) to identify satellites distinct from the clutter. Tau proposes to construct a prototype sensor array to demonstrate the feasibility of utilizing Neuromorphic Machine Learning (NML). The primary research in Phase II will be directed towards developing an effective training algorithm for Spiking Neural Networks (SNN), an advanced Neuromorphic network, while we continue on the present path by improving the Generation II Neural Networks previously investigated.

Pom down under

Top 20

I like this one I have more to post

Department of Defense

Branch: Defense Advanced Research Projects Agency

Program | Phase | Year: SBIR | BOTH | 2023

Solicitation: 23.4 SBIR Annual BAA

Topic Number: HR0011SB20234-05

NOTE: The Solicitations and topics listed on this site are copies from the various SBIR agency solicitations and are not necessarily the latest and most up-to-date. For this reason, you should use the agency link listed below which will take you directly to the appropriate agency server where you can read the official version of this solicitation and download the appropriate forms and rules.

The official link for this solicitation is: https://www.defensesbirsttr.mil/

Release Date: November 15, 2022

Open Date: March 07, 2023

Application Due Date: January 16, 2024

Close Date: April 06, 2023

Description:

OUSD (R&E) CRITICAL TECHNOLOGY AREA(S): Advanced Materials, Microelectronics

OBJECTIVE: The objective of the Wearables at the Edge to Augment Readiness (WEAR) SBIR topic is to develop a secure and lightweight framework for real-time analysis of sensory data from wearables to monitor warfighter health and readiness at the edge.

DESCRIPTION: Wearable technology is now fundamental to all areas of the human ecosystem. The term wearable technology refers to small electronic and mobile devices, or computers with wireless communications capability that are incorporated into gadgets, accessories, or clothes, which can be worn on the human body [1]; for the purposes of this SBIR topic, it does not apply to invasive versions such as micro-chips or smart tattoos. Wearable technology can provide invaluable physiological and environmental data that can potentially be used to assess a warfighter’s physical/mental wellness and readiness. Modern edge devices, like smartphones and smart watches, are equipped with an ever-increasing set of sensors, such as accelerometers, magnetometers, gyroscopes, etc., that can continuously record users’ movements and motion [2]. The observed patterns can be an effective tool for seamless Human Activity Recognition, which is the process of identifying and labeling human activities by applying Artificial Intelligence (AI)/Machine Learning (ML) to sensor data generated by smart devices both in isolation and in combination [3, 4]. However, smartphone and wearable sensor signals are typically noisy and can lack context/causality due to inaccurate timestamps when the device sleeps, goes into low-power mode, or experiences high resource utilization. Thus, it can be challenging to fuse any of the various raw sensor data to achieve positive or negative assessment in wellness areas such as personal healthcare, injuries, fall detection, as well as monitoring functional/behavioral health.
For instance, sensor data can be processed into feature data related to sleep or different physical activities that potentially correlate to effects on an individual’s health [5, 6]. The objective of WEAR is to develop a secure and lightweight framework for real-time analysis of sensory data from wearables to monitor warfighter health and readiness at the edge. Importantly, WEAR will achieve this goal while consuming less than 5% of the wearable battery over 10 days, assuming an initial full battery charge. The battery consumption metric is of particular interest to WEAR as warfighters at the edge (e.g., expeditionary forces deployed to remote locations or Special Operations Forces units) may not be able to recharge wearable batteries due to mission constraints limiting access to power sources for re-charging. Equally important is the need for all processing to occur at the edge because of security concerns [8]. Existing commercial efforts require cloud and off-premises server resources to analyze sensor data.

PHASE I: This topic is soliciting Direct to Phase 2 (DP2) proposals only. Phase I feasibility will be demonstrated through evidence of: a completed proof of concept/principle or basic prototype system; definition and characterization of framework properties/technology capabilities desirable for both Department of Defense (DoD)/government and civilian/commercial use; and capability/performance comparisons with existing state-of-the-art technologies/methodologies (competing approaches). Entities interested in submitting a DP2 proposal must provide documentation to substantiate that the scientific/technical merit and feasibility described above has been achieved and also describe the potential commercial applications.
DP2 Phase I feasibility documentation should include: • technical reports describing results and conclusions of existing work, particularly regarding the commercial opportunity or DoD insertion opportunity, risks/mitigations, and technology assessments; • presentation materials and/or white papers; • technical papers; • test and measurement data; • prototype designs/models; • performance projections, goals, or results in different use cases; and, • documentation of related topics such as how the proposed WEAR solution can enable accurate and reliable analysis of sensory data at the edge. This collection of material will verify mastery of the required content for DP2 consideration. DP2 proposers must also demonstrate knowledge, skills, and abilities in AI/ML, data analytics, edge technologies, software development/engineering, and mobile security/privacy. For detailed information on DP2 requirements and eligibility, please refer to the DoD Broad Agency Announcement and the DARPA Instructions for this topic. PHASE II: The Personal Health Determinations (WEAR) SBIR topic seeks to develop a secure and lightweight framework that can perform real-time analysis of sensory data from wearables to monitor warfighter operational health and readiness at the edge, while consuming less than 5% of the wearable battery over 10 days, assuming an initial full battery charge (i.e., WEAR component overhead can be no more than 5% of the wearable battery over 10 days). One potential direction to achieve this goal is to leverage advances in low-power sensing at the chipset level present in modern mobile and wearable devices, including but not limited to “always-on sensing.” The primary interest is in commercial-off-the-shelf hardware paired with novel sensor drivers and algorithms developed to operate at low power. 
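
To get a feel for how tight "5% of the wearable battery over 10 days" is, here is a back-of-envelope calculation. The battery figures are hypothetical (a 300 mAh, 3.85 V smartwatch-class cell is an assumption, not something stated in the topic), and the function names are made up for illustration.

```python
def average_power_budget_mw(capacity_mah, voltage_v, fraction=0.05, days=10):
    """Average power (mW) that uses `fraction` of a full battery over `days`."""
    energy_mwh = capacity_mah * voltage_v      # mAh x V = mWh of stored energy
    budget_mwh = energy_mwh * fraction         # share allowed for the framework
    return budget_mwh / (days * 24)            # spread evenly over the whole period

def max_duty_cycle(budget_mw, active_mw):
    """Fraction of time a task drawing `active_mw` may run within the budget."""
    return min(1.0, budget_mw / active_mw)

if __name__ == "__main__":
    # Hypothetical smartwatch battery: 300 mAh at 3.85 V (assumed, not from the topic).
    budget = average_power_budget_mw(300, 3.85)
    print(f"average budget: {budget:.3f} mW")
    print(f"duty cycle at 50 mW active draw: {max_duty_cycle(budget, 50):.4%}")
```

Under those assumed numbers the framework gets roughly 0.24 mW on average, which helps explain the topic's interest in always-on, low-power sensing at the chipset level.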
A secondary objective is to offer modular application programming interfaces (APIs) to access sensor data and edge ML models/algorithms that can fit into the resource-constrained environments of commercial wearables. The end goal is the capability to monitor and accurately assess warfighter operational health and readiness by using the sensory information on the edge devices without transporting information outside of the wearable or smartphone devices. Any custom hardware or sensors are out of scope for this solicitation. DP2 proposals should: • describe a proposed framework design/architecture to achieve the above stated goals; • present a plan for maturation of the framework to a demonstrable prototype system; and • detail a test plan, complete with proposed metrics and scope, for verification and validation of the prototype system performance. Phase II will culminate in a prototype system demonstration using one or more compelling use cases consistent with commercial opportunities and/or insertion into a DARPA program (e.g., Warfighter Analytics using Smartphones for Health (WASH [7]), which seeks to use data collected from cellphone sensors to enable novel algorithms that conduct passive, continuous, real-time assessment of the warfighter). The Phase II Option period will further mature the technology for insertion into a DoD/Intelligence Community (IC) Acquisition Program, another Federal agency; or commercialization into the private sector. The below schedule of milestones and deliverables is provided to establish expectations and desired results/end products for the Phase II and Phase II Option period efforts. 
Schedule/Milestones/Deliverables: Proposers will execute the research and development (R&D) plan as described in the proposal, including the below: • Month 1: Phase I Kickoff briefing (with annotated slides) to the DARPA Program Manager (PM) including: any updates to the proposed plan and technical approach, risks/mitigations, schedule (inclusive of dependencies) with planned capability milestones and deliverables, proposed metrics, and plan for prototype demonstration/validation. • Months 4, 7, 10: Quarterly technical progress reports detailing technical progress to date, tasks accomplished, risks/mitigations, a technical plan for the remainder of Phase II (while this would normally report progress against the plan detailed in the proposal or presented at the Kickoff briefing, it is understood that scientific discoveries, competition, and regulatory changes may all have impacts on the planned work and DARPA must be made aware of any revisions that result), planned activities, trip summaries, and any potential issues or problem areas that require the attention of the DARPA PM. • Month 12: Interim technical progress briefing (with annotated slides) to the DARPA PM detailing progress made (including quantitative assessment of capabilities developed to date), tasks accomplished, risks/mitigations, planned activities, technical plan for the second half of Phase II, the demonstration/verification plan for the end of Phase II, trip summaries, and any potential issues or problem areas that require the attention of the DARPA PM. • Month 15, 18, 21: Quarterly technical progress reports detailing technical progress made, tasks accomplished, risks/mitigations, a technical plan for the remainder of Phase II (with necessary updates as in the parenthetical remark for Months 4, 7, and 10), planned activities, trip summaries, and any potential issues or problem areas that require the attention of the DARPA PM. 
• Month 24: Final technical progress briefing (with annotated slides) to the DARPA PM. Final architecture with documented details; a demonstration of the ability to perform real-time analysis of sensory data at the edge while consuming less than 5% of the wearable battery over 10 days; documented APIs; and any other necessary documentation (including, at a minimum, user manuals and a detailed system design document; and the commercialization plan). • Month 30 (Phase II Option period): Interim report of matured prototype performance against existing state-of-the-art technologies, documenting key technical gaps towards productization. • Month 36 (Phase II Option period): Final Phase II Option period technical progress briefing (with annotated slides) to the DARPA PM including prototype performance against existing state-of-the-art technologies, including quantitative metrics for battery consumption and assessment of monitoring/assessment capabilities to support determinations of warfighter health status. PHASE III DUAL USE APPLICATIONS: Phase III Dual use applications (Commercial DoD/Military): WEAR has potential applicability across DoD and commercial entities. For DoD, WEAR is extremely well-suited for continuous, low-cost, opportunistic monitoring of warfighter health in the field, where specialized equipment and medical experts are not necessarily available. WEAR has the same applicability for the commercial sector and has the potential to provide doctors and physicians with invaluable historical patient health data that can be correlated to their activities, environment, and physiological responses. Phase III refers to work that derives from, extends, or completes an effort made under prior SBIR funding agreements, but is funded by sources other than the SBIR Program. The Phase III work will be oriented towards transition and commercialization of the developed WEAR technologies. 
The proposer is required to obtain funding from either the private sector, a non-SBIR Government source, or both, to develop the prototype into a viable product or non-R&D service for sale in military or private sector markets. Primary WEAR support will be to national efforts to explore the ability to collect and fuse sensor data and apply ML algorithms at the edge to that data in a manner that does not drain device battery. Results of WEAR are intended to improve healthcare monitoring and assessment at the edge, across government and industry.

REFERENCES:

[1] Aleksandr Ometov, Viktoriia Shubina, Lucie Klus, Justyna Skibinska, Salwa Saafi, Pavel Pascacio, Laura Flueratoru, Darwin Quezada Gaibor, Nadezhda Chukhno, Olga Chukhno, Asad Ali, Asma Channa, Ekaterina Svertoka, Waleed Bin Qaim, Raúl Casanova-Marqués, Sylvia Holcer, Joaquín Torres-Sospedra, Sven Casteleyn, Giuseppe Ruggeri, Giuseppe Araniti, Radim Burget, Jiri Hosek, Elena Simona Lohan, A Survey on Wearable Technology: History, State-of-the-Art and Current Challenges, Computer Networks, Volume 193, 2021, 108074, ISSN 1389-1286, https://doi.org/10.1016/j.comnet.2021.108074. (Available at https://www.sciencedirect.com/science/article/pii/S1389128621001651)

[2] Paula Delgado-Santos, Giuseppe Stragapede, Ruben Tolosana, Richard Guest, Farzin Deravi, and Ruben Vera-Rodriguez. 2022. A Survey of Privacy Vulnerabilities of Mobile Device Sensors. ACM Comput. Surv. 54, 11s, Article 224 (January 2022), 30 pages. https://doi.org/10.1145/3510579. (Available at https://dl.acm.org/doi/pdf/10.1145/3510579)

[3] Straczkiewicz, M., James, P. & Onnela, JP. A systematic review of smartphone-based human activity recognition methods for health research. npj Digit. Med. 4, 148 (2021). https://doi.org/10.1038/s41746-021-00514-4. (Available at https://www.nature.com/articles/s41746-021-00514-4)

[4] E. Ramanujam, T. Perumal and S. Padmavathi, "Human Activity Recognition With Smartphone and Wearable Sensors Using Deep Learning Techniques: A Review," in IEEE Sensors Journal, vol. 21, no. 12, pp. 13029-13040, 15 June 2021, doi: 10.1109/JSEN.2021.3069927. (Available at https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9389739)

[5] Jun-Ki Min, Afsaneh Doryab, Jason Wiese, Shahriyar Amini, John Zimmerman, and Jason I. Hong. 2014. Toss 'n' turn: smartphone as sleep and sleep quality detector. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '14). Association for Computing Machinery, New York, NY, USA, 477–486. https://doi.org/10.1145/2556288.2557220 (Available at https://dl.acm.org/doi/pdf/10.1145/2556288.2557220)

[6] Sardar, A. W., Ullah, F., Bacha, J., Khan, J., Ali, F., & Lee, S. (2022). Mobile sensors based platform of Human Physical Activities Recognition for COVID-19 spread minimization. Computers in biology and medicine, 146, 105662. https://doi.org/10.1016/j.compbiomed.2022.105662. (Available at https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9137241/pdf/main.pdf)

[7] Defense Advanced Research Projects Agency. (2017, May 8). Warfighter Analytics using Smartphones for Health (WASH) Broad Agency Announcement HR001117S0032. (Available at https://cpb-us-w2.wpmucdn.com/wp.wpi.edu/dist/d/274/files/2017/05/DODBAA-SMARTPHONES.pdf)

[8] Fitness tracking app Strava gives away location of secret US army bases. https://www.theguardian.com/world/2...p-gives-away-location-of-secret-us-army-bases

KEYWORDS: Wearable Technology, Health Monitoring, Health Assessment, Data Analytics, Edge Technology, Activity Recognition, Machine Learning
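
For a rough sense of scale on the battery target in the solicitation text above (less than 5% of the wearable battery over 10 days), here is a minimal sketch of the arithmetic. The battery capacities are illustrative assumptions, not figures from the solicitation, and the function name is made up for this example.

```python
def average_current_budget_ua(capacity_mah: float,
                              fraction: float = 0.05,
                              days: float = 10.0) -> float:
    """Average current draw (in microamps) that would use `fraction`
    of a battery of `capacity_mah` over `days` of continuous operation."""
    hours = days * 24.0                  # 10 days -> 240 hours
    budget_mah = capacity_mah * fraction # charge available to the framework
    return budget_mah / hours * 1000.0   # mA -> microamps


if __name__ == "__main__":
    # Hypothetical capacities, chosen only to illustrate the scale.
    for capacity in (300.0, 450.0):
        budget = average_current_budget_ua(capacity)
        print(f"{capacity:.0f} mAh battery: about {budget:.2f} uA average budget")
```

With an assumed 300 mAh battery, 5% is 15 mAh, and spreading that over 240 hours gives an average draw of about 62.5 µA. That is a tight budget for continuous sensing plus ML inference, which fits with the topic pointing at low-power, "always-on" sensing at the chipset level as one potential direction.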

equanimous

Norse clairvoyant shapeshifter goddess

Thanks Rocket, I like this one. I have more to post.

Wearables at the Edge to Augment Readiness (WEAR)

Agency:

Department of Defense

Branch:

Defense Advanced Research Projects Agency

Program | Phase | Year:

SBIR | BOTH | 2023

Solicitation:

23.4 SBIR Annual BAA

Topic Number:

HR0011SB20234-05

NOTE: The Solicitations and topics listed on this site are copies from the various SBIR agency solicitations and are not necessarily the latest and most up-to-date. For this reason, you should use the agency link listed below which will take you directly to the appropriate agency server where you can read the official version of this solicitation and download the appropriate forms and rules.

The official link for this solicitation is: https://www.defensesbirsttr.mil/

Release Date:

November 15, 2022

Open Date:

March 07, 2023

Application Due Date:

January 16, 2024

Close Date:

April 06, 2023

Description:

OUSD (R&E) CRITICAL TECHNOLOGY AREA(S): Advanced Materials, Microelectronics OBJECTIVE: The objective of the Wearables at the Edge to Augment Readiness (WEAR) SBIR topic is to develop a secure and lightweight framework for real-time analysis of sensory data from wearables to monitor warfighter health and readiness at the edge. DESCRIPTION: Wearable technology is now fundamental to all areas of the human ecosystem. The term wearable technology refers to small electronic and mobile devices, or computers with wireless communications capability that are incorporated into gadgets, accessories, or clothes, which can be worn on the human body [1]; for the purposes of this SBIR topic, it does not apply to invasive versions such as micro-chips or smart tattoos. Wearable technology can provide invaluable physiological and environmental data that can potentially be used to assess a warfighter’s physical/mental wellness and readiness. Modern edge devices, like smartphones and smart watches, are equipped with an ever-increasing set of sensors, such as accelerometers, magnetometers, gyroscopes, etc., that can continuously record users’ movements and motion [2]. The observed patterns can be an effective tool for seamless Human Activity Recognition, which is the process of identifying and labeling human activities by applying Artificial Intelligence (AI)/Machine Learning (ML) to sensor data generated by smart devices both in isolation and in combination [3, 4]. However, smartphone and wearable sensor signals are typically noisy and can lack context/causality due to inaccurate timestamps when the device sleeps, goes into low-power mode, or experiences high resource utilization. Thus, it can be challenging to fuse any of the various raw sensor data to achieve positive or negative assessment in wellness areas such as personal healthcare, injuries, fall detection, as well as monitoring functional/behavioral health.
For instance, sensor data can be processed into feature data related to sleep or different physical activities that potentially correlate to effects on an individual’s health [5, 6]. The objective of WEAR is to develop a secure and lightweight framework for real-time analysis of sensory data from wearables to monitor warfighter health and readiness at the edge. Importantly, WEAR will achieve this goal while consuming less than 5% of the wearable battery over 10 days, assuming an initial full battery charge. The battery consumption metric is of particular interest to WEAR as warfighters at the edge (e.g., expeditionary forces deployed to remote locations or Special Operations Forces units) may not be able to recharge wearable batteries due to mission constraints limiting access to power sources for re-charging. Equally important is the need for all processing to occur at the edge because of security concerns [8]. Existing commercial efforts require cloud and off-premises server resources to analyze sensor data. PHASE I: This topic is soliciting Direct to Phase 2 (DP2) proposals only. Phase I feasibility will be demonstrated through evidence of: a completed proof of concept/principle or basic prototype system; definition and characterization of framework properties/technology capabilities desirable for both Department of Defense (DoD)/government and civilian/commercial use; and capability/performance comparisons with existing state-of-the-art technologies/methodologies (competing approaches). Entities interested in submitting a DP2 proposal must provide documentation to substantiate that the scientific/technical merit and feasibility described above have been achieved and also describe the potential commercial applications.
DP2 Phase I feasibility documentation should include: • technical reports describing results and conclusions of existing work, particularly regarding the commercial opportunity or DoD insertion opportunity, risks/mitigations, and technology assessments; • presentation materials and/or white papers; • technical papers; • test and measurement data; • prototype designs/models; • performance projections, goals, or results in different use cases; and, • documentation of related topics such as how the proposed WEAR solution can enable accurate and reliable analysis of sensory data at the edge. This collection of material will verify mastery of the required content for DP2 consideration. DP2 proposers must also demonstrate knowledge, skills, and abilities in AI/ML, data analytics, edge technologies, software development/engineering, and mobile security/privacy. For detailed information on DP2 requirements and eligibility, please refer to the DoD Broad Agency Announcement and the DARPA Instructions for this topic. PHASE II: The Personal Health Determinations (WEAR) SBIR topic seeks to develop a secure and lightweight framework that can perform real-time analysis of sensory data from wearables to monitor warfighter operational health and readiness at the edge, while consuming less than 5% of the wearable battery over 10 days, assuming an initial full battery charge (i.e., WEAR component overhead can be no more than 5% of the wearable battery over 10 days). One potential direction to achieve this goal is to leverage advances in low-power sensing at the chipset level present in modern mobile and wearable devices, including but not limited to “always-on sensing.” The primary interest is in commercial-off-the-shelf hardware paired with novel sensor drivers and algorithms developed to operate at low power. 
A secondary objective is to offer modular application programming interfaces (APIs) to access sensor data and edge ML models/algorithms that can fit into the resource-constrained environments of commercial wearables. The end goal is the capability to monitor and accurately assess warfighter operational health and readiness by using the sensory information on the edge devices without transporting information outside of the wearable or smartphone devices. Any custom hardware or sensors are out of scope for this solicitation. DP2 proposals should: • describe a proposed framework design/architecture to achieve the above stated goals; • present a plan for maturation of the framework to a demonstrable prototype system; and • detail a test plan, complete with proposed metrics and scope, for verification and validation of the prototype system performance. Phase II will culminate in a prototype system demonstration using one or more compelling use cases consistent with commercial opportunities and/or insertion into a DARPA program (e.g., Warfighter Analytics using Smartphones for Health (WASH [7]), which seeks to use data collected from cellphone sensors to enable novel algorithms that conduct passive, continuous, real-time assessment of the warfighter). The Phase II Option period will further mature the technology for insertion into a DoD/Intelligence Community (IC) Acquisition Program, another Federal agency; or commercialization into the private sector. The below schedule of milestones and deliverables is provided to establish expectations and desired results/end products for the Phase II and Phase II Option period efforts. 
Schedule/Milestones/Deliverables: Proposers will execute the research and development (R&D) plan as described in the proposal, including the below:

- Month 1: Phase I Kickoff briefing (with annotated slides) to the DARPA Program Manager (PM) including: any updates to the proposed plan and technical approach, risks/mitigations, schedule (inclusive of dependencies) with planned capability milestones and deliverables, proposed metrics, and plan for prototype demonstration/validation.
- Months 4, 7, 10: Quarterly technical progress reports detailing technical progress to date, tasks accomplished, risks/mitigations, a technical plan for the remainder of Phase II (while this would normally report progress against the plan detailed in the proposal or presented at the Kickoff briefing, it is understood that scientific discoveries, competition, and regulatory changes may all have impacts on the planned work and DARPA must be made aware of any revisions that result), planned activities, trip summaries, and any potential issues or problem areas that require the attention of the DARPA PM.
- Month 12: Interim technical progress briefing (with annotated slides) to the DARPA PM detailing progress made (including quantitative assessment of capabilities developed to date), tasks accomplished, risks/mitigations, planned activities, technical plan for the second half of Phase II, the demonstration/verification plan for the end of Phase II, trip summaries, and any potential issues or problem areas that require the attention of the DARPA PM.
- Month 15, 18, 21: Quarterly technical progress reports detailing technical progress made, tasks accomplished, risks/mitigations, a technical plan for the remainder of Phase II (with necessary updates as in the parenthetical remark for Months 4, 7, and 10), planned activities, trip summaries, and any potential issues or problem areas that require the attention of the DARPA PM.
- Month 24: Final technical progress briefing (with annotated slides) to the DARPA PM. Final architecture with documented details; a demonstration of the ability to perform real-time analysis of sensory data at the edge while consuming less than 5% of the wearable battery over 10 days; documented APIs; and any other necessary documentation (including, at a minimum, user manuals and a detailed system design document; and the commercialization plan).
- Month 30 (Phase II Option period): Interim report of matured prototype performance against existing state-of-the-art technologies, documenting key technical gaps towards productization.
- Month 36 (Phase II Option period): Final Phase II Option period technical progress briefing (with annotated slides) to the DARPA PM including prototype performance against existing state-of-the-art technologies, including quantitative metrics for battery consumption and assessment of monitoring/assessment capabilities to support determinations of warfighter health status.

PHASE III DUAL USE APPLICATIONS: Phase III Dual use applications (Commercial DoD/Military): WEAR has potential applicability across DoD and commercial entities. For DoD, WEAR is extremely well-suited for continuous, low-cost, opportunistic monitoring of warfighter health in the field, where specialized equipment and medical experts are not necessarily available. WEAR has the same applicability for the commercial sector and has the potential to provide doctors and physicians with invaluable historical patient health data that can be correlated to their activities, environment, and physiological responses. Phase III refers to work that derives from, extends, or completes an effort made under prior SBIR funding agreements, but is funded by sources other than the SBIR Program. The Phase III work will be oriented towards transition and commercialization of the developed WEAR technologies.
The proposer is required to obtain funding from either the private sector, a non-SBIR Government source, or both, to develop the prototype into a viable product or non-R&D service for sale in military or private sector markets. Primary WEAR support will be to national efforts to explore the ability to collect and fuse sensor data and apply ML algorithms at the edge to that data in a manner that does not drain device battery. Results of WEAR are intended to improve healthcare monitoring and assessment at the edge, across government and industry.

REFERENCES:

[1] Aleksandr Ometov, Viktoriia Shubina, Lucie Klus, Justyna Skibinska, Salwa Saafi, Pavel Pascacio, Laura Flueratoru, Darwin Quezada Gaibor, Nadezhda Chukhno, Olga Chukhno, Asad Ali, Asma Channa, Ekaterina Svertoka, Waleed Bin Qaim, Raúl Casanova-Marqués, Sylvia Holcer, Joaquín Torres-Sospedra, Sven Casteleyn, Giuseppe Ruggeri, Giuseppe Araniti, Radim Burget, Jiri Hosek, Elena Simona Lohan, A Survey on Wearable Technology: History, State-of-the-Art and Current Challenges, Computer Networks, Volume 193, 2021, 108074, ISSN 1389-1286, https://doi.org/10.1016/j.comnet.2021.108074. (Available at https://www.sciencedirect.com/science/article/pii/S1389128621001651)

[2] Paula Delgado-Santos, Giuseppe Stragapede, Ruben Tolosana, Richard Guest, Farzin Deravi, and Ruben Vera-Rodriguez. 2022. A Survey of Privacy Vulnerabilities of Mobile Device Sensors. ACM Comput. Surv. 54, 11s, Article 224 (January 2022), 30 pages. https://doi.org/10.1145/3510579. (Available at https://dl.acm.org/doi/pdf/10.1145/3510579)

[3] Straczkiewicz, M., James, P. & Onnela, JP. A systematic review of smartphone-based human activity recognition methods for health research. npj Digit. Med. 4, 148 (2021). https://doi.org/10.1038/s41746-021-00514-4. (Available at https://www.nature.com/articles/s41746-021-00514-4)

[4] E. Ramanujam, T. Perumal and S. Padmavathi, "Human Activity Recognition With Smartphone and Wearable Sensors Using Deep Learning Techniques: A Review," in IEEE Sensors Journal, vol. 21, no. 12, pp. 13029-13040, 15 June 2021, doi: 10.1109/JSEN.2021.3069927. (Available at https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9389739)

[5] Jun-Ki Min, Afsaneh Doryab, Jason Wiese, Shahriyar Amini, John Zimmerman, and Jason I. Hong. 2014. Toss 'n' turn: smartphone as sleep and sleep quality detector. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '14). Association for Computing Machinery, New York, NY, USA, 477–486. https://doi.org/10.1145/2556288.2557220 (Available at https://dl.acm.org/doi/pdf/10.1145/2556288.2557220)

[6] Sardar, A. W., Ullah, F., Bacha, J., Khan, J., Ali, F., & Lee, S. (2022). Mobile sensors based platform of Human Physical Activities Recognition for COVID-19 spread minimization. Computers in biology and medicine, 146, 105662. https://doi.org/10.1016/j.compbiomed.2022.105662. (Available at https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9137241/pdf/main.pdf)

[7] Defense Advanced Research Projects Agency. (2017, May 8). Warfighter Analytics using Smartphones for Health (WASH) Broad Agency Announcement HR001117S0032. (Available at https://cpb-us-w2.wpmucdn.com/wp.wpi.edu/dist/d/274/files/2017/05/DODBAA-SMARTPHONES.pdf)

[8] Fitness tracking app Strava gives away location of secret US army bases. https://www.theguardian.com/world/2...p-gives-away-location-of-secret-us-army-bases

KEYWORDS: Wearable Technology, Health Monitoring, Health Assessment, Data Analytics, Edge Technology, Activity Recognition, Machine Learning
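To put the Phase II battery requirement quoted above into numbers, here is a rough back-of-the-envelope sketch in Python. Only the 5% and 10-day figures come from the solicitation text; the 300 mAh / 3.85 V battery and the function name are made-up values for illustration, not anything DARPA specifies.

```python
def average_power_budget_mw(capacity_mwh: float,
                            fraction: float = 0.05,
                            days: float = 10.0) -> float:
    """Average power (mW) that uses `fraction` of a full battery over `days`."""
    if capacity_mwh <= 0 or days <= 0 or not 0 < fraction <= 1:
        raise ValueError("capacity and days must be positive, fraction in (0, 1]")
    hours = days * 24.0
    return capacity_mwh * fraction / hours


# Hypothetical smartwatch battery: 300 mAh at a nominal 3.85 V (assumption).
capacity_mwh = 300 * 3.85
budget_mw = average_power_budget_mw(capacity_mwh)
print(f"Allowed average draw: {budget_mw:.3f} mW")
```

For a battery of that assumed size, the whole WEAR component would have to average roughly a quarter of a milliwatt, which gives a feel for why the topic points at always-on, low-power sensing.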

Good luck :-)

BOB Bank of Brainchip

Pom down under

Top 20

New? As far as I know, they worked on the rad hardening in 2020, and it was one of our first announcements from NASA.

Scroll down as this was January 2022

www.sbir.gov

Silicon Space Technology Corporation

1501 South MoPac Expressway, Suite 350

Austin, TX 78746-6966

United States

Hubzone Owned:

No

Socially and Economically Disadvantaged:

No

Woman Owned:

No

Duns:

147671957

Principal Investigator

Name: Patrice Parris

Phone: (602) 463-5757

Email: pparris@voragotech.com

Business Contact

Name: Garry Nash

Phone: (631) 559-1550

Email: gnash@siliconspacetech.com

Research Institution

N/A

Abstract

The goal of this project is the creation of a radiation-hardened Spiking Neural Network (SNN) SoC based on the BrainChip Akida Neuron Fabric IP. Akida is a member of a small set of existing SNN architectures structured to more closely emulate computation in a human brain. The rationale for using an SNN for Edge AI computing is its efficiency. The neuromorphic approach used in the Akida architecture takes fewer MACs per operation, since its event-based model creates and exploits sparsity in both weights and activations. BrainChip’s studies have shown that, for the small models studied, the sparsity on Akida averaged 52.3%. For medium and large image recognition models, the Akida model sparsity averaged 53.8%. This means that the Akida model needs roughly half the MACs to solve the same problem. In addition, Akida reduces memory consumption by quantizing and compressing network parameters. This helps to reduce power consumption and die size while maintaining performance.

The Akida fabric is built of a collection of Neural Processing Units (NPUs) which are connected by and communicate over a mesh network. This allows the layers of a neural network to be distributed across NPUs. The NPUs are arranged in groups of 4 called Nodes. Each NPU has 8 Compute Engines and 100KB of local SRAM. The SRAM stores internal events, network parameters and activations. Having SRAM local to the nodes saves energy since the node data is not being constantly moved around on the network. Packets on the mesh network are filtered locally so that each NPU only has to process packets addressed to it. The Compute Engines generate Output Events which are packetized and placed on the mesh network. Communication over the network is routed without intervention from the supervisory CPU, preventing the CPU from limiting the communication bandwidth and reducing the energy needed to transfer data between nodes.
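The "roughly half the MACs" claim in the abstract follows directly from the quoted sparsity figures. A minimal Python sketch of that arithmetic, plus the per-Node totals implied by the NPU numbers, is below. It assumes every sparse (zero) weight or activation skips exactly one MAC, which is a simplification; the 1,000,000-MAC dense workload and the function name are hypothetical.

```python
# Figures taken from the abstract.
SMALL_MODEL_SPARSITY = 0.523   # average sparsity, small models
LARGE_MODEL_SPARSITY = 0.538   # average sparsity, medium/large image models
NPUS_PER_NODE = 4
ENGINES_PER_NPU = 8
SRAM_PER_NPU_KB = 100


def effective_macs(dense_macs: float, sparsity: float) -> float:
    """MACs left after skipping the sparse share (idealized assumption)."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    return dense_macs * (1.0 - sparsity)


dense = 1_000_000  # hypothetical dense MAC count for one inference
small = effective_macs(dense, SMALL_MODEL_SPARSITY)
large = effective_macs(dense, LARGE_MODEL_SPARSITY)
print(f"small models: {small / dense:.1%} of dense MACs remain")
print(f"medium/large models: {large / dense:.1%} of dense MACs remain")

# Per-Node totals implied by "groups of 4" NPUs.
engines_per_node = NPUS_PER_NODE * ENGINES_PER_NPU
sram_per_node_kb = NPUS_PER_NODE * SRAM_PER_NPU_KB
print(f"per Node: {engines_per_node} Compute Engines, {sram_per_node_kb} KB SRAM")
```

Under that idealized assumption, a bit under half of the dense MACs remain in both cases, and each Node works out to 32 Compute Engines and 400 KB of local SRAM.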
