You weren't Alan Jones' grade 8 teacher by any chance?

I joked a while ago that I was going to subscribe to MF and AFR so I could invest against their advice... can't go wrong, I reckon.

BRN Discussion Ongoing

- Thread starter TechGirl

FJ-215

Regular

Just watching Bloomberg TV. As luck would have it, currently live from Davos.

I just had a browse through CSL's financials:

https://www.intelligentinvestor.com.au/shares/asx-csl/csl-limited/financials

What struck me was that a $132B company that is highly profitable carries $7B of debt (a bit over twice its net profit) and pays a quarter of a billion in interest, money that could otherwise go to shareholders as dividends.
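For anyone wanting to check the arithmetic, here is a quick Python sketch using only the figures quoted in the post ($132B market cap, $7B debt, roughly $0.25B a year in interest). It derives the implied average interest rate and the rough net profit implied by "a bit over twice the net profit"; these are back-of-envelope numbers, not CSL's reported accounts.

```python
# Figures as quoted in the post (AUD, approximate); not checked against CSL's filings.
market_cap = 132e9   # "$132B company"
debt = 7e9           # "$7B debt"
interest = 0.25e9    # "a quarter of a billion in interest"

# Average cost of the debt implied by the two figures.
implied_rate = interest / debt
# Debt relative to what the market values the company at.
debt_to_market_cap = debt / market_cap
# Debt is "a bit over twice" net profit, so net profit is a bit under debt / 2.
net_profit_ceiling = debt / 2
# Interest as a share of that profit (a lower bound, since profit is a bit lower).
interest_share_of_profit = interest / net_profit_ceiling

print(f"Implied interest rate:  {implied_rate:.1%}")
print(f"Debt / market cap:      {debt_to_market_cap:.1%}")
print(f"Implied net profit:     just under ${net_profit_ceiling / 1e9:.1f}B")
print(f"Interest / net profit:  at least {interest_share_of_profit:.1%}")
```

Run as-is, it prints about 3.6%, 5.3%, just under $3.5B and at least 7.1%: modest debt relative to the company's value, but still a noticeable slice of profit going to lenders.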

Then I think back to the 80s and the corporate raiders unleashed by the deregulation part of the Davos/WTO mantra (privatization, deregulation, incentivization, globalization), who took over cash-rich companies just to plunder their assets and discard the corporate shell, wreaking havoc in Western economies.

So companies need to be highly geared to avoid that trap, and the Davos boys get the interest payments for free - a nice protection racket - leaving the companies performing a tightrope act balancing profit and debt.

So, once the money starts to flow, BrainChip will need to distribute a lot of the profit to keep the sharks at bay - the rainy-day-sock-under-the-bed will only attract them - or they could go on a buying spree.

All the talk there at the moment is de-globalization/regionalization

Yep! They've realized that in satisfying their avarice they have raked it all in and the global economy is on the verge of collapse, so to preserve their wealth they are prepared to do a little penance and pay a bit of tax so the proles can keep their noses to the grindstone.

Just watching Bloomberg TV. As luck would have it, currently live from Davos.

All the talk there at the moment is de-globalization/regionalization

[Thinks - "Now where did I put my lamp?"]

Quatrojos

Regular

Hey Z,

This is from 2020, I believe; I've been down this hole before!

Q

Tothemoon24

Top 20

cosors

👀

Let everyone make up his own mind.

I'm actually only posting this opinion because of the funny remarks below. Either we are in the WRONG forum and should switch to the "high-quality discussions" in the "new generation forum" for BrainChip stock, or else Simon hasn't discovered TSE yet and should join us. I find some of his assessments to be...

Just my opinion...

"BrainChip succeeds in coup with industry giant

Simon / 05.23.22 / 12:43 pm

Shares in Australian startup BrainChip (WKN: A14Z7W) shot up 15% after trading began in Australia on Monday. Late on Sunday, the AI specialist had reported its inclusion in the partner program of an absolute industry giant. Is the Australian AI gem's breakthrough imminent?

BrainChip Holding is an Australian technology company offering solutions in artificial intelligence and machine learning (AI & ML). The chip developer's flagship product is a neuromorphic processor called Akida, which is said to closely mimic the way the brain works and therefore to be particularly energy-efficient. The company is already worth over US$1.4 billion on the stock market.

Inclusion in ARM's partner program

BrainChip shares jumped 15% on the Australian Securities Exchange on Monday morning and eventually closed up 8.55% at AU$1.27.

What put investors in such a buying mood was, to all appearances, a company announcement from late Sunday, according to which the Australians have been accepted into the AI Partner Program of ARM, one of the biggest names in the chip sector. The British chip designer's blueprints are the world's dominant standard for smartphones, tablets and mobile IoT devices.

ARM is considered a sort of "neutral Switzerland" because of its position in the semiconductor industry. Tech giant Nvidia had tried to acquire the company for US$40 billion, but earlier this year the deal fell through over concerns raised by competition regulators.

The British company's partner program is an ecosystem of hardware and software specialists designed to enable developers to build the next generation of AI solutions, according to the statement.

It reportedly helps members form strategic alliances and provides them with technical support and resources to reach partners, developers and decision makers in their target markets.

Mohamed Awad, vice president of IoT and embedded at ARM, said:

"As part of ARM's AI Partner Program, BrainChip will enable developers to address the need for high-performance and ultra-low-power edge AI inference, enabling new innovation opportunities."

Partner network grows

BrainChip has scored another strong coup by being accepted into the AI Partner Program of market giant ARM. In all likelihood, this will enable the chip manufacturer to land further partnerships to expand the commercial reach of its Akida processors.

In Europe, it already has distribution alliances with Easttronics and SalesLink. A month ago, it added an exciting collaboration with Swiss AI firm NVISO, which specializes in human behavior analysis. Heavyweights such as Mercedes and the US Air Force have also already announced cooperation with the Australians.

Breakthrough still unlikely at present

However, in our view it is probably still too early for a substantial investment in BrainChip. Experience shows that the long-term chances of success for chip start-ups are extremely low. Investors seem to have recently relearned this lesson: after the Australians were granted an important patent in January and the share price exploded 200% to AU$2.20, it promptly halved again.

And I thought that was because of Mercedes, with the patent as the cherry on top...

ARM's partner program will undoubtedly increase BrainChip's visibility in the industry, but the company is far from the only edge AI specialist in the ecosystem. Besides a breakthrough, which remains unlikely, investors could also bet on an acquisition by a chip giant such as Intel or Nvidia, although risk-averse investors should do so only with a very small portfolio stake.

=>BrainChip: Discuss now!<=

High-quality discussions and real information advantages: Benefit just like thousands of other investors from our unique Live Chat, the new generation forum for BrainChip stock.

Not a member yet? Here you can register for free!"

https://www.sharedeals.de/brainchip-gelingt-coup-mit-branchengroesse/#gref

Come on, they need insight from you and it's free! Give yourselves a jolt and register now.

Zedjack33

Regular

Awe Bugga.

Hey Z,

This is from 2020, I believe; I've been down this hole before!

Q

Fact Finder

Top 20

Worth a second read:

What’s So Exciting About Neuromorphic Computing

By Aaryaa Padhyegurjar, May 18, 2022

https://www.electronicsforu.com/technology-trends/exciting-neuromorphic-computing/amp#

The human brain is the most efficient and powerful computer that exists. Even after decades of technological advances, no computer has managed to beat the brain in efficiency, power consumption, and many other respects.

Will neuromorphic computers be able to do it?

The exact sequence of events that take place when we do a particular activity on our computer, or on any other device, completely depends on its inherent architecture. It depends on how the various components of the computer like the processor and memory are structured in the solid state.

Fast forward to today

Nowadays, almost all companies have dedicated teams working on neuromorphic computing. Groundbreaking research is being done in multiple research organisations and universities. It is safe to say that neuromorphic computing is gaining momentum and will continue to do so as various advancements are being made.

What’s interesting to note is that although this is a specialised field with prerequisites from various topics, including solid-state physics, VLSI, neural networks, and computational neurobiology, undergraduate engineering students are extremely curious about this field.

At IIT Kanpur, Dr Shubham Sahay, Assistant Professor in the Department of Electrical Engineering, introduced a course on neuromorphic computing last year. Although it is a postgraduate course, it drew strong participation from undergraduates as well. “Throughout the course, they were very interactive. The huge B.Tech participation in my course bears testimony to the fact that undergrads are really interested in this topic. I believe that this (neuromorphic computing) could be introduced as one of the core courses in the UG curriculum in the future,” he says.

Getting it commercial

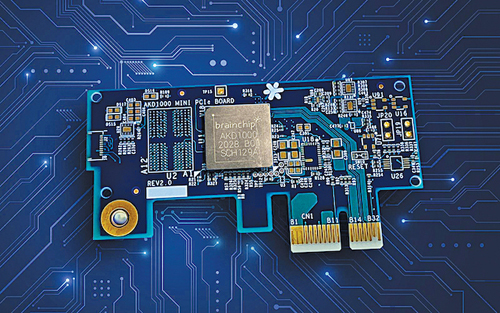

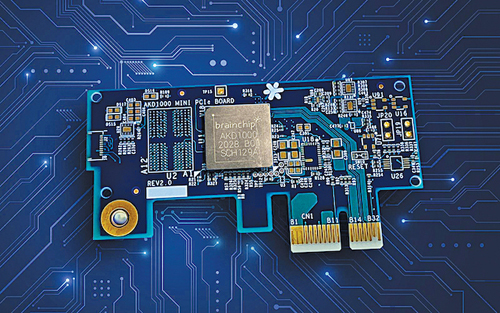

Until recently, neuromorphic computing was a term widely used only in research, not in the commercial arena. However, as of January 18, 2022, BrainChip, a leading provider of ultra-low-power, high-performance AI technology, has commercialised its AKD1000 AIoT chip. Developers, system integrators, and engineers can now buy AKD1000-powered Mini PCIe boards and use them in their applications, especially those requiring edge computing, low power consumption, and high-performance AI.

“It’s meant as our entry-level product. We want to proliferate this into as many hands as we can and get people designing in the Akida environment,” says Rob Telson, Vice President of Worldwide Sales at BrainChip. Anil Mankar, Co-founder and Chief Development Officer of BrainChip, explains, “We are enabling system integrators to easily use neuromorphic AI in their applications. In India, if some system integrators want to manufacture the board locally, they can take the bill of materials from us (BrainChip) and manufacture it locally.”

What’s fascinating about Akida is that it enables sensor nodes to compute without depending on the cloud. Further, BrainChip’s AI technology not only performs audio- and video-based learning but also handles other sensor modalities such as taste, vibration, and smell. You can even use it to build a sensor that performs wine tasting, as BrainChip’s wine-tasting demonstration shows.

Another major event this year was Mercedes implementing BrainChip’s Akida technology in its Vision EQXX concept electric vehicle. This is a big deal, since it shows Akida being tried and tested for a smart automotive experience. All the features Akida provides, including facial recognition and keyword spotting, consume extremely little power.

“This is where we get excited. You’ll see a lot of these functionalities in vehicles—recognition of voices, faces, and individuals in the vehicle. This allows the vehicles to have customisation and device personalisation according to the drivers or the passengers as well,” says Telson. These really are exciting times.

Neuromorphic hardware, neural networks, and AI

The way neurons work is eerily similar to an electrical process. Neurons communicate with each other via synapses. Whenever they receive input, they produce electrical signals called spikes (also called action potentials), and the event is called neuron spiking. When this happens, chemicals called neurotransmitters are released into hundreds of synapses and activate the respective neurons. That is why this process is so fast.

Artificial neural networks mimic the logic of the human brain, but on a regular computer. The thing is, regular computers use the von Neumann architecture, which is very different from the architecture of our brain and is very power-hungry. We may not be able to rely on CMOS logic in the von Neumann architecture for long: we will eventually reach the limit to which silicon can be exploited. We are nearing the end of Moore’s Law, and a better computing mechanism is needed. Neuromorphic computing is the solution because neuromorphic hardware realises the structure of the brain in solid state.

As we make progress in neuromorphic hardware, we will be able to deploy neural networks on it. A spiking neural network (SNN) is a type of artificial neural network that incorporates time into its model. It transmits information only when triggered or, in other words, when it spikes. SNNs used together with neuromorphic chips will transform the way we compute, which is why they are so important for AI.
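To make "transmits information only when triggered" concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a standard textbook model used in much SNN research. It is not BrainChip's Akida implementation, which the article does not describe, and the threshold, leak and input values are illustrative assumptions. The neuron accumulates input, lets it decay at each time step, and emits a spike (1) only when its potential crosses the threshold.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9, reset=0.0):
    """Leaky integrate-and-fire neuron over discrete time steps.

    inputs: input current at each time step (illustrative values).
    Returns one 0/1 spike flag per time step.
    """
    v = reset          # membrane potential
    spikes = []
    for current in inputs:
        v = leak * v + current   # decay the old potential, integrate new input
        if v >= threshold:       # fire only when the threshold is crossed
            spikes.append(1)
            v = reset            # reset the potential after a spike
        else:
            spikes.append(0)     # stay silent: nothing is transmitted
    return spikes


print(simulate_lif([0.3, 0.3, 0.3, 0.3, 0.0, 0.0, 0.6, 0.6]))
# [0, 0, 0, 1, 0, 0, 0, 1]
```

Output appears only at the two steps where enough input has built up; the rest of the time the neuron stays silent, which is the property the article ties to low power consumption.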

How to get started with SNNs

Since the entire architecture of neuromorphic AI chips is different, it is only natural to expect the corresponding software framework to be different too, and developers to need retraining to work with SNNs. However, that is not the case with MetaTF, a free software development framework that BrainChip launched in April 2021.

“We want to make our environment extremely simple to use. Having people learn a new development environment is not an efficient way to move forward,” says Telson. “We had over 4600 unique users start looking at and playing with MetaTF in 2021. There’s a community out there that wants to learn.”

India and the future of neuromorphic computing

When asked about the scope of neuromorphic computing in India, Dr Sahay says, “As of now, the knowledge, dissemination, and expertise in this area is limited to eminent institutes such as the IITs and IISc, but with government initiatives such as the India Semiconductor Mission (ISM) and NITI Aayog’s national strategy for artificial intelligence (#AIforall), this field would get a major boost. Also, with respect to opportunities in industry, several MNCs, such as Micron and SanDisk (Western Digital), have memory divisions in India that develop the memory elements which will be used for neuromorphic computing.” There’s a long way to go, but there is no lack of potential. More companies will eventually set up neuromorphic teams in India.

The author, Aaryaa Padhyegurjar, is an Industry 4.0 enthusiast with a keen interest in innovation and research.

Bravo

Meow Meow 🐾

Now we are friends with SiFive, and SiFive is hooked up with Intel Foundry Services,

We are also friends with Nvidia,

and now we are friends with ARM, so ...

if one of the big persons were to try a take-over of BRN, could that spark a bidding war?

And where would the SP be after that?

And could it sustain that price?

Bravo

Meow Meow 🐾

Sorry everyone, this was a reaction against other slightly, sorta-sexist posts - just trying to keep the equilibrium. Love youze all! B x

Evermont

Stealth Mode

Interesting couple of posts on the Yahoo conversation board. The first article posted also provides a great illustration expanding on the chiplets topic touched on by FF and others over the weekend.

https://finance.yahoo.com/quote/BRCHF/community?p=BRCHF

www.fierceelectronics.com

Tech giants Intel, Meta, Arm, Google Cloud, AMD, Qualcomm, TSMC and ASE form chiplet consortium

Open UCIe standard defines how chiplets are integrated in system-on-chip design to benefit fabs and designers

JoMo68

Regular

LDN really was a legend…

That is us, but consider if you were employed at BrainChip, working on Peter van der Made's vision, with a family, a mortgage and a partner reading all the negative press, and you not really being able to tell them anything.

I do think it is different being on the inside as opposed to standing behind the crowd when the rocks are being thrown.

Why do I think this? Because to me it is exactly like my time in the NSW Police, when every officer was being tarred with the same brush because of the actions of corrupt elements.

The one time I spoke personally with Mr. Dinardo, at his last AGM, he was clearly nervous, his hands were sweaty, and he remained that way until it became clear I was not there to plunge a knife into his chest. He then relaxed and spoke effusively about BrainChip, despite knowing by then that I was a former police officer and lawyer.

I do not often disagree with you but I think these things do much more damage than simple surface scratches.

My opinion only DYOR

FF

AKIDA BALLISTA

... and let's not forget CSL's market cap of $132B, which in BRN's case would be about $80 per share (which, IMO and considering the comparative market and earnings potential, is a fraction of what BRN could be worth), so I can't see PvdM and Co selling anytime soon.

Now we are friends with SiFive, and SiFive is hooked up with Intel Foundry Services,

We are also friends with Nvidia,

and now we are friends with ARM, so ...

if one of the big persons were to try a take-over of BRN, could that spark a bidding war?

And where would the SP be after that?

And could it sustain that price?
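The $80-per-share figure above follows from dividing CSL's market cap by the number of BRN shares on issue. The post doesn't state that share count, so this sketch simply backs out the count implied by the two quoted numbers; it is derived here, not taken from BrainChip's share register.

```python
# Figures quoted in the post.
csl_market_cap = 132e9      # CSL market cap, "$132B"
brn_price_at_csl_cap = 80   # "about $80 per share" for BRN at that valuation

# Price = market cap / shares on issue, so shares = market cap / price.
implied_brn_shares = csl_market_cap / brn_price_at_csl_cap

print(f"Implied BRN shares on issue: {implied_brn_shares / 1e9:.2f} billion")
```

It prints 1.65 billion: that is the share count at which a $132B valuation works out to roughly $80 a share.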

So India has a government-sponsored program to boost AI SNN/ML and a couple of unis with standing-room-only lectures, while Australia has a couple of PhD students lucky enough to work at BrainChip's research labs.

Worth a second read:

What’s So Exciting About Neuromorphic Computing

By Aaryaa Padhyegurjar

May 18, 2022

https://www.electronicsforu.com/technology-trends/exciting-neuromorphic-computing/amp#

The human brain is the most efficient and powerful computer that exists. Even after decades of technological advances, no computer has managed to beat the brain in efficiency, power consumption, and many other respects.

Will neuromorphic computers be able to do it?

The exact sequence of events that take place when we do a particular activity on our computer, or on any other device, completely depends on its inherent architecture. It depends on how the various components of the computer like the processor and memory are structured in the solid state.

Almost all modern computers we use today are based on the von Neumann architecture, a design first introduced in the mid-1940s. In it, the processor is responsible for executing instructions and programs, while the memory stores those instructions and programs. If you think of your body as an embedded device, your brain is the processor as well as the memory: the architecture of our brain makes no distinction between the two.

Since we know for a fact that the human brain is superior to every single computer that exists, doesn’t it make sense to modify computer architecture in a way that it functions more like our brain? This was what many scientists realised in the 1980s, starting with Carver Mead, an American scientist and engineer.

Fast forward to today

Nowadays, almost all companies have dedicated teams working on neuromorphic computing. Groundbreaking research is being done in multiple research organisations and universities. It is safe to say that neuromorphic computing is gaining momentum and will continue to do so as various advancements are being made.

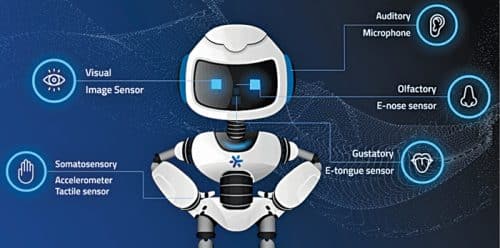

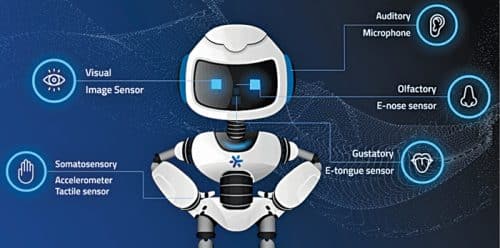

The 5 sensor modalities (Source: BrainChip Inc.)

What’s interesting to note is that although this is a specialised field with prerequisites from various topics, including solid-state physics, VLSI, neural networks, and computational neurobiology, undergraduate engineering students are extremely curious about this field.

At IIT Kanpur, Dr Shubham Sahay, Assistant Professor in the Department of Electrical Engineering, introduced a course on neuromorphic computing last year. Although it is a postgraduate course, it drew strong participation from undergraduates as well. “Throughout the course, they were very interactive. The huge B.Tech participation in my course bears testimony to the fact that undergrads are really interested in this topic. I believe that this (neuromorphic computing) could be introduced as one of the core courses in the UG curriculum in the future,” he says.

Getting it commercial

Until recently, neuromorphic computing was a term widely used only in research, not in the commercial arena. However, as of January 18, 2022, BrainChip, a leading provider of ultra-low-power, high-performance AI technology, has commercialised its AKD1000 AIoT chip. Developers, system integrators, and engineers can now buy AKD1000-powered Mini PCIe boards and use them in their applications, especially those requiring edge computing, low power consumption, and high-performance AI.

“It’s meant as our entry-level product. We want to proliferate this into as many hands as we can and get people designing in the Akida environment,” says Rob Telson, Vice President of Worldwide Sales at BrainChip. Anil Mankar, Co-founder and Chief Development Officer of BrainChip, explains, “We are enabling system integrators to easily use neuromorphic AI in their applications. In India, if some system integrators want to manufacture the board locally, they can take the bill of materials from us (BrainChip) and manufacture it locally.”

What’s fascinating about Akida is that it enables sensor nodes to compute without depending on the cloud. Further, BrainChip’s AI technology not only performs audio- and video-based learning but also handles other sensor modalities such as taste, vibration, and smell. You can even use it to build a sensor that performs wine tasting, as BrainChip’s wine-tasting demonstration shows.

Another major event this year was Mercedes implementing BrainChip’s Akida technology in its Vision EQXX concept electric vehicle. This is a big deal, since it shows Akida being tried and tested for a smart automotive experience. All the features Akida provides, including facial recognition and keyword spotting, consume extremely little power.

“This is where we get excited. You’ll see a lot of these functionalities in vehicles—recognition of voices, faces, and individuals in the vehicle. This allows the vehicles to have customisation and device personalisation according to the drivers or the passengers as well,” says Telson. These really are exciting times.

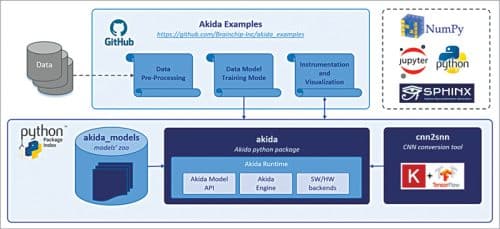

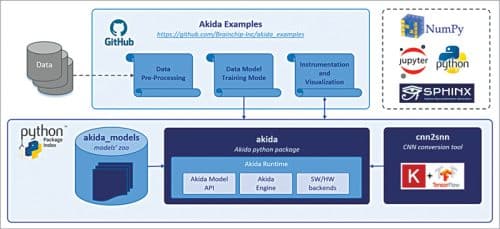

Akida MetaTF ML Framework (Source: MetaTF)

Neuromorphic hardware, neural networks, and AI

The way neurons work is eerily similar to an electrical process. Neurons communicate with each other via synapses. Whenever they receive input, they produce electrical signals called spikes (also called action potentials), and the event is called neuron spiking. When this happens, chemicals called neurotransmitters are released into hundreds of synapses and activate the respective neurons. That is why this process is so fast.

Artificial neural networks mimic the logic of the human brain, but on a regular computer. The thing is, regular computers use the von Neumann architecture, which is very different from the architecture of our brain and is very power-hungry. We may not be able to rely on CMOS logic in the von Neumann architecture for long: we will eventually reach the limit to which silicon can be exploited. We are nearing the end of Moore’s Law, and a better computing mechanism is needed. Neuromorphic computing is the solution because neuromorphic hardware realises the structure of the brain in solid state.

As neuromorphic hardware matures, we will be able to deploy neural networks on it. A spiking neural network (SNN) is a type of artificial neural network that incorporates time into its model: it transmits information only when triggered, or in other words, when it spikes. SNNs used together with neuromorphic chips will transform the way we compute, which is why they are so important for AI.
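The article describes spiking only qualitatively. A common textbook abstraction of it is the leaky integrate-and-fire (LIF) neuron: the membrane potential accumulates input, leaks a little every time step, and the neuron emits a spike (and resets) only when the potential crosses a threshold. The Python sketch below is a minimal illustration with assumed parameter values (threshold 1.0, leak factor 0.9); the class and function names are hypothetical, and this is not how Akida or any particular chip implements its neurons.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LIFNeuron:
    """Hypothetical leaky integrate-and-fire neuron, for illustration only."""
    threshold: float = 1.0   # assumed potential at which the neuron spikes
    leak: float = 0.9        # assumed fraction of potential kept each step
    potential: float = 0.0   # current membrane potential

    def step(self, input_current: float) -> bool:
        # Leak part of the stored potential, then integrate the new input.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(neuron: LIFNeuron, inputs: List[float]) -> List[int]:
    """Feed inputs one step at a time; return the steps at which it spiked."""
    spike_times: List[int] = []
    for t, x in enumerate(inputs):
        if neuron.step(x):
            spike_times.append(t)
    return spike_times


if __name__ == "__main__":
    # Weak input has to accumulate over several steps before a spike;
    # a single strong input fires immediately. Zero input produces nothing.
    print(run(LIFNeuron(), [0.3, 0.3, 0.3, 0.3, 0.0, 0.0, 1.2, 0.0]))
```

With these assumed values the example prints `[3, 6]`: the neuron stays silent (and sends nothing downstream) except at the two moments its potential crosses the threshold, which is the "transmits only when spiked" behaviour the article refers to.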

How to get started with SNNs

Since the entire architecture of neuromorphic AI chips is different, it is only natural to expect the corresponding software framework to be different too, and developers to need training to work with SNNs. However, that is not the case with MetaTF, a free software development environment that BrainChip launched in April 2021.

“We want to make our environment extremely simple to use. Having people learn a new development environment is not an efficient way to move forward,” says Telson. “We had over 4600 unique users start looking at and playing with MetaTF in 2021. There’s a community out there that wants to learn.”

India and the future of neuromorphic computing

When asked about the scope of neuromorphic computing in India, Dr Sahay mentions, “As of now, the knowledge, dissemination, and expertise in this area are limited to eminent institutes such as the IITs and IISc, but with government initiatives such as the India Semiconductor Mission (ISM) and NITI Aayog’s national strategy for artificial intelligence (#AIforall), this field would get a major boost. Also, with respect to opportunities in the industry, several MNCs, such as Micron and SanDisk (Western Digital), have memory divisions in India that develop the memory elements which will be used for neuromorphic computing.” There’s a long way to go, but there is absolutely no lack of potential. More companies would eventually set up neuromorphic teams in India.

BrainChip Inc. is also building its university strategy to make sure students are being educated in this area. Slowly, research in neuromorphic computing is making its way into both the commercial world and academia. Someday, we might be able to improve our self-driving cars and create artificial skins and prosthetic limbs that can learn about their surroundings! Consider your smart devices: all of them depend on the internet and the cloud. Equipped with a neuromorphic chip, these devices could compute on their own! This is just the start of the neuromorphic revolution.

The author, Aaryaa Padhyegurjar, is an Industry 4.0 enthusiast with a keen interest in innovation and research

PYFO Australia.

Violin1

Regular

Wait until you see mine......that'll bring the boots out........lol

So, what's happening, other than that dorky dude's butt getting way more attention than it's worth?

Fullmoonfever

Top 20

I see the DoD issued a pre-release in late April. It may have already been covered, but I found this one interesting.

Similar wording I've seen somewhere previously....not sure of too many COTS....my bold

Note - the doc is 422 pages.

DoD PDF

DEPARTMENT OF DEFENSE

SMALL BUSINESS INNOVATION RESEARCH (SBIR) PROGRAM

SBIR 22.2 Program Broad Agency Announcement (BAA)

April 20, 2022: DoD BAA issued for pre-release

May 18, 2022: DoD begins accepting proposals

June 15, 2022: Deadline for receipt of proposals no later than 12:00 p.m. ET

AF NUMBER: AF222-0010

TITLE: Event Based Star Tracker

TECH FOCUS AREAS: Biotechnology Space; Nuclear

TECHNOLOGY AREAS: Nuclear; Sensors; Space Platform

OBJECTIVE: Develop a low SWAP, low cost, high angular rate star tracker for satellite and nuclear enterprise applications.

DESCRIPTION: Existing star tracking attitude sensors for small satellites and rocket applications are limited in their ability to operate above an angular rate of approximately 3-5 degrees/second, thus rendering them useless for both satellite high spin (i.e. lost in space) applications, as well as spinning rocket body applications. Recent advances in neuromorphic (a.k.a. event based) sensors have dramatically improved their overall performance[2], which allows them to be considered for these high angular rate applications[1]. In addition, the difference between a traditional frame-based camera and an event based camera is simply a matter of how the sensor is read out, which should allow for electronic switching between event based (i.e. high angular rate) and frame (i.e. low angular rate) modes within the star tracker. Additional advantages inherent in an event based sensor include high temporal resolution (µs) and high dynamic range (140 dB), which could allow for multiple modes of continuous attitude determination (i.e. star tracking, sun sensor, earth limb sensor) within a single small, low cost sensor package. All technology solutions that meet the topic objective are solicited in this call, however, neuromorphic sensors appear ideally suited to meet the technical objectives and should therefore be considered in the solution trade space. The scope of this effort will be to first analyze the capability of event based sensors to meet a high angular rate star tracker application, define the trade space for the technical solution against the satellite and nuclear enterprise requirements, develop a working prototype and test it against the requirements, and finally in Phase 3 move to initial production of a commercial star tracker unit.

PHASE I: Acquire existing state of the art COTS neuromorphic (a.k.a. event based) sensor or modify existing star tracking sensor as appropriate. Perform analysis and testing of the event based sensor to determine feasibility in the high angular rate star tracking satellite and nuclear enterprise applications.

PHASE II: Development of a prototype event based high angular rate star tracker. Ideally this prototype will have the ability to be operated in both event based mode, as well as switch back and forth to standard (i.e. frame) mode. Explore and document the technical trade space (maximum angular rate, minimum detection threshold, associated algorithm development, etc.) and potential military/commercial application of the prototype device.

PHASE III DUAL USE APPLICATIONS: Phase 3 efforts will focus on transitioning the developed high angular rate attitude sensor technology to a working commercial and/or military solution. Potential applications include commercial and military satellites, as well as missile applications.

NOTES: The technology within this topic is restricted under the International Traffic in Arms Regulation (ITAR), 22 CFR Parts 120-130, which controls the export and import of defense-related material and services, including export of sensitive technical data, or the Export Administration Regulation (EAR), 15 CFR Parts 730-774, which controls dual use items. Offerors must disclose any proposed use of foreign nationals (FNs), their country(ies) of origin, the type of visa or work permit possessed, and the proposed tasks intended for accomplishment by the FN(s) in accordance with section 5.4.c.(8) of the Announcement and within the AF Component-specific instructions. Offerors are advised foreign nationals proposed to perform on this topic may be restricted due to the technical data under US Export Control Laws. Please direct questions to the Air Force SBIR/STTR HelpDesk:

usaf.team@afsbirsttr.us

The only other mention of neuromorphic in my search (haven't checked other keywords yet) is:

OSD222-D02 TITLE: Advanced Integrated CMOS Terahertz (THz) Focal Plane Arrays (FPA)

OUSD (R&E) MODERNIZATION PRIORITY: Microelectronics, AI/ML

TECHNOLOGY AREA(S): Information Systems, Modeling and Simulation Technology

OBJECTIVE: Develop advanced THz-FPA that offer large pixel count, high dynamic range, and high speed over a broad THz frequency range.

DESCRIPTION: Electromagnetic waves in the THz spectral band (roughly covering the 0.1 – 3 THz frequency range) offer unique properties for chemical identification, nondestructive imaging, and remote sensing. However, existing THz devices have not yet provided all the functionalities required to fulfill many of these applications. Although complementary metal–oxide–semiconductor (CMOS) technologies have been offering robust solutions below 1 THz, the high-frequency portion of the THz band still lacks mature devices. For example, most of the THz imaging and spectroscopy systems use single-pixel detectors, which results in a severe tradeoff between the measurement time and field of view. To address this problem, a large pixel count, high dynamic range, high speed, and broadband THz-FPA needs to be developed. The proposed THz-FPA can operate either as a frequency-tunable continuous-wave detector or a broadband-pulsed detector. It should be able to operate over a 1 – 3 THz frequency range while offering more than 30 decibel (dB) dynamic range per pixel. It should have more than 1,000 pixels and a frame rate of at least 1 hertz (Hz). Some anticipated features include developing THz-FPAs by exploring three-dimensional microstructures, smart readout integrated circuits, and processors that incorporate neuromorphic computing and ML to increase the data collection efficiency.
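The AF222-0010 star tracker description says the difference between a frame-based and an event-based camera is mainly how the sensor is read out. One common way to illustrate that is to simulate event output from a sequence of frames: each pixel reports an event only when its log intensity has changed by more than a contrast threshold since that pixel's last event, so a static scene produces no output at all. The Python sketch below is a simplified, hypothetical emulator; the function name, the 0.2 threshold and the (timestamp, x, y, polarity) event tuple are assumptions, and real event sensors do this asynchronously in per-pixel circuitry rather than from frames.

```python
import math
from typing import List, Tuple

# (timestamp, x, y, polarity) where polarity is +1 (brighter) or -1 (darker)
Event = Tuple[int, int, int, int]
Frame = List[List[float]]  # frame[y][x] = pixel intensity


def frames_to_events(frames: List[Frame], timestamps: List[int],
                     contrast_threshold: float = 0.2) -> List[Event]:
    """Hypothetical frame-to-event emulator, for illustration only."""
    eps = 1e-6  # avoids log(0) for completely dark pixels
    # Reference log intensity per pixel, initialised from the first frame.
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                level = math.log(value + eps)
                delta = level - ref[y][x]
                # Only pixels whose brightness changed enough report anything.
                if abs(delta) >= contrast_threshold:
                    events.append((t, x, y, 1 if delta > 0 else -1))
                    ref[y][x] = level  # new reference for this pixel
    return events


if __name__ == "__main__":
    still = [[1.0, 1.0], [1.0, 1.0]]
    moved = [[1.0, 2.0], [1.0, 0.5]]  # one pixel brighter, one darker
    # Only the two changed pixels produce events; the repeated frame adds none.
    print(frames_to_events([still, moved, moved], [0, 10, 20]))
```

The example prints `[(10, 1, 0, 1), (10, 1, 1, -1)]`. Because only changing pixels are reported, the data rate tracks scene motion rather than a fixed frame rate, which is the property the solicitation leans on for high angular rate tracking.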

