https://www.srcinc.com/products/int...ondor-high-performance-embeded-computing.html

Agile Condor® High-Performance Embedded Computing

The Agile Condor high-performance embedded computing (HPEC) architecture offers sensor-agnostic, on-board, real-time data processing to deliver actionable intelligence to the warfighter.

Originally designed to fly in a pod-based enclosure onboard remotely piloted aircraft (RPA), the Agile Condor system comprises advanced hardware, software and processing algorithms that can deliver super-computer-like performance in extremely size, weight and power (SWaP) constrained environments, whether in the air, in space, on land or at sea.

For the first time ever, and in real-time, the Agile Condor system demonstrated: image processing, video processing and pattern recognition through the use of deep convolutional neural networks. A newly invented, high performance, pod-based computer architecture, called Agile Condor (patent...

www.semanticscholar.org


-------

https://bascomhunter.com/products-s...c-processors/asic-solutions/3u-vpx-snap-card/

3U VPX SNAP Card

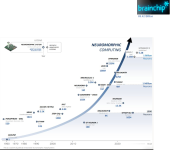

Bascom Hunter’s SNAP Card (Spiking Neuromorphic Advanced Processor) is a high-performance 3U OpenVPX AI/ML processor built for rugged, mission-critical environments. The card is SOSA-aligned, HOST-compatible, and conduction cooled. It combines a Xilinx UltraScale+ RFSoC FPGA with five BrainChip AKD1000 spiking neuromorphic processors to achieve the best of both signal processing and neuromorphic computing, featuring a total of 6 million neurons and 50 billion synapses across the card. Unlike traditional machine learning accelerators such as GPUs or TPUs, neuromorphic processors are designed to mimic the biological efficiency of the human brain, allowing the SNAP Card to run multiple ML models in parallel at exceptionally low power (just 1 W per model) without sacrificing speed or accuracy, while the FPGA enhances input/output operations and performs any desired signal processing tasks. This combination in a rugged, interoperable, and military-hardened package makes Bascom Hunter’s SNAP Card the ideal solution for the concurrent and parallel processing of real-time, multi-modal, and multi-sensor data on autonomous, unattended, denied, or otherwise battery-constrained military systems.
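As a rough sanity check on the numbers quoted above, here is a minimal sketch that divides the card-level totals across the five AKD1000 chips and scales the quoted 1 W per model. The even split across chips and the function names are assumptions for illustration only. The product page gives no figure for the FPGA or total board power, so those are left out.

```python
# Figures quoted in the SNAP Card description above.
NUM_AKD1000_CHIPS = 5
TOTAL_NEURONS = 6_000_000
TOTAL_SYNAPSES = 50_000_000_000
WATTS_PER_MODEL = 1.0


def per_chip(total: int, chips: int = NUM_AKD1000_CHIPS) -> float:
    """Average share per chip, ASSUMING the total is split evenly."""
    return total / chips


def neuromorphic_power_watts(num_models: int) -> float:
    """Power for `num_models` concurrent models at the quoted 1 W each.

    Hypothetical helper: excludes the FPGA and the rest of the card,
    which the description does not quantify.
    """
    return num_models * WATTS_PER_MODEL


neurons_per_chip = per_chip(TOTAL_NEURONS)
synapses_per_chip = per_chip(TOTAL_SYNAPSES)

print(f"neurons per chip:  {neurons_per_chip:,.0f}")
print(f"synapses per chip: {synapses_per_chip:,.0f}")
print(f"5 models in parallel: {neuromorphic_power_watts(5):.1f} W")
```

Under the even-split assumption, each chip accounts for about 1.2 million neurons and 10 billion synapses, and running one model per chip would draw about 5 W on the neuromorphic side.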


– Automated Target Recognition (ATR) on full motion video feeds and imagery (to include 4k and higher)

– Real-time detection and identification of threat radars and their acquisition/operating modes

– Detection of FISINT (Foreign Instrumentation Signature Intelligence) and/or hacking across the airframe’s 1553 communications bus

– Perform communications analysis to include speech-to-text and foreign language translation of intercepted communications

– Fuse multiple infrared cameras (e.g., SWIR, MWIR, LWIR) to provide a combined infrared operating picture on the ground

– Multi-user, simultaneous modulation/demodulation

-------

Compare the advertised feature set of the Bascom Hunter product with the features highlighted in the Agile Condor promo video: