Frangipani

The University of Washington in Seattle, interesting…

UW’s Department of Electrical & Computer Engineering had already spread the word about a summer internship opportunity at BrainChip last May. In fact, the opportunity was promoted by one of their graduating Master’s students, who was himself a BrainChip intern at the time (April–July 2023).

I wonder what exactly Rishabh Gupta is up to these days. He has been in stealth mode ever since graduating from UW and simultaneously wrapping up his internship at BrainChip. What he has chosen to reveal on LinkedIn is that he now lives in San Jose, CA, and is “Building next-gen infrastructure and aligned services optimized for multi-modal Generative AI”, or rather that his start-up intends to build said infrastructure and services “to democratise AI”… He certainly has a very impressive CV so far, as well as first-hand experience with Akida 2.0, given the time frame of his internship and his mention of vision transformers.

Brainchip Inc: Summer Internship Program 2023

I am Rishabh Gupta, I am a 2nd year ECE PMP masters student and currently working at Brainchip.
advisingblog.ece.uw.edu

Meanwhile, yet another university is encouraging their students to apply for a summer internship at BrainChip:

BrainChip– Internship Program 2024 - USC Viterbi | Career Services

BrainChip– Internship Opportunity! Apply Today! About BrainChip: BrainChip develops technology that brings commonsense to the processing of sensor data, allowing efficient use for AI inferencing enabling one to do more with less. Accurately. Elegantly. Meaningfully. They call this Essential AI...
viterbicareers.usc.edu

I guess it is just a question of time before USC is added to the BrainChip University AI Accelerator Program, although Nandan Nayampally is sadly no longer with our company…

IMO there is a good chance that Akida will be utilised in future versions of that UW prototype @cosors referred to.

AI headphones let wearer listen to a single person in a crowd, by looking at them just once

A University of Washington team has developed an artificial intelligence system that lets someone wearing headphones look at a person speaking for three to five seconds to “enroll” them. The...
www.washington.edu

“A University of Washington team has developed an artificial intelligence system that lets a user wearing headphones look at a person speaking for three to five seconds to “enroll” them. The system, called “Target Speech Hearing,” then cancels all other sounds in the environment and plays just the enrolled speaker’s voice in real time even as the listener moves around in noisy places and no longer faces the speaker.

The team presented its findings May 14 in Honolulu at the ACM CHI Conference on Human Factors in Computing Systems. The code for the proof-of-concept device is available for others to build on. The system is not commercially available.”

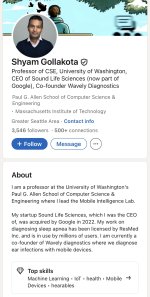

The paper shared by @cosors (https://homes.cs.washington.edu/~gshyam/Papers/tsh.pdf) indicates that the on-device processing in the end-to-end hardware system that UW professor Shyam Gollakota and his team built as a proof of concept is not based on neuromorphic technology. Still, two things are reason enough for me to speculate that these UW researchers could well be experimenting with Akida at some point to minimise their prototype’s power consumption and latency: the paper’s outlook on future work, and the fact that UW’s Paul G. Allen School of Computer Science & Engineering* has encouraged students to apply for the BrainChip Summer Internship Program for the second year in a row.

*(named after the late Paul Gardner Allen, who co-founded Microsoft in 1975 with his childhood and lifelong friend Bill Gates and donated millions of dollars to UW over the years)

The paper was co-authored by Shyam Gollakota (https://homes.cs.washington.edu/~gshyam) and three of his UW PhD students, as well as by Takuya Yoshioka, AssemblyAI’s Director of Research (ex-Microsoft).

AssemblyAI (www.AssemblyAI.com) sounds like an interesting company to keep an eye on.

FNU Sidharth, a Graduate Student Researcher from the University of Washington in Seattle, will be spending the summer as a Machine Learning Engineering Research Intern at BrainChip.

Bingo!

https://thestockexchange.com.au/threads/brn-discussion-ongoing.1/post-425543

One of BrainChip’s 2024 summer interns was FNU Sidharth, a Graduate Student Researcher from the University of Washington in Seattle (see the last of my posts above). According to his LinkedIn profile, he is still working for our company and will graduate with an M.Sc. in March 2025.

His research is focused on speech & audio processing, and this is what he says about himself: “My ultimate aspiration is to harness the knowledge and skills I gain in these domains to engineer cutting-edge technologies with transformative applications in the medical realm. By amalgamating my expertise, I aim to contribute to advancements that will revolutionise healthcare technology.”

As also mentioned in my previous post on FNU Sidharth, his advisor for his UW spring and summer projects (the latter largely overlapping with his time at BrainChip) was Shyam Gollakota, Professor of Computer Science & Engineering at the University of Washington, where he leads the Mobile Intelligence Lab, and a serial entrepreneur (one of his start-ups was acquired by Google in 2022).

Here is a podcast with Shyam Gollakota on “The Future of Intelligent Hearables” that is quite intriguing when watched in the above context (especially from the 6:15 mark onwards), and even more so once you find out that he and his fellow researchers are planning to set up a company in the field of intelligent hearables…

We should definitely keep an eye on them!