Is hand gesture technology new?

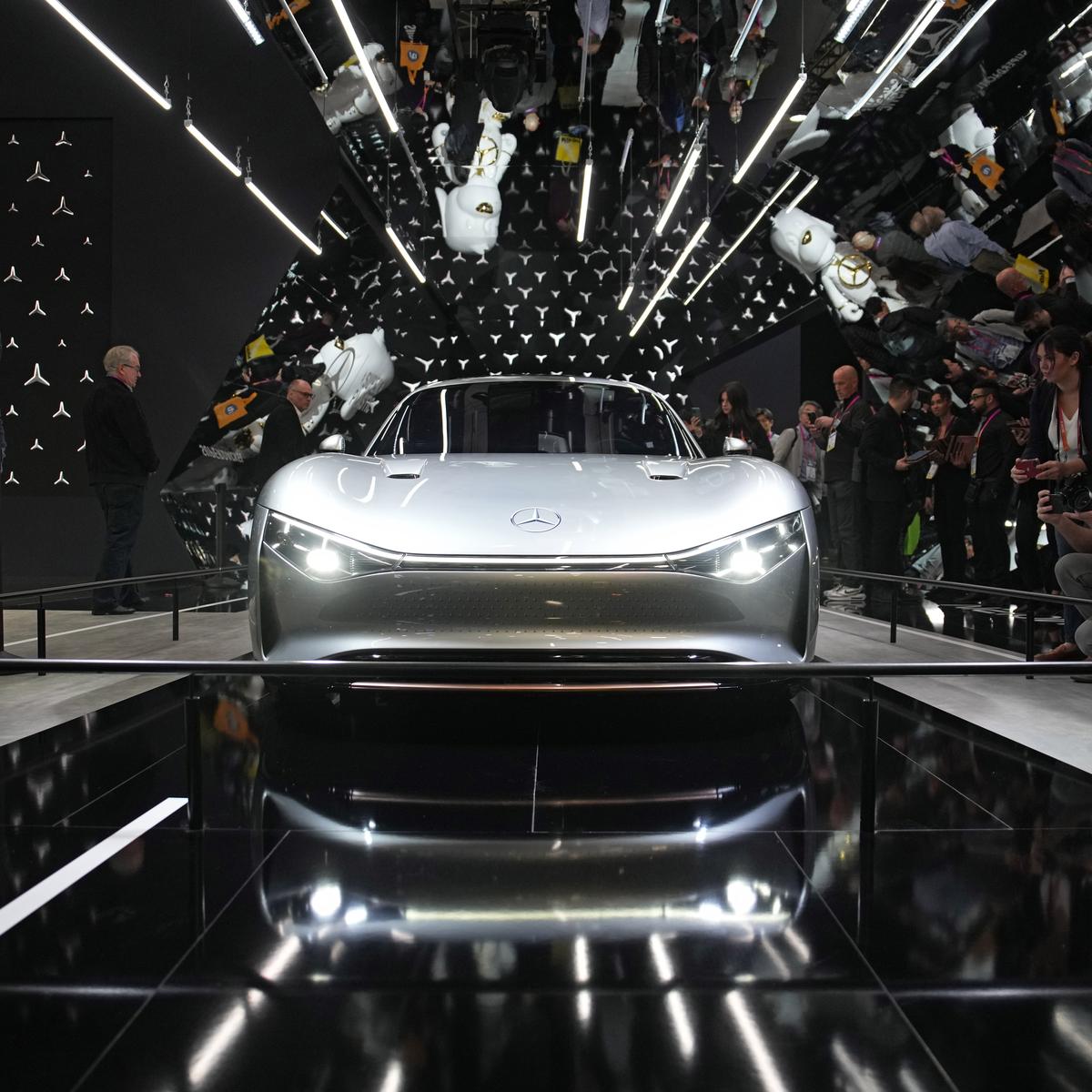

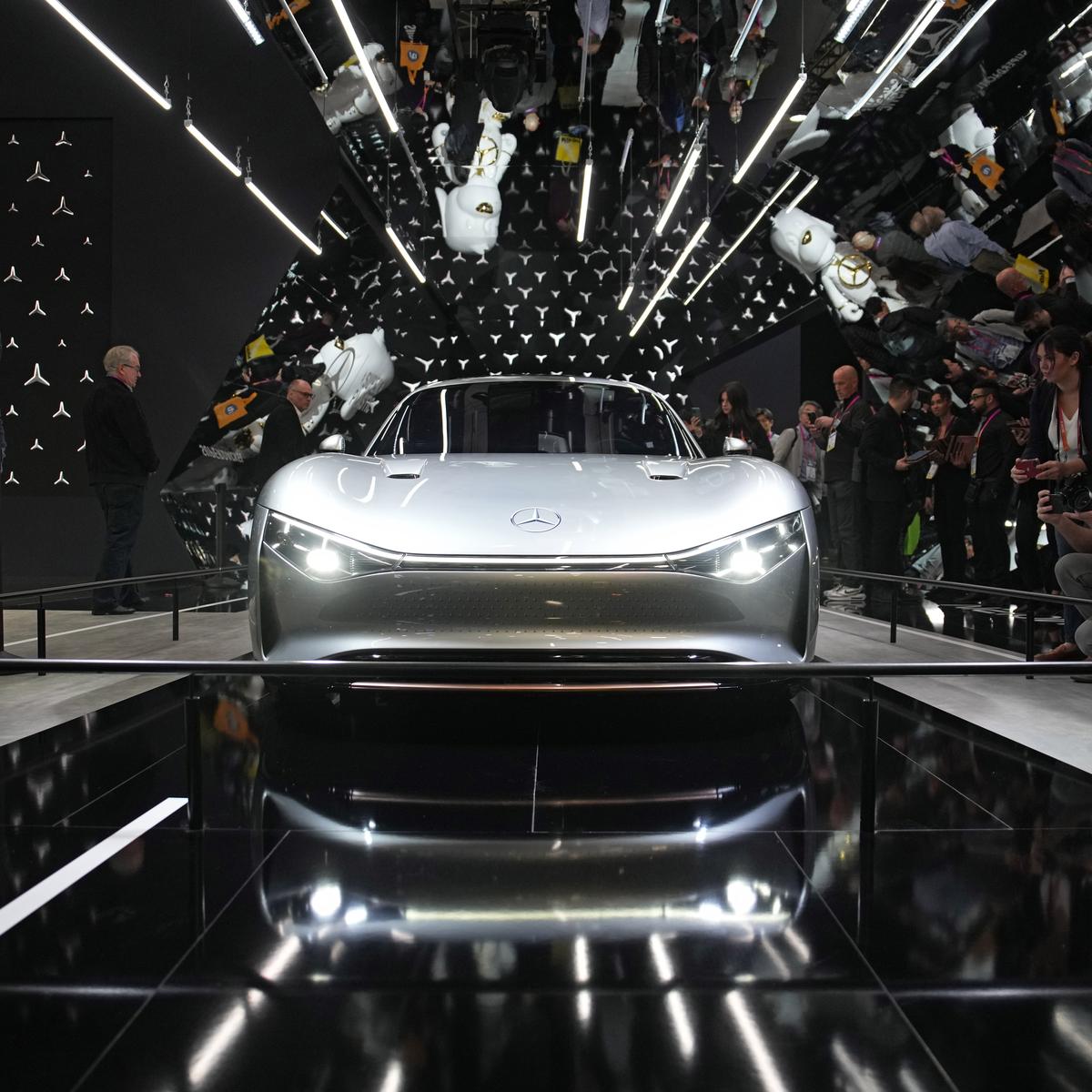

Mercedes-AMG EQS 53 4Matic Plus Tech Review: Future wrapped in luxury

March 25, 2023 11:38 am | Updated 07:44 pm IST

The Mercedes-AMG EQS 53 4Matic Plus embraces the future with enough tech features to satiate a hardcore technocrat

NABEEL AHMED

Performance

Extending from A-pillar to A-pillar, the MBUX Hyperscreen in the EQS is, according to Mercedes, the biggest infotainment screen ever mounted in a series-production car. The 56-inch screen is powered by 8 CPU cores, 24 GB of RAM and 46.4 GB per second of RAM memory bandwidth, supports 7 individual profiles, and offers a 2,432.11 cm² viewing area. Its "zero-layer" concept ensures that the most important functions are always on display.

The MBUX Hyperscreen in the EQS extends from A-Pillar to A-Pillar. | Photo Credit: Nabeel Ahmed

The display adapts uniformly to ambient lighting conditions and permanently shows the climate controls for the driver and front passenger. Haptic feedback is provided by eight actuators in the central display and four in the front passenger display. The MBUX also runs adaptive software that offers personalised suggestions for individual driver profiles, which can be created using the Mercedes me app on a smartphone.

Driver display and steering-mounted controls

The 12.3-inch driver display sits behind the steering wheel and shows information based on the selected driver profile. We found the display bright and crisp, and its beginner profile settings were especially useful when manoeuvring through narrow urban stretches.

Drivers can switch profiles based on the vehicle's performance setting, which includes Sport and Sport+, and change the overall cabin lighting and settings, including the artificial engine sound played through the speakers.

Steering mounted controls allow drivers to switch easily between driving modes. | Photo Credit: Nabeel Ahmed

The driver display also shows prompts for ADAS features, including active lane-keep assist and adaptive distance control, which maintains a gap to the traffic ahead. The driver display settings can be adjusted using the steering-mounted controls, which come with haptic feedback. The controls are easy to use and provide ample feedback, so the driver does not need to take their eyes off the road while switching between modes. The side mirrors also display a warning whenever a vehicle approaches to overtake.

The steering wheel also has paddle shifters and drive-mode controls.

Central display

The 17.7-inch central touchscreen sits flush with the dashboard and provides access to all the driving, comfort, and infotainment features. The display is bright and runs adaptive software, which is handy when scrolling through menus, changing the volume, and accessing infotainment features while driving.

This software is intuitive and responds well to user inputs based on past usage. We also found the hand gesture recognition feature helpful when opening the sunroof and switching on cabin lights on the go.

The central display also offers a host of luxury features. | Photo Credit: Nabeel Ahmed

The central display also offers a host of luxury features, including seat ventilation, seat massage and interior mood lighting controls. Seat ventilation settings are also available on the door panels for ease of access.

A welcome addition is the ability to download games to the screen. They can be played only when the vehicle is stationary and provide ample entertainment while it is charging.

Passenger display

The 12.3-inch passenger touchscreen, together with the features built into the central display, ensures that front passengers have plenty to keep them engaged.

Although it cannot switch between driving modes, the unit lets the passenger enter a destination and control the infotainment, seat ventilation and massage functions. The passenger can therefore adjust their own settings without disturbing the driver.

Another useful feature dims the passenger display when the vehicle detects the driver looking at it. The screen can also detect whether a front passenger is present and switches on only if there is one.

Rear seat tablet

As an AMG model, the Mercedes EQS focuses more on the driver than the passengers, but rear passengers are not left empty-handed: they get their own tablet, stowed in a dedicated slot in the armrest.

The rear seat of the AMG EQS gets a fully functional Android tablet. | Photo Credit: Nabeel Ahmed

The fully functional Android tablet offers controls for rear seat recline and ventilation and integrates with the MBUX system. It can also be used for content consumption and has all the functionality users expect from an Android tablet.

Quality-of-life features

Along with multiple screens, hand gestures, adaptive software and ADAS, the Mercedes EQS also comes with a built-in air purifier with a HEPA filter to remove airborne pollutants. During our review, the vehicle started the purifier and climate control when it detected the key nearby, so the cabin air was clean and comfortable on entry. It even kept AQI readings within reasonable limits when driven with the sunroof open.