I mentioned a few days ago that the government was running the change to superannuation tax rules up the flagpole, despite promises to the contrary.

The present line is that it will only affect 80,000 superannuants; however, that figure is for its first year of operation.

Just like stamp duty on real estate in NSW, the threshold will not be indexed to adjust for inflation, and so over time the number of people affected will greatly increase.

In the real world in which we all live, someone commencing work today at the age of 20 will have 40+ years before they enter retirement. If you are an oldie like myself, you can see what a fixed ceiling of $3 million means over 40 years by recalling what you paid for your first home about 45 years ago. In our case it was $38,000. It came on the market two or three years ago, and I noticed that the owners were then asking over $900,000.
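For anyone who wants to put rough numbers on that, here is a small Python sketch. The house figures are the ones quoted above, with 45 years taken as the holding period. The 3% a year inflation rate is purely my assumption for illustration, not a forecast, and the function names are just made up for this example.

```python
def implied_annual_growth(start_price, end_price, years):
    """Compound annual growth rate that turns start_price into end_price."""
    return (end_price / start_price) ** (1 / years) - 1


def value_in_todays_dollars(future_amount, annual_inflation, years):
    """Discount a future dollar amount back to today's purchasing power."""
    return future_amount / (1 + annual_inflation) ** years


# House figures from the post; 45 years is taken as the holding period.
house_growth = implied_annual_growth(38_000, 900_000, 45)

# ASSUMPTION: 3% a year inflation, chosen only for illustration.
real_cap = value_in_todays_dollars(3_000_000, 0.03, 40)

print(f"Implied house price growth: {house_growth:.1%} a year")
print(f"$3 million in 40 years buys what ${real_cap:,.0f} buys today")
```

On that assumed inflation rate, the buying power of an unindexed $3 million ceiling in 40 years comes out at well under half of what it is today. Plug in your own inflation guess and see what you get.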

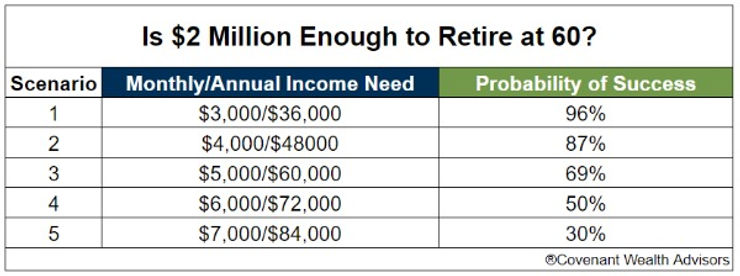

Some here will not have given much thought to their retirement, but the graph in this post, which uses Monte Carlo calculations, suggests that if you have $2 million in superannuation today and want an annual pension of $84,000, then on average returns you have only a 30% chance of your $2 million lasting until your average life expectancy.

There are lots of these tables out there on the web, but the reality is that the younger you retire, the more money you need to have tucked away, because while $3 million might seem like a lot now, in 40+ years it will be a bag of lollies.
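If you would rather run your own numbers than rely on somebody else's table, a Monte Carlo calculation of this general kind can be sketched in a few lines of Python. This is only a rough illustration and not the model behind any published graph or table. The 6% average return, 12% volatility, 3% inflation, 30 year horizon and the use of normally distributed returns are all my assumptions, so do not expect it to reproduce the 30% figure.

```python
import random


def chance_money_lasts(balance, annual_pension, years, mean_return,
                       volatility, inflation, trials=10_000, seed=1):
    """Fraction of simulated retirements in which the balance covers
    the pension in every one of `years` years."""
    rng = random.Random(seed)  # fixed seed so repeated runs agree
    successes = 0
    for _ in range(trials):
        funds = balance
        pension = annual_pension
        survived = True
        for _ in range(years):
            funds -= pension  # draw the pension at the start of the year
            if funds < 0:     # could not pay this year's pension in full
                survived = False
                break
            # ASSUMPTION: yearly returns are independent and normally distributed
            funds *= 1 + rng.gauss(mean_return, volatility)
            pension *= 1 + inflation  # keep the pension's buying power
        if survived:
            successes += 1
    return successes / trials


# ASSUMPTIONS: every input below except the $2 million and $84,000 is illustrative.
probability = chance_money_lasts(
    balance=2_000_000, annual_pension=84_000, years=30,
    mean_return=0.06, volatility=0.12, inflation=0.03,
)
print(f"Chance the money lasts 30 years: {probability:.0%}")
```

Small changes to the assumed return, volatility or inflation move the answer a lot, which is worth keeping in mind when reading any of these tables.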

Those retirees who retired last year would have sat down with their accountants or advisers and calculated what they needed to live a comfortable life in retirement based upon the known rules and government promises. Overnight those calculations became meaningless for 80,000 Australians.

Now, before you decide that these 80,000 are all fat cats or filthy rich, consider this: Australia has almost 3 million small business owners providing approximately 97% of all jobs in our economy. Amongst these three million business owners are:

"Medium-scale enterprises are about 56,835 in number (employing about 20 to 199 people),"

Then ask yourself: if you were one of these 56,835 medium-scale business owners, would you want the stress and responsibility this involves if you could not at least retire with 100% certainty that your superannuation would see you out and give you a lifestyle that made it all worthwhile?

On the above figures, to guarantee this and have a good buffer against inflation and tax law changes, you would want four or five million dollars, in my opinion.

Anyway, these changes will not be made until after the next election, so you have plenty of time to take advice, do your numbers and complain to your local member if you feel that this proposed change might not be particularly fair or reasonable for you or your loved ones.

My opinion only. DYOR.

FF

AKIDA BALLISTA