BRN Discussion Ongoing

- Thread starter TechGirl

SharesForBrekky

Regular

I know what's going on. There are secret bunkers under the old air base and now under the Great Park, where Brainchip can work in complete stealth mode. Haha, just being silly, but hey, who knows what could be lying underneath.

Yep. Famous for its park though.

The residential and commercial area has lots of highways around it. Typical L.A., California life, so I hear:

View attachment 30931

Easytiger

Regular

… done the maths!

Since management began giving the ASX the silent treatment on news feeds about 13 months ago, daily volume has dropped from a 200m peak to around 5m these days.

IMO the past 13 months of trading have been dominated by selling pressure.

The broader market does not have a BRN news feed but may screen ASX for Company announcements.

Because of management's ASX silent treatment, we have been missing out on new interest from the broader market that may have provided some balance against the selling pressure, which could have reduced our share price depreciation over that period. IMO

We were rich last year and looking forward to multiples more but have rapidly suffered a 75% fall from those highs in the silent period.

Is it any wonder some astute investors have concerns? Especially while targets continue being missed.

If Sean gets a $450k incentive bonus as shares at today's price, he will receive 300% more shares than he would have at last year's highs.
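For anyone wanting to check the 300% figure, here is a minimal sketch of the arithmetic. It only assumes the 75% fall quoted above and a fixed dollar bonus; the function name is made up for illustration and no actual share prices are used.

```python
def extra_shares_pct(price_fall_pct: float) -> float:
    """Percent more shares a fixed dollar amount buys after a price fall."""
    if not 0 <= price_fall_pct < 100:
        raise ValueError("price_fall_pct must be in the range [0, 100)")
    # Fraction of the old price that remains after the fall.
    remaining = 1 - price_fall_pct / 100
    # A fixed dollar amount buys 1 / remaining times as many shares.
    return (1 / remaining - 1) * 100


# A 75% fall leaves the price at a quarter of the high,
# so the same dollars buy four times as many shares.
print(extra_shares_pct(75))  # prints 300.0
```

The dollar amount drops out of the calculation, so the result is the same for $450k or any other fixed bonus.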

Getupthere

Regular

Posted from another site.

Learning

Learning to the Top 🕵♂️

Not sure if this has been mentioned.

Another patent by BrainChip recently published.

US20230026363A1 - Spiking neural network - Google Patents

Disclosed herein are system, method, and computer program product embodiments for an improved spiking neural network (SNN) configured to learn and perform unsupervised extraction of features from an input stream. An embodiment operates by receiving a set of spike bits corresponding to a set...

patents.google.com

Learning 🏖

Great post.

Howdy All,

This is showing as having been published 3 hours ago. I don't think it's been posted, but if so, I'll delete.

Neuromorphic vision sensors are coming to smartphones

By Dan O'Shea, Feb 28, 2023 03:45pm

Prophesee SA, Qualcomm Snapdragon, neuromorphic, image sensors

A new partnership teaming Prophesee and Qualcomm will optimize event-based neuromorphic vision sensors for use in mobile devices, resulting in better images from device cameras. (Prophesee).

Prophesee, a provider of event-based neuromorphic vision sensor technology, announced a partnership with Qualcomm Technologies at the massive Mobile World Congress event in Barcelona, Spain, this week, a collaboration that will see Prophesee’s Metavision sensors optimized for use with Qualcomm’s Snapdragon platform to bring neuromorphic-enabled image capture capabilities to mobile devices.

Event-based neuromorphic vision sensor technology could be a game changer for camera performance, with neuromorphic capabilities processing movement and moments closer to the way the human brain processes them–with less blurring and more clarity than a frame-based camera can manage. Specifically, the technology allows cameras to perform better while capturing fast movements and scenes in low lighting in their photos and videos. For the most part, the consumer marketplace is still waiting to get their hands on devices with these capabilities, but the new partnership means that wait is growing shorter.

Later this year, Prophesee plans to release a development kit to support the integration of the Metavision sensor technology for use with devices that contain next-generation Snapdragon platforms. After that, it will not be too much longer before consumers can experience the benefits of event-based neuromorphic vision sensor technology themselves.

Luca Verre, co-founder and CEO of Prophesee, told Fierce Electronics, “We expect phones with this feature/capability to be in the market by 2024. It will likely appear in ‘flagship’ models first.”

When the technology arrives, it is not expected to replace traditional frame-based sensors, but instead work in tandem with them, with much of the camera performance improvement coming through Prophesee’s event-based continuous and asynchronous pixel sensing approach which will help in the “de-blurring of images” and the highlighting of focal points that otherwise might become lost where low lighting intrudes on a captured moment.

That could make consumers much happier about the quality of the pictures they take on their mobile devices, although there is a good chance they may not even know they will have neuromorphic sensors to thank for the improvement, as they probably will not have to think about switching into a different photo capture mode to take advantage of neuromorphic sensing.

“It’s unlikely that smartphones would have a ‘neuromorphic mode,’ but instead would work seamlessly with the existing image capture capabilities - but, in theory, that could be something the OEM could consider,” Verre said. “Note that using an event based camera actually reduces the amount of data processed, capturing only things in a scene that move, which are often ‘invisible’ to traditional cameras, so it is largely an augmentation of traditional frame based cameras (and other sensors, such as lidar in a car), not a replacement, especially in consumer applications.”

These sensors already are used in other kinds of applications, including business and industrial use cases such as security cameras, surveillance, preventative maintenance, vibration monitoring, high speed counting, and others where event cameras can work “as a standalone machine vision option,” Verre said, adding, “There is vast potential in the idea of sensor fusion, combining event-based sensors with other types of sensors, like frame-based cameras.”

The Qualcomm partnership comes almost a year after Prophesee announced a partnership with Sony that revolved around enabling improved integration of event-based sensing technology into devices, and Verre said the migration of the technology to mobile phones likely will be smoother as a result of the earlier partnership. Working with Sony, a leading CMOS sensor provider for the mobile industry, helped make the sensors “more applicable for mobile (smaller size, lower power, etc.) with 3D stacking manufacturing techniques,” he said.

Prophesee also sees the technology as having potential in other mobile and wearable device applications, such as augmented reality headsets. Prophesee already is talking to OEMs about moving in that direction, and Verre said the company believes that “by enabling a paradigm shift in sensing modalities with this approach, there are countless applications that can benefit.”

"These sensors already are used in other kinds of applications, including business and industrial use cases such as security cameras, surveillance, preventative maintenance, vibration monitoring, high speed counting..."

These all sound familiar!

Very excited.

Fullmoonfever

Top 20

Given we have a minor in with ISL to AFRL, it would be great if there was some discussion / info on same at the AFRL booth at the upcoming Symposium.

afresearchlab.com

The premier event for global space professionals.

38th Space Symposium – 2023 – Air Force Research Laboratory

38TH SPACE SYMPOSIUM – 2023

Start Date: Apr 17, 2023 | End Date: Apr 20, 2023

Space Symposium attendees consistently represent all sectors of the space community from multiple spacefaring nations; space agencies; commercial space businesses and associated subcontractors; military, national security and intelligence organizations; cyber security organizations; federal and state government agencies and organizations; research and development facilities; think tanks; educational institutions; space entrepreneurs and private space exploration and commercial space travel providers; space commerce businesses engaged in adapting, manufacturing or selling space technologies for commercial use and media that inspire and educate the general public about space.

Bringing all these groups together in one place provides a unique opportunity to examine space issues from multiple perspectives, to promote dialog and to focus attention on critical space issues.

Check back for more details on the AFRL Booth and featured technologies which include:

- Hack-A-Sat

- Neuromorphic Camera

- Neuromorphic Intelligent Computing Systems

- NTS-3

- Oracle (formerly named CHPS)

- Rotating Detonating Rocket Engine

- SSPIDR

- Tactically Responsive Space Access

- WxSat

Frangipani

Top 20

Any chance at all that Syntiant would do an Intel, i.e. a former competitor morphing into a future partner and thus possibly another case of "If you can't beat them, join them"?

Interestingly, according to Apple Maps, Syntiant is just around the corner from the former El Toro Marine Air Station in Irvine, CA. They will apparently share the same booth with Brainchip (who are not actually listed as an exhibitor themselves) at the Embedded World 2023 (but who says Edge Impulse and Renesas will be Brainchip's only partners there?), and then there is also the connection between the companies through Chris Stevens, who was Global Vice President of Sales at Syntiant before joining Brainchip…

Boab

I wish I could paint like Vincent

They may not be listed as an actual exhibitor, but this is what you see when you open the BrainChip website.

All good Frangipani.

Thanks Esq.111, so it's the US listed, 100% owned subsidiary Brainchip Inc that is doing all the employing?

Makes sense. Good pick up.

See website: https://shop.brainchipinc.com

65 Enterprise, Aliso Viejo, CA

92656, United States

Finally... someone has done a bit of "digging" around. Been waiting.

65 Enterprise, Aliso Viejo, CA

92656, United States

This address has run adverts for jobs going back several years. It is a "VIRTUAL OFFICE" located inside a massive building with many smaller offices and services. BrainChip do not hold an office here; the main Brainchip offices are several km away... but...

nearby in the same building is Sony Virtual Entertainment, and next door is Microsoft. Again, these seem to be "virtual" offices. Cannot confirm status on these two.

As for Brainchip USA, well, it's registered in Delaware (tax haven status), apparently with no details publicly available on ultimate ownership.

Brainchip USA also holds the titles to many of the PATENTS belonging to Brainchip.

Now let's expand our thoughts here.

Job adverts via Brainchip USA, patents via Brainchip USA, and what else via Brainchip USA?? And it's a SUBSIDIARY.

Y.

AND just to prove my point... taken from @Learning's post above tonight.

Note the assignee & date.

Brainchip Inc (USA) Laguna Hills.

Y.

Techinvestor17

Regular

Just go on shortman.co.au, it's all there.

Evening Rskiff.,

No , unfortunately.

Below are numbers I started to gather on 19.8.2021.

Tizz a f..k fest, but they will get burnt, and badly.

cosors

👀

And then in a staff position, wow!

Geez! I would have thought having a new addition to the BOD of this high a calibre would warrant an ASX Announcement. Wonders will never cease...

View attachment 30901

Techinvestor17

Regular

No, the rebalance Ann will be this Friday.

Hi All. If I see correctly, the new ASX 200 rebalancing is in March 2023 (which is this month). Do we think we keep our slot?

Deadpool

Regular

I believe the leftie razor gang has got wind that a sizable proportion of the 42,000 BRN shareholders will be millionaires and billionaires in the near future and, just like all standover organizations, want their cut.

I mentioned a few days ago that the government was running the change to superannuation tax rules up the flag pole despite promises to the contrary.

The present line is that it will only affect 80,000 superannuates however this is in its first year of operation.

Just like stamp duty on real estate in NSW it will not be indexed to adjust for inflation and so over time this number will greatly increase.

In the real world in which we all live someone today commencing work at the age of 20 years will have about 40+ years before they enter retirement. The implications for a ceiling of $3 million over 40 years can be evidenced by if you are an oldie like myself recalling what you paid for your first home about 45 years ago. In our case it was $38,000. It came on the market two or three years ago now and I noticed that the owners were then asking over $900,000.
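To put a rough number on that house price example, here is a minimal sketch of the compound growth implied by the two figures. The 43-year holding period is an assumption (bought about 45 years ago, listed two or three years ago), and using house price growth as a stand-in for general inflation is a simplification made purely for illustration; the function names are hypothetical.

```python
def implied_annual_growth(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


def value_in_todays_dollars(future_amount: float, annual_rate: float, years: float) -> float:
    """Discount a future amount back to today at a constant annual rate."""
    return future_amount / (1 + annual_rate) ** years


# Figures from the post; the 43-year period is an assumption.
rate = implied_annual_growth(38_000, 900_000, 43)
print(f"Implied growth: {rate:.1%} per year")

# Assumption: the same rate is reused as a rough proxy for inflation,
# which will overstate or understate real-world price changes.
todays_value = value_in_todays_dollars(3_000_000, rate, 40)
print(f"$3m in 40 years is roughly ${todays_value:,.0f} in today's money")
```

Swapping in a lower rate, such as a long-run CPI figure, would give a less dramatic result, so treat the output as a feel for the compounding effect rather than a forecast.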

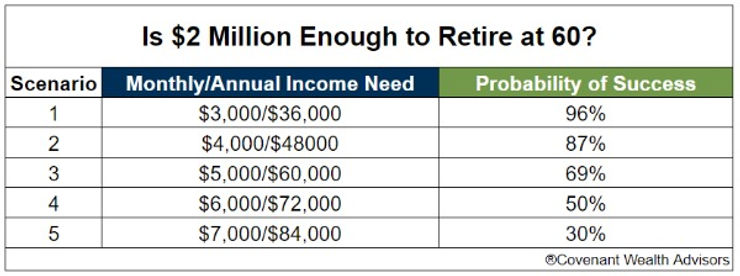

Some here will not have given much thought to their retirement but the following graph using Monte Carlo calculations suggests that having $2 million in superannuation today and wanting to have an annual pension of $84,000 based on average returns will give you only a 30% chance of your $2 million lasting until your average life expectancy:

There are lots of these tables out there on the web but the reality is the younger you retire the more money you need to have tucked away because while $3 million might seem like a lot now in 40 + years it will be a bag of lollies.
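For those curious how a Monte Carlo estimate like the one mentioned above is produced, here is a minimal sketch. It is not the model behind the graph: the normally distributed yearly returns, the mean and volatility figures, the 30-year horizon and the fixed (non-indexed) pension are all assumptions chosen for illustration, and the function name is hypothetical.

```python
import random


def survival_probability(balance: float, annual_pension: float, years: int,
                         mean_return: float, volatility: float,
                         trials: int = 10_000, seed: int = 0) -> float:
    """Estimate the chance a balance lasts `years` of fixed withdrawals."""
    rng = random.Random(seed)  # seeded so repeated runs give the same answer
    survived = 0
    for _ in range(trials):
        # Draw one random return per year; a loss cannot exceed 100%.
        returns = [max(rng.gauss(mean_return, volatility), -1.0)
                   for _ in range(years)]
        remaining = balance
        for yearly_return in returns:
            # Pay the pension at the start of the year, then apply the return.
            remaining = (remaining - annual_pension) * (1 + yearly_return)
            if remaining <= 0:
                break  # the money ran out in this simulated retirement
        else:
            survived += 1
    return survived / trials


# Hypothetical inputs: 5% average return, 10% volatility, 30 years.
chance = survival_probability(2_000_000, 84_000, 30, 0.05, 0.10)
print(f"Estimated chance the money lasts: {chance:.0%}")
```

The result is very sensitive to the assumed returns and to whether the pension is indexed to inflation, which is why published tables for the same starting balance can differ so much.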

Those retirees who retired last year would have sat down with their accountants or advisers and calculated what they needed to live a comfortable life in retirement based upon the known rules and government promises. Overnight those calculations became meaningless for 80,000 Australians.

Now before you decide that these 80,000 are all fat cats or filthy rich, consider this: Australia has almost 3 million small business owners providing approximately 97% of all jobs in our economy. Amongst these three million business owners are:

"Medium-scale enterprises are about 56,835 in number (employing about 20 to 199 people),"

Then ask yourself if you were one of these 56,835 medium scale business owners would you want the stress and responsibility this involves if you could not at least retire with a 100% certainty that your superannuation would see you out and give you a life style that made it all worthwhile.

On the above figures to guarantee this and have a good buffer against inflation and tax law changes you would want four or five million dollars in my opinion.

Anyway these changes will not be made until after the next election so you have plenty of time to take advice, do your numbers and complain to your local member if you feel that this proposed change might not be particularly fair or reasonable for you or your loved ones.

My opinion only DYOR

FF

AKIDA BALLISTA

Also believe that the home safe market is going to have exponential growth over the next couple of years.

You'd have to expect revenue from mobile phone sales by 2024. AKIDA is surely part of this.

SharesForBrekky

Regular

An article giving an insight into where Qualcomm believe the future is heading:

www.edgeir.com

"This is a significant milestone for Qualcomm AI Research as it proves that AI models can be run efficiently on edge devices without any reliance on the cloud or an internet connection."

"Our vision of the connected, intelligent edge is happening before our eyes as large AI cloud models begin gravitating toward running on edge devices, faster and faster,” adds Qualcomm. “What was considered impossible only a few years ago is now possible."

Whether Brainchip is involved here or not, great to see a big boy like Qualcomm publicly stating the future of edge devices is looking bright.

Qualcomm Technologies demonstrates generative AI on Android phone | Edge Industry Review

This is a significant milestone as it proves that AI models can be run efficiently on edge devices without any reliance on the cloud or an internet connection.

Fact Finder

Top 20

Just on patents.

Not sure if this has been mentioned.

Another patent by BrainChip recently published.

View attachment 30936

US20230026363A1 - Spiking neural network - Google Patents

Disclosed herein are system, method, and computer program product embodiments for an improved spiking neural network (SNN) configured to learn and perform unsupervised extraction of features from an input stream. An embodiment operates by receiving a set of spike bits corresponding to a set...

patents.google.com

Learning 🏖

I don’t think anyone mentioned that the Annual Report disclosed that Brainchip has 14 granted patents with THIRTY (30) now awaiting approval/grant.

Once they start to tick over, we should be getting news from Brainchip on at least a weekly basis for quite some time.

My opinion only DYOR

FF

AKIDA BALLISTA
