SiFive Is Leading The Way For Innovation On RISC-V

Karl Freund

Founder and Principal Analyst, Cambrian-AI Research LLC

Aug 8, 2022, 01:04pm EDT

The company appears well positioned to challenge CPU incumbents with high-performance RISC-V CPUs and vector extensions to the open ISA.

The RISC-V CPU Instruction Set Architecture (ISA) is emerging as a serious challenger to current CPUs based on proprietary architectures, creating new opportunities for chip designers and investors alike. While RISC-V first gained traction in the low-end embedded market, where the open ISA model afforded more cost-effective designs, RISC-V is now getting more wind in its sails due to performance and power efficiency, especially with vector enhancements. Behind all the RISC-V buzz, SiFive is the company that makes many of the innovations of the open-source CPU architecture available and so appealing.

In addition to open-source and computing efficiency, RISC-V now offers a well-designed, highly efficient vector processing extension that can significantly accelerate applications in which large data sets are manipulated in parallel. We have published a research paper that dives more deeply into the advantages vector extensions offer. This note explores the company’s role and direction.

RISC-V Benefits and SiFive’s Role

Silicon Valley startup SiFive has assumed the role of industry leadership and commercial IP innovation for the RISC-V movement, providing tested Intellectual Property (IP) and support for chip developers who incorporate RISC-V into their products.

RISC-V promises an alternative to proprietary processor cores in a user-friendly licensing and development environment. The raw performance of the latest SiFive RISC-V implementations is rapidly closing the gap with those proprietary cores, while using less power and die area and avoiding lock-in to a closed architecture. SiFive is further enhancing its portfolio with vector processing extensions that clearly differentiate the ISA from any other architecture.

SiFive is essentially the most visible and accomplished commercial steward of RISC-V, providing validated IP and support as well as open and proprietary enhancements to the RISC-V development community. With this open-standard approach and dependable IP, SiFive has garnered over 300 design wins with over 100 firms, including 8 of the top 10 semiconductor companies. With the addition of vector processing, we expect this trend to accelerate.

SiFive Strategy and Product Portfolio

In September 2020, SiFive announced it had hired Patrick Little as its new President, CEO, and Chairman. Coming from Qualcomm, where he led the company’s successful foray into the automotive sector, Mr. Little has sharpened the company’s focus on developing and licensing IP, selling SiFive’s OpenFive SoC design business to AlphaWave for $210 million. The company subsequently raised $175 million in a Series F funding round at a $2.5 billion post-money valuation. The latest round, led by global investment firm Coatue Management LLC, brings SiFive's total venture funding to over $350 million. Existing investors Intel Capital, Sutter Hill, and some others joined this latest round.

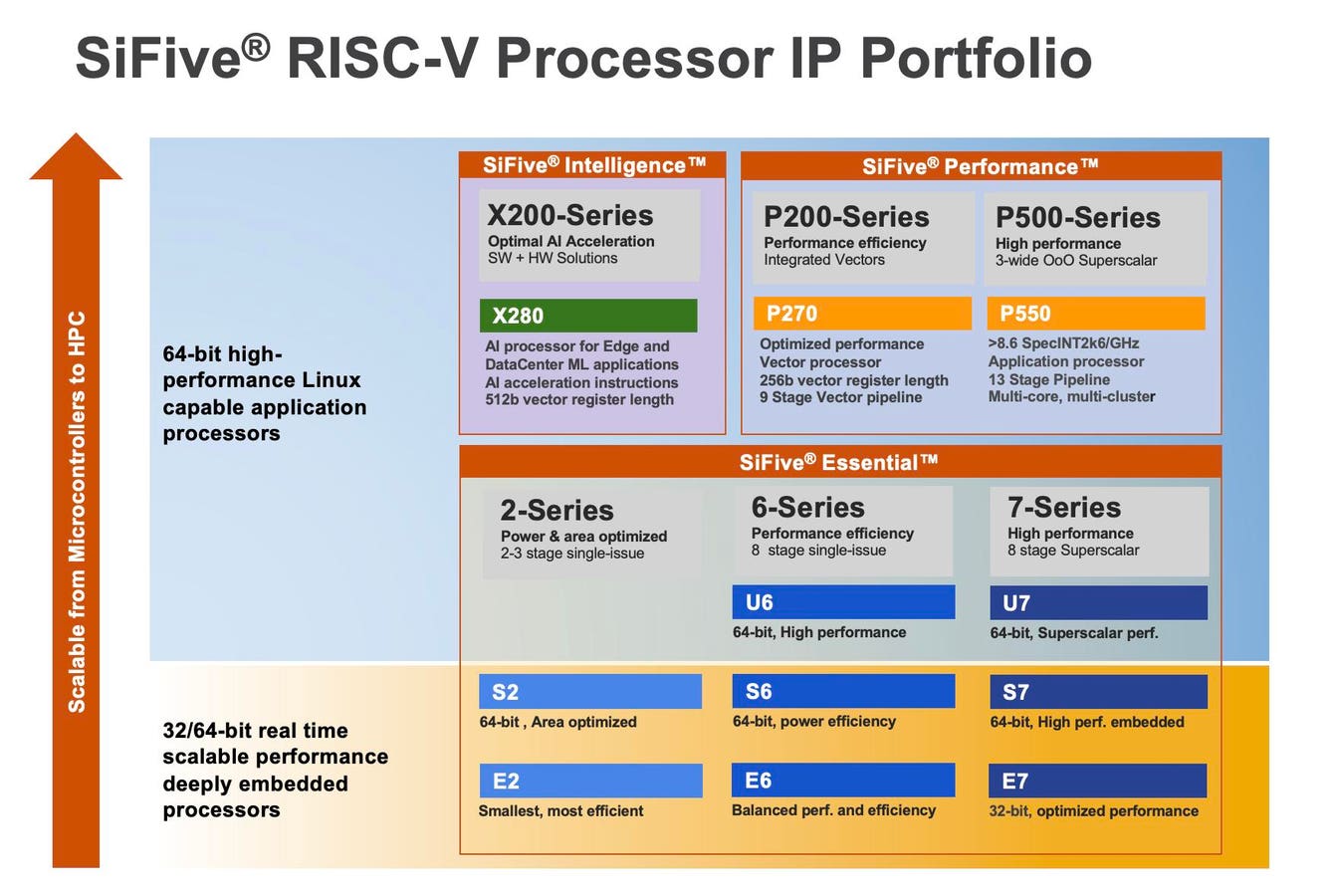

SiFive already has a broad portfolio of RISC-V processors.

SiFive

In today’s heterogeneous world of Domain-Specific Processors, parallel processing of large data sets is a critical adjunct to scalar processing. While accelerators such as GPUs and ASICs provide some incremental performance, they come at significant cost and generally require connectivity to CPUs, along with the overhead of transferring data between the CPU and the accelerator. Each accelerator also requires its own distinct programming model. Now with RISC-V, general vector processing in the CPU cores offers an alternative approach.

Vector processing, in which a single instruction operates on many data elements at once, has been a foundation of high-performance computing since the Cray-1 supercomputer in 1975. The RISC-V Vector extension (RVV) enables RISC-V cores to process data arrays alongside traditional scalar operations, parallelizing the computation of a single instruction stream over large data sets. SiFive helped establish RVV as a part of the RISC-V standard and has now extended the concept in two dimensions.
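As a rough illustration of the vector-length-agnostic style that RVV encourages, the sketch below emulates a strip-mined loop in plain Python. The names `VLMAX`, `set_vl`, and `vector_saxpy` are hypothetical, and the 16-element limit is an assumption chosen for illustration (for example, 512-bit registers holding 32-bit values), not a figure taken from SiFive. Real code would use RVV instructions or compiler intrinsics instead.

```python
from typing import List, Tuple

VLMAX = 16  # assumed maximum number of elements one vector operation can handle


def set_vl(remaining: int, vlmax: int = VLMAX) -> int:
    """Play the role of RVV's vsetvli: pick how many elements to process next."""
    return min(remaining, vlmax)


def scalar_saxpy(a: float, x: List[float], y: List[float]) -> List[float]:
    """Reference version: one element per step."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def vector_saxpy(a: float, x: List[float], y: List[float]) -> Tuple[List[float], int]:
    """Strip-mined version: each loop iteration stands in for one vector operation.

    Returns the result and the number of vector steps that were needed.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    out: List[float] = []
    steps = 0
    i = 0
    n = len(x)
    while i < n:
        vl = set_vl(n - i)  # the final chunk may be shorter than VLMAX
        out.extend(a * xi + yi for xi, yi in zip(x[i:i + vl], y[i:i + vl]))
        i += vl
        steps += 1
    return out, steps


if __name__ == "__main__":
    xs = [float(v) for v in range(40)]
    ys = [1.0] * 40
    result, steps = vector_saxpy(2.0, xs, ys)
    print(result == scalar_saxpy(2.0, xs, ys), steps)
```

The same loop works unchanged for any value of `VLMAX`, which is the point of the approach: software does not need to be rewritten when the hardware vector length changes.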

Figure 2: The SiFive extensions to the RISC-V vector capabilities can dramatically increase performance and efficiency.

SiFive

The SiFive Intelligence Extensions add new operations, such as INT8 matrix multiplication and BF16 conversion and compute operations, and enable vector instructions to operate on a broad range of AI/ML data types, including BFLOAT16. The SiFive Intelligence Extensions also add support for TensorFlow Lite for Machine Learning models, reducing the cost of porting AI models to SiFive-based designs.
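To make these data types concrete, here is a minimal Python sketch of the two kinds of operation named above: an INT8 matrix multiply that accumulates in wider integers, and a float32-to-BF16 conversion that keeps the upper 16 bits with round-to-nearest-even. The function names are hypothetical and the code does not reproduce the semantics of SiFive's actual instructions; it only shows the arithmetic such instructions are typically built around. NaN handling is deliberately omitted.

```python
import struct
from typing import List


def int8_matmul(a: List[List[int]], b: List[List[int]]) -> List[List[int]]:
    """Multiply two INT8 matrices, accumulating each dot product in a wide integer."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    for row in a + b:
        for value in row:
            if not -128 <= value <= 127:
                raise ValueError("value outside the INT8 range")
    cols = len(b[0])
    return [
        [sum(a_row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for a_row in a
    ]


def f32_to_bf16_bits(x: float) -> int:
    """Convert a float32 value to its 16-bit BF16 pattern (round to nearest even)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_f32(b: int) -> float:
    """Expand a BF16 bit pattern back to float32 by zero-filling the low 16 bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


if __name__ == "__main__":
    print(int8_matmul([[1, -2], [3, 4]], [[5, 6], [7, -8]]))
    print(hex(f32_to_bf16_bits(3.14159)), bf16_bits_to_f32(f32_to_bf16_bits(3.14159)))
```

BF16 keeps float32's 8-bit exponent and trims the mantissa, so the conversion trades precision for range while halving storage and bandwidth.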

VCIX represents a strategic opportunity for SiFive

In a world of increasing heterogeneity, there is a large opportunity to help SoC and System-on-Package (SoP) designers build tightly integrated solutions. The SiFive Vector Coprocessor Interface Extension (VCIX) is a direct interface between the X280 and a custom accelerator, enabling parallel instructions to be executed on the accelerator directly from the scalar pipeline. The custom instructions are executed from the standard software flow, utilizing the vector pipeline, and can access the full vector register set.

The SiFive Processor Portfolio

The SiFive product portfolio is structured into three clearly differentiated product lines: the 32/64-bit Essential products (2-, 6-, and 7-Series) for embedded control and Linux applications, the SiFive Performance Series (the P200 and P500/P600 families) for high efficiency and higher performance, and the SiFive Intelligence Series (the X200 family) for parallelizable workloads such as Machine Learning at the edge and in data centers.

To capitalize on its advantage in vector processing, SiFive has built its vector capabilities into both the Performance P270 and the Intelligence X280 processors.

The portfolio includes the Essential, Performance, and Intelligence processors.

SiFive

Early Adopters of the SiFive X280

The SiFive X280 has already been adopted by several companies of note, including a Tier 1 semiconductor company and a US Federal Agency for a strategic initiative in the aerospace and defense sector. Another customer has selected the X280 for its mobile device and data center AI products. Similarly, a US company delivering self-driving platforms has selected the X280 for its next-generation SoC. Of these opportunities, the last two could generate significant volumes, while the first could open more doors in the government sector.

On the startup front, we have already seen a number of SoC developers publicly announce their adoption of SiFive including Tenstorrent and Kinara (formerly known as DeepVision). Many are developing SoCs for AI acceleration, leveraging the vector processing of the X280 and complementing that with custom AI blocks. Tenstorrent tells us they are getting great support and that the cores are rock solid.

SiFive Development Tool Suite

For AI applications, SiFive supports an out-of-the-box software and processor hardware solution, with TensorFlow Lite running under Linux, to run NN models in the Object Detection, Image Classification, Segmentation, Text, and Speech domains. Existing models can be run with little porting effort, using a broad range of optimized NN operators in both 32-bit float and quantized 8-bit precisions.
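For readers unfamiliar with quantized 8-bit precision, the sketch below shows the affine mapping used by TensorFlow Lite's 8-bit quantization scheme, in which a real value is approximated as `scale * (q - zero_point)`. The function names and the example `scale` and `zero_point` values are illustrative assumptions; in practice the converter derives these parameters per tensor, and nothing here is specific to SiFive's software stack.

```python
def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to a signed 8-bit integer, saturating at the INT8 limits."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate real value represented by an 8-bit integer."""
    return (q - zero_point) * scale


if __name__ == "__main__":
    scale, zero_point = 0.5, 0  # illustrative parameters only
    for value in (0.3, 1.0, 1000.0):
        q = quantize(value, scale, zero_point)
        print(value, q, dequantize(q, scale, zero_point))
```

Values inside the representable range come back within half a `scale` step of the original, while values outside it saturate, which is why the choice of `scale` matters for model accuracy.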

Applications that can Benefit from Vector Processing

We believe that parallel processing is transitioning from the tool of a few to the norm for many applications, especially as AI and Machine Learning become pervasive. As Moore’s Law provides ever-diminishing returns, application developers still require more performance, and vector processing offers a path to both higher performance and better power efficiency, especially with RISC-V. We see opportunities for RISC-V vector processing in multiple application domains, including smart homes, telco, mobile devices, autonomous vehicles, industrial automation, robotic control, and health care. The simplicity and elegance of RVV and the performance gains are powerful selling points.

Figure 5: The X280 processor supports a wide range of use cases.

SiFive

Conclusions

We are impressed by the progress that RISC-V and SiFive have made in the last few years. The new product line positioning makes a ton of sense, the processors are beefier, the software stack is getting much better, and the vector extensions are impressive, both the open-source RVV and the AI extensions the company has included in the Intelligence Series X280. The CPUs are relatively high performance, with excellent scalability and power efficiency due to the simplicity that stems from the efficient RISC-V ISA and clever extensions. SiFive has also recently disclosed its intention to release an even higher performance P600 Series class Out-of-Order core with RISC-V vector compute in the near future.

Finally, the commitment to and leverage of the open-source community is perhaps the most important value RISC-V and SiFive offer as an alternative to Arm, especially for designers looking to build SoC solutions for Domain-Specific Architectures.

Disclosures: This article expresses the opinions of the author, and is not to be taken as advice to purchase from nor invest in the companies mentioned. My firm, Cambrian-AI Research, is fortunate to have many semiconductor firms as our clients, including NVIDIA, Intel, IBM, Qualcomm, Esperanto, Graphcore, SiMa.ai, Synopsys, Cerebras Systems, Tenstorrent and Ventana Microsystems. We have no investment positions in any of the companies mentioned in this article. For more information, please visit our website at https://cambrian-AI.com.

