BRN Discussion Ongoing

- Thread starter TechGirl

- Start date

Frank Zappa

Regular

God forgives you

Today, 3 years after first buying into BRN, and a year since the purchase of my third tranche, I took the plunge and doubled my holding, while averaging down from 0.74 to 0.53

And on the very same day, I now see that FF has returned with a killer post. I'll take that as a sign
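The averaging-down figures above can be sanity-checked with a short sketch. It assumes "doubled my holding" means buying exactly as many shares again as were already held, and it ignores brokerage fees; the function name is hypothetical.

```python
def implied_purchase_price(old_avg: float, new_avg: float, growth: float = 2.0) -> float:
    """Price paid for the new parcel, given the average cost before and after.

    growth is the factor by which the share count grew (2.0 means doubled).
    Assumes no fees; all names here are illustrative.
    """
    if growth <= 1.0:
        raise ValueError("growth must be greater than 1 (some shares were bought)")
    # Cost balance per original share:
    # old_avg * 1 + price * (growth - 1) = new_avg * growth
    return (new_avg * growth - old_avg) / (growth - 1.0)


price = implied_purchase_price(old_avg=0.74, new_avg=0.53)
print(f"Implied purchase price: {price:.2f}")  # prints 0.32
```

Under those assumptions, the new parcel would have been bought at about 0.32 per share.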

Easytiger

Regular

Love ya work

Today a mini report was released by Cambrian Ai covering the release of AKIDA Second Generation. The significance of this report cannot be overstated. I had held the opinion prior to reading this report that AKIDA Third Generation would be the IP that allowed Brainchip and its shareholders to capitalise on the ChatGPT-obsessed technology world but I was clearly wrong.

Thank you Cambrian Ai for disclosing just what makes AKIDA Second Generation a potential love child of CHATGpt or more generally GenAi.

To explain why I hold the opinion that today's reveal by Cambrian Ai is of such significance I have set out here a series of FACTS which I believe justify my opinion.

From CNN —

The crushing demand for AI has also revealed the limits of the global supply chain for powerful chips used to develop and field AI models.

The continuing chip crunch has affected businesses large and small, including some of the AI industry’s leading platforms and may not meaningfully improve for at least a year or more, according to industry analysts.

The latest sign of a potentially extended shortage in AI chips came in Microsoft’s annual report recently. The report identifies, for the first time, the availability of graphics processing units (GPUs) as a possible risk factor for investors.

GPUs are a critical type of hardware that helps run the countless calculations involved in training and deploying artificial intelligence algorithms.

“We continue to identify and evaluate opportunities to expand our datacenter locations and increase our server capacity to meet the evolving needs of our customers, particularly given the growing demand for AI services,” Microsoft wrote. “Our datacenters depend on the availability of permitted and buildable land, predictable energy, networking supplies, and servers, including graphics processing units (‘GPUs’) and other components.”

Microsoft’s nod to GPUs highlights how access to computing power serves as a critical bottleneck for AI. The issue directly affects companies that are building AI tools and products, and indirectly affects businesses and end-users who hope to apply the technology for their own purposes.

OpenAI CEO Sam Altman, testifying before the US Senate in May, suggested that the company’s chatbot tool was struggling to keep up with the number of requests users were throwing at it.

“We’re so short on GPUs, the less people that use the tool, the better,” Altman said. An OpenAI spokesperson later told CNN the company is committed to ensuring enough capacity for users.

The problem may sound reminiscent of the pandemic-era shortages in popular consumer electronics that saw gaming enthusiasts paying substantially inflated prices for game consoles and PC graphics cards. At the time, manufacturing delays, a lack of labor, disruptions to global shipping and persistent competing demand from cryptocurrency miners contributed to the scarce supply of GPUs, spurring a cottage industry of deal-tracking tech to help ordinary consumers find what they needed.

Cambrian Ai's mini paper on Brainchip and Second Gen AKIDA can be found via Cambrian Ai's Homepage at: https://cambrian-ai.com/

On their Homepage they state that they work with the following companies: Cerebras, Esperanto Technologies, Graphcore, IBM, Intel, NVIDIA, Qualcomm, Tenstorrent, SiFive, Simple Machines, Synopsys, Cadence and others.

In their mini paper they say many nice things but the significant parts in my opinion are found in the concluding paragraph which I have broken up into three points:

1. The second-generation Akida processor IP is available now from BrainChip for inclusion in any SoC and comes complete with a software stack tuned for this unique architecture.

2. We encourage companies to investigate this technology, especially those implementing time series or sequential data applications.

3. Given that GenAI and LLMs generally involve sequence prediction, and advances made for pre-trained language models for event-based architectures with SpikeGPT, the compactness and capabilities of BrainChip’s TENNs and the availability of Vision Transformer …second-generation Akida could facilitate more GenAI capabilities at the Edge.

In Summary:

There is a massive shortage of GPU chips to run GenAi and this shortage is going to extend into the next year and a half at least.

Cambrian Ai has told the World that they should be looking at AKIDA Second Generation to facilitate GenAi capabilities at the Edge.

If Cambrian Ai only pass on their opinion to their existing customer base this is still a very large portion of the technology market where AKIDA Second Generation could profitably be adopted.

As we all should know, AKIDA technology reduces bandwidth by processing data collected at the EDGE into relevant metadata and in so doing reduces processing demand in the Cloud, be it public or private.

Peter van der Made is on record stating that AKIDA technology at the EDGE doing its thing can reduce the use of power in the Cloud by up to 97%.

It just makes sense: if you cannot get enough GPUs to handle your GenAi workloads in the Cloud then one solution is to reduce the workload.

Cambrian is only stating the obvious when it asks companies to investigate what AKIDA Second Generation can do for them to facilitate their GenAi capabilities at the EDGE.

In my opinion there is no reason to doubt that Brainchip is finally on the cusp of publicly realising what we all have known for what seems like a lifetime. An EDGE technology revolution.

Validation of AKIDA technology Science Fiction has been coming thick and fast from diverse sources but of late the most impressive was from TATA researchers who found that AKIDA technology was 35 times more power efficient and 3.4 times faster than the Nvidia GPU that they were also trialling. I doubt that such findings by a company with the global presence of TATA will have been missed by those that matter in the technology space.

However this is my opinion only so be sure to DYOR.

Regards

Fact Finder

Humble Genius

Regular

I still don't get why these guys don't leverage Brainchip's name in their LinkedIn posts - I'm sure that their following would increase exponentially if they did!

Taproot

Regular

Hi Jesse,

I know @Diogenese is probably exhausted talking about Valeo but maybe he can explain again why he believes it is us?!

My reason is, we have a joint development agreement with them, ASX announced back in June 2020. There is NOBODY right now, or especially back then, that has commercially available neuromorphic IP. Can somebody point me to another company that had commercial neuromorphic IP available back in 2020??

Nanden has recently said that they (Brainchip) have even worked on a prototype with Valeo, now what other prototype could it be?

It’s been 3 years since that announcement with Brainchip and Valeo, and by the time 2024 rolls around it will be almost 4 years, which is a standard time to market for the automotive industry.

We’ve got the confirmation from a 27 year veteran currently working at Valeo that they achieved Scala 3 through neuromorphic and spiking neural networks, they appear on our presentations as early adopters, it is us I bet my life on it (my opinion ASIC)

There may be a standalone announcement but, as @Diogenese has said, we already have an ASX announcement about the joint development and its terms, as Brainchip will be paid when undisclosed milestones are met.

So when Sean Hehir says financials, I believe this will be one of them in the not too distant future. I’d say by March next year we will have had a stand-alone announcement about revenue owing to Brainchip, as it will be substantial. If there’s, let’s say, $2 billion Aus, can Brainchip demand a 5% royalty? Well of course they can, but let’s just go with 2%. Do the maths guys: that will be recurring for many years and growing every year as more automotive companies adopt Scala3.
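The royalty maths in the paragraph above can be sketched as follows. The A$2 billion figure and the 2% and 5% rates are the poster's hypotheticals, not disclosed contract terms; the function name is illustrative, and the royalty is assumed to apply as a flat rate to the full A$2 billion.

```python
def royalty(revenue_aud: float, rate: float) -> float:
    """Royalty owed on a revenue base at a flat rate (illustrative only)."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be a fraction between 0 and 1")
    return revenue_aud * rate


revenue = 2_000_000_000  # hypothetical A$2 billion base taken from the post
for rate in (0.02, 0.05):
    amount_m = royalty(revenue, rate) / 1e6
    print(f"{rate:.0%} of A${revenue / 1e9:.0f}bn = A${amount_m:.0f}m")
```

On those assumptions, 2% works out to about A$40 million and 5% to about A$100 million.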

Also by years end I am expecting a launch customer and another 1-2 customers to sign, maybe I am expecting too much but that’s what I am thinking.

Also, as you all would know, just because there isn’t an ASX announcement right now does not mean that companies aren’t working on designing and implementing Akida 1 or 2 as we speak.

It is very simple, you only have to read the announcements on all our partnerships in the last year and a half or so, they don’t say we will evaluate Brainchip, it says that Brainchip is basically the key differentiator and revolutionary and will start designing right away.

So, in my opinion, many companies as we speak are working on getting their prototypes working and getting ready to get to market for themselves and their customers.

Of course my opinion but this makes sense based on the research I have done!!

The timeline for Scala 3 just fits beautifully. It's going to be a total game changer for BrainChip !

BrainChip Holdings (ASX:BRN) Scheduled Market Update Presentation, March 2020

27 Mar 2020 - BrainChip Holdings Limited (ASX:BRN) CEO Louis DiNardo, Founder and CTO Peter van der Made, and Founder and CDO Anil Mankar provide an update on the company.

COMPANY PRESENTATIONS, March 27, 2020 04:00 PM

"Tier one automobile manufacturers, we've got Detroit, we can't name names, but we've got Detroit where we are close to a proof of concept agreement. That is the first step. We'll get a little bit of money but more importantly it's validation that tier one automobile manufacturers are interested in developing a solution which includes an Akida device in a module or some part of your infrastructure in the automobile. Automotive module suppliers, probably the most target rich environment for us. Most automobile manufacturers don't develop their own modules. They push that down to tier one module suppliers. We have a proof of concept, it's been frustrating, I've talked about this before, but it's been frustrating. It's a large European tier one module supplier. Contract's taken four months, almost five months now. But it's moving forward.

In the module arena, you could think about LiDAR, you could think about ultrasound, you could think about radar, you could think about standard pixel based cameras. In our case what we are finding is a sweet spot in LiDAR environment. So that's moving forward very nicely. Unfortunately, when you are dealing with large multinational conglomerates the legal issues sometimes take longer than you would like. But those are being resolved quite effectively now."

From the March 2020 update, looks like they had been working on a contract with a large European tier one module supplier since November 2019.

3 months after the March 2020 presentation, they sign a joint development agreement with Valeo.

Who just happen to be the world leader in Lidar.

"In our case what we are finding is a sweet spot in LiDAR environment." Louis DiNardo

Getupthere

Regular

BrainChip Sees Gold In Sequential Data Analysis At The Edge — Forbes

BrainChip has singled out such data processing needs as a key opportunity to apply its Akida technology, which specializes in Event-Based Processing and Spiking Neural...

Cartagena

Regular

Yep it's coming

I totally agree - it's clearly coming. So many things lining up, there's no way this isn't going to take off. It's just the waiting game now. If other people are stupid enough to sell their shares then that's their problem . . .

SharesForBrekky

Regular

I'm thinking 01R stands for #1 Revolutionary

Yep it's coming

wilzy123

Founding Member

BrainChip Sees Gold In Sequential Data Analysis At The Edge — Forbes

BrainChip has singled out such data processing needs as a key opportunity to apply its Akida technology, which specializes in Event-Based Processing and Spiking Neural...

apple.news

LMAO.... doubling down on the more balanced posts I see. You make it too obvious champ.

Cartagena

Regular

Yes it's a sign and we hope we land a juicy contract very soon that will extinguish the shorters once and for all.

I'm thinking 01R stands for #1 Revolutionary

1 = 1st September # big contract

R car with Renesas

8 = 8th month = August

R = Rocket / NASA

R = revenue to be announced (hopeful).

FJ-215

Regular

Hi @Mccabe84,

If the 2 gen Akida IP is available now shouldn’t there have been an ASX announcement?

There was!

BrainChip Introduces Second-Generation Akida Platform

OK, it's not in the title but it does state that this is the official launch of Gen 2 (March 6th, 2023)

And it does state that...

"General availability will follow in Q3’ 2023. A formal platform launch press release will take place on 7 March at 1:00am AEDT, 6 March at 6:00am US PST."

Also if you go to the BRN website and toggle over products/ then/ Akida 2nd Generation. You will find.....

Akida 2nd Generation Platform Brief

The 2nd generation Akida builds on the existing technology foundation and supercharges the processing of raw time-continuous streaming data, such as video analytics, target tracking, audio classification, analysis of health monitoring data such as heart rate and respiratory rate for vital signs prediction, and time series analytics used in forecasting, and predictive production line maintenance. These capabilities are critically needed in industrial, automotive, digital health, smart home, and smart city applications.

Availability:

Engaging with lead adopters now. General availability in Q3’2023

Maybe, just maybe someone at BRN is a Douglas Adams fan.

From my much loved 'HHGG'

Cartagena

Regular

Professor & Head of Institute of Automotive Engineering so he holds some influence I presume.

Seems like he is fond of both Brainchip and Mercedes Benz.

MB is still on the official Brainchip website: "a car that thinks like you". We wait patiently for the much needed contract with MB.

Frangipani

Top 20

Yep it's coming

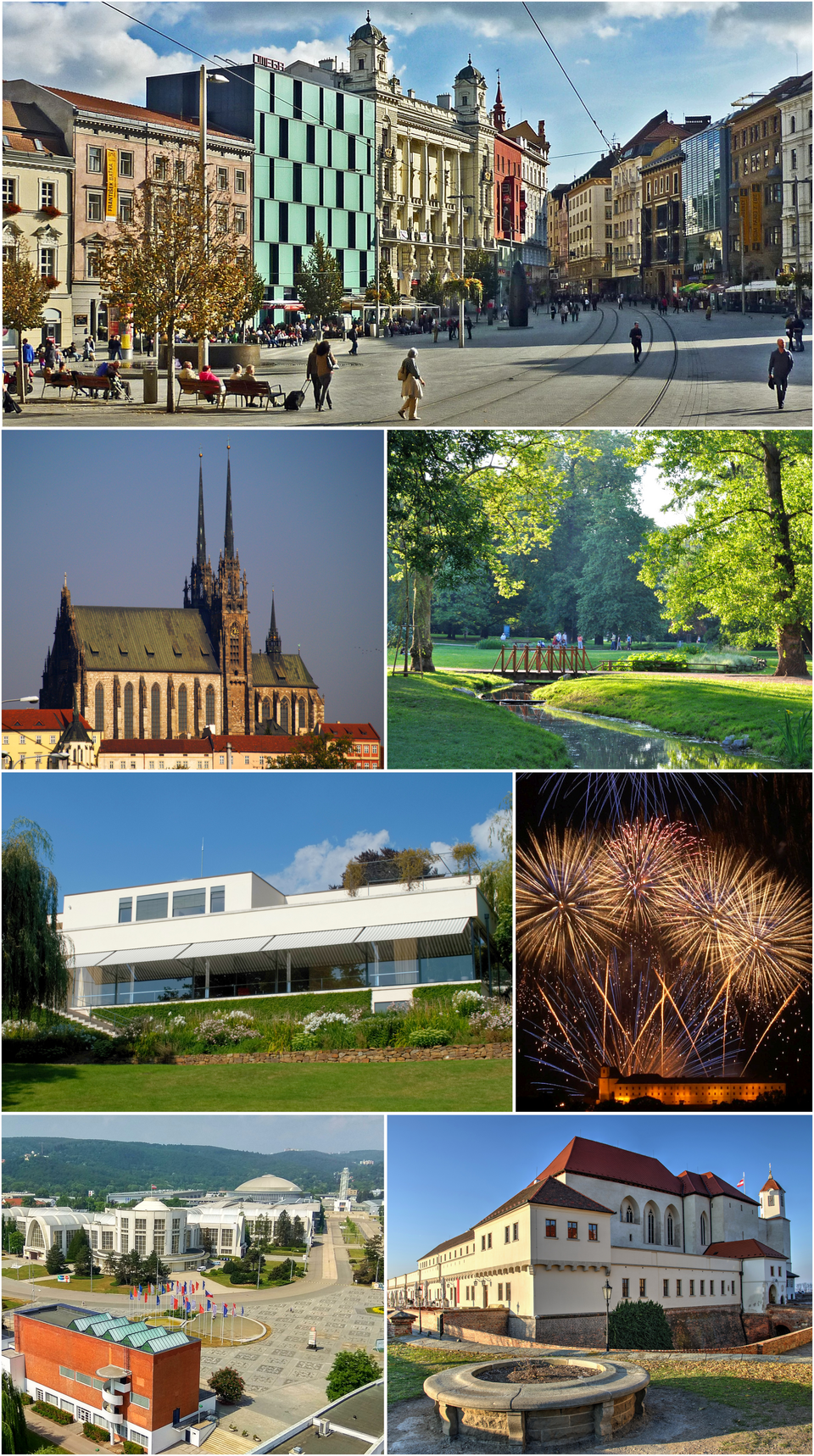

For all we know, the owner of the vehicle could also be an artist immigrant hailing from the Czech Republic with a penchant for impressionist aesthetics:

BRNO + RENOIR > BRNO1R

Brno - Wikipedia

Saw an interesting like on one of the recent Brainchip posts on LinkedIn so decided to chase it up.

Checkout-

www.viavisolutions.com

What is Edge Computing? | VIAVI Solutions Inc.

Learn about edge computing, a technology that enables rapid, near real-time analysis and response by processing, analyzing, and storing data closer to its source. Explore its benefits, deployment strategies, and use cases.

I will look into it more tomorrow sometime but reading through the above page, I strongly believe that Akida is the secret sauce here.

Frangipani

Top 20

Prof. Dr.-Ing. Steven Peters has come up a couple of times in my research before.

Professor & Head of Institute of Automotive Engineering so he holds some influence I presume.

Seems like he is fond of both Brainchip and Mercedes Benz.

Prior to becoming full professor for automotive engineering at TU Darmstadt, he was actually working for MB for a number of years, and it is not hard to guess what project team he was on before returning to the world of academia full-time…

Frangipani

Top 20

Prof. Dr.-Ing. Steven Peters has come up a couple of times in my research before.

Prior to becoming full professor for automotive engineering at TU Darmstadt, he was actually working for MB for five years, and it is not hard to guess what project team he was on before returning to the world of academia full-time…

View attachment 42756

In addition, his Wikipedia page (German only) states that he was already associated with Daimler AG as the first ever KIT (Karlsruhe Institute of Technology) Industry Fellow in 2013 and 2014 and that he was not only Head of AI Research at Mercedes, but in fact its founder.

So yes, I’d definitely say the fact he “likes” Brainchip carries a lot of weight.

Been a holder since 2019, still in the black and still hopeful. My first post here.

The Cambrian AI research helps to get the message out but doesn't come across as independent, rigorous analysis so I wonder about its impact. Indeed a disclosure at the bottom says: This document was developed with BrainChip Inc. funding and support. I'm sure further independent support, of which we've had plenty in various ways, will keep coming. It is so much more persuasive. I work in investor relations/public relations and rarely seek paid-for validation.

cosors

👀

Thanks, I'll take a look at it too!

Saw an interesting like on one of the recent Brainchip posts on LinkedIn so decided to chase it up.

Checkout-

What is Edge Computing? | VIAVI Solutions Inc.

Learn about edge computing, a technology that enables rapid, near real-time analysis and response by processing, analyzing, and storing data closer to its source. Explore its benefits, deployment strategies, and use cases.

www.viavisolutions.com

I will look into it more tomorrow sometime but reading through the above page, I strongly believe that Akida is the secret sauce here.

Wiki: Viavi Solutions

Best regards to France

Cartagena

Regular

Excellent research. He is very well regarded indeed in the Mercedes engineering project team. Love it

In addition, his Wikipedia page (German only) states that he was already associated with Daimler AG as the first ever KIT (Karlsruhe Institute of Technology) Industry Fellow in 2013 and 2014 and that he was not only Head of AI Research at Mercedes, but in fact its founder.

So yes, I’d definitely say the fact he “likes” Brainchip carries a lot of weight.

View attachment 42757
