BRN Discussion Ongoing

- Thread starter TechGirl

From what I can tell this project closed to submissions a year ago this week. Do they ever announce who was successful?

sam.gov

Army SBIR 21.4 Topic Index

A214-051 Asynchronous Neuromorphic Digital Readout Circuit for Infrared Cameras for Autonomous Target Acquisition and Autonomous Vehicles

A214-051

TITLE: Asynchronous Neuromorphic Digital Readout Circuit for Infrared Cameras for Autonomous Target Acquisition and Autonomous Vehicles

OBJECTIVE:

Most military scenarios consist of highly cluttered and dynamic scenes. Asynchronous on-chip smart event cameras can eliminate cluttered scenarios with much-reduced latency and power, and would be able to hand off images of interest to embedded autonomous target algorithms. Development of a smart digital readout circuit, with embedded processing, containing this capability would significantly enhance infrared cameras for use in autonomous detection. The objective of this topic is to take this new technology and apply it to the 3GEN FLIR program and all other systems that use or will use 3GEN FLIR cameras.

DESCRIPTION:

Currently, 3GEN FLIR consists of imaging in two infrared bands with four fields of view. This capability for the Army increases the effectiveness of the sensors to operate in all atmospheric conditions with much longer range than previous versions. In addition, new ground systems will employ autonomous vehicles that will have to contain some use of artificial intelligence to navigate and target. This will regain overmatch by reducing target acquisition time and engagement timelines compared to today’s manual search and acquiring “next target” process. It will also reduce the cognitive burden for vehicle crew by automating search and acquisition – targets are verified by man-in-the-loop prior to engagement. The project, if successful, will make a game-changing improvement by indicating temporal events at the focal plane level and reducing target acquisition latency. This project will design a neuromorphic chip to be combined with the digital 3GEN FLIR readout circuit at the 12-micron pixel level. This two-chip stacked readout will perform the basic sensor functions as well as the neuromorphic processing. Power dissipation of the neuromorphic chip will be kept to a minimum, since it is cryogenically cooled to 75K and the added heat load needs to be minimized.

PHASE I:

Phase I will be a short study phase to come up with a neuromorphic chip design.

PHASE II:

Phase II will consist of the design and fabrication of the asynchronous neuromorphic digital readout. Testing will be done to prove out the concept performance. If a Phase II Sequential is required, it should demonstrate the chip in a 3GEN FLIR focal plane. Packaging and testing will validate the conceptual success of the project.

PHASE III:

Phase III will consist of the commercialization of the selected proposal.

KEYWORDS:

3GEN FLIR, Neuromorphic chip, latency

Rise from the ashes

Regular

I was trying to teach it the meaning of WANCA. I thought I was successful after a bit of to and fro, as it kept responding with the correct definition. Once I closed the chat, reopened it and asked the same question, it reverted to me having to explain it all over again.

Finally... proof that ChatGPT has surpassed human (well, at least some) intelligence.

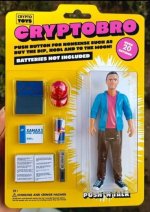

Xhosa12345

Regular

View attachment 26334

I could not find the modified 2002 I was thinking of, but to my eye this Dee seems to be paying a very strong tribute to probably one of the BMW icons.

Totally pointless observation except for other fans of the 2002.

Regards

FF

AKIDA BALLISTA

Herbie was ahead of his time......

Sirod69

bavarian girl ;-)

Mercedes announces major autonomous driving update at CES

Mercedes has slowly become a leader in autonomous driving and vehicle software, along with the likes of Tesla. And while its far more conservative approach to software introduction has meant that fewer of its customers have benefited from its offering than its American counterpart, the company’s full legal acceptance of responsibility has inspired rare confidence in the system. Now, Mercedes has announced key updates coming to its autonomous driving suite in 2023.

Mercedes announced a slate of new software updates coming to vehicles in 2023, notably including new autonomous driving features in the U.S.

White Horse

Regular

Hi FF,

When our children were young and understood a general concept of infinity as the number it was impossible to count to in a million lifetimes, I would say ‘I love you to infinity plus one more just in case.’

Infinity is a pretty big number and, unlike with my children, if they achieved infinite or ubiquitous profit I would say enough. And one more just in case would be unnecessary.

My eight year old grandson floored me last year when we were bidding up with higher numbers about something I don't recollect, and I said infinity. He said, you can't say that Pa, infinity is not a number, it's a concept. End of contest.

Good evening Chippers,

Great week all in all, finished on par with last week. Incredible.

As Fact Finder was kind enough to lay out for all in an earlier post, our company is engaged with 6 to 7 partners this week @ C.E.S, most of which are behind closed doors, showcasing our tech & the benefits to clientele, some of which require confidentiality / NDAs to be signed before a viewing.

TOP SHELF & extremely exciting.

Future, solid partners & connections, with serious engagement, one on one.

Sterling effort to all contributors this week.

Big end of town is definitely trying to psychologically play with us on the share price side of things.

Personally, I am 97% certain Brainchip share price will be many fold from where it is presently, in the not too distant future.

On a lighter note....

Have a great weekend all.

This Lass goes off,

Korolova, Live so track Boa @ Sao Paulo, Brazil / Melodic Techno & Progressive House Mix.

1:25:24 duration.

* Once again, if a savvy person could locate and post a link I would be grateful, thank you in advance.

Regards,

Esq.

Tothemoon24

Top 20

Great positive post Esq,

I did a 10km beach walk today listening to your last banger. Great vibe.

I’ll await the kind work of a savvy soul for your next delight.

Good evening,

2nd day of CES due to start in less than 5 hours. I have noticed a few posts where some have mentioned that companies that are partnered with us haven't actually raved about the Akida input, and why would they?

NDAs haven't magically dissolved, and as in the case of Edge Impulse, giving us a great plug, well that's truly fantastic, but we have absolutely no control over what they do and speak.

Is Brainchip going to start pumping up IFS? I don't think so. Each company, though partnered up through their ecosystems, is, when all is said and done, looking after itself, just as our Board is doing.

As has been stated numerous times, we may never even know that our IP is embedded in some company's future products. As far as they are concerned, it's their product and their name being pumped; we quietly go about our business, assisting with engineering services when approached or sitting back and enjoying the 90% + profit margin and royalty streams.

It's the doors that have been closed up until now that I find really interesting. In 12/24 months how many new names will suddenly appear that were born out of CES 2023, having Brainchip's team making the most of a great business opportunity...now that's exciting to ponder over.

5 days in, just pace yourselves, all of 2023 and all of 2024....then we probably have fair reason to question how we are travelling. I realise that my timeline isn't yours and that's fair enough, you make that choice, which I respect.

Love Brainchip. Tech.

Deadpool

Regular

RobjHunt

Regular

Once again Esq, spot on the money, me thinking.

The only thing I disagree with is that I believe your gonkulations should be closer to 98%.

Rise from the ashes

Regular

[Press Release] Dolphin Design and Neovision joint forces to make AI processing viable for ambient computing electronics - Accelerate Energy Efficient SoC designs - Dolphin Design

Las Vegas, 3 January 2023. Dolphin Design, a leader in silicon IPs for Very Edge Computing embedding AI, integrated power management, and audio IPs, and Neovision, an AI Engineering Consulting company, specializing in Deep Learning & Computer Vision, announced today during CES at Las Vegas that...

MadMayHam | 合氣道

Regular

Anyone taken a look into this yet?

[Press Release] Dolphin Design and Neovision joint forces to make AI processing viable for ambient computing electronics - Accelerate Energy Efficient SoC designs - Dolphin Design

Las Vegas, 3 January 2023. Dolphin Design, a leader in silicon IPs for Very Edge Computing embedding AI, integrated power management, and audio IPs, and Neovision, an AI Engineering Consulting company, specializing in Deep Learning & Computer Vision, announced today during CES at Las Vegas that...

www.dolphin-design.fr

From the brief description in the link, it seems to be merely a mathematical accelerator akin to Perceive's Ergo; it does not claim to be neuromorphic or to use SNN. @Diogenese or our other more technical-minded friends can shed more light on this.

Rise from the ashes

Regular

Thanks for the reply @MadMayHam

I didn't have a chance to look into it much but thought I'd post it here, because normally I'll just forget about stuff I find unless it's a real bazinga of a find.

Gies

Regular

#ces2023 #sensor #iot #neuromorphic #spikingneuralnetworks #ai #artificialintelligence #iot #ces2023 #edgecomputing #edgelearning #faredge #augmentedreality #virtualreality #semiconductor… | Jesse Chapman

PROPHESEE presenting at #ces2023 using BrainChip technology showcasing three different technologies. “And to present them with three technologies, each targeting a key market: a new sensor prototype, co-developed with the Sony group and intended for the improvement of the image for the world of...

PROPHESEE Renesas Electronics Edge Impulse VVDN Technologies Socionext US NVISO Mercedes-Benz AG Valeo

These companies showcase Brainchip technology

Sirod69

bavarian girl ;-)

As you always write, I think Innoviz is in bed with Qualcomm

......

*1 InnovizTwo

InnovizTwo is Innoviz’s new high-performance, automotive-grade LiDAR sensor which offers a fully featured solution for all levels of autonomous driving at dramatically lower cost.

Innoviz360 LiDAR to Support Multiple Applications & Verticals

- 6 January 2023

......

Innoviz360 Specifications

Key Innoviz360 features include up to 1280 scanning lines per frame, configurable frame rate, 0.05° x 0.05° resolution, 64° vertical FOV, and 300 meters of range. Innoviz360 leverages other hardware advances from *1 InnovizTwo, including a single laser, detector, and ASIC. According to the CES 2023 announcement, the resolution, vertical field of view, and reduced cost of Innoviz360 will help OEMs meet the challenges of Level 4 to 5 automation.

SharesForBrekky

Regular

Nice mention here of Brainchip & Edge Impulse's partnership (press release #6):

www.prnewswire.com

This Week in Tech News: 11 Stories You Need to See

/PRNewswire/ -- With thousands of press releases published each week, it can be difficult to keep up with everything on PR Newswire. To help journalists...
