Proga

Mutual funds are a subset of institutional owners. The mutual fund list shows the individual funds you can invest in. Individual ETFs are usually listed on a bourse, where the institutional owner collects fees for running them; the rest are internal investment funds for the institution's own clients, on which it charges fees as well. As an example, all iShares ETFs are run by BlackRock as the institutional owner.

Ok, this has got me confused.

The top 20 shareholders list, as per the last announcement on 1 Aug 2022, is:

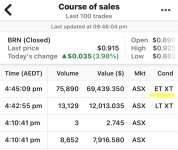

The MSN Money website shows Brainchip ownership by mutual fund:

View attachment 15426

And also ownership of Brainchip shares by Institutions:

View attachment 15427

I'm not sure how current the data on the MSN website is, but these two lists are totally different from the Top 20 Shareholders list. Would anyone have any suggestions as to what insight the lists from the MSN website give us?

(I presume all these other entities hold BRN shares, which is good!)

The MSN Money list looks out of date. As we all know, there has been a lot of institutional buying in the last couple of months. What intrigues me is that Australian super funds seem to own the bare minimum allowable under their own fund rules. Having said that, a lot of the smaller super funds might be hidden inside the institutions. Have a look at SYR below.