Sorry mate, spelling mistake. The other forum, the "hot$@##er" Forum?

BRN Discussion Ongoing

- Thread starter TechGirl

uiux

Regular

What are you asking Pmel?

Fullmoonfever

Top 20

Yeah... the usual suspects dribbling sh!t over there, but he's fighting the good fight and keeping the bastards honest, as they say.

Would be this one.

Fullmoonfever

Top 20

With further regard to Dell:

- Edge will be one of the biggest transformations in manufacturing history

- 81% of manufacturers plan to increase their edge deployments in 2023

Below is an excerpt from a post of mine back in Mar.

Post in thread 'Breaking News- new BRN articles/research' https://thestockexchange.com.au/threads/breaking-news-new-brn-articles-research.6/post-31943

"Well... it would appear the Director of Dell Tech in China came across us (along with other Co's, to be fair haha)... article/post from the Dell verified account.

The bold is all theirs, but we're down in the hardware commentary & I highlighted it red.

Edit: Thought I would also add their comment at the end of the hardware section re accelerators and what they want to achieve."

In conclusion, the advanced algorithms of the "third wave of artificial intelligence" can not only extract valuable information (Learn) from data in the environment (Perceive), but also create new meanings (Abstract), assist human planning and decision-making (Reasoning), and meet human needs (Integration) and concerns (Ethics, Security).

- From a hardware perspective, Domain Specific Architecture (DSA) accelerators [12] enable third-wave AI algorithms to run anywhere in a hybrid ecosystem consisting of Edge, Core, and Cloud. Examples of domain-specific accelerators include Nvidia's GPU, Xilinx's FPGA, Google's TPU, and AI acceleration chips such as BrainChip's Akida Neural Processor, Graphcore's Intelligence Processing Unit (IPU), Cambricon's Machine Learning Unit (MLU), and more. Because they require less training data and can operate at lower power when needed, these domain-specific accelerators will be integrated into more information devices, architectures, and ecosystems. In response to this trend, the area we need to focus on is developing a unified heterogeneous architecture approach that lets information systems easily integrate and configure many different types of domain-specific hardware accelerators. For Dell Technologies, we can leverage Dell's vast global supply chain and sales network to encourage domain-specific accelerator suppliers to adhere to standard interfaces defined by Dell, achieving a unified heterogeneous architecture.

I was asking about the patent getting approved on 31st August. Is it significant? Does it create a roadblock for other companies?

Ethinvestor

Regular

Well, when I posted 11 days ago that the short-term downtrend was coming, some (DOZ and all his likers) laughed at me. I guess they're not laughing now. And you said you were watching... with a funny gif (I liked the left girl watching me anyway).

I’m waiting patiently to buy in, as I’ve still not sold some of my coin yet since it’s been pumping since the release, plus I’ve got my self super to use. Might see what's happening overnight in America before I decide to take the plunge.

Proga

Regular

Looks like you weren't the only one after the board approved it. A big spike in volume today.

There's an opportunity if you own NEA: the board's recommending a takeover offer. I sold today and will divert into BRN holdings. Off topic, but it shows how small Aussie tech companies can become targets.

They may not be able to if there is news pending - insider trading rules?

Proga

Regular

There's nothing wrong, sometimes, with hedging by taking some profits and sitting on the cash until you see which direction the global markets go. BRN has bounced off a low of 80c 3 times in the last 2-3 months. DYOR

That's exactly how I feel about Anson (37%+). But I won't get in the way of the nice jump. There is still room to rise.

Sirod69

bavarian girl ;-)

BrainChip demonstrates Regression Analysis with Vibration Sensors

In this video, BrainChip demonstrates regression analysis using vibration sensors in a live demonstration as well as numerous use cases for preventative maintenance, safety and monitoring.

Discover how BrainChip's regression analysis enables accurate analysis and predictive modeling for various applications.

Sirod69

bavarian girl ;-)

Todd Vierra and Rob Telson like it!

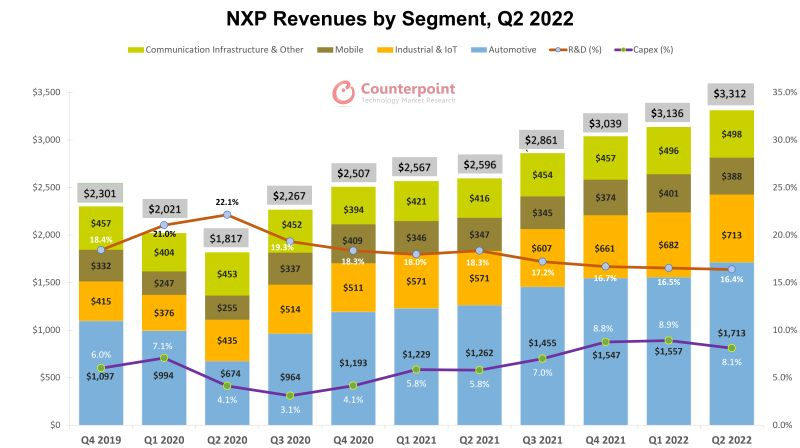

Despite macroeconomic headwinds and supply chain constraints, NXP Semiconductors reported healthy Q2 2022 revenues at $3.31 billion, an increase of 27.6% YoY and 5.6% QoQ. Major revenue drivers for this quarter were the #automotive and #industrial segments.

Key Takeaways:

NXP’s #automotive segment captured almost 52% of the total revenue and stood at $1.7 billion, rising 35.7% YoY and 10% QoQ respectively. Supply-demand mismatch persists within the automobile industry but the content within cars is increasing due to increased digitization and growing penetration of xEVs, which is where NXP benefits a lot.

The industrial and #iot segment grew by 25% YoY to reach $713 million. Use cases for smart and connected applications, like within homes or factories, are evolving and leveraging the potential of IoT. Its broad portfolio includes scalable #compute platforms along with collaborations with multiple cloud players like AWS, Azure, and Baidu, which allows for differentiated software enablement and services.

During these uncertain times, the company is banking on its NCNR (non-cancellable, non-returnable) orders to provide its customers with supply assurances. For 2023, NCNR orders already exceed what the company can supply. Therefore, NXP is focused on de-risking its existing backlog for potential double/stale orders and improving supply capabilities.

For the third quarter, the target is to achieve 20% YoY growth at $3.425 billion. The automotive and industrial segments will take the center stage again to provide a safe landing going forward with respect to demand.

For more insights, refer to the link below

https://lnkd.in/dQ4wBvsX

cosors

👀

My account was finally closed after three mails and four months. If it is really important, why does he give it to TMH and not to us? Unchecked, since I don't look there anymore. Just my thought.

Has anyone read the post from @uiux on the other forum about a patent getting approved? How significant is it?

US20190188600A1 - Secure Voice Communications System - Google Patents

Disclosed herein are system and method embodiments for establishing secure communication with a remote artificial intelligent device. An embodiment operates by capturing an auditory signal from an auditory source. The embodiment converts the auditory signal into a plurality of pulses having a... (patents.google.com)

Dyor.

Krustor

Regular

We are in the lucky situation that everyone is free to share his/her thoughts and research at the place and time he/she likes.

My account was finally closed after three mails and four months. If it is really important, why does he give it to TMH and not to us? Unchecked, since I don't look there anymore. Just my thought.

@uiux is one of the last men standing over there, fighting every day with bullshit from shareguy and friends... I personally don't want to change...

If he feels free to share it here, he will do...

Akida Ballista & Pantene

cosors

👀

I know that. That's not what I meant. BRN on HC is bad, but Talga is at least as bad. A good buddy has dared to venture over there again. I take my hat off to him. But the mud pit is just not for me. That doesn't mean I don't greatly appreciate uiux's posts and tireless work here. To each his own playground.

I have one question for you. Were you the one with the MB article that was the highest-rated on HC that day and drew me in like a moth to a flame, even though I knew of BRN long before?

All good mate, I didn't mean to annoy; I was just far too annoyed by HC. With TLG I went through a hard school. Cheers!

AKIDA BALLISTA & UBIQUE

Izzzzzzzzzzy

Regular

NO ONE LOOK AT THE MARKET

Sirod69

bavarian girl ;-)

NOBODY IS LOOKING AT THE MARKET

JK200SX

Regular

I don't know if some of these are new videos posted on the Brainchipinc YouTube channel, but they show as having been uploaded 6hrs ago.

They are all now linked to the playlists on the AKIDA BALLISTA (Awesome Brainchip Videos) YouTube channel:
