BRN Discussion Ongoing

- Thread starter TechGirl

Deadpool

Regular

I was just having a gander at their website; they have more open positions on offer than Seek.

Hi DP, yes it seems like a no-brainer. The best part of it all is that the founder, Palmer Luckey, is a balls-to-the-wall younger version of Musk, and I’ve got no doubt that among all the engineers within a tech company like Anduril or Tesla the word AKIDA has filtered through.

To me the only question is at what stage: have they been playing with our IP for the last year or two, or did they only find out after the Mercedes-Benz announcements?

The submarines would be great with Akida, though to me the big one for them, as I said in my original post, is deploying a sensor bomb full of Akida-enabled devices which would integrate into their Lattice OS.

I have never seen a company with so many jobs on offer, here and abroad.

Newk R

Regular

Just an observation on this. If the company has some idea of pending good news in the future, why would they let out useless updates in the meantime?

Tend to agree. I've been in since 2016 and don't remember such a long "blackout" period... sure hope the news will be spectacular when it eventually sees the light of day.

My point being, if there was no news on the horizon, we would possibly see all sorts of vague releases in the vain hope of propping up the share price.

So, in this instance, perhaps "Silence is Golden".

equanimous

Norse clairvoyant shapeshifter goddess

Discussion of BRN making announcements has been a bit disappointing.

Two reasons:

1. It highlights how some people think shouting at the TV will cause change despite the continuing evidence that nothing happens the louder you scream. Common sense dictates that a letter to the TV station or regulator has some chance of causing change and that so too might sending an email to Brainchip Investor Relations setting out concerns and outlining what type of information shareholders would like to see in the 4C. Then again why not just sit there screaming at the computer screen hoping against all the evidence that it will effect change.

2. Basing an argument about the inadequacies of an ASX-listed company (Brainchip) not making announcements on the behaviour of a private, unlisted company (Nviso) is just plain stupid. Nviso is seeking to raise capital and eventually list on the ASX, and is trying to pump interest in itself to a frenzy before it is regulated and can only tell the absolute truth as determined by the ASX.

Apples and oranges have more in common than Brainchip and Nviso where the ability to make announcements is concerned.

In fact, if they thought to analyse the releases by Nviso, they would know that Nviso would not have been allowed to release the majority of the things it has if it were listed on the ASX.

Just by way of example, Nviso's claims that it has signed two deals with OEM Tier 1 & 2 automotive companies would not be permitted unless it disclosed full details of the contracts and the names of the two companies. The Panasonic deal would have to be fully disclosed as to the terms of the deal and the dollars and cents that will flow, as would the Siemens Health engagement.

Holding Brainchip to the standards of Nviso is just incorrect.

Fullmoonfever

Top 20

Been wondering about the V2L myself.

Post in thread 'Renesas' https://thestockexchange.com.au/threads/renesas.8510/post-91507

Post in thread 'Renesas' https://thestockexchange.com.au/threads/renesas.8510/post-91590

Post in thread 'Renesas' https://thestockexchange.com.au/threads/renesas.8510/post-91614

AARONASX

Holding onto what I've got

Nothing new yet... back to digging at the Blue Sky Mine. Gotta feed the family somehow.

Howdy Team,

Have I missed anything? I suppose I better put my rubber gloves on and get back to work finding some more wet spots!

DingoBorat

Slim

It uses their DRP AI solution, so not us, methinks.

Fullmoonfever

Top 20

Yeah, I understand it uses DRP and is unlikely to be us; however, I'm wondering whether our IP is potentially being utilised within the DRP in some use cases?

A statement from Chittipeddi at the time:

“At the very low end, we have added an ARM M33 MCU and spiking neural network with BrainChip core licensed for selected applications – we have licensed what we need to license from BrainChip including the software to get the ball rolling.”

Does "software" refer only to what is needed to run the Akida core / nodes, or is the IP something that can be utilised within the DRP?

Stable Genius

Regular

My thoughts are that the DRP AI solution is why Renesas is not going to be marketing Akida as hard as they could.

Akida is competition for their home-grown product. They’ll be happy to use Akida where it gives them the edge and where their own product doesn’t work, but their profit margins are going to be greater using their own IP.

I still think we are in the sensor released recently as discussed in the Renesas thread. “Watch the financials.”

What? The Blue Sky Mining Company IS coming to the rescue?

Bravo

Meow Meow 🐾

Me again,

Inspector Bravo reporting for dot-joining duty!

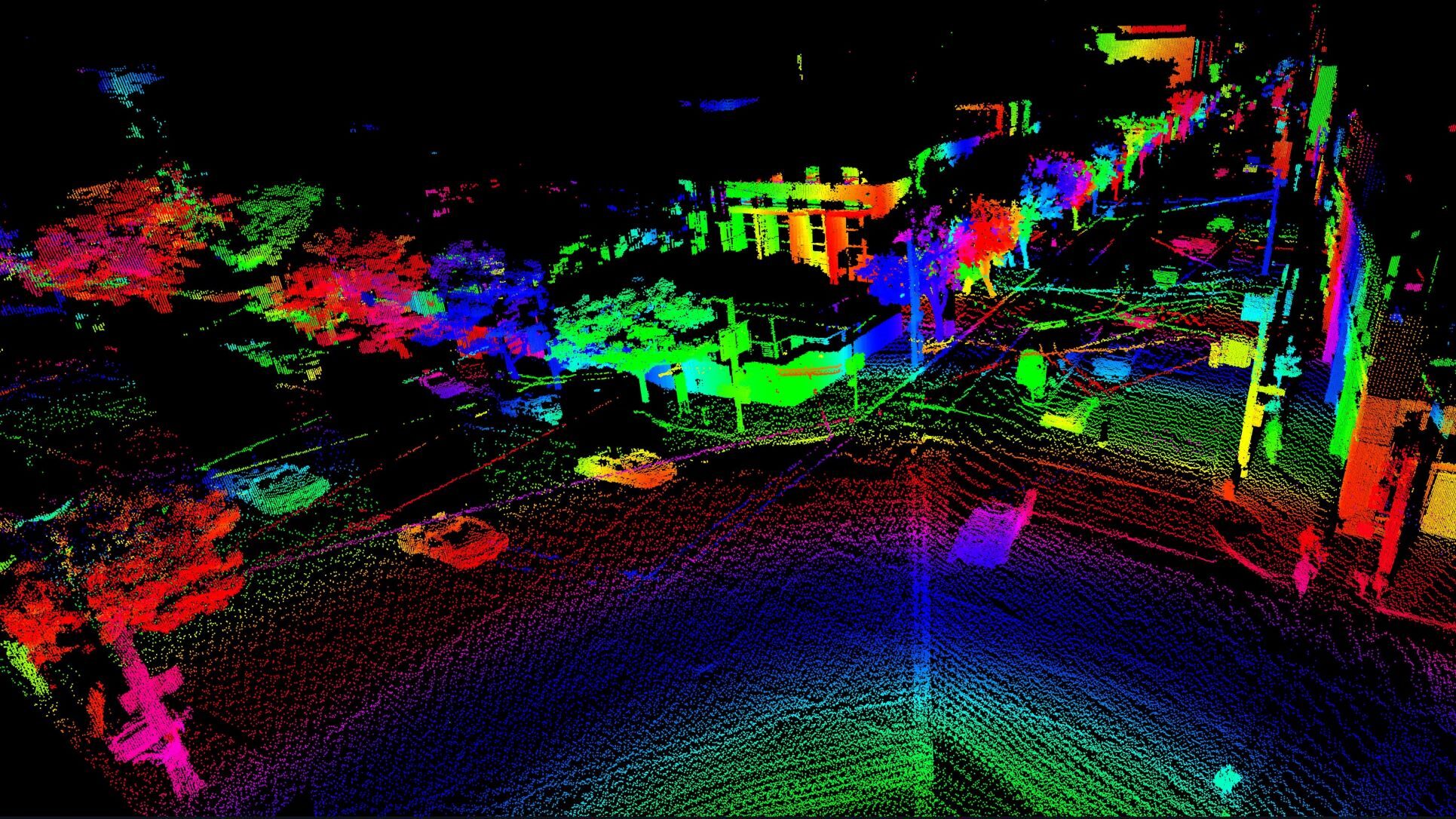

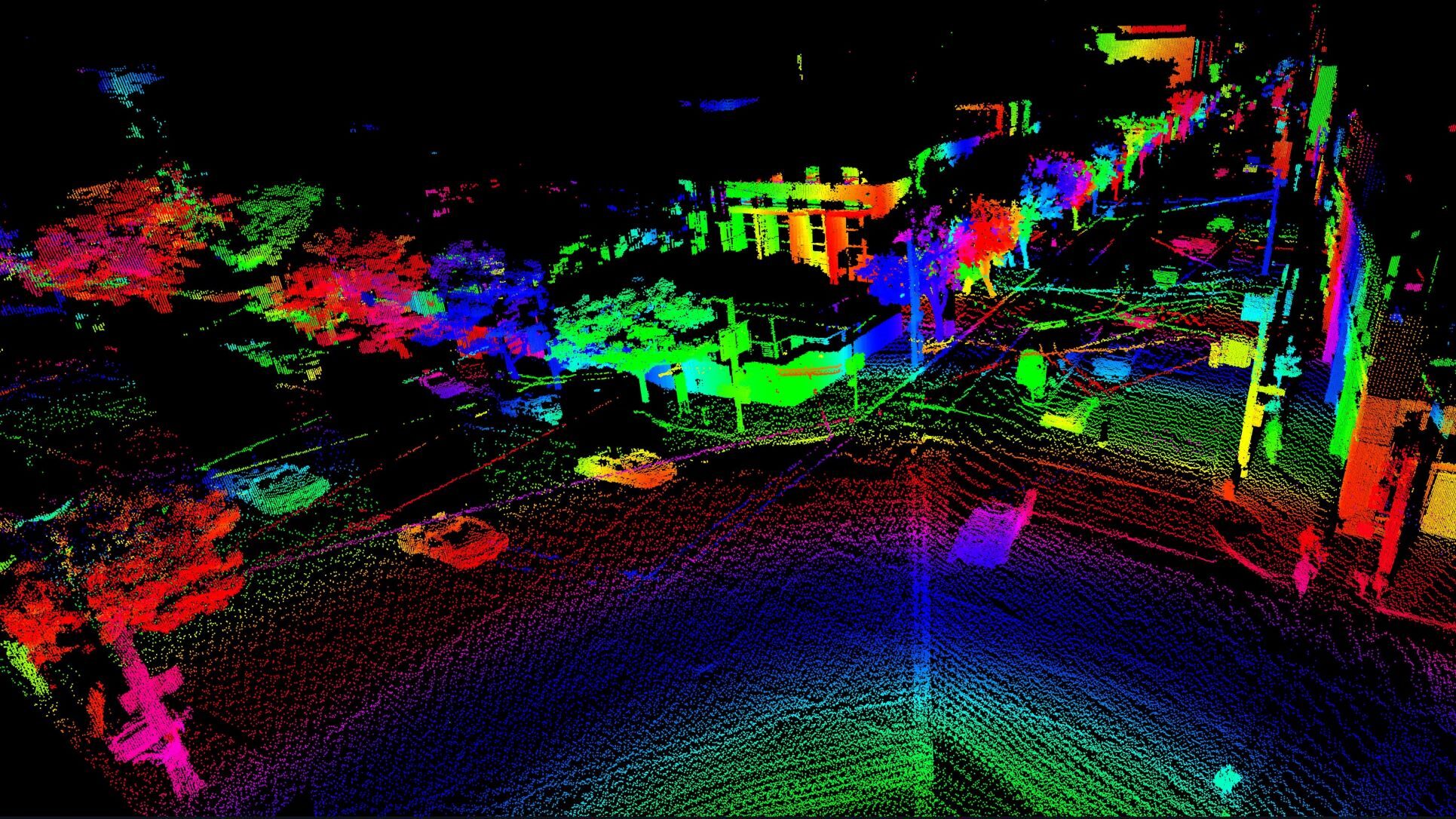

Something I'm currently perusing is this recent announcement from AEye Inc, who are teaming up with Booz Allen Hamilton to drive the adoption of AEye’s technology for aerospace and defense applications/DOD requirements. They are talking about applications "that must be able to see, classify, and respond to an object in real-time, at high speeds, and long distances". They mention "technology like artificial intelligence (AI), machine learning (ML), and edge computing", as well as embedded sensors that "are capable of long-range detection, exceeding one kilometer, are flexible enough to track a bullet at 25,000 frames per second, and can either cue off of other sensors or self-cue, subsequently adapting to place high-density regions of interest around targets". The new long range LiDAR technology looks particularly interesting.

I looked at AEye's website and can see that they're working with NVIDIA and with the NVIDIA DRIVE autonomous vehicle platform (circled below). It also says "selected by Continental, one of the world’s largest automotive suppliers, as their high-performance sensor with volume production starting in 2024".

If you check out this other article linked below it talks about AEye's CTO who is Luis Dussan "previously a leading technologist at Lockheed Martin and Northrop Grumman responsible for designing mission-critical targeting systems for fighter jets. Dussan realized that a self-driving car faces a similar challenge; it must be able to see, classify, and respond to an object—whether it’s a parked car or a child crossing the street—in real-time and before it’s too late. He helped build the AEye team of scientists and electro-optics engineers from NASA, Lockheed, Northrop, the U.S. Air Force, and DARPA to create a high-performance sensing and perception system to ensure the highest levels of safety for autonomous driving".

Suffice to say, there is a lot of interesting information here which might indicate the use of neuromorphic sensors but I'll need to keep digging to see if I hit either an almighty, tsunami-like water spout or else a dried up ancient burial ground with skeletons piled up in the corner and not a drop of moisture to be found for love or money. Obviously I'm hoping it will be the former option but I'll keep you posted.

B x

AEye joins hands with Booz Allen to advance Lidar in Aerospace and Defense

By News Desk - 08/19/2022, 2 Minutes Read

AEye, Inc, a global leader in adaptive, high-performance lidar solutions, today announced a partnership with Booz Allen Hamilton, one of the Department of Defense’s premier digital system integrators and a leader in data-driven artificial intelligence, to productize and drive the adoption of AEye’s technology for aerospace and defense (A&D) applications.

With the accelerated activity in these markets, the company also announced the opening of an office in Florida’s “Space Coast” region, and the hiring of veteran defense systems engineering leader Steve Frey, a Lockheed Martin and L3Harris Technologies alum, as its vice president of business development for A&D.

AEye and Booz Allen Collaborate on AI-Driven Defense Solutions

“Aerospace and defense applications must be able to see, classify, and respond to an object in real-time, at high speeds, and long distances. AEye’s 4Sight™ software-definable lidar system, with its adaptive sensor-based operating system, uniquely meets these challenging demands,” said Blair LaCorte, CEO of AEye. “We are collaborating with Booz Allen Hamilton to optimize its real-time embedded processor perception stack. This aligns with Booz Allen’s digital battlespace vision for an information-driven, fully integrated conflict space extending across all warfighting domains, enabled by technology like artificial intelligence (AI), machine learning (ML), and edge computing to realize information superiority and achieve overmatch.”

Booz Allen has developed a client toolkit for assessing the performance of machine learning and artificial intelligence, fusing data from multiple sensors – including lidar, camera, and radar – and virtualizing perception data for optimized mapping onto embedded processors to fully support situational awareness for the military. This toolkit accelerates AEye’s 4Sight Intelligent Sensing Platform time-to-market in the Aerospace & Defense markets.

“Information warfare will drive tomorrow’s battles, and wars will be won by those who maintain superior situational awareness provided by critical technologies like AI and ML,” said Dr. Randy M. Yamada, Booz Allen vice president and a leader in the firm’s defense solutions portfolio. “Given this, AI must not be an afterthought, but rather a solution that can keep up with the challenging demands of DOD requirements. Booz Allen will enable AEye’s adaptive, software-defined architecture that greatly expands the utility of AI and ML for defense applications, which we believe will be a game-changer.”

AEye’s 4Sight sensors are capable of long-range detection, exceeding one kilometer, are flexible enough to track a bullet at 25,000 frames per second, and can either cue off of other sensors or self-cue, subsequently adapting to place high-density regions of interest around targets. These capabilities, enabled by 4Sight’s in-sensor perception, greatly expand the utility of AI and ML for defense applications and, ultimately, save lives.

Beyond defense, there are many types of applications that require a real-time transformation of raw data into actionable information. As such, AEye plans to leverage the perception stack advancements being developed in conjunction with Booz Allen into various edge computing environments with its automotive and industrial customer base.

www.geospatialworld.net

futurride.com

AEye joins hands with Booz Allen to advance Lidar in Aerospace and Defense

AEye Inc has announced a partnership with Booz Allen Hamilton to productize and drive the adoption of AEye’s tech for Aerospace and Defense.

AEye demonstrates adaptive lidar to enhance software-defined vehicles

Its 4Sight platform, shown at AutoSens Detroit, can be modified for any vehicle application, increasing adoption and deployment across OEM platforms and reducing engineering costs by enabling OEMs to embed the same lidar sensor in multiple locations using its proprietary sensing software.

futurride.com


Fullmoonfever

Top 20

Something else I was wondering about. I only did a quick skim and found plenty of research papers / academic info but no direct COTS product info... pretty sure there must be someone using the same.

Does anyone know of many feed-forward NNs? We know Akida is feed-forward, and below is a snip from that same article with Chittipeddi about their DRP-AI.

He was speaking of their move into two areas: the general-purpose MPU, and the AI described below as feed-forward.

Just trying to deduce whether there's any chance we've been tested with / coupled to the DRP.

ARM battles RISC-V at Renesas

Dr. Sailesh Chittipeddi, Executive Vice President and General Manager of IoT and Infrastructure Business Unit of Renesas Electronics talks to eeNews Europe

"The other side is the embedded AI with DRP dynamically reconfigurable processor for vision solutions. That’s a feedforward neural network rather than a convolutional neural network (CNN), and it offers reasonable 0.5TOPS to 10TOPS at very low power compared to say the Nvidia or Intel equivalent. From my perspective RISC-V will evolve into that area in the not too distant future"

DingoBorat

Slim

Was trying to think of a reply and then read what Stable Genius said..

So yeah, what he said

Would be nice for Renesas to adopt our IP more widely, but their "selected applications" for us are likely very significant in chips out the door anyway..

Deleted member 118

Guest

Howdy Team,

Have I missed anything? I suppose I better put my rubber gloves on and get back to work finding some more wet spots!

Fullmoonfever

Top 20

Who’s gonna save me?

Deleted member 118

Guest

Think that's a brown spot finder

Lol

Violin1

Regular

Don't think we would see prop-up announcements under any circumstances - it's not the nature of our team. The Chairman has said the SP will do what the SP does, and the company is cautious about getting tied into a "more info" OR "please explain" from the ASX. The CEO said he'd try to get a couple more NDA players to be a bit more forthcoming, but that hasn't happened, and so he has said "watch the financials".

All in all I think this means we won't see much until late October with the September quarterly. This will be preceded by about three weeks of all of us guessing, debating, dreaming and speculating over what the revenue figure will be! Lol! So it's just a case of accepting a slow burn and hoping the slow burn is the wick that leads to the explosion of revenue.

Still, remember, we are 50% up since Christmas Eve. Looking forward to this Christmas.

Sirod69

bavarian girl ;-)

Unfortunately, I currently have no more hiding places where I could find money to buy. Do you have a treasure chest under the bed?

Every time I think I can't afford any more BRN, I find a way to buy more.

Short term pain = a much bigger yacht.
