@ladenbauer

Regular

you make me crazy with your wild things, i'm chinese, don't understand anything

It's tse and our community and not Australia

https://thestockexchange.com.au/help/terms/

...not the r* word

We'll do it that way, I've understood.

Well, how seriously should I take this?

https://www.bosch.de/en/contact/

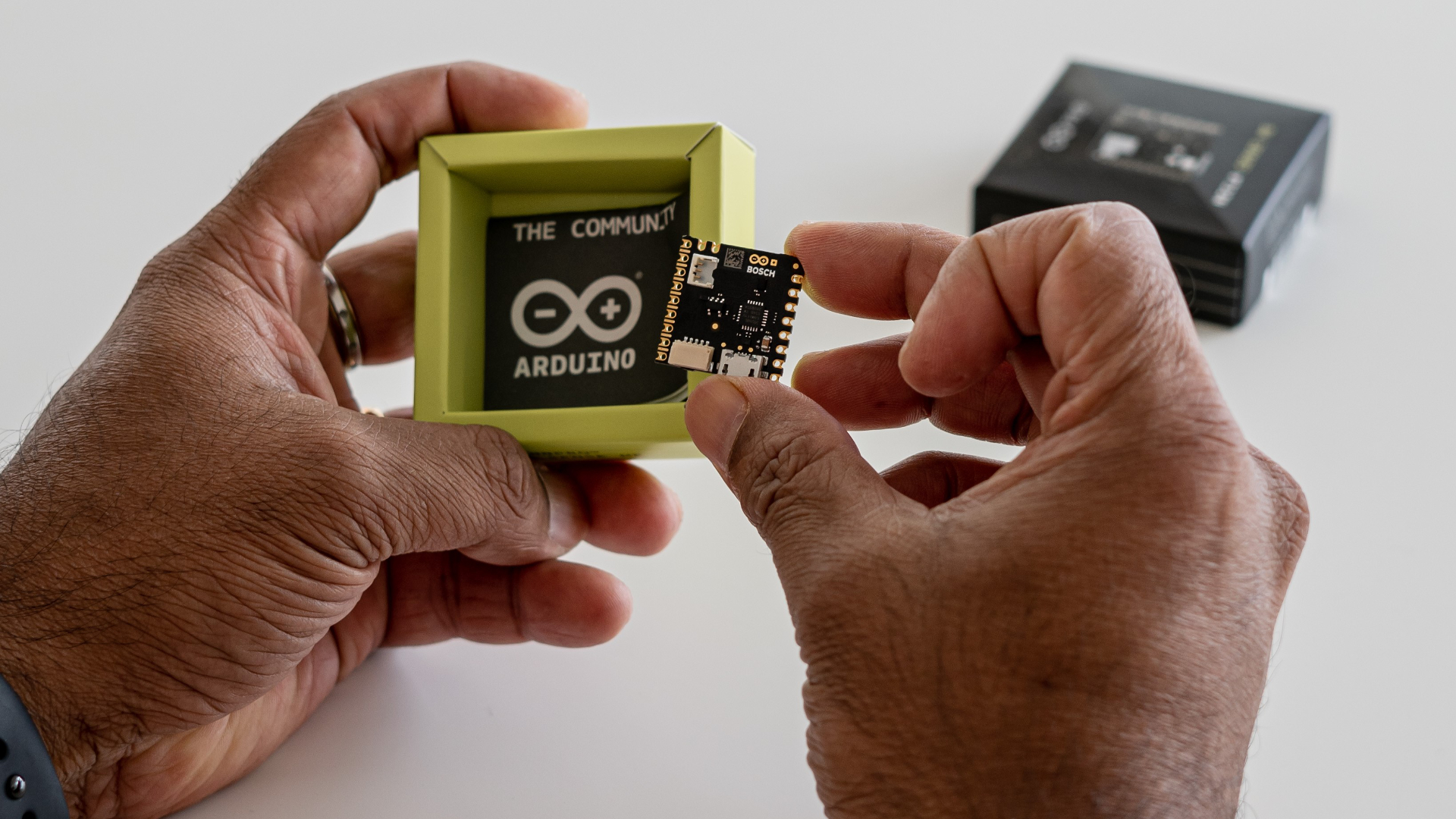

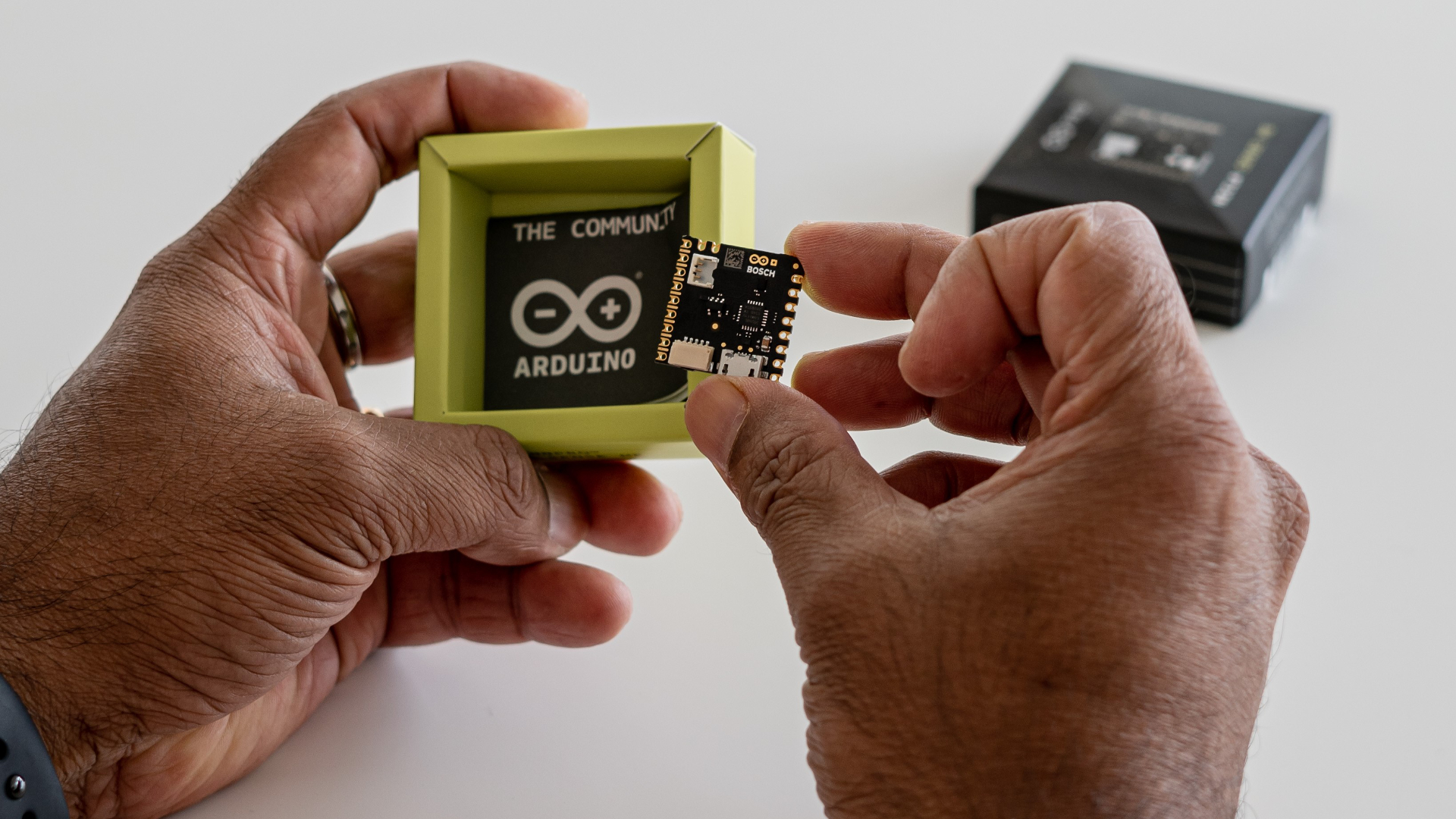

Edge Impulse announces a new sensor platform powered by Arduino Nicla Sense ME ➤ Get the latest news from Bosch Sensortec!

www.bosch-sensortec.com

A billion dollar journey starts with a single million. (Old Chinese saying.)

I note in the last 4C that receipts from customers were $205k. Accordingly, I would be more than happy to see this upcoming 4C's receipts from customers closer to the ~$500k range, as imo this would imply that more customers are coming on board and the company is collecting funds for services rendered to them. Anything over $500k would be impressive imo and could indicate the beginning of the exponential growth phase as discussed/implied by Sean H at the AGM.

I think it's going to be a bad day, for shorters, market wide

View attachment 11880

Shorters...

Sucks to be you today!

Email received from NVISO regarding their BrainChip Akida interoperability webinar today…

We'd better register to find out more.

View attachment 11879

And promptly follows a perfect example of Germany's strict data protection. The General Data Protection Regulation (GDPR, in German: DSGVO) has applied to the entire EU since 2018 and is still being implemented. Anyone who wants to sell or process something here must comply with it. Tesla was made aware of this today. For me, this is not about recording data, but explicitly about recognising faces or behaviour in vehicles, locally and without storing the data. This is the spot for Akida and Nviso, for example. At least that's how I see it.

"Consumer advice center sues Tesla for violation of data protection and misleading environmental claims

19.07.2022, 15:55, 1 min reading time

The Federal Association of German Consumer Centers has sued Tesla. According to the association, the US electric car manufacturer conceals from its customers that they may be violating data protection rules. In addition, Tesla makes misleading environmental claims.

Tesla is facing new lawsuits. The Federal Association of German Consumer Centers justified this on Tuesday with "misleading statements" by the car company about CO2 savings through the purchase of its cars and with data protection concerns about the use of the so-called guard mode for monitoring the vehicle's surroundings. The lawsuits were filed with the district court in Berlin, the association announced in the capital.

The consumer advocates criticize the company's advertising statements, which, according to the association, could give buyers the impression that the purchase of a Tesla reduces the overall CO2 emissions of car traffic. In reality, however, the US group sells the CO2 emission rights that its own fleet saves to other car manufacturers.

Tesla guard mode not allowed in public spaces

In addition, the consumer advocates accuse Tesla of not informing the users of its cars that the so-called guard mode cannot be used in public spaces in compliance with data protection regulations. Cameras monitor the surroundings and save the recordings in certain cases, which is intended to protect the vehicles. According to the association, this is not permitted under the General Data Protection Regulation.

Accordingly, Tesla owners would have to obtain consent to data processing from random passers-by in the field of view of the cameras. In addition, recording what is happening around the cars without cause is not permitted at all. Drivers therefore risk fines."

https://www.stern.de/auto/e-mobilit...nd-irrefuehrende-umweltaussagen-32554714.html

The topic of handling data will become more and more important as time goes on. Here, the field can belong to Akida alone until the others have caught up. I would do more advertising with data protection.

The EU's regulation or guideline on facial recognition. From here it branches out into other guidelines such as dealing with AI.

Adopted on 12 May 2022:

https://edpb.europa.eu/system/files/2022-05/edpb-guidelines_202205_frtlawenforcement_en_1.pdf

and:

WHITE PAPER On Artificial Intelligence - A European approach to excellence and trust

Don't worry, I haven't studied them yet either, I didn't have the time.

Google Maps and Germany: take any city and a well-designed residential area. Much of it is blurred, and often. Germany is the least covered country on Google Maps in the western world. But privacy here is not just a German matter, it's a European one. Everyone has to adapt. In the beginning the dissenting voices were very loud, and they still are today. Nevertheless, as with the regulations regarding autonomous driving, it is trend-setting and, strangely enough, many nations accept it. I am sure that Brainchip will have a monopoly in this niche for the next few years.

Wow @cosors that is so interesting. Thanks for sharing this with us.

What an absolutely intriguing can-o-worms this German legislation (or lawsuit) will open. The right to privacy, even when in a public place, quite honestly is the way I’d prefer it to be. I believe our rights to privacy have already been eroded way too much with all the street-based cameras that already exist.

On a huge positive note, these types of privacy laws (even just the consideration of them) enhance the saleability of sensors that process the data on board and do not require a connection to The Cloud. And that should make Akida an even more attractive proposition.

I wonder if this same law, in Germany, will impact Google Maps, specifically Street View. As I understand Street View, individuals can “apply” to have their image blurred, but they remain publicly visible otherwise. Is this different in Germany?

Also, what about dash cams?

As I said—what a can-o-worms. Do the legislators really want to open up this can?

German capital for armaments was increased to 100b this year. I do not like armament topics, and you may be getting at something different. But you can be sure that they will do very well. The focus for me is drones, detectors and radar.

Morning Chippers,

Looking primed for another great day.

Something for the super sleuths...

Watching news this morning ... Tasmanian observatory receives new telescope mount from HENSOLDT.

Would appear HENSOLDT are a pretty full on bunch of characters.

Possibly falls within Uiux's field of expertise.

AKIDA certainly falls within their field of expertise. If Brainchip is not engaged with HENSOLDT yet, it might pay to send them a gift basket.

Regards,

Esq.

Thanks for that VERY informative tip @thelittleshort, unfortunately that functionality isn’t available on any of the platforms I use.

Hello, Hillo (probably how an Aussie would mock a Kiwi for saying it), Hallo, Howdy and Hej

The issue of translation has come up a few times recently - with some getting increasingly frustrated with non-English posts

This issue is easily fixed but maybe people are not aware of how to translate text etc?

Personally I would hate for the posters with English as their second, third or fourth language to be scared off or banned for not following the rules

In saying that, regarding the rules (in terms of primary language to be used on TSE) - it is clearly stated as English

I value all of your input and literally read every single post, so please post in English with a translation of your native language post if you wish

Here’s a guide to translation via iPhone - if this is helpful

Performing a text translation on an iPhone takes less than 3 seconds: highlight text > arrow right > select translate

Below is how you do it for photographed text:

Click text/lines icon at bottom right of screen

View attachment 11871

Flattened text unflattens

View attachment 11872

Double tap and select highlighted text

View attachment 11873

Translation pops up to be read/copied

View attachment 11875

BrainChip Partners with Prophesee Optimizing Computer Vision AI Performance and Efficiency

“We’ve successfully ported the data from Prophesee’s neuromorphic-based camera sensor to process inference on Akida with impressive performance,” said Anil Mankar, Co-Founder and CDO of BrainChip. “This combination of intelligent vision sensors with Akida’s ability to process data with unparalleled efficiency, precision and economy of energy at the point of acquisition truly advances state-of-the-art AI enablement and offers manufacturers a ready-to-implement solution.”

“By combining our Metavision solution with Akida-based IP, we are better able to deliver a complete high-performance and ultra-low power solution to OEMs looking to leverage edge-based visual technologies as part of their product offerings,” said Luca Verre, CEO and co-founder of Prophesee.

METAVISION GEN4 EVALUATION KIT 2 - HD S-MOUNT

Prophesee Evaluation Kit 2 HD enables full performance evaluation of Generation 4.1 Event-Based Vision sensor co-developed with SONY Semiconductor Solutions, featuring the industry’s smallest pixels and superior HDR performance.

Metavision for Machines

Inventor of the world’s most advanced neuromorphic vision systems

www.automate.org

Metavision® sensors and algorithms mimic how the human eye and brain work to dramatically improve efficiency in areas such as autonomous vehicles, industrial automation, IoT, security and surveillance, and AR/VR. Prophesee is based in Paris, with local offices in Grenoble, Shanghai, Tokyo and Silicon Valley. The company is driven by a team of more than 100 visionary engineers, holds more than 50 international patents and is backed by leading international investors including Sony, iBionext, 360 Capital Partners, Intel Capital, Robert Bosch Venture Capital, Supernova Invest, and European Investment Bank.

Hi Esq ... yep should be a nice GREEN day

Laden, on a slightly different tangent: thanks for taking the time to post in this forum. However, as a relatively time-poor user, I have to admit that I simply skim over your articles, as I don’t have time to do the translation. Now this is my loss if you post a gem; I take that risk when I don’t translate. However, if you genuinely wish to share what has been great research and reach the masses, you need to make communication as easy for the majority as you can, no matter what language that takes. So it is no bother to me if you post in German. But thank you for contributing.

Better take care of contributions or start as a teacher in your village! Master data are in English, and everyone who is interested can also translate with the tools! It would be strange to me if it were forbidden to speak German in Australia, because the people are pretty cool.

So I'm not ashamed of my language, and I have to translate it too! I don't believe that Australian people in German forums are so constantly attacked by collaborators! So, fun aside, sorry for my blatant mistake, people.