BRN Discussion Ongoing

- Thread starter TechGirl

My final point was the way they spoke of ecosystems.

The Team understand and are forging ahead with ecosystems to enable the uptake of the IP.

Otherwise it’s like trying to sell a hammer when you don’t have nails to go with it, or a knife with no fork.

By putting Akida IP into ecosystems they can sell a working system which takes the work out of the individual companies having to do it.

Both ARM and SiFive are massive in this regard. They are both going to be fighting for the competitive edge and Akida is the current weapon of choice!

Another example would be to sell a video player but not TVs. You can’t just take a video player home and watch a movie (ignoring Netflix etc).

So the ecosystem would be selling the video player TV combination. A working model which can be taken up quickly by the manufacturer to onsell the final product to the consumer.

Obviously the larger companies e.g. Valeo/Mercedes etc have their own engineers and will make their own magic (systems) to put in their own products.

Hence the different versions of current customers.

But we are getting closer and closer to the customer who will actually be selling to consumers, e.g. cars, CCTV cameras, phones, drones, underwater drones, sensors, medical devices, etc.

That is when the SP will well and truly take off!

They are well and truly kicking goals. I’m so jealous I couldn’t make it to the AGM. I would have enjoyed meeting them all immensely, and I love thanking those that truly deserve praise and respect for the work they have done and are currently doing.

Cheers

Couldn’t have said it better, SG. Every customer is unique in their ability to understand Akida and use it effectively in their end products. The structure that Sean and team have put in is all about identifying this capability and directing them to different solutions tailored to their needs.

BRN’s recently announced AI enablement program is a no-brainer. If you look at other IP vendors, pretty much all of them have something similar, and it is a sure-fire way to catch even the smallest of fish coming your way. As Sean said at the AGM, they have the go-to-market strategy spot on, and from now on it’s all about getting some runs on the board.

Fact Finder

Top 20

There is a very old saying that something is only worth what someone is willing to pay for it.

The other big takeaway I got from yesterday's presentation is the obvious: “How switched on are they!”

They truly are leaders in their respective fields.

They are well aware that just being the first and best is not enough. That was excellent to hear!

I hope the Management Team is respected enough that every time the SP fluctuates a little shareholders can stop with the cheap shots and appreciate that the Team is doing their best: “And they know best!”

The Management Team is focussed on the business of selling IP. Once that is in full flight and has become ubiquitous, the SP will take care of itself, hence the statement, “The shareprice does what the shareprice does.”

This saying is considered conventional wisdom but it is completely flawed.

Consider this: I have mentioned that I provide the grunt for my wife’s hobby of antique furniture buying and selling.

At the last physical auction we attended before Covid a drop inlaid table was her target. She was the only bidder and it was knocked down to her for $90.00.

I picked it up and brought it home; she photographed and listed it, and sold it within 24 hours for $650.00.

So what was the real value of the table, $90.00 or $650.00?

The answer: $1,000, if my wife had been prepared to wait.

So the table sold twice for a price that did not reflect its true value.

My wife does her research. My wife knew the true value, she had a plan, and she implemented her plan.

The price that BRNASX sells for is not its true value; it is just the price it sold for, hence the statement that the share price will do what the share price will do.

My opinion only SO DYOR AND HAVE A PLAN

FF

AKIDA BALLISTA

Yoda

Regular

Brothers In ARMs, another favorite

Hi tls,

Not sure if this PVDM hypothesis has been mooted? Is it feasible that the resistance to his reappointment comes from those who look favourably at a future takeover bid? Has PVDM inserted himself to prevent the possible sale of everything he has worked to create? Although such a sale would make him rich beyond his wildest dreams, he does not seem like the type of character with whom this would sit well.

1: yes it has been

2: no idea

3: Absolutely 100%

4: imo, I agree. Way too soon for such thoughts

Bravo

Meow Meow 🐾

By Jove, I think I've cracked it!

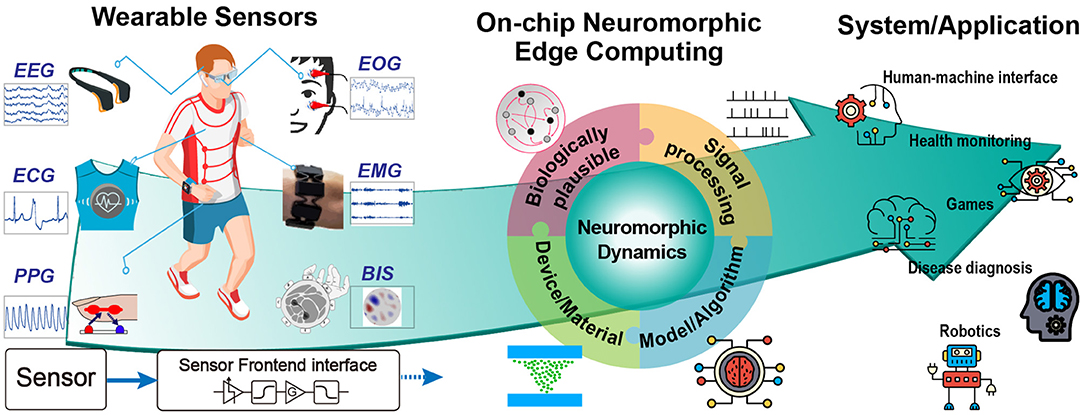

Beyond the edge! This has to be a reference for smart wearable devices which can monitor various human body symptoms ranging from heart, respiration, movement, to brain activities. AKIDA should be able to detect, predict, and analyze physical performance, physiological status, biochemical composition, mental alertness, etc.

View attachment 7594

Some examples of smart wearables are:

- Smart Rings

- Smart Watches

- Smart Glasses, Light-Filtering Glasses, Google AR Glasses, Conceptual Translating Eyewear

- Smart Clothing with Sensors

- Smart Earphones

- Medical Wearables - blood pressure monitors, ECG monitors, etc.

- Smart Helmets

- Biosensors

Wearable sensors with machine learning to monitor ECG, EMG, etc, for applications including cancer detection, heart disease diagnosis and brain-computer interface.

View attachment 7595

Frontiers | Adaptive Extreme Edge Computing for Wearable Devices

Wearable devices are a fast-growing technology with impact on personal healthcare for both society and economy. Due to the widespread of sensors in pervasive... (www.frontiersin.org)

Hey Brain Fam,

Yesterday I posted the above graph, which mentions an application for wearables called "brain-computer interface", and it must have lodged itself in my sub-conscious, because last night I had a series of very weird dreams about "brain-computer interface" and... MERCEDES.

This morning, despite feeling a bit tired and rough around the 'edges' (he-he-he!), I got up and typed "Mercedes+ Brain-computer interface" into the search bar, and look at what Dr Google spat back at me!

Is it merely a coincidence that the "Hey Mercedes" voice assistant technology is discussed in tandem with the introduction of the first BCI application in the Mercedes VISION AVTR concept vehicle, or are we involved in this too? I know what my dreams were indicating...

Mercedes-Benz VISION AVTR | Mercedes-Benz Group

A new dimension of future human-vehicle interaction: Mercedes-Benz gives an outlook on possible applications of brain-computer interface technology in the car at the IAA MOBILITY

equanimous

Norse clairvoyant shapeshifter goddess

With ARM in place and Nvidia accelerated, I bet AMD are pulling their leg, being GPU and CPU competitive. AMD will be trying to maintain its advantage over Intel.

Quercuskid

Regular

With all the talk of birds, I have to show you the bird which came into my garden today.

Rise from the ashes

Regular

Nothing will stop this from being the world-changing tech that it is.

Yes, bad, bad form... and so many large parcels bought prior to AGM = voting rights!?

Boab

I wish I could paint like Vincent

I must have signed up to receive email updates and received the below this morning.

I don't think I'll have time to read it all.

Enjoy I hope.

| A NEWSLETTER FROM THE EDGE AI AND VISION ALLIANCE Late May 2022 | VOL. 12, NO. 10 |

| LETTER FROM THE EDITOR |

Dear Colleague,  Last week's Embedded Vision Summit was a resounding success, with more than 1,000 attendees learning from 100+ expert speakers and 80+ exhibitors and interacting in person for the first time since 2019. Special congratulations go to the winners of the 2022 Edge AI and Vision Product of the Year Awards:

2022 Embedded Vision Summit presentation slide decks in PDF format are now available for download from the Alliance website; publication of presentation videos will begin in the coming weeks. See you at the 2023 Summit! Brian Dipert Editor-In-Chief, Edge AI and Vision Alliance |

| ROBUST REAL-WORLD VISION IMPLEMENTATIONS |

Optimizing ML Systems for Real-World Deployment

In the real world, machine learning models are components of a broader software application or system. In this talk from the 2021 Embedded Vision Summit, Danielle Dean, Technical Director of Machine Learning at iRobot, explores the importance of optimizing the system as a whole–not just optimizing individual ML models. Based on experience building and deploying deep-learning-based systems for one of the largest fleets of autonomous robots in the world (the Roomba!), Dean highlights critical areas requiring attention for system-level optimization, including data collection, data processing, model building, system application and testing. She also shares recommendations for ways to think about and achieve optimization of the whole system.

A Practical Guide to Implementing Machine Learning on Embedded Devices

Deploying machine learning onto edge devices requires many choices and trade-offs. Fortunately, processor designers are adding inference-enhancing instructions and architectures to even the lowest cost MCUs, tools developers are constantly discovering optimizations that extract a little more performance out of existing hardware, and ML researchers are refactoring the math to achieve better accuracy using faster operations and fewer parameters. In this presentation from the 2021 Embedded Vision Summit, Nathan Kopp, Principal Software Architect for Video Systems at the Chamberlain Group, takes a high-level look at what is involved in running a DNN model on existing edge devices, exploring some of the evolving tools and methods that are finally making this dream a reality. He also takes a quick look at a practical example of running a CNN object detector on low-compute hardware.

| CAMERA DEVELOPMENT AND OPTIMIZATION |

How to Optimize a Camera ISP with Atlas to Automatically Improve Computer Vision Accuracy

Computer vision (CV) works on images pre-processed by a camera’s image signal processor (ISP). For the ISP to provide subjectively “good” image quality (IQ), its parameters must be manually tuned by imaging experts over many months for each specific lens / sensor configuration. However, “good” visual IQ isn’t necessarily what’s best for specific CV algorithms. In this session from the 2021 Embedded Vision Summit, Marc Courtemanche, Atlas Product Architect at Algolux, shows how to use the Atlas workflow to automatically optimize an ISP to maximize computer vision accuracy. Easy to access and deploy, the workflow can improve CV results by up to 25 mAP points while reducing time and effort by more than 10x versus today’s subjective manual IQ tuning approaches.

10 Things You Must Know Before Designing Your Own Camera

Computer vision requires vision. This is why companies that use computer vision often decide they need to create a custom camera module (and perhaps other custom sensors) that meets the specific needs of their unique application. This 2021 Embedded Vision Summit presentation from Alex Fink, consultant at Panopteo, will help you understand how cameras are different from other types of electronic products; what mistakes companies often make when attempting to design their own cameras; and what you can do to end up with cameras that are built on spec, on schedule and on budget.

| FEATURED NEWS |

- Intel's oneAPI 2022.2 is Now Available

- FRAMOS Makes Next-Generation GMSL3 Accessible for Any Embedded Vision Application

- AMD's Robotics Starter Kit Kick-starts the Intelligent Factory of the Future

- iENSO Makes CV22 and CV28 Ambarella-based Embedded Vision Camera Modules Commercially Available

- Imagination Technologies and Visidon Partner for Deep-learning-based Super Resolution Technology

buena suerte :-)

BOB Bank of Brainchip

Totally agree...

Nothing will stop this from being the world-changing tech that it is.

Izzzzzzzzzzy

Regular

So annoying, dropping faster than we went up

Izzzzzzzzzzy

Regular

Where speeding ticket?

So annoying, dropping faster than we went up

Rise from the ashes

Regular

All they do is create opportunities for us long-term believers, and also the new holders, to pick up some bargains. Once she pops there will be no holding us back. Of course manipulation will still be there, but by that time we'll be laughing all the way to the bank, so to speak.

Shorters would do that to create panic and a sell-off, so simple and unfortunate for us genuine BRN shareholders. Pretty smart move if you ask me; they need to close their positions and move on to the next company. But for how long can they play these games? Well, we need to wait for announcements and future revenues so they can release the share price in an almighty push. I'm waiting patiently.

Izzzzzzzzzzy

Regular

People have no respect for this share; millions of orders are just being wiped.

MANIPULATION

IRON GRIP by the same organization as yesterday's manipulated dump, using their buying/selling power via the bots to control the SP today.

They had knowledge of the ASX release (performance rights/shares to CEO & Board) just before the market, did that hard dump from $1.17 down to $1.13, and are now forcing the SP down more.

THEY .....have vacuumed up 12 Million shares so far today.

Yak52

Fact Finder

Top 20

Can we make you a glass of hot milk and put you back to bed? Clearly you do your best work while asleep.

Some wonderful dots exposed here. Bravo, Bravo, Bravo.

My opinion only DYOR

FF

AKIDA BALLISTA

Makeme 2020

Regular

Another post from Professor Hossam Haick; he has been posting quite frequently lately on Twitter. Could we be close to getting an announcement regarding NaNose?
