Fact Finder

Ours occasionally flipped out as intended but never ever blinked. LOL

Ours was a woody Morris station wagon with a mechanical flip-out arm with an orange light; can't remember if it blinked.

Hi @Diogenese,

The discussion about neuromorphic computing being the province of analog, and the lumping of Akida into analog, is a bit unfortunate; it probably derives from the pre-Akida obsession with analog SNNs.

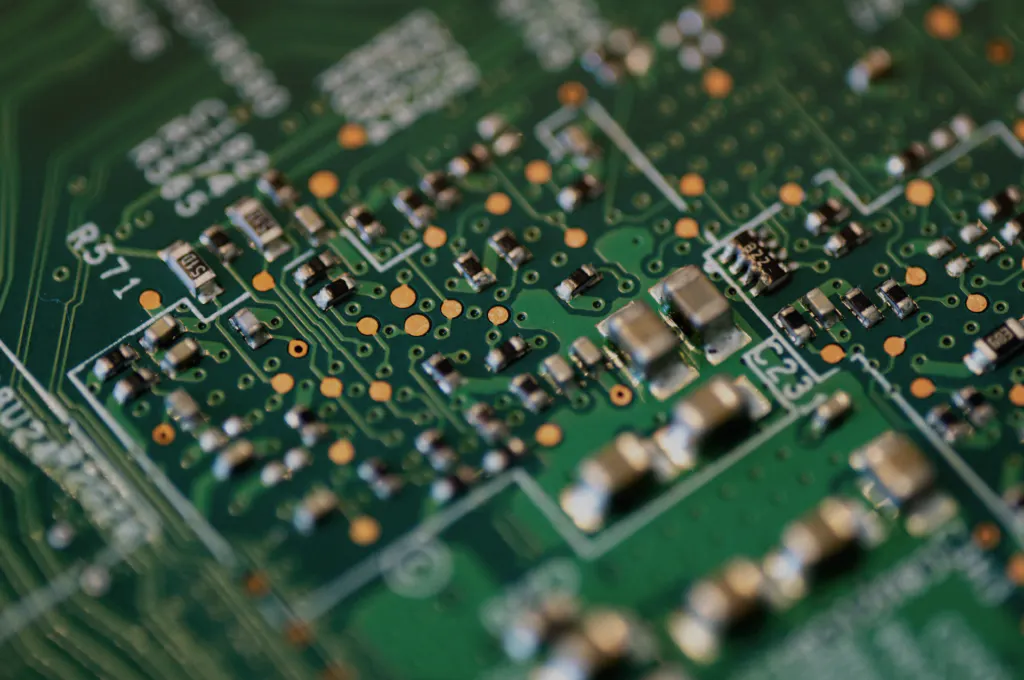

Page 27:

Page 28:

Analog hardware: The biggest time and energy costs in most computers occur when lots of data has to move between external memory and computational resources such as CPUs, GPUs, or NPUs. This is the "von Neumann bottleneck," named after the classic computer architecture that separates memory and logic. One way to greatly reduce the power needed for machine learning is to avoid moving the data: to do the computation where the data is located. Because there is no movement of data, tasks can be performed in a fraction of the time and require much less energy. In-memory computing and neuromorphic analog computing are two such approaches.

In-memory computing is the design of memories next to or within the processing elements of hardware such that bitwise operations and arithmetic operations can occur in memory. Neuromorphic analog computing allows in-place compute but also mimics the brain’s function and efficiency by building artificial neural systems that implement «neurons» and «synapses» to transfer electrical signals via an analog circuit design. This circuit is the breakthrough technology solution to the von Neumann bottleneck problem. Analog neuromorphic ICs are intrinsically parallel and better adapted to neural network operations than current digital hardware solutions, offering orders of magnitude improvements for edge applications.

Market-ready, end-user programmable chips are an essential need for neuromorphic computing to expand its visibility and to achieve a variety of “real-world applications” with an increasing number of users. As large technology companies wait for the technology to mature, some start-ups have released their chips to fill the gap and gain a competitive advantage over those tech giants. Examples include BrainChip AKIDA, GrAI Matter Labs VIP, and SynSense DYNAP processors.

Neuromorphic digital SNN computing also enables the blending of memory and compute, obviating the von Neumann bottleneck.

Thumbs up if you got a little bit creeped out by that comment

Great exposure on Stake! Things are heating up, people!

Usually they get the apprentices to fetch blinker fluid from Supercheap; imagine the expressions on their faces when they return with this.

During winter, at 7 am and -2 degrees, I witnessed a P-plater driving around the roundabout with his head out the driver's window because his windscreen was covered in ice.

Great find generously shared.

Finally, an analyst who can read, add up, subtract and multiply, and has heard of Annual Reports to Shareholders AND some of the partnerships and licensees of AKIDA IP, though the EAP clients like NASA, Mercedes-Benz, etc. slipped past like a Warnie flipper.

Under the Spotlight AUS: BrainChip (BRN) | Stake

AI is the future, but hardware gaps must be closed before it can reach its full potential. An ASX-listed company is on the case, with a brain-mimicking processor designed for better machine learning. Today we put BrainChip Under the Spotlight. (hellostake.com)

My opinion only DYOR

FF

AKIDA BALLISTA

Similar gender split to HC:

Not if you are a foot model; insurance would not cover it.

50 points where compute is required in a modern motor vehicle.

SiFive Seeks to Fuel Next-Gen Designs with Automotive RISC-V Cores

one day ago by Jake Hertz

With its new portfolio of automotive RISC-V processor cores, SiFive aims to solve challenges in the design of evolving digital cars.

The RISC-V movement has been gaining some serious momentum this summer, finding value in a variety of fields and use cases. As a testament to this, the past week alone has seen the RISC-V movement bolstered by news from both Intel and NASA.

That momentum is continuing this week, this time with RISC-V finding its way into the automotive sector. SiFive led the way with the announcement of a new portfolio of RISC-V processors designed to meet the demands of the next-generation automotive market.

In this article, we’ll look at the benefits of RISC-V for automotive as well as the new releases from SiFive.

Automotive Challenges

Today, the automotive industry is undergoing a revolution unlike any other in its history. The complete electrification and digitization of almost every facet of the automobile are creating smarter, safer, and more sustainable cars, but they also come with a number of technical challenges.

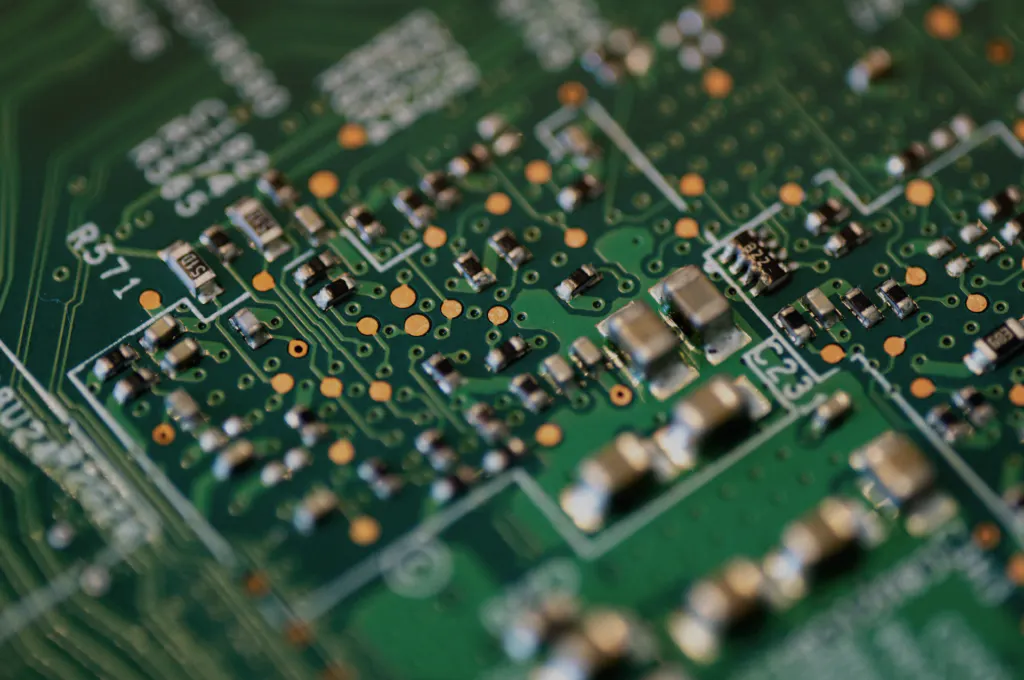

Some of the many computing tasks within a modern automobile. Image used courtesy of Johnson Automotive

One of the major challenges is the increased dependence on compute-intensive software and services within the car. For example, many of the applications within a vehicle, such as autonomous driving functions, rely on real-time processing for proper execution.

The result is that automotive designers are finding themselves in need of greater onboard computing capabilities in order to perform fast and reliable processing.

At the same time, next-generation automobiles rely on a myriad of different computing tasks, ranging from infotainment systems to wireless communications to machine learning processing and computer vision. This broad range of applications makes it difficult to design a system that can perform all these tasks at a high level.

Together, these are driving the need for flexible and powerful computing hardware that is capable of supporting a variety of tasks without sacrificing performance or reliability.

RISC-V for Automotive

In the eyes of many, RISC-V presents a solution to many of these challenges. Some of the major benefits of RISC-V in the automotive space are the simplicity and performance that it brings to automotive design.

By using a single instruction set architecture (ISA) with RISC-V, a designer can create an automotive computing platform that enables a high level of code portability as well as decreased time to market. At the same time, many RISC-V offerings have been shown to offer high levels of performance as well as energy efficiency, making these devices ideal tools for automotive applications.

Beyond this, the RISC-V movement also provides designers with flexibility. Because RISC-V has grown globally to thousands of members, a wide variety of existing IP and resources has emerged on the market, along with the tools and information necessary to help designers create their own IP if needed.

SiFive’s Automotive RISC-V Portfolio

SiFive’s new portfolio of automotive-facing RISC-V offerings is part of the first phase of a long-term roadmap, says the company. The announcement describes three automotive solutions: the E6-A, X280-A, and S7-A.

The E6-A series is a 32-bit RISC-V processor designed specifically for real-time computing applications, including system control and security. Built on a single-issue, in-order, 8-stage Harvard pipeline with tightly integrated memory and cache subsystems, the E6-A processors are described by SiFive as offering mid-range, power-efficient performance. SiFive tells us that the E6-A will be offered in ASIL A, B, and D safety levels and will be available by the end of 2022. The E6-A’s product page does not include a datasheet. It mentions an “Automotive E6-A Development Kit,” but with few details about it so far.

The E6-A RISC-V processor will support ASIL A, B, and D safety levels. Image used courtesy of SiFive

Following the E6-A, SiFive plans to release both the X280-A and S7-A by the second half of 2023. The X280-A will be a vector-capable processor that is optimized for sensor fusion, ADAS, and machine learning applications within the vehicle.

S7-A, on the other hand, will be tailored for applications such as ADAS, gateways, and domain controllers. To that end, the S7-A will be a real-time core with native 64-bit support. As of now, there are no product pages on SiFive’s site for either the S7-A or the X280-A.

SiFive hopes that, together, its automotive line will provide automotive designers with the computing flexibility and performance needed to support the compute-intensive and varied tasks required by next-generation automobiles.

LOL, some countries had invented brake lights and indicators before the combustion engine... and for rainy days and night driving, you could buy a reflective plastic sleeve for your forearm.

So was Andrej fixated on cameras, or did he leave because of Elon's fixation on cameras?

Not sure if it’s been posted before, but it looks like Tesla are going backwards more than ever this year.

Tesla AI leader Andrej Karpathy announces he's leaving the company

Big changes are afoot within Tesla's Autopilot unit as AI leader Andrej Karpathy announces he's no longer working for the company. (www.cnbc.com)

Interesting historical graph of the Dow Jones with a world events timeline

The historical performances of indexes, like the DJIA, the S&P/ASX 200, or any you wish to choose, are always going to go up over time, because they are weighted, which is an oxymoron.

Companies that are doing well get put in, while companies that are doing poorly get turfed.

This is why the funds weight their portfolios to them.

It's an idiot-proof way of ensuring future gains for their clients.

Meaning a fund manager of this type can basically be a buffoon, and most probably are.

All the hard work of selecting the companies is done by someone else.

So those kinds of charts are completely misleading.
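The "put in / turfed" mechanism is easiest to see in how a capitalisation-weighted index level is computed. Below is a minimal Python sketch, assuming a simplified index whose level is total constituent market cap divided by a divisor that is rescaled whenever a constituent is swapped, so the level does not jump at the swap. The stock names, prices and share counts are hypothetical, and real index providers apply extra rules (float adjustment, scheduled rebalances); note also that the DJIA is price-weighted rather than cap-weighted.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Stock:
    # Hypothetical toy constituent; not real market data.
    name: str
    price: float
    shares: float  # shares counted by the index (hypothetical)

    @property
    def market_cap(self) -> float:
        return self.price * self.shares


class CapWeightedIndex:
    """Toy cap-weighted index: level = total constituent market cap / divisor."""

    def __init__(self, constituents: list[Stock], base_level: float = 1000.0) -> None:
        self.constituents = list(constituents)
        # Choose the divisor so the index starts at base_level.
        self.divisor = self._total_cap() / base_level

    def _total_cap(self) -> float:
        return sum(s.market_cap for s in self.constituents)

    def level(self) -> float:
        return self._total_cap() / self.divisor

    def replace(self, old_name: str, new_stock: Stock) -> None:
        """Turf one constituent and put in another, rescaling the divisor
        so the index level is unchanged at the moment of the swap."""
        level_before = self.level()
        self.constituents = [s for s in self.constituents if s.name != old_name]
        self.constituents.append(new_stock)
        self.divisor = self._total_cap() / level_before


if __name__ == "__main__":
    a = Stock("A", price=10.0, shares=100)  # market cap 1000
    b = Stock("B", price=20.0, shares=50)   # market cap 1000
    index = CapWeightedIndex([a, b])
    print(index.level())                    # 1000.0

    b.price = 10.0                          # B halves; the loss is recorded
    print(index.level())                    # 750.0

    c = Stock("C", price=40.0, shares=50)   # a company "doing well"
    index.replace("B", c)                   # B turfed, C put in
    print(index.level())                    # still 750.0

    c.price = 48.0                          # from now on only C's moves count
    print(index.level())                    # 850.0
```

In this toy run, B's fall up to its removal stays in the index history; after the swap, only C's moves affect the level. The sketch shows the mechanism only and does not by itself prove that an index must rise.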

If you're only selecting a few stocks, you need to make sure they are doing everything in their power and have the goods to have a BIG future.

That's why I choose BrainChip.

(I can just imagine the last two sentences, as an investment ad, with me holding up an AKIDA chip at the end of it)

But surely the ad would only show the back of your head because of professional etiquette.

But of course!