And what happened the last time BRN mentioned a North American car maker?

The board needs to come out at this coming AGM and give us an honest take on how Sean has been performing against his targets and forecasts, rather than saying everything is confidential and under NDAs. They could also easily state how many companies they are working with by industry, and differentiate the stage of the engineering teams' involvement, e.g., proof-of-concept, prototyping, commercial products. For example: amongst the xx car manufacturers we are working with, yy% are POC, zz% are prototyping and aa% are commercial products; within the xx consumer electronics / appliance manufacturers we are working with, yy% are POC, zz% are prototyping and aa% are commercial products. The markets for these industries are so large that there is not much a competitor can glean from that. For example, there are several hundred motor vehicle manufacturers worldwide.

BRN Discussion Ongoing

- Thread starter TechGirl

BrainChip and Frontgrade Gaisler to Augment Space-Grade Microprocessors with AI Capabilities

LAGUNA HILLS, Calif. & GÖTEBORG, Sweden, May 05, 2024--BrainChip and Frontgrade Gaisler to Augment Space-Grade Microprocessors with AI Capabilities

IndepthDiver

Regular

TLDR: I think TENNs is based a lot on ABR's LMUs. ABR are a competitor, but not as big a one, as they don't do IP or currently use SNNs.

For those more technically minded:

Regarding TENNs, the presentation slides by Tony Lewis from the other week were pretty interesting.

The slides indicate TENNs is based on the Chebyshev polynomial, and it's compared to the Legendre polynomial on the same page. For reference, these are both from the family of Jacobi polynomials. Chebyshev are generally thought to converge faster (which is better) but there are a few applications where Legendre or other Jacobi polynomials are better. I find this really interesting because I think TENNs was highly inspired by the work from Applied Brain Research (ABR) on their Legendre Memory Unit (LMU).
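
For anyone who wants to check the "same family" point numerically, here is a minimal sketch in plain Python. It is not taken from the slides or from any BrainChip or ABR code; the function names are my own, and it relies only on the standard three-term recurrences. It evaluates Jacobi polynomials and confirms that Legendre is the case alpha = beta = 0, while Chebyshev (first kind) is the case alpha = beta = -1/2 after rescaling so the value at x = 1 is 1.

```python
import math


def jacobi(n, alpha, beta, x):
    """Evaluate the Jacobi polynomial P_n^(alpha, beta)(x) by its three-term recurrence."""
    p_prev = 1.0
    if n == 0:
        return p_prev
    p_curr = (alpha + 1) + (alpha + beta + 2) * (x - 1) / 2
    for k in range(1, n):
        s = 2 * k + alpha + beta
        lhs = 2 * (k + 1) * (k + alpha + beta + 1) * s
        a = (s + 1) * ((s + 2) * s * x + alpha**2 - beta**2)
        b = 2 * (k + alpha) * (k + beta) * (s + 2)
        p_prev, p_curr = p_curr, (a * p_curr - b * p_prev) / lhs
    return p_curr


def legendre(n, x):
    """Legendre P_n(x) via (k+1) P_{k+1} = (2k+1) x P_k - k P_{k-1}."""
    p_prev, p_curr = 1.0, x
    if n == 0:
        return p_prev
    for k in range(1, n):
        p_prev, p_curr = p_curr, ((2 * k + 1) * x * p_curr - k * p_prev) / (k + 1)
    return p_curr


def chebyshev(n, x):
    """Chebyshev T_n(x) via T_{k+1} = 2 x T_k - T_{k-1}."""
    t_prev, t_curr = 1.0, x
    if n == 0:
        return t_prev
    for _ in range(1, n):
        t_prev, t_curr = t_curr, 2 * x * t_curr - t_prev
    return t_curr


def chebyshev_from_jacobi(n, x):
    """T_n(x) as a Jacobi polynomial with alpha = beta = -1/2, rescaled to equal 1 at x = 1."""
    return jacobi(n, -0.5, -0.5, x) / jacobi(n, -0.5, -0.5, 1.0)


if __name__ == "__main__":
    for n in range(6):
        for x in (-0.9, -0.3, 0.0, 0.4, 1.0):
            assert math.isclose(jacobi(n, 0.0, 0.0, x), legendre(n, x), abs_tol=1e-12)
            assert math.isclose(chebyshev_from_jacobi(n, x), chebyshev(n, x), abs_tol=1e-12)
    print("Legendre and Chebyshev both match their Jacobi special cases")
```

This only shows the family relationship. It says nothing about which basis converges faster for a given signal, which depends on the application.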

LMUs were first proposed in a 2019 paper by Chris Eliasmith from ABR. Many on here will recall that PVDM won an award in 2021 following a presentation done on LMUs by Eliasmith (who got second place). PVDM won because a lot of shareholders from here voted for him, which I find ironic, as the last big breakthrough from Brainchip was TENNs (which I think are heavily based on the LMU algorithm).

That said, I think this was one of the better directions Brainchip could have taken. The LMU is an RNN, but one that overcomes many of the RNN limitations, and the ABR paper indicated it would work well on SNNs.

It's worth noting that ABR have produced a chip based on the LMU but they don't do IP so they have a smaller market. I also don't think their chip is an SNN. They have also licensed their LMU so anyone who wants to use it must pay them. Note that by using a different algorithm and possibly making other changes, Brainchip have bypassed this and potentially made their work patentable (you can't patent something that's been published in a paper). By integrating TENNs with the Akida platform, both further complement each other.

On a side note, one of the more promising architectures to replace transformers for certain tasks right now is Mamba, as it needs less training and works well with long sequences.

I initially thought Mamba may have been the next direction Brainchip would have taken for LLMs (after TENNs) but now I'm not so sure as one of the slides also does a comparison with Mamba, which it shows TENNs does relatively well against. Hopefully we find out more at the AGM.

Comparison research paper:

Original LMU paper:

Tony's slides (from Berlinlover):

ABR chip:

appliedbrainresearch.com

AI Chip Experts | Powering Future-Ready Artificial Intelligence with ABR

Explore ABR's Edge Time Series Processor (TSP1), a revolutionary AI chip for voice recognition, biomedical monitoring, and industrial IoT. Download the datasheet today.

Frangipani

Top 20

Here is further evidence - postdating that of cosors (02/2022) - that AKD1000 has been used for research at FZI (Forschungszentrum Informatik / Research Centre for Information Technology) in Karlsruhe, Germany.

Julius von Egloffstein is presently both an M.Sc. student at Karlsruhe Institute of Technology (KIT) and a research assistant at FZI. The technical university and the non-profit research institute FZI collaborate closely, and hence quite a few KIT students will do research for their Bachelor’s or Master’s theses at FZI, like this young gentleman did two years ago, when he worked on his Bachelor’s thesis titled Application Specific Neural Branch Prediction with Sparse Encoding (for which he got a perfect score, by the way).

And look what kind of neuromorphic hardware he used for evaluation:

View attachment 60120

It is worth noting that FZI researchers are not working in an ivory tower - instead, their research is all about applied computer science and technology transfer to companies and public institutions:

FZI Forschungszentrum Informatik - Wikipedia

en.m.wikipedia.org

View attachment 60227

View attachment 60180

View attachment 60182

View attachment 60183

FZI is currently looking for a research assistant in the field of “Neuromorphic Computing in Edge Systems”, with a focus on automated and autonomous driving. It is a two year fixed-term contract, which may or may not be renewed.

Even those of you who don’t speak German can spot intriguing terms such as spiking neural networks, event-based computing, near-memory computing, biosignals and predictive maintenance. There is also a reference to intelligent traffic junction systems for automated and autonomous driving.

Doesn’t that sound very akida-esque?!

https://karriere.fzi.de/Vacancies/401/Description/1 (German only)

FZI currently has a number of other job openings that involve research on SNNs - mostly aimed at students looking for a topic for either their B.Sc. or M.Sc. theses (topics range from crowd monitoring, development of innovative neuromorphic hardware, event-based radar processing, vital sign analysis during sleep, the development of an EEG-based BCI to analyse colour vision to event-based sensors in service robots and mobile manipulators), but to me the job description above is the one most likely to involve Akida due to those specific terms used. Some ongoing projects at FZI involving neuromorphic technology have Intel as their partner (e.g. GreenEdge).

I also discovered an interesting workshop on “Application of Intelligent Infrastructure for Automated Driving”, scheduled for June 2 and co-organised by FZI, Bosch and Heilbronn University of Applied Sciences. It won’t take place in Germany, though, but on Jeju Island in Korea, at the fully integrated resort Jeju Shinhwa World, as part of the IEEE Intelligent Vehicles Symposium 2024 (https://ieee-iv.org/2024/ - weirdly, the location is only given in the German version of the workshop announcement).

https://www.fzi.de/en/veranstaltung...lligent-infrastructure-for-automated-driving/ (English version)

Just a gentle reminder of my previous post on FZI:

“It is worth noting that FZI researchers are not working in an ivory tower - instead, their research is all about applied computer science and technology transfer to companies and public institutions.”

MadMayHam | 合氣道

Regular

Ford would have been in the POC phase. They could provide a general update like I mentioned earlier, no need for names. I'm actually expecting a board spill given the last AGM results and the lack of visible progress in between.

Hi IDD,

Just ignore her - so rude!

The patent specifications give a comprehensive background of the development of AI time series implementations.

WO2023250092A1 METHOD AND SYSTEM FOR PROCESSING EVENT-BASED DATA IN EVENT-BASED SPATIOTEMPORAL NEURAL NETWORKS 20220622

Paras [0004] to [0011] set out the SotA as known to the inventors as of mid-2022.

Paras [0114] to [0127] discuss various ways of implementing the invention, including as you say Chebyshev polynomials [0126] as a subset of orthogonal polynomials.

Claim 1 sets out the primary inventive concept, which is much broader than Chebyshev. In fact, orthogonal polynomials only come in in subordinate claim 10.

A system to process event-based data using a neural network, the neural network comprising

a plurality of neurons associated with a corresponding portion of the event-based data received at the plurality of neurons, and

one or more connections associated with each of the plurality of neurons,

the system comprising:

a memory; and

a processor communicatively coupled to the memory,

the processor being configured to:

receive, at a neuron of the plurality of neurons, a plurality of events associated with the event-based data over the one or more connections associated with the neuron,

wherein

each of the one or more connections is associated with a first kernel and a second kernel,

and wherein

each of the plurality of events belongs to one of a first category or a second category,

determine, at the neuron, a potential by processing the plurality of events received over the one or more connections,

wherein

to process the plurality of events, the processor is configured to:

when the received plurality of events belong to the first category, select the first kernel for determining the potential,

when the received plurality of events belong to the second category, select the second kernel for determining the potential,

and

generate, at the neuron, output based on the determined potential.
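
To make the claim language above a bit more concrete, here is a toy sketch in Python. It is only my reading of the claim wording, not BrainChip's implementation: the class and function names, the dot-product way of computing the potential, and the threshold output rule are all assumptions made for illustration. The one thing it tries to mirror is the claimed step of picking the first or second kernel of a connection according to the category of the incoming events.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Event:
    value: float
    category: int  # 1 = "first category", 2 = "second category" (labels are assumed)


@dataclass
class Connection:
    first_kernel: Sequence[float]   # used for first-category events
    second_kernel: Sequence[float]  # used for second-category events

    def select_kernel(self, category: int) -> Sequence[float]:
        """Pick the kernel that matches the event category, as in the claim."""
        if category == 1:
            return self.first_kernel
        if category == 2:
            return self.second_kernel
        raise ValueError(f"unknown event category: {category}")


def neuron_potential(connection: Connection, events: List[Event]) -> float:
    """Determine a potential from events that all share one category.

    Assumption: the potential is a weighted sum of event values with the
    selected kernel; the claim itself does not fix this formula.
    """
    if not events:
        return 0.0
    categories = {event.category for event in events}
    if len(categories) != 1:
        raise ValueError("this sketch expects all events to share one category")
    kernel = connection.select_kernel(categories.pop())
    return sum(weight * event.value for weight, event in zip(kernel, events))


def neuron_output(potential: float, threshold: float = 1.0) -> int:
    """Generate an output from the potential (a simple threshold, assumed)."""
    return 1 if potential >= threshold else 0


if __name__ == "__main__":
    conn = Connection(first_kernel=[0.5, 0.5, 0.5], second_kernel=[1.0, -1.0, 1.0])
    first = [Event(1.0, 1), Event(1.0, 1), Event(1.0, 1)]
    second = [Event(1.0, 2), Event(1.0, 2), Event(0.0, 2)]
    print(neuron_output(neuron_potential(conn, first)))   # potential 1.5, output 1
    print(neuron_output(neuron_potential(conn, second)))  # potential 0.0, output 0
```

The same input values give different potentials depending purely on the category, which is the point of having two kernels per connection.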

The core of this TeNN invention is that the system determines whether the events are "spatio" or "temporal" and directs them to the appropriate processing kernel.

To demonstrate that TeNN is derived from ABR's system, it would be probative to show that their inventive concept had been appropriated, bearing in mind that Legendre and Chebyshev polynomials have been around for a long time.

To me, TeNN seems to be an entirely different invention from ABR's system.

This is one of ABR's Legendre Memory Unit patents from 2019:

US11238345B2 Legendre memory units in recurrent neural networks 20190306+

Applicants APPLIED BRAIN RES INC [CA]

Inventors VOELKER AARON RUSSELL [CA]; ELIASMITH CHRISTOPHER DAVID [CA]

1. A method comprising: defining, by a computer processor, a node response function for each node in a network, the node response function representing a state over time, wherein the state is encoded into one of binary events or real values, each node having a node input and a node output;

defining, by the computer processor, a set of connection weights with each node input;

defining, by the computer processor, a set of connection weights with each node output;

defining, by the computer processor, one or more Legendre Memory Unit (LMU) cells having a set of recurrent connections defined as a matrix that determines node connection weights based on the formula:

View attachment 62254

where q is an integer determined by the user, and i and j are greater than or equal to zero; and

generating, by the computer processor, a recurrent neural network comprising the node response function for each node, the set of connection weights with each node input, the set of connection weights with each node output, and the LMU cells by training the network as a recurrent neural network by updating a plurality of its parameters or by fixing one or more parameters while updating the remaining parameters.
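
The formula in the claim is an image that did not come through as text. As a rough guide only, the sketch below builds the recurrent matrix in the form given in the published LMU paper (Voelker, Kojić and Eliasmith, 2019), where entry (i, j) is (2i + 1) times -1 when i < j and (2i + 1) times (-1)^(i - j + 1) otherwise. I am assuming the patent formula has the same shape; check the patent itself before relying on it. The function name is hypothetical, and the paper additionally divides by a window length theta, which is left out here.

```python
from typing import List


def lmu_matrix(q: int) -> List[List[int]]:
    """Build the q x q LMU recurrent matrix in the form used in the 2019 LMU paper.

    Assumption: a[i][j] = (2i + 1) * (-1 if i < j else (-1) ** (i - j + 1)),
    with i and j starting at zero. Scaling by the window length is omitted.
    """
    if q < 1:
        raise ValueError("q must be a positive integer")
    rows = []
    for i in range(q):
        row = []
        for j in range(q):
            sign = -1 if i < j else (-1) ** (i - j + 1)
            row.append((2 * i + 1) * sign)
        rows.append(row)
    return rows


if __name__ == "__main__":
    for row in lmu_matrix(3):
        print(row)
    # [-1, -1, -1]
    # [3, -3, -3]
    # [-5, 5, -5]
```

Note that the matrix is fixed by q alone and is not learned, which fits the claim wording about "fixing one or more parameters while updating the remaining parameters".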

Pom down under

Top 20

When’s the latest you can vote?

IndepthDiver

Regular

Hi Dio,

Thanks, that was great. Patents can be very revealing (but only if you know how to look).

The part about the 2 kernels was interesting. From the slide contents I suspect you're right about what each data type refers to. Which means TENNs is just treated as an add-on to the Akida platform and everything works with no intervention required by the user. It's pretty clever the way it's been configured, as it automatically handles switching between images and other streams like audio seamlessly, ensuring optimal efficiency.

Slide on TENNs:

Replacement for many Transformer tasks

• Language Models

• Time-series Data

• Spatiotemporal Data

Well said Pradeep from TensorWave

www.linkedin.com

siliconcanals.com

tensorwave.com

BrainChip and Frontgrade Gaisler to Augment Space-Grade Microprocessors | Pradeep Reddy 🌊 posted on the topic | LinkedIn

BrainChip and Frontgrade Gaisler to Augment Space-Grade Microprocessors with AI Capabilities These next generation microprocessors would include BrainChip’s AI processing capabilities, thereby enabling a considerable step forward in the computing resources available for space-borne systems. In...

BrainChip and Frontgrade Gaisler to Augment Space-Grade Microprocessors with AI Capabilities - Silicon Canals

LAGUNA HILLS, Calif. & GÖTEBORG, Sweden--(BUSINESS WIRE)--

TensorWave: AI & HPC Cloud with AMD Instinct™

Accelerate LLMs, HPC workloads, and AI research with AMD Instinct GPUs on TensorWave's scalable cloud.

The podcasts would blow its memory anyhoo...

And some on this forum have the audacity to suggest that the Board and Executive Management Team have been or are sitting on their hands, enjoying some sort of lifestyle in the Bahamas. Yesterday's news announcement just shows the respect that our team is gaining in the space field alone!

If Akida can perform in the harshness of space, which I'm sure it will excel in, doesn't that open up even more doors here on Earth? But once again, producing radiation-hardened microprocessors isn't a 6 month sign-off just to appease shareholders; it's a step-by-step process, isn't it?

For those who wish to remove the Board, just remember that we currently have 3 NEDs based here in Australia, which I personally feel is extremely important. You may ask why? Well, having no Directors based here in Australia, and say only in the US, just doesn't sit well with me, simple as that.

Attempting to remove our original founder, the genius in Peter, would be another mistake. Despite retiring, I'm 100% sure Peter is still very much hands-on with his continuing research and as Chair of the SAB. Try thinking of the bigger picture; too many, I believe, are just too focused on the now. Sean, as I have mentioned, is really only halfway through his business plan. If the Board thought that he was leading us all down the garden path, he would have been removed already, that I'm also 100% sure of. Did Lou just quit of his own accord? You decide the answer to that.

Have a great week ahead........Tech

AKD II just waking up !

Baron Von Ricta

Regular

I believe that ASX listed companies must have a minimum of 3 directors, at least 2 must ordinarily reside in Australia.

It was/is my view that LOU left on his own account for medical reason after sustaining significant burns to both his hands .......

HopalongPetrovski

I'm Spartacus!

Or......maybe this is what happened to poor Lou, after the casino deals got the old kibosh......

buena suerte :-)

BOB Bank of Brainchip

Very nice

toasty

Regular

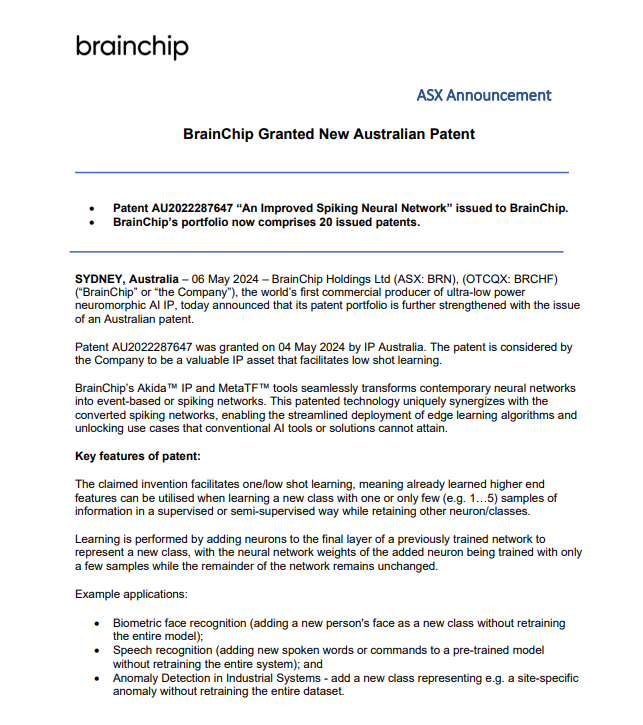

Am I reading this right that this patent means that new classes can be added without affecting those that are existing. And as we know, our CNN/SNN converter has the ability to "re-use" existing models. Soooooo, does that mean potential objections to "change" are reduced or eliminated? Asking for a friend...........

Hey Diogenese,

Would love to hear your explanation in layman's terms of what this patent means. Also, I assume it has been applied for in other countries too and Australia is the first cab off the rank to approve it.

It was/is my view that LOU left on his own account for medical reason after sustaining significant burns to both his hands .......

Lou confided in me prior to the market about his burns accident, but that was only part of it. His hands were healing well. I would have to dig up the email, which I still have among the thousands and thousands.

This morning I relistened to Sean's podcast from the other day, and with regard to Rob leaving, his answer wasn't an answer; in my opinion he probably should have just abstained. We all know now that Rob has moved on to another challenge...good on him.
