BRN Discussion Ongoing

- Thread starter TechGirl

- Start date

This guy with the red shirt looks like Georgios Tsoukalos… “and WE HAVE the evidence that extraterrestrial life used akida THOUSANDS OF YEARS AGO… its PROOFED by ancient Sumerian texts which are still not discovered… but we know it!”

DerAktienDude

Regular

Jesus Christ, that was quite an outrage. Where should I even begin?

Regarding the excitement:

“Brilliant quarterly update”, for example, comes to mind.

Fact Finder, the bankruptcy and insolvency issue is noted, and it’s something I learned today. I was in the wrong.

How you came to the conclusion that I posted something criminal, though, I have no idea.

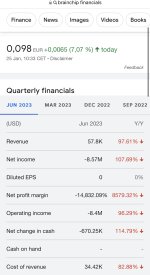

A company with a -14,000% net profit margin in the penultimate 4C is *closer*, I repeat, closer, to insolvency than it is to being profitable. That’s not rocket science and in no way against the law.

Even our CEO stated that this year makes or breaks the company. Do you want to file a lawsuit because our own CEO acknowledges the situation we’re in?

Altering what I’ve written, changing the context and claiming that I said the company is *close* (close!) to bankruptcy, hence insolvency: that’s unlawful. That’s spreading misinformation at its finest.

Do I live closer to Egypt than you do? Yes, I do. Do I live close to Egypt? No, I don’t.

How you came up with the idea that I claimed we’re partnered with Samsung is something you’ll have to explain to us.

This is what I wrote: “The issue is, if Samsung is not using us through ARM or whoever else it might be, we compete against a company with its own foundries.”

Tell me, where do I claim a partnership with Samsung? Sounds made up to me.

Hell, I’ll even link it for your convenience.

thestockexchange.com.au

If we’re not involved through a third party that uses Akida (Arm, MegaChips or whoever else it might be; not a partnership with Samsung, a third party), we’re gonna have some serious issues.

Stop claiming things I supposedly said but didn’t. And for God’s sake, stop altering my sentences to fit your narrative and changing the context of what I’ve written. That really pissed me off!

Seeing all the cheerleaders mindlessly agreeing with everything you say and lashing out at anyone who thinks otherwise makes me question why I’m even here. This place has turned into an echo chamber that allows only a single point of view. I hope that’s a wake-up call for some.

I always held you in high regard, since you’ve been here since day one with great research, but threatening me, altering my sentences and the context of what I’ve written, and then suggesting I not continue in this vein left an enormous dent.


stockduck

Regular

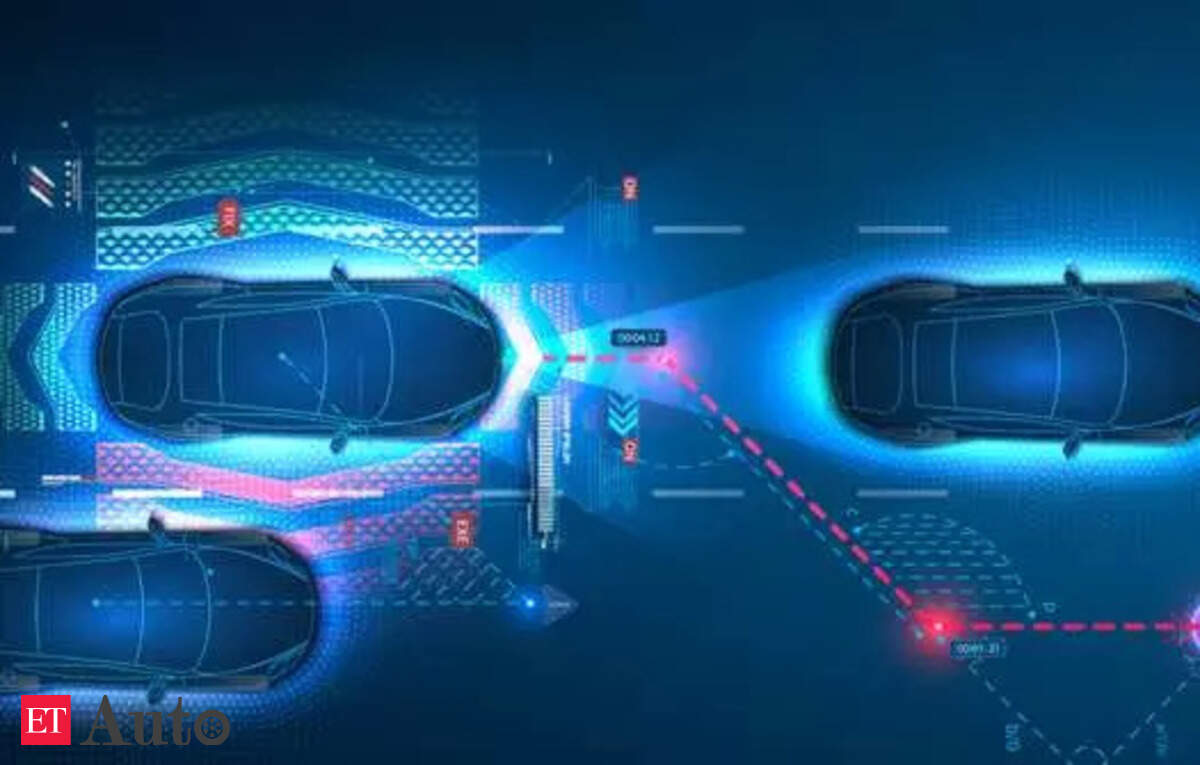

This is one of the most interesting articles...

Valeo bets on ADAS, electrification; showcases new tech at CES - ET Auto

Valeo: The France-based Tier-1 supplier has created hardware and perception software functions for LiDAR technology to create real-time visuals for autonomous vehicle systems. It is now showcasing Scala 3, its 3rd generation LiDAR (Light Detection & Ranging), which claims to spot and react to...

auto.economictimes.indiatimes.com

The more I read it, the more ideas come to mind about how many places Akida IP could be built in.

What if Valeo hasn’t “lost the presumed business” on LiDAR systems with MB... and only the technology they are now working on together will be different?

“to drive thermal management strategies of EVs for extended driving range and extended battery lifespan...” with software from Valeo?

It is only speculation, but 2028 will be exciting, I guess.

Read more at:

https://auto.economictimes.indiatim...ff0&utm_medium=smarpshare&utm_source=linkedin


Tothemoon24

Top 20


Patrick Ahern on LinkedIn: BrainChip’s All things AI Podcast Live from CES with Jeff Bier

It is great to hear Jeff Bier's insights on the Computer Vision, Machine Learning, and Perceptual AI market. Please take a listen to BrainChip's "All Things…

David holland

Regular

And yes, that’s the problem here: if you put a negative comment up, it doesn’t fit the bill of the forum and you’re a negative arse. Facts are facts. Yes, exciting times, but the balance needs some cash.

Pom down under

Top 20


The video interview is worth watching; it gets interesting around the 8-minute mark.

www.edge-ai-vision.com

www.linkedin.com

The State of AI and Where It’s Heading in 2024 - Edge AI and Vision Alliance

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. An interview with Qualcomm Technologies’ SVP, Durga Malladi, about AI benefits, challenges, use cases and regulations It’s an exciting and dynamic time as the adoption of...

www.edge-ai-vision.com


Edge AI and Vision Alliance on LinkedIn: The State of AI and Where It’s Heading in 2024

New Blog Post from Qualcomm: "The State of AI and Where It’s Heading in 2024"


GStocks123

Regular

The year we either sink or swim… If there’s no revenue from Edgebox, MegaChips or Renesas, I believe we’re fooked.

The excitement over 780k in revenue in this forum is actually frightening. At least this 4C isn’t as bad as the prior one. Logically speaking, this company is closer to bankruptcy than it is to being profitable. But hopes are high. Let’s see what 2024 brings.

David holland

Regular

Don’t worry, ARM will buy us out.

The year we either sink or swim… if no revenue from Edgebox, MegaChips or Renesas I believe we’re fooked.

GStocks123

Regular

I bloody hope so!

Don’t worry, ARM will buy us out.

Fact Finder

Top 20

Hi All

As it is time for wild predictions about BrainChip’s imminent collapse, this is mine:

China will invade Taiwan. The US will respond, and China will declare war on the US and its allies. Donald Trump will become President and do a deal that ends the war between China and the US on the basis that China gets Taiwan, Japan and Australia.

Australia is overrun and the ASX collapses. All ASX-listed companies and their assets are forfeited to the CCP, and we are all penniless and in detention camps.

Our PM just happens to be overseas in the USA. He claims asylum and is appointed economic adviser to the President.

Laughable, yes, but as sensible as any other prediction I have read this morning.

1. Samsung, with its own foundries, will destroy BrainChip unless BrainChip IP is being used by Samsung through ARM or otherwise.

“Samsung is the second-biggest foundry globally by revenue, with a 17.3% market share compared to 52.9% for TSMC, according to TrendForce”

This means roughly 82% of the world’s foundry-made chips (by revenue) are not made by Samsung.

ARM supplies 90% of the chips used in mobile phones. This means Samsung has less than ten percent of the market for chips in mobile phones. There are others supplying chips within that 10% market space, out-competing Samsung.

BrainChip supplies IP which could be used in 82% of all chips made by foundries, but if it is not being used by Samsung, it might as well shut up shop and go home.

Makes complete sense apparently.

2. BrainChip is partnered with dozens of companies, but if revenue does not come from Renesas, VVDN and MegaChips this year, it’s all over. The disclosed relationships with OnSemi, Infineon and Microchip, companies with a combined capitalisation of 112 billion dollars, mean nothing. The partnership with Mercedes-Benz isn’t worth a cracker. The Unigen AKIDA Cupcake is a write-off. The EDGX and ANT61 space applications are of no consequence. Tata Elxsi driving AKIDA technology use in medical and industrial applications? Forget it.

Valeo, dead in the water, does not rate a mention. ARM? Who has even heard of it?

The list of dismissals this wild prediction assumes even exceeds what Warnie used to do to an English Ashes team.

If you want to make unsupported, idiotic statements on this investment forum, yes, you will be attacked.

You will be ridiculed and hounded.

You might even be a genuine shareholder, unhappy and hurting as a result of the share price, but the posters who come here are in the same boat.

In case you didn’t know, there are not two classes of shares where your shares are 15.5 cents and mine are $10.00. Every shareholder is holding ASX:BRN at 15.5 cents.

Allowing illogical, unsupported rubbish does not improve investor sentiment, nor does it encourage new investors to enquire more deeply, which could improve the prospects of recovery over time.

There is a place for you to go and rant; it’s free, does not care about accuracy and is open 24/7. No one will fact-check you there; you can go for it till the cows come home.

This, of course, is not news to you. The fact that you come here when, by your own admission, you know you will be attacked raises concerns.

Maybe you are like those who sit among the rival team’s supporters and barrack for their own team while hurling abuse at those around them, then complain when a beer is emptied over their head.

My opinion only DYOR

Fact Finder


They change their opinion like underwear… it’s a crapside

Motley Fool seems to have changed their tune a bit on BrainChip


Another quote from the BRN website, from a post-CES write-up.

I posted this link yesterday. https://brainchip.com/designing-smarter-and-safer-cars-with-essential-ai/

"AKIDA also enables sophisticated voice control technology that instantly responds to commands – as well as gaze estimation and emotion classification systems that proactively prompt drivers to focus on the road. Indeed, the Mercedes-Benz Vision EQXX features AKIDA-powered neuromorphic AI voice control technology which is five to ten times more efficient than conventional systems. "

My bold above.

It’s no wonder BRN also says: “That’s why automotive companies are untethering edge AI functions from the cloud – and performing distributed inference computation on local neuromorphic silicon using BrainChip’s AKIDA.” My bold.

Note: automotive companies - plural

As soon as the Mercedes/BRN connection became public, other automakers would have zoomed in on BRN. That is just how business works. No one wants to be caught short.

There are now quite a few direct references to Mercedes on the BRN website. There is now no doubt whatsoever that BRN and Mercedes are working together. Also, other automakers are at the very least looking at our products.

Diehard, no-credibility downrampers will just say BRN dreams it all up.

This combined with other known links such as Tata Elxsi could end up being huge.


Wow, I’m surprised you didn’t mention Putin in your prediction, because he is actually responsible for everything… Last week in a restaurant I heard someone drop a plate with an expensive piece of steak on the floor and scream “PUUUUTIIIIN”…


Or was it Puta?
