Fox151


I can't believe nobody's posted this yet... or tried to contact the bloke and tell him about us...

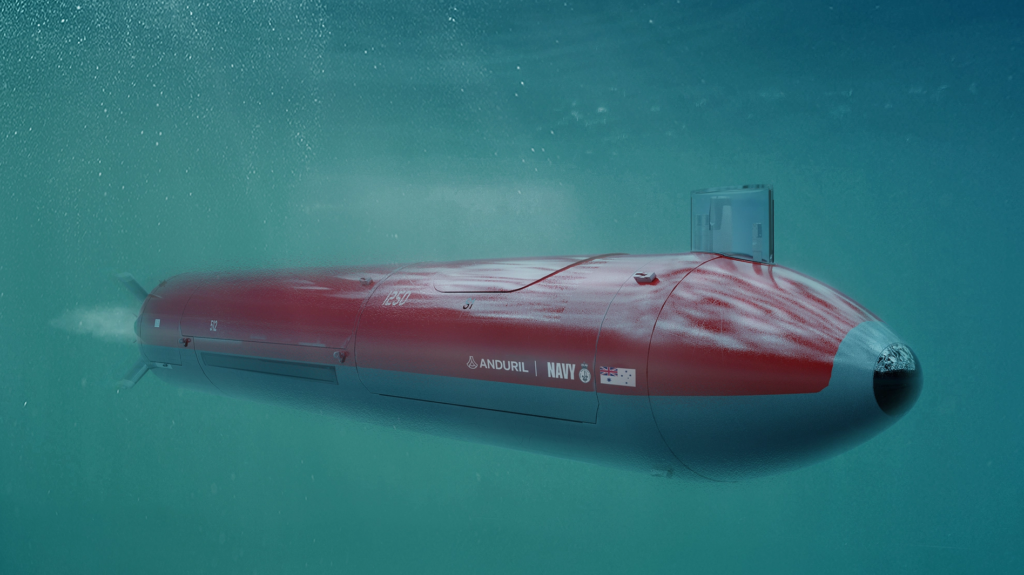

Hi Lex, further to your research, I came across this today on the Breaking Defense website. If Akida is not being trialled/used in this, then it certainly should be.

I was looking into military edge applications for Akida and found Anduril, a Silicon Valley military AI upstart. Its core system is Lattice OS, an autonomous sense-making and command & control platform.

“Lattice uses technologies like sensor fusion, computer vision, edge computing, and machine learning and artificial intelligence to detect, track, and classify every object of interest in an operator's vicinity.”


Anduril: Lattice (www.anduril.com)

Now imagine a ‘neuromorphic bomb’, deployed from either air or sea, that could drop thousands of smart sensors in defensive or offensive situations to make use of Lattice.

The Australian Defence Force is already interested in their Dive-LD AUV. Anduril has established an Australian headquarters in Sydney and is preparing component manufacturing across multiple sites.

Imagine 10 AUVs released from a mother sub, each carrying 1,000+ Akida-equipped sensor "babies" that get deployed deep into hostile territory. These tennis-ball-sized sensors could return, or be collected again by the AUV after the mission. If WWIII broke out, I know I'd want a few thousand of them in grid formation surrounding all key military infrastructure.

Anduril: Dive-LD (www.anduril.com)

10x Microsoft = 20 trillion plus, and that is in US$.

If I can repeat and remind everybody what Bill Gates has said previously:

“If you invent a breakthrough in artificial intelligence, so machines can learn, that is worth 10 Microsofts,”

Who has machine learning?

Who has on-chip learning?

Who has one-shot learning?

Who has on-chip convolution?

Yeah that’s right…BRAINCHIP!

Only a matter of time!!!

Can someone please just buy all my shares now then @ $100 each so I can retire and move to the bloody Bahamas!

I don't agree that BRN just want ‘Akida IP to be tied into their smallest chip’, i.e. just MCUs. There's actually a mistake in this anyway, since Renesas licensed 2 nodes with multi-pass to go right into air-quality sensors. BRN have created a neural fabric IP that is so configurable it can be licensed with as few as 2 nodes, or as many as 240 nodes for very complex inference where the power budget is less important. Multi-pass lets the 2 nodes provide more inference capability while keeping a very low power budget, but there's no reason why BRN wouldn't license for high-end complexity, since they can charge proportionately more for the extra nodes. I agree that at the moment they are probably pretty focussed on the far edge and proliferation, but their longer-term plans would not rule out more complex applications. AIMO.

On the technical front, here is my take:

. Because we are trying to take over the edge, Akida's performance is measured not so much in TOPS as in TOPS/W (i.e. per watt).

. We really want Akida IP to be tied into their smallest chip (so just an MCU), so that it can be placed adjacent to each and every sensor.

(So the SiFive E2 would be the best choice.)

. This Akida-informed MCU will pass on just the information required to whatever vector-based trained neural network is appropriate.

. So what I'm praying for is an announcement that says "SuchandSuch SoC developer publicly announces their adoption of SiFive and Akida IP to produce the world's smallest and most versatile sensor MCU".

. So the question is: which sensor manufacturer, apart from Renesas (who seem to have gone down the Arm road), is big enough to push for this?

Ha ha, keep dreaming mate.

10x Microsoft = 20 trillion plus, and that is in US$.

My guess is that it will have a 1 trillion market cap in 2030.

My opinion only

DYOR

TheDon


Announcement? What is that???

There is no announcement for this beautiful Monday!

Awesome, just bought another parcel of shares! Silly people for selling.

Damn it, we are going back down. Can we get a freaking update? Even Nviso has more progress updates for its investors on its website.

Tend to agree. I've been in since 2016 and don't remember such a long "blackout" period... I sure hope the news will be spectacular when it eventually sees the light of day.