
BRN Discussion Ongoing

- Thread starter TechGirl


Investig8ed

Regular

What was that company's name? I came across them at an investor road show in Melbourne that I attended only to meet Peter, as I was already a shareholder.

There is a Western Australian company which has developed a low-cost system for converting natural gas to hydrogen, using iron ore as a catalyst while capturing the carbon as graphite, which can be used, for example, in the manufacture of steel.

They are currently testing a pilot plant in WA.

With all the zero carbon objectives, I think that gas producers would embrace this tech.

Food4 thought

Regular

Hi ya all

NVIDIA must know us pretty well, by the looks of this thank-you note from Edge Impulse.

And this morning I read something about working on, or with someone on, the AWS platform.

Mmm

The mind boggles

Edge Impulse supports our customers in bringing Edge AI to their devices across an incredible range of use cases and industries - but my heart will always be with our Partners and our THRIVING ecosystem who provide all of the pieces to make that happen. Edge AI is a team effort and I'm privileged to be part of this exciting extended team.

THANK YOU PARTNERS

AAEON Advantech USA Alif Semiconductor Ambiq Arm Amazon Web Services (AWS) Blues BrainChip Capgemini Invent Ceva, Inc. Golioth IAR Microchip Technology Inc. Network Optix Nordic Semiconductor NVIDIA OpenMV LLC Synapse Product Development Think Silicon, an Applied Materials company Wind River

Adam B. Amir Sherman Joshua Buck Zach Shelby Spencer Huang Raquel L. Roberts, MBA Keelin Murphy David Tischler Teague Gudemann

Love is in the air

Are we ready to fly to the moon and beyond?


TheDrooben

Pretty Pretty Pretty Pretty Good

Hazer Group (HZR)??? I have been looking into them for about 12 months but have been buying more BRN while the SP is where it is. Will probably make an initial investment in HZR soon. The tech looks interesting; just "watching the financials"... mmmm

What was that company's name? I came across them at an investor road show in Melbourne that I attended only to meet Peter, as I was already a shareholder.

I've heard that somewhere before

Happy as Larry

Frangipani

Top 20

Edge AI-Driven Vision Detection Solution Introduced at 500 Convenience Store Locations to Measure Advertising Effectiveness | News Releases | Sony Semiconductor Solutions Group

www.sony-semicon.com

April 24, 2024

Edge AI-Driven Vision Detection Solution Introduced at 500 Convenience Store Locations to Measure Advertising Effectiveness

Sony Semiconductor Solutions Corporation

Atsugi, Japan, April 24, 2024 —

Today, Sony Semiconductor Solutions Corporation (SSS) announced that it has introduced and begun operating an edge AI-driven vision detection solution at 500 convenience store locations in Japan to improve the benefits of in-store advertising.

Edge AI technology automatically detects the number of digital signage viewers and how long they viewed it.

SSS has been providing 7-Eleven and other retail outlets in Japan with vision-based technology to improve the implementation of digital signage systems and in-store advertising at their brick-and-mortar locations as part of their retail media*1 strategy. To help ensure that effective content is shown for brands and stores, this solution gives partners sophisticated tools to evaluate the effectiveness of advertising on their customers.

As part of this effort, SSS has recently introduced a solution that uses edge devices with on-sensor AI processing to automatically detect when customers see digital signage, count how many people paused to view it, and measure the percentage of viewers. The sensor's AI capabilities collect data points such as the number of shoppers who enter the detection area, whether they saw the signage, the number who stopped to view it, and how long they watched. The system does not output image data capable of identifying individuals, making it possible to provide insightful measurements while helping to preserve privacy.
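The data points listed above can be aggregated on the back end with simple counting. The sketch below is a hypothetical illustration and does not reflect Sony's actual metadata format or the AITRIOS API. It assumes each frame yields one anonymous record per tracked person, using an invented `Observation` type with `frame`, `track_id` and `looking` fields. From those records it derives how many people entered, how many saw the signage, how many stopped, and each person's viewing time, all without using any image data.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Observation:
    """Hypothetical per-person, per-frame metadata record (no image data)."""
    frame: int     # frame index
    track_id: int  # anonymous ID, valid only while the person is in view
    looking: bool  # True if the person faces the signage in this frame


def summarize(observations: Iterable[Observation], fps: float = 10.0,
              min_stop_s: float = 1.0) -> Dict[str, object]:
    """Aggregate per-frame records into signage statistics."""
    looking_frames: Dict[int, int] = {}  # track_id -> frames spent looking
    for obs in observations:
        looking_frames.setdefault(obs.track_id, 0)
        if obs.looking:
            looking_frames[obs.track_id] += 1

    entered = len(looking_frames)
    view_s = {tid: n / fps for tid, n in looking_frames.items()}  # total viewing time
    saw = sum(1 for s in view_s.values() if s > 0)
    stopped = sum(1 for s in view_s.values() if s >= min_stop_s)
    return {
        "entered": entered,
        "saw_signage": saw,
        "stopped_to_view": stopped,
        "view_rate": saw / entered if entered else 0.0,
        "view_seconds": view_s,
    }


# Three shoppers at 10 fps: one watches for 2.5 s, one never looks, one glances for 0.3 s.
records = (
    [Observation(f, 1, f >= 5) for f in range(30)]
    + [Observation(f, 2, False) for f in range(10)]
    + [Observation(f, 3, f < 3) for f in range(10)]
)
print(summarize(records))
```

The sketch counts total looking frames rather than the longest uninterrupted stretch, which is a simplification. A real deployment might require continuous dwell before counting someone as having stopped.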

Click here for an overview video of the solution and interview with 7-Eleven Japan.

Solution features:

- IMX500 intelligent vision sensor delivers optimal data collection, while helping to preserve privacy.

SSS’s IMX500 intelligent vision sensor with AI-processing capabilities automatically detects the number of customers who enter the detection area, the number who stopped to view the signage, and how long they viewed it. The acquired metadata (semantic information) is then sent to a back-end system where it’s combined with content streaming information and purchasing data to conduct a sophisticated analysis of advertising effectiveness. Because the system does not output image data that could be used to identify individuals, it helps to preserve customer privacy.

- Edge devices equipped with the IMX500 save space in store.

The IMX500 is made using SSS’s proprietary structure with the pixel chip and logic chip stacked, enabling the entire process, from imaging to AI inference, to be done on a single sensor. Compact, IMX500-equipped edge devices (approx. 55 x 40 x 35 mm) are unobtrusive in shops, and compared to other solutions that require an AI box or other additional devices for AI inference, can be installed more flexibly in convenience stores and shops with limited space.

- The AITRIOS™*2 platform contributes to operational stability and system expandability.

The IMX500 also handles AI computing, eliminating the need for other devices such as an AI box, resulting in a simple device configuration, streamlining device maintenance and reducing costs of installation.

*1 A new form of advertising media that provides advertising space for retailers and e-commerce sites using their own platforms

*2 AITRIOS is an AI sensing platform for streamlined device management, AI development, and operation. It offers the development environment, tools, features, etc., which are necessary for deploying AI-driven solutions, and it contributes to shorter roll-out times when launching operations, while ensuring privacy, reducing introductory cost, and minimizing complications. For more information on AITRIOS, visit: https://www.aitrios.sony-semicon.com/en

About Sony Semiconductor Solutions Corporation

Sony Semiconductor Solutions Corporation is a wholly owned subsidiary of Sony Group Corporation and the global leader in image sensors. It operates in the semiconductor business, which includes image sensors and other products. The company strives to provide advanced imaging technologies that bring greater convenience and fun. In addition, it also works to develop and bring to market new kinds of sensing technologies with the aim of offering various solutions that will take the visual and recognition capabilities of both human and machines to greater heights. For more information, please visit https://www.sony-semicon.com/en/index.html.

AITRIOS and AITRIOS logos are the registered trademarks or trademarks of Sony Group Corporation or its affiliated companies.

Microsoft and Azure are registered trademarks of Microsoft Corporation in the United States and other countries.

All other company and product names herein are trademarks or registered trademarks of their respective owners.

Here is some wild speculation: Could this possibly be a candidate for the mysterious Custom Customer SoC featured in the recent Investor Roadshow presentation (provided the licensing of Akida IP was done via MegaChips)?

Post in thread 'AITRIOS'

https://thestockexchange.com.au/threads/aitrios.18971/post-31633


Visit us at Booth 1947B, where we will be showcasing exciting demos, including our Temporal Event-based Neural Networks (TENNs) and the Raspberry Pi 5 with Face and Edge Learning. Don’t miss the opportunity to connect and learn more about our innovative solutions.

The sold-out Raspberry Pi Akida Dev Kit was based on a Raspberry Pi 4…


Raspberry Pi offers an AI Kit with a Hailo AI acceleration module containing an NPU for use with the Raspberry Pi 5.


Maybe there has been something similar in the works for our company?

Could the mysterious Akida Pico have anything to do with the Raspberry Pi Pico series of microcontrollers?

Could Akida by any chance be the integrated AI accelerator used in the newly launched Raspberry Pi AI Camera, jointly developed by Raspberry Pi and Sony Semiconductor Solutions (SSS)? (For the sake of completeness, I should, however, add that while Sony’s IMX500 Camera Module is being promoted as having on-chip AI image processing, there is no specific mention of this involving neuromorphic technology.)

I am afraid I can’t answer the legitimate question regarding potential revenue, though. It is a fact that Sony Semiconductor Solutions has not signed a license with us, so it would have to be a license through either MegaChips or Renesas (both of which also happen to be Japanese companies).

I guess - as usual - we will have to resort to watching the financials, unless we find out sooner one way or the other…

So here goes my train of thought: Yesterday (Sept 30) was the Raspberry Pi AI Camera’s official launch - coincidentally (or not?), this happened to be the day when the BRN share price soared without any official news as a catalyst… Could there have been some kind of leak, though?

Also, BrainChip has promised “exciting demos, including our Temporal Event-based Neural Networks (TENNs) and the Raspberry Pi 5 with Face and Edge Learning” for Embedded World North America, taking place from October 8-10, 2024, at the Convention Center in Austin, TX.

The now sold-out Akida Raspberry Pi Dev Kit was based on the Raspberry Pi 4, so they won’t be using one of those for the announced Raspberry Pi 5 demo. Since we are nowadays primarily an IP company, would manufacturing and releasing a new Akida Dev Kit based on a Raspberry Pi 5 make sense? Not really. How about demonstrating an affordable AI camera using Akida technology manufactured by someone else (the mysterious Custom Customer SoC?)…

The Raspberry Pi AI Kit that came out in June and uses a Hailo AI acceleration module will only work with a Raspberry Pi 5 (introduced in October 2023), whereas the new Raspberry Pi AI Camera will work with all Raspberry Pi models. So while it may perform best on the latest Raspberry Pi 5, it will still be useful for developers with older RPI models as well.

Maybe one of the resident TSE techies will be able to tell us right away that this is a dead end or a pie in the sky, but until then I’ll keep my fingers crossed…

Sony Semiconductor Solutions and Raspberry Pi Launch the Raspberry Pi AI Camera Accelerating the development of edge AI solutions|News Releases|Sony Semiconductor Solutions Group


Sony Semiconductor Solutions and Raspberry Pi Launch the Raspberry Pi AI Camera

Accelerating the development of edge AI solutions

Sony Semiconductor Solutions Corporation

Raspberry Pi Ltd.

Atsugi, Japan and Cambridge, UK — Sony Semiconductor Solutions Corporation (SSS) and Raspberry Pi Ltd today announced that they are launching a jointly developed AI camera. The Raspberry Pi AI Camera, which is compatible with Raspberry Pi’s range of single-board computers, will accelerate the development of AI solutions which process visual data at the edge. Starting from September 30, the product will be available for purchase from Raspberry Pi’s network of Approved Resellers, for a suggested retail price of $70.00*.

* Not including any applicable local taxes.

In April 2023, it was announced that SSS would make a minority investment in Raspberry Pi Ltd. Since then, the companies have been working to develop an edge AI platform for the community of Raspberry Pi developers, based on SSS technology. The AI Camera is powered by SSS’s IMX500 intelligent vision sensor, which is capable of on-chip AI image processing, and enables Raspberry Pi users around the world to easily and efficiently develop edge AI solutions that process visual data.

- AI camera features

- Because vision data is normally massive, using it to develop AI solutions can require a graphics processing unit (GPU), an accelerator, and a variety of other components in addition to a camera. The new Raspberry Pi AI Camera, however, is equipped with the IMX500 intelligent vision sensor which handles AI processing, making it easy to develop edge AI solutions with just a Raspberry Pi and the AI Camera.

- The new AI Camera is compatible with all Raspberry Pi single-board computers, including the latest Raspberry Pi 5. This enables users to develop solutions with familiar hardware and software, taking advantage of the widely used and powerful libcamera and Picamera2 software libraries.

“AI-based image processing is becoming an attractive tool for developers around the world,” said Eben Upton, CEO, Raspberry Pi Ltd. “Together with our longstanding image sensor partner Sony Semiconductor Solutions, we have developed the Raspberry Pi AI Camera, incorporating Sony’s image sensor expertise. We look forward to seeing what our community members are able to achieve using the power of the Raspberry Pi AI Camera.”

Specifications

- Sensor model: SSS's approx. 12.3 effective megapixel IMX500 intelligent vision sensor with a powerful neural network accelerator

- Sensor modes: 4,056(H) x 3,040(V) at 10 fps / 2,028(H) x 1,520(V) at 40 fps

- Unit cell size: 1.55 µm x 1.55 µm

- 76 degree FoV with manual/mechanical adjustable focus

- Integrated RP2040 for neural network firmware management

- Works with all Raspberry Pi models using only Raspberry Pi standard camera connector cable

- Pre-loaded with MobileNetSSD model

- Fully integrated with libcamera
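The two sensor modes in the specification list trade resolution for frame rate. The short Python calculation below uses only the listed figures. The active-area estimate assumes the 1.55 µm pitch applies across the full 4,056 x 3,040 array, so it is an approximation rather than a datasheet value.

```python
import math

# Figures taken from the specification list above.
MODES = {
    "full": (4056, 3040, 10),    # (width px, height px, frames per second)
    "fast": (2028, 1520, 40),
}
PIXEL_PITCH_UM = 1.55  # unit cell size in micrometres


def megapixels(w: int, h: int) -> float:
    return w * h / 1e6


def pixel_rate(w: int, h: int, fps: int) -> int:
    """Pixels read out per second in a given mode."""
    return w * h * fps


def active_area_mm(w: int, h: int, pitch_um: float = PIXEL_PITCH_UM):
    """Approximate array width, height and diagonal in millimetres."""
    width_mm = w * pitch_um / 1000
    height_mm = h * pitch_um / 1000
    return width_mm, height_mm, math.hypot(width_mm, height_mm)


for name, (w, h, fps) in MODES.items():
    print(f"{name}: {megapixels(w, h):.2f} MP, {pixel_rate(w, h, fps) / 1e6:.1f} Mpx/s")

print("active area (mm): %.2f x %.2f, diagonal %.2f" % active_area_mm(4056, 3040))
```

Both modes come out at about 123.3 million pixels per second (12.33 MP at 10 fps versus 3.08 MP at 40 fps). That is consistent with the 40 fps mode halving each dimension, for example by 2x2 binning, although the release does not say how that mode is produced. The estimated array diagonal is roughly 7.86 mm.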


About Raspberry Pi Ltd

Raspberry Pi is on a mission to put high-performance, low-cost, general-purpose computing platforms in the hands of enthusiasts and engineers all over the world. Since 2012, we’ve been designing single-board and modular computers, built on the Arm architecture, and running the Linux operating system. Whether you’re an educator looking to excite the next generation of computer scientists; an enthusiast searching for inspiration for your next project; or an OEM who needs a proven rock-solid foundation for your next generation of smart products, there’s a Raspberry Pi computer for you.

Note: AITRIOS is the registered trademark or trademark of Sony Group Corporation or its affiliates.


Frangipani

Top 20

Hackster.io just revealed what the Akida Pico is all about:

Gareth Halfacree

59 minutes ago • Machine Learning & AI / Wearables


Edge artificial intelligence (edge AI) specialist BrainChip has announced a new entry in its Akida range of brain-inspired neuromorphic processors, the Akida Pico — claiming that it's the "lowest power acceleration coprocessor" yet developed, with eyes on the wearable and sensor-integrated markets.

"Like all of our Edge AI enablement platforms, Akida Pico was developed to further push the limits of AI on-chip compute with low latency and low power required of neural applications," claims BrainChip chief executive officer Sean Hehir of the company's latest unveiling. "Whether you have limited AI expertise or are an expert at developing AI models and applications, Akida Pico and the Akida Development Platform provides users with the ability to create, train and test the most power and memory efficient temporal-event based neural networks quicker and more reliably."

BrainChip has announced a new entry in its Akida family of neuromorphic processors, the tiny Akida Pico. (Image: BrainChip)

The Akida Pico is, as the name suggests, based on BrainChip's Akida platform — specifically, the second-generation Akida2. Like its predecessors, it uses neuromorphic processing technology inspired by the human brain to handle selected machine learning and artificial intelligence workloads with a high efficiency — but unlike its predecessors, the Akida Pico has been built to deliver the lowest possible power draw while still offering enough compute performance to be useful.

According to BrainChip, the Akida Pico draws under 1mW under load and uses power island design to offer a "minimal" standby power draw. Chips built around the core are also expected to be extremely small physically, ideal for wearables, thanks to a compact die area and customizable overall footprint through configurable data buffer and model parameter memory specifications. The part, its creators explain, is ideal for always-on AI in battery-powered or high-efficiency systems, where it can be used to wake a more powerful microcontroller or application processor when certain conditions are met.

The Akida Pico is based on the company's second-generation Akida2 platform, but tailored for sub-milliwatt power draw. (Image: BrainChip)

On the software side, the Akida Pico is supported by BrainChip's in-house MetaTF software flow — allowing the compilation and optimization of Temporal-Enabled Neural Networks (TENNs) for execution on the device. MetaTF also supports importation of existing models developed in TensorFlow, Keras, and PyTorch — meaning, BrainChip says, there's no need to learn a whole new framework to use the Akida Pico.

BrainChip has not yet announced plans to release Akida Pico in hardware, instead concentrating on making it available as Intellectual Property (IP) for others to integrate into their own chip designs; pricing had not been publicly disclosed at the time of writing.

More information is available on the BrainChip website.


Gareth Halfacree

Freelance journalist, technical author, hacker, tinkerer, erstwhile sysadmin. For hire: freelance@halfacree.co.uk.

BrainChip Shrinks the Akida, Targets Sub-Milliwatt Edge AI with the Neuromorphic Akida Pico

Second-generation Akida2 neuromorphic computing platform is now available in a battery-friendly form, targeting wearables and always-on AI.

www.hackster.io


Frangipani

Top 20

https://www.embedded.com/brainchips-akida-npu-redefining-ai-processing-with-event-based-architecture

Oct. 1, 2024 / 15:55

BrainChip’s Akida NPU: Redefining AI Processing with Event-Based Architecture

Maurizio Di Paolo Emilio


BrainChip has launched the Akida Pico, enabling the development of compact, ultra-low power, intelligent devices for applications in wearables, healthcare, IoT, defense, and wake-up systems, integrating AI into various sensor-based technologies. According to BrainChip, Akida Pico offers the lowest power standalone NPU core (less than 1mW), supports power islands for minimal standby power, and operates within an industry-standard development environment. Its very small logic die area and configurable data buffer and model parameter memory help optimize the overall die size.

AI era

In today’s sophisticated artificial intelligence (AI) era, incorporating smart technology into consumer items is usually associated with cloud services, complicated infrastructure, and high costs. In edge AI, computational power and energy efficiency can be in conflict. Traditional neural processing units (NPUs) are designed for deep learning workloads and require significant amounts of power, which makes them less suited to always-on, ultra-low-power applications such as sensor monitoring, keyword detection, and other extreme edge AI uses. BrainChip is offering a fresh approach to this challenge.

BrainChip’s solution addresses one of the major challenges in edge AI: how to perform continuous AI processing without draining power. Traditional microcontroller-based AI solutions can meet low-power requirements but often lack the processing capability for complex AI tasks.

Steve Brightfield, CMO at BrainChip

BrainChip was launched in 2014, taking its inspiration from Peter Van Der Made’s work on neuromorphic computing concepts. This technique relies especially on spiking neural networks (SNNs). It replicates how the brain processes information and is a fundamentally different method from traditional convolutional neural networks (CNNs). Rather than performing continuous calculations, BrainChip’s SNN-based systems compute only when triggered by events, which optimizes power efficiency.

In an interview with Embedded, Steve Brightfield, CMO at BrainChip, talked about how this new method will change the game for ultra-low-power AI apps, showing big steps forward in the field. Brightfield said that this new technology makes it possible for common things like drills, hand tools, and other consumer products to have smart features without costing a lot more. “Today, a battery with a built-in tester can show how healthy it is with a simple color code: green means it’s good, red means it needs to be replaced. Providing a similar indicator, AI in these products can tell you when parts are wearing out before they break. BrainChip’s low-power, low-maintenance AI works in the background without being noticed, so advanced tests can be used by anyone without needing to know a lot about them,” Brightfield said.

Traditional NPUs vs. Event-Based Computing

Brightfield claimed that ordinary NPUs, including those with multiplier-accumulator arrays, run on fixed pipelines, processing every input whether or not it is useful. This inefficiency often leads to wasted calculations, particularly with sparse data. Sparse data is a typical occurrence in AI applications, where most input values have little impact on the final outcome. By using an event-based computing architecture, BrainChip saves computational resources and electricity, activating calculations only when relevant data is present.

“Most NPUs keep calculating all data values, even for sparse data,” Brightfield remarked. “We schedule computations dynamically using our event-based architecture, so cutting out unnecessary processing.”
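The difference Brightfield describes can be illustrated in software. The sketch below is a generic sparsity example, not BrainChip's hardware scheduler. A dense layer performs a multiply-accumulate (MAC) for every input. The event-driven version only does work for non-zero inputs, so it produces the same result with fewer operations.

```python
import random
from typing import List, Sequence, Tuple

Weights = Sequence[Sequence[float]]  # weights[input_index][output_index]


def dense_layer(inputs: Sequence[float], weights: Weights) -> Tuple[List[float], int]:
    """Fixed pipeline: every input is multiplied against every weight."""
    out = [0.0] * len(weights[0])
    macs = 0
    for i, x in enumerate(inputs):
        for j, w in enumerate(weights[i]):
            out[j] += x * w
            macs += 1
    return out, macs


def event_driven_layer(inputs: Sequence[float], weights: Weights) -> Tuple[List[float], int]:
    """Event-driven schedule: only non-zero inputs ("events") trigger any work."""
    out = [0.0] * len(weights[0])
    macs = 0
    for i, x in enumerate(inputs):
        if x == 0.0:
            continue  # no event, so no computation is scheduled for this input
        for j, w in enumerate(weights[i]):
            out[j] += x * w
            macs += 1
    return out, macs


rng = random.Random(0)
n_in, n_out = 64, 16
weights = [[rng.uniform(-1, 1) for _ in range(n_out)] for _ in range(n_in)]
# About 90% of inputs are zero, mimicking sparse activations.
inputs = [rng.uniform(0, 1) if rng.random() < 0.1 else 0.0 for _ in range(n_in)]

dense_out, dense_macs = dense_layer(inputs, weights)
sparse_out, sparse_macs = event_driven_layer(inputs, weights)
print(f"dense MACs: {dense_macs}, event-driven MACs: {sparse_macs}")
assert all(abs(a - b) < 1e-12 for a, b in zip(dense_out, sparse_out))
```

In this sketch the saving scales directly with the fraction of zero inputs. That is the sense in which the benefit depends on data sparsity rather than on the model alone.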

The Influence of Sparsity

BrainChip’s main benefit comes from exploiting the sparsity of both data and neural weights. Traditional NPU architectures can take advantage of weight sparsity through pre-compilation, benefiting from model weight pruning. However, they cannot dynamically schedule for data sparsity; they must process all of the inputs.

By processing data only when needed, BrainChip’s SNN technology can drastically lower power usage, depending on the degree of sparsity in the data. In audio-based edge applications such as gunshot recognition or keyword detection, for instance, BrainChip’s Akida NPU could execute only when the sensor detects a significant signal, conserving energy when no relevant data is present.
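At the application level, "execute only when the sensor detects a significant signal" can be sketched as an energy gate in front of a classifier. This is an assumed illustration, not how Akida implements event-based processing internally. A simple RMS threshold, a hypothetical stand-in for "significant signal", decides whether the expensive `classify` callback runs at all.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Frame = Sequence[float]


def rms(frame: Frame) -> float:
    """Root-mean-square energy of one audio frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0


def gated_inference(frames: Sequence[Frame], classify: Callable[[Frame], str],
                    threshold: float) -> Tuple[List[Optional[str]], int]:
    """Run the classifier only on frames whose energy reaches the threshold."""
    results: List[Optional[str]] = []
    calls = 0
    for frame in frames:
        if rms(frame) >= threshold:
            calls += 1
            results.append(classify(frame))
        else:
            results.append(None)  # quiet frame: inference is skipped entirely
    return results, calls


def toy_classifier(frame: Frame) -> str:
    """Hypothetical placeholder for a keyword or gunshot model."""
    return "event"


frames = [[0.0] * 4, [0.5, -0.5, 0.5, -0.5], [0.01] * 4]
results, calls = gated_inference(frames, toy_classifier, threshold=0.1)
print(results, calls)
```

Only the middle frame clears the threshold, so the classifier runs once out of three frames. In a mostly quiet environment, that ratio is where the energy saving comes from.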

Akida Pico Block Diagram (Source: BrainChip)

Introducing the Akida Pico: Ultra-Low Power NPU for Extreme Edge AI

Designed on a spiking neural network (SNN) architecture, BrainChip’s Akida Pico processor transforms event-based computing. Unlike conventional artificial intelligence models that demand constant processing, Akida runs only in response to specific events. This makes it ideal for always-on uses such as anomaly detection or keyword spotting, where power efficiency is vital. The latest innovation from BrainChip is built on the Akida2 event-based computing platform configuration engine. The engine can execute at under one milliwatt, a power level suitable for battery-powered operation.

The Akida Pico is well suited to wearables, IoT devices, and industrial sensors, which call for continual awareness without draining the battery. Operating in the microwatt to milliwatt power range, this NPU is among the most efficient available; it surpasses even microcontrollers in several artificial intelligence applications.

For some always-on artificial intelligence uses, “the Akida Pico can be lower power than microcontrollers,” Brightfield said. “Every microamp counts in extreme battery-powered use cases, depending on how long it is intended to perform.”

The Akida Pico can stay always-on without significantly affecting battery life, whereas microcontroller-based AI systems often require duty cycling (turning the CPU off and on in bursts to save power). For edge AI devices that must run constantly while keeping power consumption low, this benefit is vital.
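The duty-cycling trade-off, and Brightfield's point that every microamp counts, come down to simple arithmetic. All numbers below are hypothetical placeholders, not measured figures for the Akida Pico or any particular microcontroller. The sketch answers one question: within the same power budget as a sub-milliwatt always-on core, what fraction of the time could a duty-cycled microcontroller afford to be awake?

```python
def average_power_mw(active_mw: float, sleep_mw: float, duty: float) -> float:
    """Time-weighted average power; duty is the fraction of time spent active."""
    if not 0.0 <= duty <= 1.0:
        raise ValueError("duty must be between 0 and 1")
    return duty * active_mw + (1.0 - duty) * sleep_mw


def affordable_duty(active_mw: float, sleep_mw: float, budget_mw: float) -> float:
    """Largest duty cycle that keeps a duty-cycled device within a power budget."""
    if active_mw <= budget_mw:
        return 1.0  # it could simply stay awake
    if budget_mw <= sleep_mw:
        return 0.0  # even sleeping exceeds the budget
    return (budget_mw - sleep_mw) / (active_mw - sleep_mw)


def battery_life_hours(capacity_mah: float, voltage_v: float, avg_mw: float) -> float:
    """Ideal runtime: stored energy in mWh divided by average draw in mW."""
    return capacity_mah * voltage_v / avg_mw


# Hypothetical figures for illustration only.
BUDGET_MW = 0.8                      # an always-on core drawing under 1 mW
MCU_ACTIVE_MW, MCU_SLEEP_MW = 10.0, 0.01

duty = affordable_duty(MCU_ACTIVE_MW, MCU_SLEEP_MW, BUDGET_MW)
print(f"MCU can be awake {duty:.1%} of the time within {BUDGET_MW} mW")
print(f"~{battery_life_hours(225.0, 3.0, BUDGET_MW):.0f} h from a 225 mAh, 3 V cell")
```

With these made-up numbers, the microcontroller could listen for less than a tenth of the time on the same budget, while the always-on core never stops listening. Real conclusions depend entirely on measured active and sleep currents.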

BrainChip’s MetaTF software flow allows developers to compile and optimize Temporal-Enabled Neural Networks (TENNs) on the Akida Pico. Because it supports models created with TensorFlow/Keras and PyTorch, MetaTF eliminates the need to learn a new machine learning framework, facilitating rapid AI application development for the edge.

Akida Pico die area versus process (mm²) (Source: BrainChip)

Standalone Operation Without a Microcontroller

Another remarkable feature of the Akida Pico is its ability to function standalone, that is, without a host microcontroller to manage its tasks. An NPU usually relies on a microcontroller to start, regulate, and stop operations. The Akida Pico instead includes an integrated micro-sequencer that manages the full neural network execution on its own. This architecture reduces total system complexity, latency, and power consumption.

For applications that do need a microcontroller, the Akida Pico is a useful co-processor for offloading AI tasks and lowering power requirements. From battery-powered wearables to industrial monitoring tools, this flexibility appeals to a wide range of edge AI applications.
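A micro-sequencer steps an accelerator through a whole network without a host telling it when to start each stage. That control flow can be modelled in a few lines. The `MicroSequencer` class, its `wake_threshold` parameter and the toy layers are hypothetical, invented only for illustration. The layer program runs to completion on its own, and the host is signalled only when the output is worth acting on.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Layer = Callable[[List[float]], List[float]]


@dataclass
class MicroSequencer:
    """Hypothetical model of an on-chip sequencer running a fixed layer program."""
    program: List[Layer]
    wake_threshold: float
    host_wakeups: int = 0

    def run(self, sample: List[float]) -> Optional[List[float]]:
        x = sample
        for layer in self.program:  # no host involvement between layers
            x = layer(x)
        if x and max(x) >= self.wake_threshold:
            self.host_wakeups += 1  # stands in for raising an interrupt to a host MCU
            return x
        return None  # nothing interesting: the host stays asleep


def relu(v: List[float]) -> List[float]:
    return [max(0.0, a) for a in v]


def double(v: List[float]) -> List[float]:
    return [2.0 * a for a in v]


seq = MicroSequencer(program=[relu, double], wake_threshold=1.0)
print(seq.run([0.1, -0.3]))  # stays below the threshold
print(seq.run([0.6, -1.0]))  # crosses it and wakes the host
print(seq.host_wakeups)
```

The same object works in either role the article mentions. Standalone, the returned result drives an output directly; as a co-processor, `host_wakeups` models how rarely the host needs to leave sleep.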

Targeting Key Edge AI Applications

Medical devices that need continuous monitoring, such as glucose sensors or wearable heart rate monitors, benefit from the Akida Pico’s ultra-low power characteristics. Likewise, speech recognition tasks such as voice-activated assistants or security systems listening for keywords are good candidates for this technology. Edge AI’s toughest obstacle is balancing compute requirements against power consumption. In markets where battery life is crucial, the Akida Pico can scale performance while staying within limited power budgets.

According to Brightfield, one of the most notable uses of BrainChip’s artificial intelligence is anomaly detection for motors or other mechanical systems. Traditional methods monitor and diagnose equipment health using cloud-based infrastructure and edge servers, which is both costly and power-intensive. BrainChip flips this concept on its head by embedding artificial intelligence directly within the motor or device.

For example, BrainChip’s ultra-efficient Akida Neural Processor Unit (NPU) can continuously examine vibration data from a motor. If it detects an abnormality, such as an unusual vibration, the system triggers a basic alert, akin to turning on an LED. This “dumb and simple” approach warns maintenance staff that the motor needs attention without requiring internet access, remote servers, or a sophisticated diagnostic workflow.

“In the field, a maintenance technician could only glance at the motor,” Brightfield said. “They know it’s time to replace the motor before it fails if they spot a red light.” This method eliminates the need for costly software upgrades or cloud access, which benefits equipment in remote areas where connectivity may be limited.

For keyword detection, BrainChip has built AI directly into the device. According to Brightfield, using raw audio data and modern algorithms, the Akida Pico achieves 4-5% higher accuracy than historical methods while drawing just under 2 milliwatts. This is enabled by Temporal Event-Based Neural Networks (TENNs), a novel architecture built on state space models that delivers high-quality performance without requiring a power-hungry microcontroller.

As demand for edge AI grows, BrainChip’s advancements in neuromorphic computing and event-based processing are poised to contribute significantly to the development of ultra-efficient, always-on AI systems, providing flexible solutions for various applications.


Maurizio Di Paolo Emilio


BrainChip Introduces Lowest-Power AI Acceleration Co-Processor

Laguna Hills, Calif. – October 1, 2024 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of ultra-low power, fully digital, event-based, brain-inspired AI, today introduced the Akida™ Pico, the lowest power acceleration co-processor, which enables the creation of very compact, ultra-low power, portable and intelligent devices for wearable and sensor-integrated AI in consumer, healthcare, IoT, defense and wake-up applications.

Akida Pico accelerates limited use case-specific neural network models to create an ultra-energy efficient, purely digital architecture. Akida Pico enables secure personalization for applications including voice wake detection, keyword spotting, speech noise reduction, audio enhancement, presence detection, personal voice assistant, automatic doorbell, wearable AI, appliance voice interfaces and more.

The latest innovation from BrainChip is built on the Akida2 event-based computing platform configuration engine, which can run on less than a single milliwatt, making it suitable for battery-powered operation. Akida Pico provides a power-efficient footprint for waking up microcontrollers or larger system processors, using a neural network to filter out false alarms and conserve power until an event is detected. It is ideally suited for sensor hubs or systems that must be monitored continuously on battery power, with only occasional need for additional processing from a host.
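The wake-up role described above can be sketched as a two-stage cascade. The following is a minimal Python sketch, assuming a per-frame detector score between 0 and 1 is already available from the always-on network; the function name `wake_events` and the `threshold` and `confirm` parameters are hypothetical illustrations, not part of any BrainChip API. Requiring several consecutive positive frames is just one simple way to filter out false alarms before the host is woken.

```python
from typing import Iterable, List


def wake_events(scores: Iterable[float], threshold: float = 0.8, confirm: int = 3) -> List[int]:
    """Return the frame indices at which a (hypothetical) host would be woken.

    A frame is a candidate when its detector score is at or above `threshold`.
    The host is woken only after `confirm` consecutive candidates, so isolated
    spikes (likely false alarms) never reach it.
    """
    wakes: List[int] = []
    run = 0  # length of the current streak of candidate frames
    for i, score in enumerate(scores):
        run = run + 1 if score >= threshold else 0
        if run == confirm:  # fire once per sustained event, not on every frame
            wakes.append(i)
    return wakes
```

For example, a single high-scoring frame followed by a low one is ignored, while a sustained run of high scores produces exactly one wake-up at the frame that completes the confirmation window.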

BrainChip’s exclusive MetaTF™ software flow enables developers to compile and optimize their specific Temporal-Enabled Neural Networks (TENNs) on the Akida Pico. With MetaTF’s support for models created with TensorFlow/Keras and PyTorch, users avoid needing to learn a new machine learning framework while rapidly developing and deploying AI applications for the Edge.

Among the benefits of Akida Pico are:

– Ultra-low power standalone NPU core (<1mW)

– Supports power islands for minimal standby power

– Industry-standard development environment

– Very small logic die area

– Optimized overall die size with configurable data buffer and model parameter memory

“Like all of our Edge AI enablement platforms, Akida Pico was developed to further push the limits of AI on-chip compute with low latency and low power required of neural applications,” said Sean Hehir, CEO at BrainChip. “Whether you have limited AI expertise or are an expert at developing AI models and applications, Akida Pico and the Akida Development Platform provides users with the ability to create, train and test the most power and memory efficient temporal-event based neural networks quicker and more reliably.”

BrainChip’s Akida is an event-based compute platform ideal for early detection, low-latency solutions without massive compute resources for robotics, drones, automotive and traditional sense-detect-classify-track solutions. BrainChip provides a range of software, hardware and IP products that can be integrated into existing and future designs, with a roadmap for customers to deploy multi-modal AI models at the edge.


Frangipani

Top 20

Hackster.io just revealed what the Akida Pico is all about:


BrainChip Shrinks the Akida, Targets Sub-Milliwatt Edge AI with the Neuromorphic Akida Pico

Second-generation Akida2 neuromorphic computing platform is now available in a battery-friendly form, targeting wearables and always-on AI.

Gareth Halfacree

Edge artificial intelligence (edge AI) specialist BrainChip has announced a new entry in its Akida range of brain-inspired neuromorphic processors, the Akida Pico — claiming that it's the "lowest power acceleration coprocessor" yet developed, with eyes on the wearable and sensor-integrated markets.

"Like all of our Edge AI enablement platforms, Akida Pico was developed to further push the limits of AI on-chip compute with low latency and low power required of neural applications," claims BrainChip chief executive officer Sean Hehir of the company's latest unveiling. "Whether you have limited AI expertise or are an expert at developing AI models and applications, Akida Pico and the Akida Development Platform provides users with the ability to create, train and test the most power and memory efficient temporal-event based neural networks quicker and more reliably."

BrainChip has announced a new entry in its Akida family of neuromorphic processors, the tiny Akida Pico. (Image: BrainChip)

The Akida Pico is, as the name suggests, based on BrainChip's Akida platform — specifically, the second-generation Akida2. Like its predecessors, it uses neuromorphic processing technology inspired by the human brain to handle selected machine learning and artificial intelligence workloads with high efficiency — but unlike its predecessors, the Akida Pico has been built to deliver the lowest possible power draw while still offering enough compute performance to be useful.

According to BrainChip, the Akida Pico draws under 1mW under load and uses power island design to offer a "minimal" standby power draw. Chips built around the core are also expected to be extremely small physically, ideal for wearables, thanks to a compact die area and customizable overall footprint through configurable data buffer and model parameter memory specifications. The part, its creators explain, is ideal for always-on AI in battery-powered or high-efficiency systems, where it can be used to wake a more powerful microcontroller or application processor when certain conditions are met.

The Akida Pico is based on the company's second-generation Akida2 platform, but tailored for sub-milliwatt power draw. (Image: BrainChip)

On the software side, the Akida Pico is supported by BrainChip's in-house MetaTF software flow — allowing the compilation and optimization of Temporal-Enabled Neural Networks (TENNs) for execution on the device. MetaTF also supports importation of existing models developed in TensorFlow, Keras, and PyTorch — meaning, BrainChip says, there's no need to learn a whole new framework to use the Akida Pico.

BrainChip has not yet announced plans to release Akida Pico in hardware, instead concentrating on making it available as Intellectual Property (IP) for others to integrate into their own chip designs; pricing had not been publicly disclosed at the time of writing.

More information is available on the BrainChip website.


https://www.embedded.com/brainchips-akida-npu-redefining-ai-processing-with-event-based-architecture

1 Oct. 2024 / 15:55

BrainChip’s Akida NPU: Redefining AI Processing with Event-Based Architecture

Maurizio Di Paolo Emilio


BrainChip has launched the Akida Pico, enabling the development of compact, ultra-low power, intelligent devices for applications in wearables, healthcare, IoT, defense, and wake-up systems, integrating AI into various sensor-based technologies. According to BrainChip, Akida Pico offers the lowest power standalone NPU core (less than 1mW), supports power islands for minimal standby power, and operates within an industry-standard development environment. Its very small logic die area and configurable data buffer and model parameter memory help optimize the overall die size.

AI era

In today’s AI era, adding smart technology to consumer products is usually associated with cloud services, complicated infrastructure, and high costs. In edge AI, computational power and energy efficiency are often in conflict. Traditional neural processing units (NPUs), designed for deep learning workloads, draw significant power, which makes them less suited to always-on, ultra-low-power applications such as sensor monitoring, keyword detection, and other extreme-edge AI uses. BrainChip is offering a fresh approach to this challenge.

BrainChip’s solution addresses one of the major challenges in edge AI: how to perform continuous AI processing without draining power. Traditional microcontroller-based AI solutions can manage low-power requirements but often lack the processing capability for complex AI tasks.

Steve Brightfield, CMO at BrainChip

BrainChip was launched in 2014, inspired by Peter Van Der Made’s work on neuromorphic computing concepts. Its approach, built especially on spiking neural networks (SNNs), mimics how the brain processes information and differs fundamentally from traditional convolutional neural networks (CNNs). Rather than performing continuous calculations, BrainChip’s SNN-based systems compute only when triggered by events, which optimizes power efficiency.
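To make the event-driven idea concrete, here is a textbook leaky integrate-and-fire (LIF) neuron in Python. This is a generic SNN model chosen for illustration, with hypothetical `leak` and `threshold` values; it is not a description of how Akida implements its neurons. The neuron emits a spike (an event) only when its accumulated input crosses the threshold, so downstream layers have nothing to compute while it stays silent.

```python
from typing import List, Sequence


def lif_spikes(inputs: Sequence[float], leak: float = 0.9, threshold: float = 1.0) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a 0/1 spike train; a 1 marks a time step that produced an event.
    """
    v = 0.0  # membrane potential
    spikes: List[int] = []
    for x in inputs:
        v = leak * v + x  # leak part of the old potential, then integrate the new input
        if v >= threshold:
            spikes.append(1)  # emit a spike (an "event")...
            v = 0.0           # ...and reset the potential
        else:
            spikes.append(0)
    return spikes
```

With no input the neuron never fires, which is the property an event-based processor exploits: no spikes means no downstream work.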

In an interview with Embedded, Steve Brightfield, CMO at BrainChip, talked about how this new method will change the game for ultra-low-power AI apps, showing big steps forward in the field. Brightfield said that this new technology makes it possible for common things like drills, hand tools, and other consumer products to have smart features without costing a lot more. “Today, a battery with a built-in tester can show how healthy it is with a simple color code: green means it’s good, red means it needs to be replaced. Providing a similar indicator, AI in these products can tell you when parts are wearing out before they break. BrainChip’s low-power, low-maintenance AI works in the background without being noticed, so advanced tests can be used by anyone without needing to know a lot about them,” Brightfield said.

Traditional NPUs vs. Event-Based Computing

Brightfield said that ordinary NPUs, including those built on multiplier-accumulator arrays, run on fixed pipelines and process every input whether or not it contributes anything. This often results in wasted calculations, particularly with sparse data, which is common in AI applications where most input values have little impact on the final outcome. BrainChip’s event-based computing architecture saves compute resources and energy by triggering calculations only when relevant data is present.

“Most NPUs keep calculating all data values, even for sparse data,” Brightfield remarked. “We schedule computations dynamically using our event-based architecture, so cutting out unnecessary processing.”
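The difference between a fixed pipeline and event-driven scheduling can be illustrated with a matrix-vector product, the core operation of a neural network layer. This Python sketch assumes that zero-valued inputs are the ones that generate no events; the function names are hypothetical, and real hardware adds indexing and scheduling overhead that a simple multiply-accumulate (MAC) count ignores.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def dense_matvec(w: Matrix, x: List[float]) -> Tuple[List[float], int]:
    """Fixed-pipeline style: every weight is multiplied by every input."""
    out = [0.0] * len(w)
    macs = 0
    for r, row in enumerate(w):
        for c, xc in enumerate(x):
            out[r] += row[c] * xc
            macs += 1
    return out, macs


def event_matvec(w: Matrix, x: List[float]) -> Tuple[List[float], int]:
    """Event-driven style: only non-zero inputs ("events") trigger work."""
    out = [0.0] * len(w)
    macs = 0
    events = [(c, xc) for c, xc in enumerate(x) if xc != 0.0]
    for c, xc in events:
        for r in range(len(w)):
            out[r] += w[r][c] * xc  # accumulate this input's column of weights
            macs += 1
    return out, macs
```

Both functions return the same outputs; with an input vector that is half zeros, the event-driven version performs half as many MACs.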

The Influence of Sparsity

BrainChip’s main advantage comes from exploiting the sparsity of both data and neural network weights. Traditional NPU architectures can exploit weight sparsity at compile time, benefiting from model weight pruning, but they cannot dynamically schedule around data sparsity; they must process all of the inputs.
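The distinction between compile-time weight sparsity and run-time data sparsity can be sketched by counting MACs under three schedules. This is an illustrative Python model, not BrainChip’s scheduler: pruned (zero) weights are dropped once into a fixed schedule, while skipping zero inputs has to be decided again for every input vector because those zeros change from one input to the next.

```python
from typing import Dict, List


def mac_counts(weights: List[List[float]], inputs: List[List[float]]) -> Dict[str, int]:
    """Count multiply-accumulates for a batch of input vectors under three schedules."""
    rows, cols = len(weights), len(weights[0])
    # Compile time: pruned weights are removed once; this schedule never changes.
    static_schedule = [(r, c) for r in range(rows) for c in range(cols) if weights[r][c] != 0.0]
    counts = {"dense": 0, "weight_pruned": 0, "weight_and_data": 0}
    for x in inputs:
        counts["dense"] += rows * cols
        counts["weight_pruned"] += len(static_schedule)
        # Run time: additionally skip pairs whose input is zero for *this* vector,
        # which cannot be known when the model is compiled.
        counts["weight_and_data"] += sum(1 for r, c in static_schedule if x[c] != 0.0)
    return counts
```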

Because it processes data only when needed, BrainChip’s SNN technology can drastically lower power usage, depending on how sparse the data is. In audio-based edge applications such as gunshot recognition or keyword detection, for instance, the Akida NPU could execute only when the sensor detects a significant signal, conserving energy when no relevant data is present.
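A minimal sketch of signal-gated execution in an audio pipeline follows, assuming frames of normalized samples and a simple RMS energy threshold as the “significant signal” test. The threshold value and the `classify` callback are hypothetical stand-ins; Akida’s own event generation is not described in this article.

```python
import math
from typing import Callable, List, Sequence


def gated_inference(frames: Sequence[Sequence[float]],
                    classify: Callable[[Sequence[float]], str],
                    rms_threshold: float = 0.1) -> List[str]:
    """Run `classify` only on frames whose RMS level exceeds `rms_threshold`."""
    results: List[str] = []
    for frame in frames:
        rms = math.sqrt(sum(s * s for s in frame) / len(frame))
        if rms > rms_threshold:
            results.append(classify(frame))  # significant signal: do the expensive work
        else:
            results.append("idle")           # quiet frame: skip inference entirely
    return results
```

In a real device the gate itself would also cost some power, so the saving depends on how often the input is actually quiet.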

Akida Pico Block Diagram (Source: Brainchip)

Introducing the Akida Pico: Ultra-Low Power NPU for Extreme Edge AI

Designed around a spiking neural network (SNN) architecture, BrainChip’s Akida Pico takes an event-based approach to computing. Unlike conventional AI models that demand constant processing, Akida runs only in response to specific events, which makes it well suited to always-on uses such as anomaly detection or keyword spotting, where power efficiency is vital. The latest innovation from BrainChip is built on the Akida2 event-based computing platform configuration engine, which can run on less than a single milliwatt, making it suitable for battery-powered operation.

The Akida Pico is well suited to wearables, IoT devices, and industrial sensors, which need continuous awareness without draining the battery. Operating in the microwatt-to-milliwatt power range, this NPU is among the most efficient available; in several AI applications it is even more power-efficient than microcontrollers.

For some always-on artificial intelligence uses, “the Akida Pico can be lower power than microcontrollers,” Brightfield said. “Every microamp counts in extreme battery-powered use cases, depending on how long it is intended to perform.”

Microcontroller-based AI systems often require duty cycling (turning the CPU on and off in bursts to save power), whereas the Akida Pico can stay always-on without significantly affecting battery life. This advantage is essential for edge AI devices that must run constantly while keeping power consumption low.
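The duty-cycling trade-off follows from a simple weighted average. If a processor is active for a fraction D of the time at power P_active and sleeps at P_sleep otherwise, its average power is P_avg = D × P_active + (1 - D) × P_sleep, and ideal battery life is the stored energy divided by P_avg. This Python sketch computes both; the function names and any numbers plugged in are hypothetical, and it ignores regulator losses and battery self-discharge.

```python
def average_power_mw(active_mw: float, sleep_mw: float, duty: float) -> float:
    """Average power of a duty-cycled processor; `duty` is the fraction of time active (0..1)."""
    if not 0.0 <= duty <= 1.0:
        raise ValueError("duty must be between 0 and 1")
    return duty * active_mw + (1.0 - duty) * sleep_mw


def battery_life_hours(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Ideal runtime: stored energy (mAh x V = mWh) divided by average draw (mW)."""
    return capacity_mah * voltage_v / avg_power_mw
```

An always-on processor corresponds to `duty=1.0`, so its average power is simply its active power; the comparison in the article is whether that figure is lower than a duty-cycled microcontroller’s average.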

BrainChip’s MetaTF software flow allows developers to compile and optimize Temporal-Enabled Neural Networks (TENNs) on the Akida Pico. Because MetaTF supports models created with TensorFlow/Keras and PyTorch, developers do not need to learn a new machine learning framework, which speeds up AI application development for the edge.

Akida Pico die area versus process (mm²) (Source: Brainchip)

Standalone Operation Without a Microcontroller

Another notable feature of the Akida Pico is that it can operate standalone, without a host microcontroller to manage its tasks. Whereas an NPU usually relies on a microcontroller to start, regulate, and stop operations, the Akida Pico includes an integrated micro-sequencer that manages the full neural network execution on its own. This architecture reduces overall system complexity, latency, and power consumption.
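The idea of a micro-sequencer running the whole network without a host can be sketched as a fixed program of layer steps executed in order, with the host only seeing the final wake decision. This Python sketch is purely illustrative: the `Step` type, the toy `DEMO_PROGRAM` layers, and the wake rule are hypothetical and do not describe Akida’s actual sequencer or instruction format.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Step:
    """One hypothetical sequencer instruction: a named layer operation."""
    name: str
    op: Callable[[List[float]], List[float]]


def run_sequence(program: Sequence[Step], frame: List[float], wake_threshold: float) -> bool:
    """Execute every layer in order with no host involvement.

    Returns True if the final scores say the host should be woken.
    """
    data = frame
    for step in program:
        data = step.op(data)  # each step consumes the previous step's output
    return max(data) >= wake_threshold


# Toy two-layer "network": a fixed scaling followed by a ReLU.
DEMO_PROGRAM: List[Step] = [
    Step("scale", lambda v: [2.0 * x for x in v]),
    Step("relu", lambda v: [max(0.0, x) for x in v]),
]
```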

For applications that do need a microcontroller, the Akida Pico also works well as a co-processor, offloading AI tasks and lowering power requirements. From battery-powered wearables to industrial monitoring tools, this flexibility suits a wide range of edge AI applications.

Targeting Key Edge AI Applications

The Akida Pico’s ultra-low power characteristics benefit medical devices that need continuous monitoring, such as glucose sensors or wearable heart rate monitors.

Speech recognition tasks, such as voice-activated assistants or security systems listening for keywords, are likewise good candidates for this technology. The toughest obstacle in edge AI is balancing compute requirements against power consumption; in markets where battery life is crucial, the Akida Pico can scale performance while staying within limited power budgets.

According to Brightfield, one of the most notable uses of BrainChip’s AI is anomaly detection for motors and other mechanical systems. Traditional methods monitor and diagnose equipment health using cloud-based infrastructure and edge servers, which is both costly and power-intensive. BrainChip flips this approach on its head by embedding AI directly within the motor or device.

For example, BrainChip’s ultra-efficient Akida Neural Processor Unit (NPU) can continuously examine vibration data from a motor. If it detects an abnormality, such as an unusual vibration, the system triggers a basic alert, akin to turning on an LED. This “dumb and simple” approach warns maintenance staff that the motor needs attention without requiring internet access, remote servers, or a sophisticated diagnostic workflow.
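The motor-monitoring flow can be sketched with a simple statistical stand-in for the neural network: compute the RMS of each vibration window and light a red LED when it exceeds a healthy baseline by `k` standard deviations. This Python sketch assumes pre-segmented windows and a hypothetical `k`; Akida would run a trained model rather than this fixed rule.

```python
import math
import statistics
from typing import List, Sequence


def window_rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of one vibration window."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def led_states(windows: Sequence[Sequence[float]],
               baseline: Sequence[Sequence[float]],
               k: float = 4.0) -> List[str]:
    """Return 'green' or 'red' per window by comparing its RMS to a healthy baseline."""
    ref = [window_rms(w) for w in baseline]               # RMS of known-good windows
    limit = statistics.mean(ref) + k * statistics.pstdev(ref)
    return ["red" if window_rms(w) > limit else "green" for w in windows]
```

Everything runs locally on the sensor data, which mirrors the article’s point: the only output the technician needs is the colour of the light.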

“In the field, a maintenance technician could only glance at the motor,” Brightfield said. “They know it’s time to replace the motor before it fails if they spot a red light.” This method eliminates the need for costly software upgrades or cloud access, which benefits equipment in remote areas where connectivity may be limited.

For keyword detection, BrainChip has built AI directly into the device. According to Brightfield, using raw audio data and modern algorithms, the Akida Pico achieves 4-5% higher accuracy than historical methods while drawing just under 2 milliwatts. This is enabled by Temporal Event-Based Neural Networks (TENNs), a novel architecture built on state space models that delivers high-quality performance without requiring a power-hungry microcontroller.
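TENNs are described here as built on state space models. The core recurrence of a generic discrete diagonal state space model is x_k = A x_{k-1} + B u_k with output y_k = C x_k; the Python sketch below implements that recurrence for a scalar input stream, with A diagonal so the update is element-wise. It is a generic SSM with hypothetical parameters, shown for illustration, and is not BrainChip’s TENN architecture.

```python
from typing import List, Sequence


def diagonal_ssm(u: Sequence[float], a: Sequence[float],
                 b: Sequence[float], c: Sequence[float]) -> List[float]:
    """Run x_k = a * x_{k-1} + b * u_k (element-wise) and y_k = sum(c * x_k)."""
    x = [0.0] * len(a)  # hidden state starts at zero
    ys: List[float] = []
    for uk in u:
        x = [ai * xi + bi * uk for ai, xi, bi in zip(a, x, b)]  # update each state channel
        ys.append(sum(ci * xi for ci, xi in zip(c, x)))         # linear read-out
    return ys
```

Feeding a single impulse shows the temporal memory: with `a=[0.5]`, the response decays by half at each step, so earlier inputs keep influencing later outputs without storing the whole input history.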

As demand for edge AI grows, BrainChip’s advancements in neuromorphic computing and event-based processing are poised to contribute significantly to the development of ultra-efficient, always-on AI systems, providing flexible solutions for various applications.


Maurizio Di Paolo Emilio

Well, I suppose the tiny Akida Pico (or rather, pre-announcement leakage relating to it) may have been the real reason for yesterday’s massive spike in share price…

Frangipani

Top 20

Akida Pico Announcement

Ready to Integrate Akida Pico Into Your Next Product Design? Download the complete Akida Pico Brochure and unlock detailed insights, technical specifications, and case studies that showcase the transformative potential of ultra-low power AI for your applications.


Frangipani

Top 20

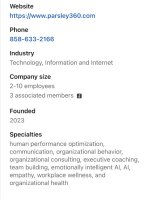

Antonio J. Viana, the Chairman of our Board of Directors, has joined Parsley’s Board of Directors:

“Parsley360 (Parsley), is a human centered, AI-enabled performance optimization system with universal access to an emotionally intelligent pocket coach for all employees.”

www.einpresswire.com


AI Innovator Parsley Appoints Former ARM Executive Antonio J. Viana to Board of Directors

Technology Leader Brings Extensive Experience to Help Parsley Scale Its Empathy-Enabled AI Platform to Accelerate Growth in Corporate Performance Solutions


DingoBorat

Slim

Hackster.io just revealed what the Akida Pico is all about:


Multiple websites are now promoting this news

What I want to know is...

How is AKIDA Pico different from AKIDA-E?

(both based on AKIDA 2.0 IP)

I'm guessing it's obviously smaller again?

But AKIDA-E is already from "1" node?..

Is this BrainChip going for the "low hanging fruit" ?..

(any similarity to an apple, is purely coincidental)


Pom down under

Top 20

What happened with the last reveal they had or was I dreaming that?

Justchilln

Regular

Okay, so now I guess we’re supposed to wait another few years for customers to trial Akida Pico, are we?

You don't have to post this. People already did in here

Tothemoon24

Top 20

Cheers skipper
