
BRN Discussion Ongoing

- Thread starter TechGirl


Bennysmadness

Regular

I feel a short squeeze coming on, 38c today. Hold on to your gems


DingoBorat

Slim

I think maybe you're "mad" Benny..

There won't be a short squeeze, without significant news and stronger demand than what we have now..

But that's just my seat of the pants opinion..

I know mine will remain clean and fresh though, regardless.

buena suerte :-)

BOB Bank of Brainchip

I know it's not much to go on with MStar... but it would be nice to see these levels again! Soon!!


Pom down under

Top 20

Something fishy going on with 30 million stacked on the buy side

Fishy smell? Seems like someone had a crazy night ha? Always look at the bright side


Pom down under

Top 20

I think the dam could be about to break


buena suerte :-)

BOB Bank of Brainchip

buena suerte :-)

BOB Bank of Brainchip


Prostate?

EDIT.......

This might have something to do with us, although I can’t find a link between Microchip and Lorser. We have history with Lorser and the benefit of Akida with SDR.

“We believe that neuromorphic computing is the future of AI/ML, and an SDR with neuromorphic AI/ML capability will offer users significantly more functionality, flexibility, and efficiency,” said Diane Serban, CEO of Lorser Industries. “The Akida processor and IP is the ideal solution for SDR devices because of its low power consumption, high performance, and, importantly, its ability to learn on-chip, after deployment in the field.”

www.linkedin.com


#softwaredefinedradio #tacticalradio #spacecommunications #beamforming #pic64hpsc | Microchip Technology Inc.

Explore our low-power Software-Defined Radio platform for tactical radio, space communications and beamforming. In this webinar we will introduce our new PIC64-HPSC family, beneficial in building large-scale beamforming applications. Register now: https://mchp.us/3ZCZnSu. #SoftwareDefinedRadio...

This might have something to do with us although I can’t find a link between Microchip and Loser. We have history with Lorser and the benefit of Akida with SDR.

Bad day for dropped "r"s.



Thanks… fixed it

HopalongPetrovski

I'm Spartacus!

BrainChip atm......... trying to get back to 30 cents.

Mt09

Regular

BrainChip is live at Embedded World North America in Austin, TX, running today through October 10, 2024, at the Austin Convention Center. This event brings together leading experts and innovators in… | BrainChip

BrainChip is live at Embedded World North America in Austin, TX, running today through October 10, 2024, at the Austin Convention Center. This event brings together leading experts and innovators in the embedded community, making it a perfect place for BrainChip to showcase our cutting-edge...

Stmicro staff at the Brainchip booth


They don’t look excited


STM -“Hmmm guess I should buy one license tho…”

BRN-“hmm come on man… just sign that f..in contract… TSE is watching me 24/7”

STM-“why does he look so uncomfortable? Is this whole thing a scam like T&J claims every day?”

BRN-“oh jeez.. hope he doesn’t follow the HC forum.. what a mess..”

STM-“oh eye contact … eye contact…” “yeah … so akida pico you say..right? Cool cool….”

BRN-shit… I lost the ball.. “yeah… it’s… it’s fast you know… eeeehhh do you know our robot mascot?” (What the heck are you talking about….)


But I guess that’s how they need to look all the time when they’re thinking things over….


Let me explain the problem with German humour

It may be clichéd but it’s true: Germans have no sense of humour. The Economist’s Berlin bureau chief explains why


Fullmoonfever

Top 20

Love to know if these guys have been exploring with us as well and if not, maybe one of our sales team (yes Alf, looking at you for EMEA haha) should be in contact

Though, it looks like they’re playing with crossbar arrays (analog?).

www.abacus-neo.de


Insight

Software & IT

Scientific informatics

Over the last five years, neuromorphic computing has rapidly advanced through the development of state-of-the-art hardware and software technologies that mimic the information processing dynamics of animal brains. This development provides ultra-low power computation capabilities, especially for edge computing devices. Helbling experts have recently built up extensive interdisciplinary knowledge in this field in a project with partner Abacus neo. The focus was also on how the potential of neuromorphic computing can be optimally utilized.

Similar to the natural neural networks found in animal brains, neuromorphic computing uses compute-in-memory, sparse spike data encoding, or both together to provide higher energy efficiencies and lower computational latencies versus traditional digital neural networks. This makes neuromorphic computing ideal for ultra-low power edge applications, especially those where energy harvesting can provide autonomous always-on devices for environmental monitoring, medical implants, or wearables.

Currently, the adoption of neuromorphic computing is restricted by the immaturity of the available hardware and software frameworks, the limited number of suppliers, and competition from the ongoing development of traditional digital devices. Another factor is resistance from conservative communities, owing to their limited experience with both the theory and the practical use of neuromorphic devices, and to the need for a new mindset when approaching both hardware and software problems.

Given these inherent benefits, neuromorphic computing will be a key technology for future low-power edge applications. Accordingly, Helbling has been actively investigating currently available solutions to assess their suitability for a broad range of existing and new applications. Helbling also cooperates with partners in this area, for example in an ongoing project with Abacus neo, a company that focuses on turning innovative ideas into new business models.

The von Neumann bottleneck needs to be overcome

The main disadvantage of current computer architectures, both in terms of energy consumption and speed, is the need to transfer data and instructions between the memory and the central processing unit (CPU) during each fetch-execute cycle. In von Neumann devices, the increased length and thus electrical resistance of these communication paths leads to greater energy dissipation as heat. In fact, often more energy is used for transferring the data than for processing it by the CPU. Furthermore, since the data transfer rate between the CPU and memory is lower than the processing rate of the CPU, the CPU must constantly wait for data, thus limiting the system’s processing rate. In the future, this von Neumann bottleneck will become more restrictive as CPU and memory speeds continue to increase faster than the data transfer rate between them.
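One common way to make the "CPU must wait for data" argument concrete is the roofline model. It is not used in the Helbling article and is swapped in here only as an illustration: achievable throughput is the smaller of the processor's peak compute rate and its memory bandwidth multiplied by the arithmetic intensity (operations performed per byte fetched). The sketch below assumes a hypothetical processor with 100 GFLOP/s peak compute and 10 GB/s memory bandwidth running a matrix-vector product on 32-bit values; the function names and numbers are illustrative, not measurements.

```python
def matvec_intensity(rows: int, cols: int, bytes_per_value: int) -> float:
    """FLOPs per byte for y = W x when every operand crosses the memory bus once.

    Each of the rows*cols weights is used in one multiply and one add (2 FLOPs).
    Bytes moved: the whole matrix, the input vector and the output vector.
    """
    flops = 2 * rows * cols
    bytes_moved = bytes_per_value * (rows * cols + cols + rows)
    return flops / bytes_moved


def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float, flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by data transfer, whichever is lower."""
    return min(peak_gflops, bandwidth_gb_s * flops_per_byte)


# Hypothetical processor: 100 GFLOP/s peak, 10 GB/s memory bandwidth, 32-bit weights
PEAK_GFLOPS = 100.0
BANDWIDTH_GB_S = 10.0
intensity = matvec_intensity(1000, 1000, 4)  # close to 0.5 FLOP per byte
achieved = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GB_S, intensity)
print(f"intensity={intensity:.3f} FLOP/B, achieved={achieved:.2f} GFLOP/s, "
      f"compute utilization={achieved / PEAK_GFLOPS:.1%}")
```

With these assumed figures the arithmetic units sit idle roughly 95 % of the time, because a matrix-vector product performs only about two operations per weight it has to fetch.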

Neuromorphic computing collocates processing and memory

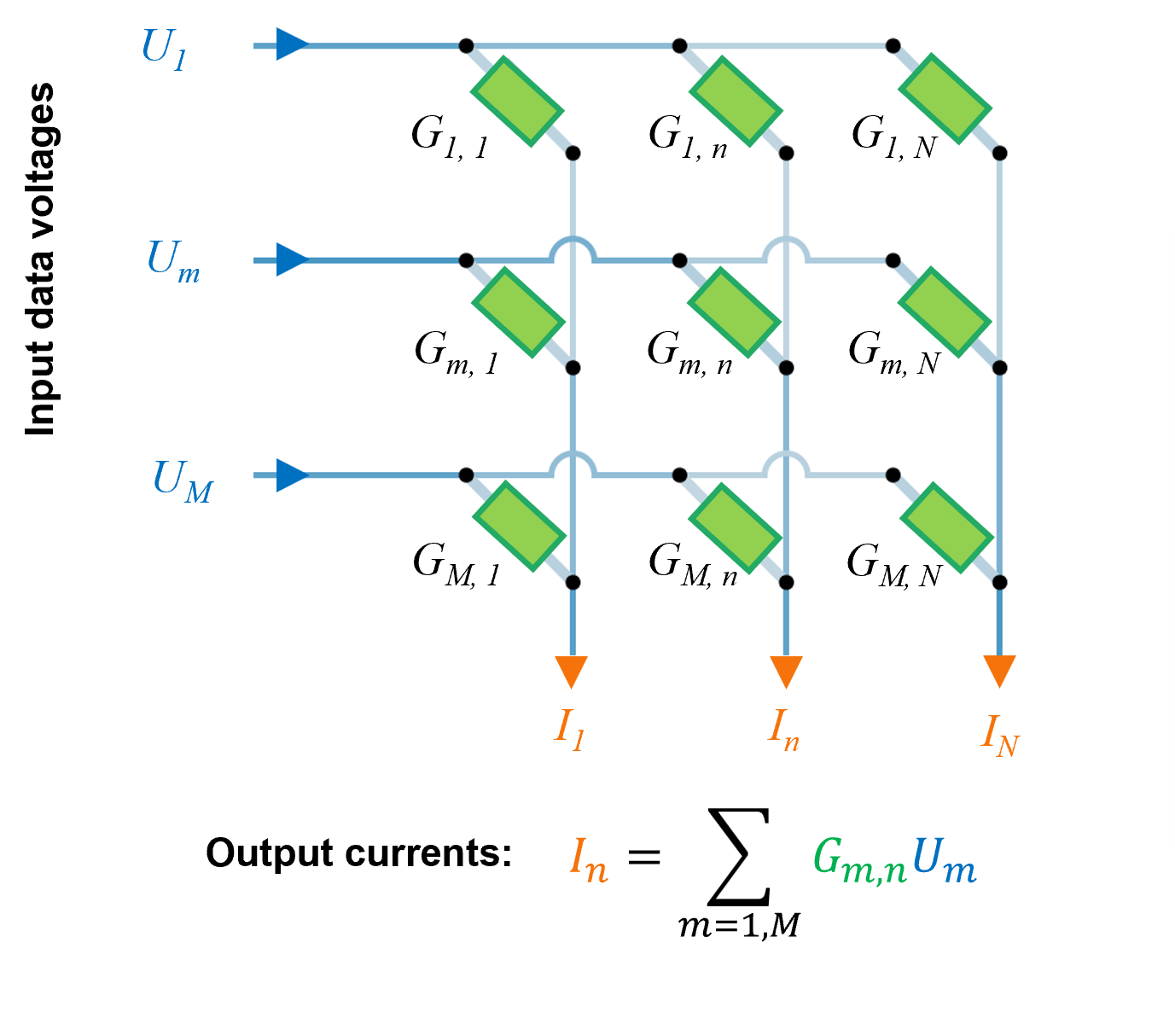

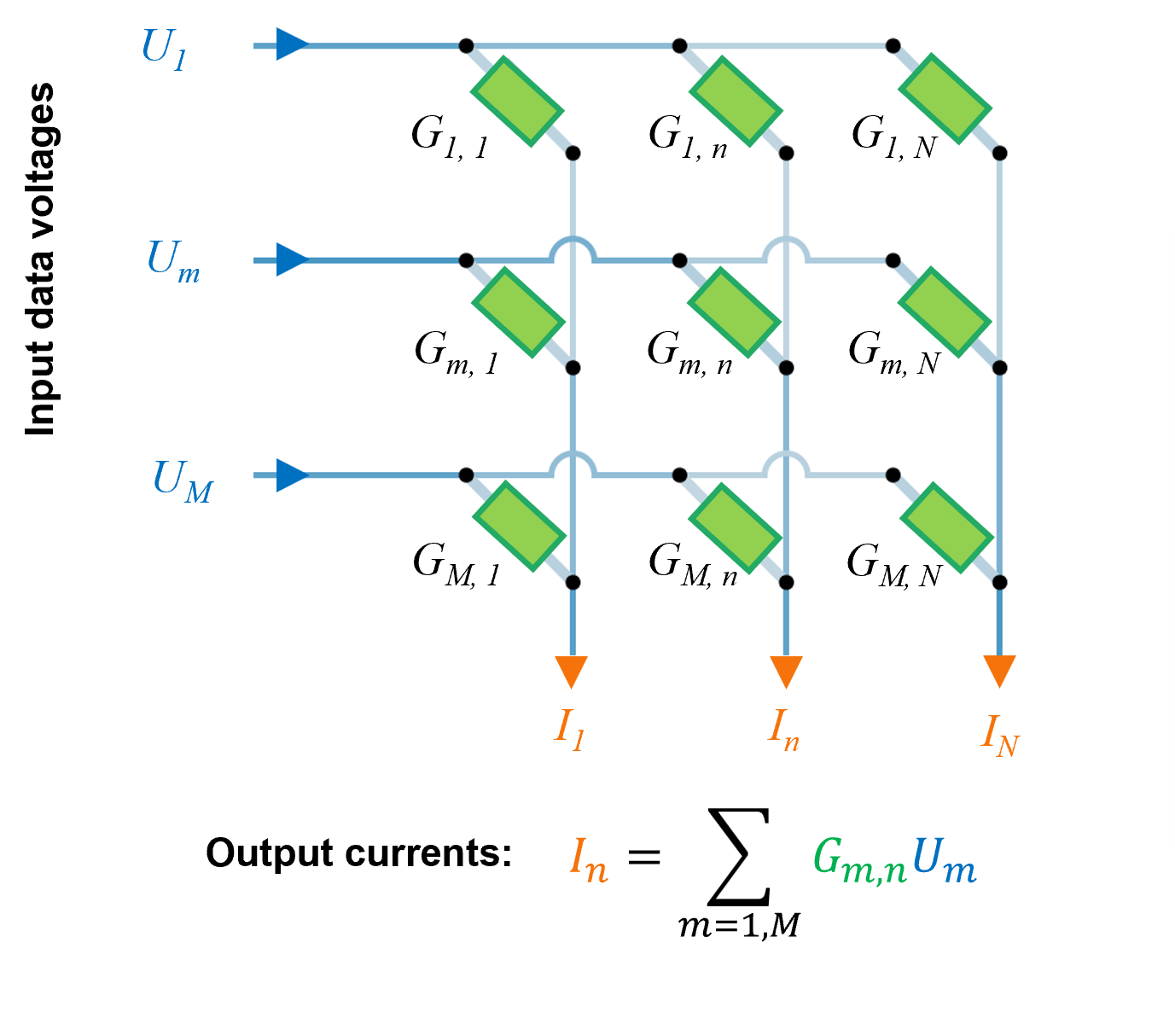

Neuromorphic computing aims to remove the von Neumann bottleneck and minimize heat dissipation by eliminating the distance between data and the CPU. These non-von Neumann compute-in-memory (CIM) architectures collocate processing and memory to provide ultra-low power and massively parallel data processing capabilities. In practice, this has been achieved through the development of programmable crossbar arrays (CBA), which are effectively dot-product engines highly optimized for the vector-matrix multiplication (VMM) operations that are the fundamental building blocks of most classical machine learning and deep learning algorithms.

These crossbars comprise input word and output bit lines, where the junctions between them have programmable conductance values that can be set to carry out specific algorithmic tasks.

Therefore, in a neuromorphic computer the algorithm is defined by the architecture of the system rather than by the sequential execution of instructions by a CPU. For example, to perform a VMM, voltages (U) representing the vector are applied to the input word lines, while the matrix is represented by the conductance values (G) of the crossbar junction grid. The result of the VMM is then given by the currents (I) flowing from the output bit lines (see Figure 1). Since the entire VMM is computed in a single parallel step, in a clockless, asynchronous manner, the latencies and processing times are much lower than for traditional von Neumann systems.

Figure 1: Neuromorphic crossbar array for vector-matrix multiplication. Figure: Helbling.
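A minimal, idealized sketch of the crossbar VMM described above, assuming ideal wires and bit lines held at virtual ground (0 V): by Ohm's law each junction passes a current G[i][j] * U[i], and by Kirchhoff's current law the currents arriving on each bit line add up. The function name and example values are hypothetical, and the model ignores wire resistance, sneak paths and converter overheads that real arrays have.

```python
from typing import List

Matrix = List[List[float]]


def crossbar_vmm(voltages: List[float], conductances: Matrix) -> List[float]:
    """Idealized crossbar: voltages on word lines (rows), currents out of bit lines (columns).

    Each junction (i, j) passes G[i][j] * U[i] (Ohm's law); the junction currents on
    bit line j add up (Kirchhoff's current law), so I[j] = sum_i G[i][j] * U[i].
    Assumes ideal wires and bit lines held at 0 V.
    """
    n_rows = len(conductances)
    if len(voltages) != n_rows:
        raise ValueError("one input voltage per word line is required")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i, u in enumerate(voltages):
        for j in range(n_cols):
            currents[j] += conductances[i][j] * u
    return currents


# Hypothetical 3 x 2 array (units: volts, siemens, amperes)
U = [0.2, 0.1, 0.3]
G = [
    [1e-6, 2e-6],
    [3e-6, 0.0],
    [0.0, 1e-6],
]
print(crossbar_vmm(U, G))
```

The double loop only simulates what the physical array does at once: all multiply-accumulates happen simultaneously as currents summing on the bit lines.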


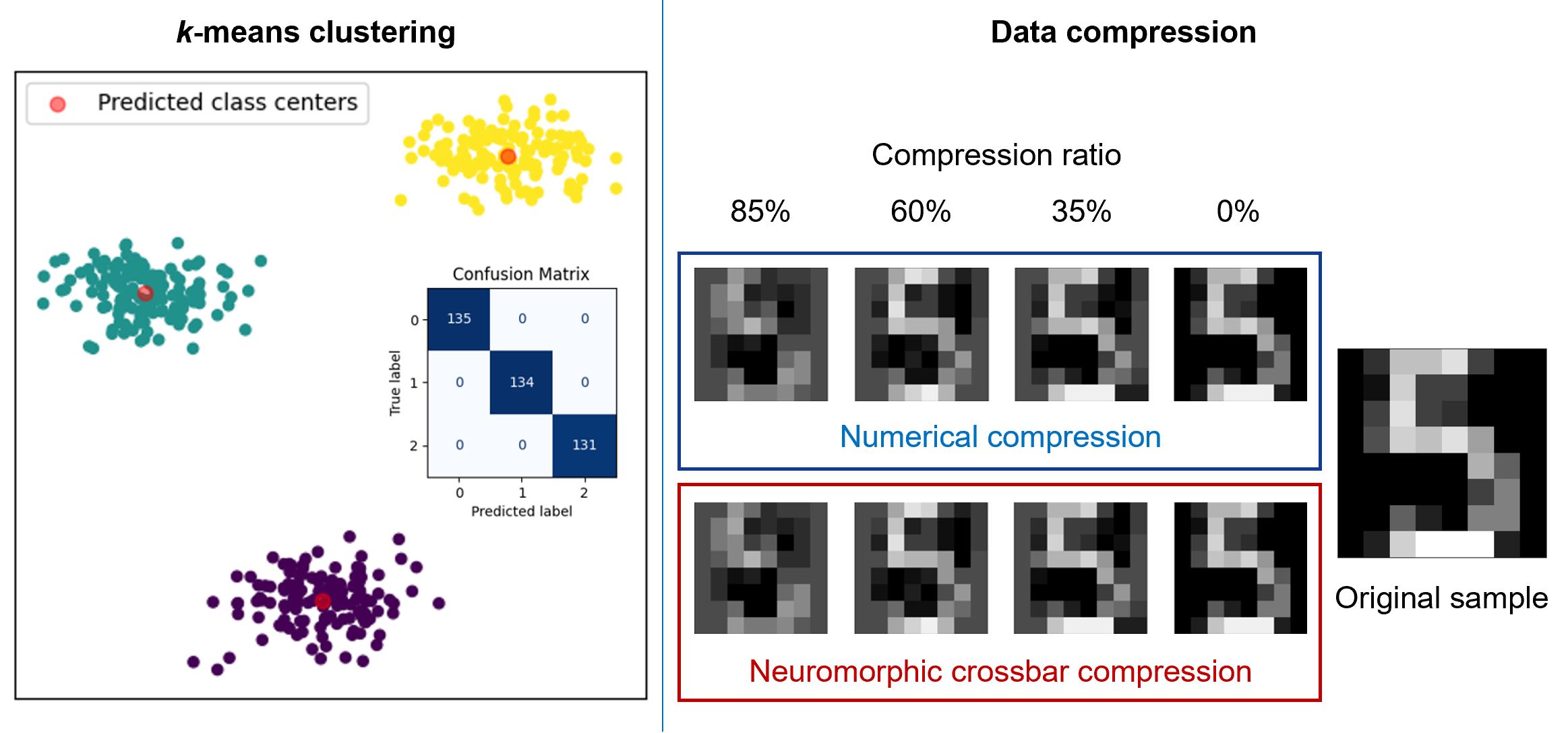

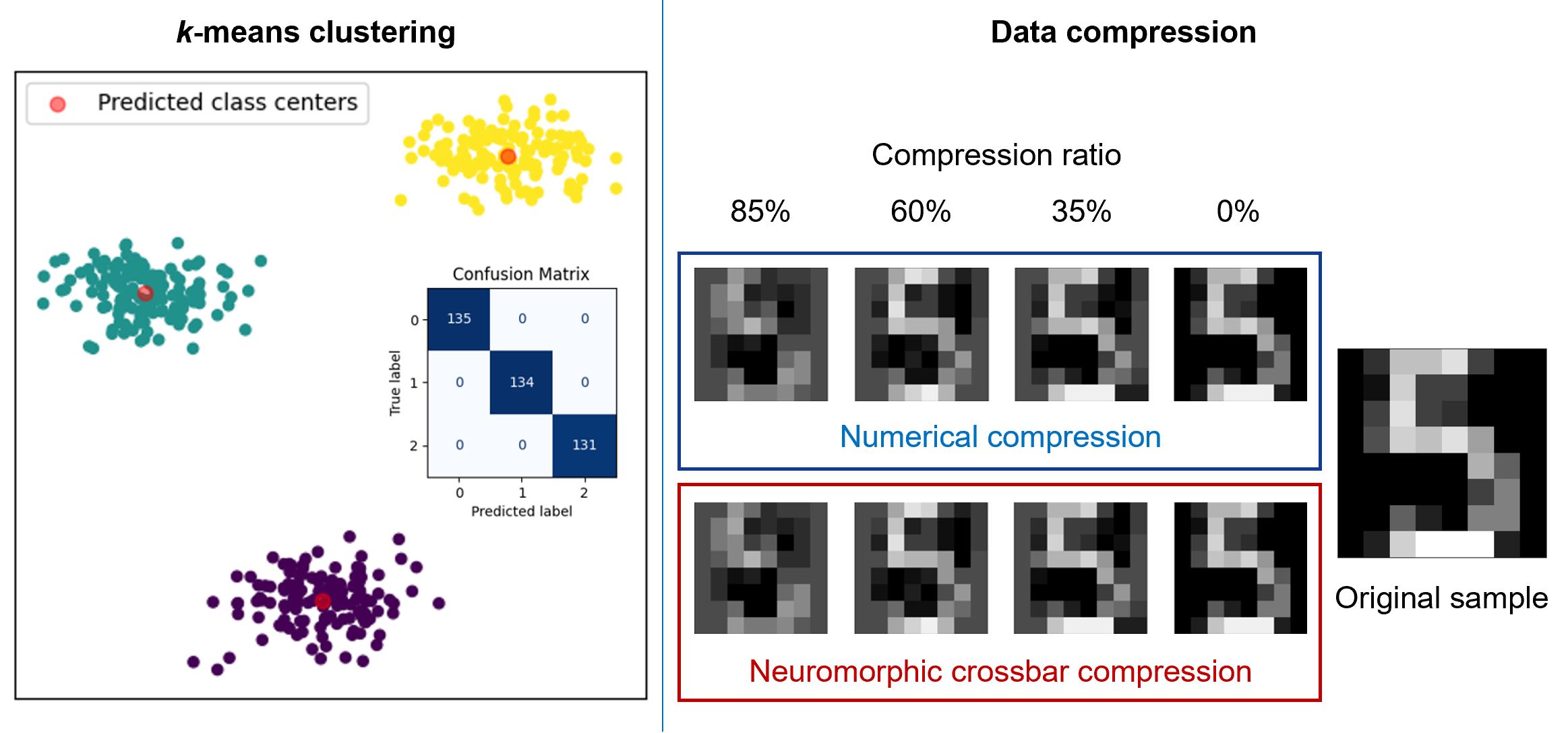

Figure 2: Examples of algorithms implemented by Helbling on neuromorphic crossbar arrays. Note the strong similarity between the exact numerical compression results and the approximate ones obtained from the neuromorphic crossbar array. Figure: Helbling and Abacus neo.


The energy cost of implementing a VMM on a CBA is very low versus that of a von Neumann device since energy is only required to impose the input word line voltages and to overcome the electrical resistance losses of the CBA.
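Under idealized assumptions (ideal wires, bit lines held at 0 V so that each junction sees its full word-line voltage, and no cost counted for DACs, ADCs or other peripheral circuits), the two energy terms in this paragraph can be written with the same symbols as Figure 1. Here t is the time for which the input voltages are applied and C_i is a hypothetical capacitance of word line i; neither symbol appears in the article, and this is a rough sketch rather than the authors' energy model.

```latex
I_j = \sum_i G_{ij}\,U_i ,
\qquad
E \approx \underbrace{\sum_i C_i\,U_i^{2}}_{\text{driving the word lines}}
\;+\; \underbrace{t \sum_i \sum_j G_{ij}\,U_i^{2}}_{\text{resistive (Joule) losses in the junctions}}
```

Neither term involves moving operands between a separate memory and processor, which is the contrast with von Neumann devices that the paragraph draws.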

Sparse data representation reduces energy requirements

The second main feature of neuromorphic computing is its time-dynamic nature and the flow of sparse, event-encoded data through spiking neural networks (SNN).

Event encoding typically involves converting a continuous signal into a train of representative short-duration analog spikes. Techniques include rate encoding, where the spike frequency is proportional to the instantaneous signal amplitude, or time-encoded spikes that are generated when a signal satisfies pre-defined thresholds. The advantages of this sparse representation are the very low power required for transmission and the ability to develop asynchronous, event-driven systems.
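The two encoding families mentioned above can be sketched in discrete time as follows. Spikes are represented as 0/1 per time step rather than analog pulses, amplitudes are assumed normalized to [0, 1], and the threshold variant shown is "send-on-delta" encoding, one common threshold scheme chosen here as an assumption since the article does not name a specific one. Function names and parameters are hypothetical.

```python
from typing import List, Tuple


def rate_encode(signal: List[float], max_rate_hz: float, dt_s: float) -> List[int]:
    """Rate encoding: spike frequency proportional to the (clipped) amplitude.

    A phase accumulator adds amplitude * max_rate_hz * dt_s each step and emits a
    spike when it reaches 1, so a constant amplitude a yields about
    a * max_rate_hz spikes per second.
    """
    if max_rate_hz * dt_s > 1.0:
        raise ValueError("at most one spike per time step: need max_rate_hz * dt_s <= 1")
    spikes, acc = [], 0.0
    for x in signal:
        acc += min(1.0, max(0.0, x)) * max_rate_hz * dt_s
        if acc >= 1.0:
            spikes.append(1)
            acc -= 1.0
        else:
            spikes.append(0)
    return spikes


def delta_encode(signal: List[float], threshold: float) -> List[Tuple[int, int]]:
    """Threshold ("send-on-delta") encoding: emit (step, +1) or (step, -1) each
    time the signal moves by `threshold` away from the last transmitted level."""
    if threshold <= 0.0:
        raise ValueError("threshold must be positive")
    events: List[Tuple[int, int]] = []
    if not signal:
        return events
    level = signal[0]
    for step, x in enumerate(signal[1:], start=1):
        while x - level >= threshold:
            level += threshold
            events.append((step, +1))
        while level - x >= threshold:
            level -= threshold
            events.append((step, -1))
    return events


print(rate_encode([0.5] * 8, max_rate_hz=1.0, dt_s=0.25))  # [0, 0, 0, 0, 0, 0, 0, 1]
print(delta_encode([0.0, 0.5, 1.0, 1.0, 0.0], threshold=0.5))  # [(1, 1), (2, 1), (4, -1), (4, -1)]
```

In the delta example the flat segment (steps 2 to 3) produces no events at all, which is where the sparsity and the low transmission power come from.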

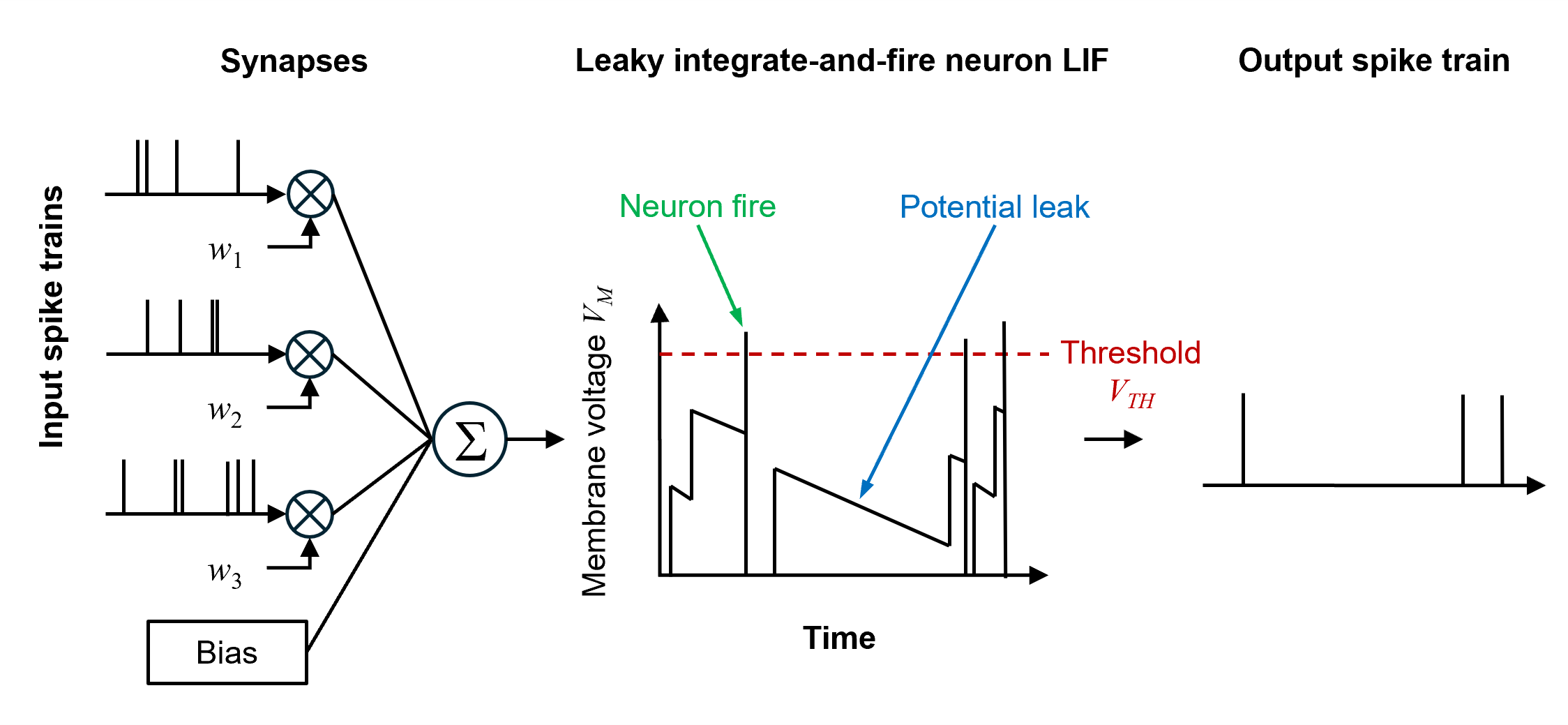

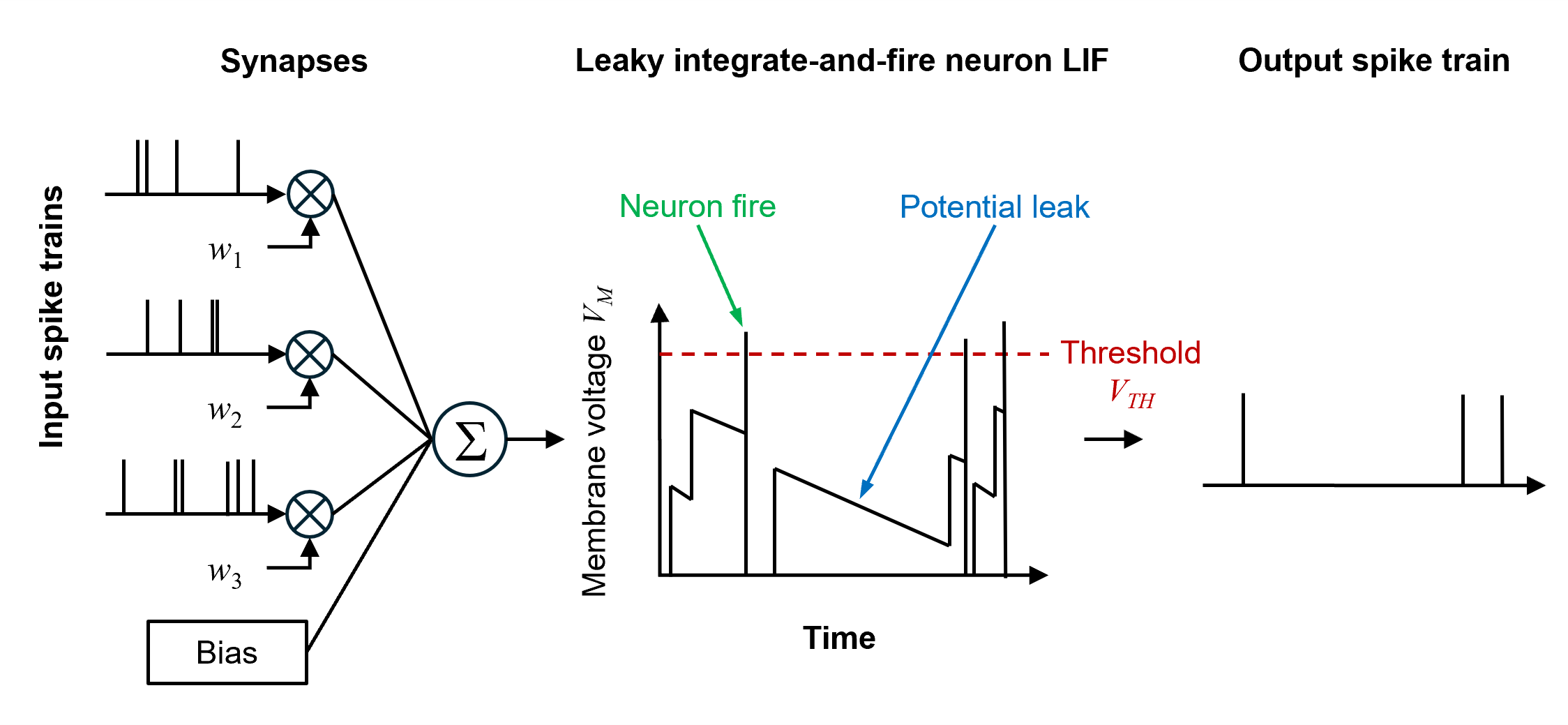

Leaky integrate-and-fire (LIF) neurons implemented at the hardware level form the basis of the SNN used in neuromorphic computing. The operation of these LIF neurons is shown in Figure 3. Essentially, the spikes entering a neuron are multiplied by the pretrained weight (w) of their respective channels. These are then integrated and added to a bias value, before being added to the instantaneous membrane potential (VM) of that neuron.

This membrane potential leaks with time as it decreases at a programmable rate, thus providing the neuron with a memory of previous spiking events. If the membrane potential is greater than a predefined threshold (VTH), the neuron fires a spike downstream before resetting to its base state to create a continuous time dynamic process.

Figure 3: Leaky integrate-and-fire neuron spike event processing. Figure: Helbling.
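The LIF behaviour described above can be sketched as a discrete-time simulation of a single neuron. This is a minimal sketch under stated assumptions, not Helbling's hardware model: the leak is modelled as a fixed fraction of the distance to the base potential lost per step, and the function name and parameter values are hypothetical.

```python
from typing import List


def lif_run(
    input_spikes: List[List[int]],
    weights: List[float],
    bias: float,
    leak: float,
    v_th: float,
    v_base: float = 0.0,
) -> List[int]:
    """Simulate one discrete-time leaky integrate-and-fire neuron.

    input_spikes[t][k] is 1 if input channel k spiked at time step t, else 0.
    Per step: the membrane potential leaks toward v_base by the fraction `leak`,
    the weighted input spikes plus the bias are added, and if the potential
    exceeds v_th the neuron emits an output spike and resets to v_base.
    """
    if not 0.0 <= leak <= 1.0:
        raise ValueError("leak must be in [0, 1]")
    v_m = v_base
    output = []
    for spikes in input_spikes:
        if len(spikes) != len(weights):
            raise ValueError("one weight per input channel is required")
        v_m = v_base + (v_m - v_base) * (1.0 - leak)                # leak
        v_m += sum(w * s for w, s in zip(weights, spikes)) + bias  # integrate
        if v_m > v_th:                                              # fire and reset
            output.append(1)
            v_m = v_base
        else:
            output.append(0)
    return output


# Hypothetical two-input neuron: weights 0.6, threshold 1.0, 50 % leak per step
pattern = [[1, 1], [1, 0], [1, 0], [1, 0]]
print(lif_run(pattern, [0.6, 0.6], bias=0.0, leak=0.5, v_th=1.0))  # [1, 0, 0, 1]
```

The single-input spikes at steps 2 and 3 only cause a firing because the potential left over from step 2 has not fully leaked away; with `leak=1.0` the neuron has no memory and the same pattern never crosses the threshold after the first step.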

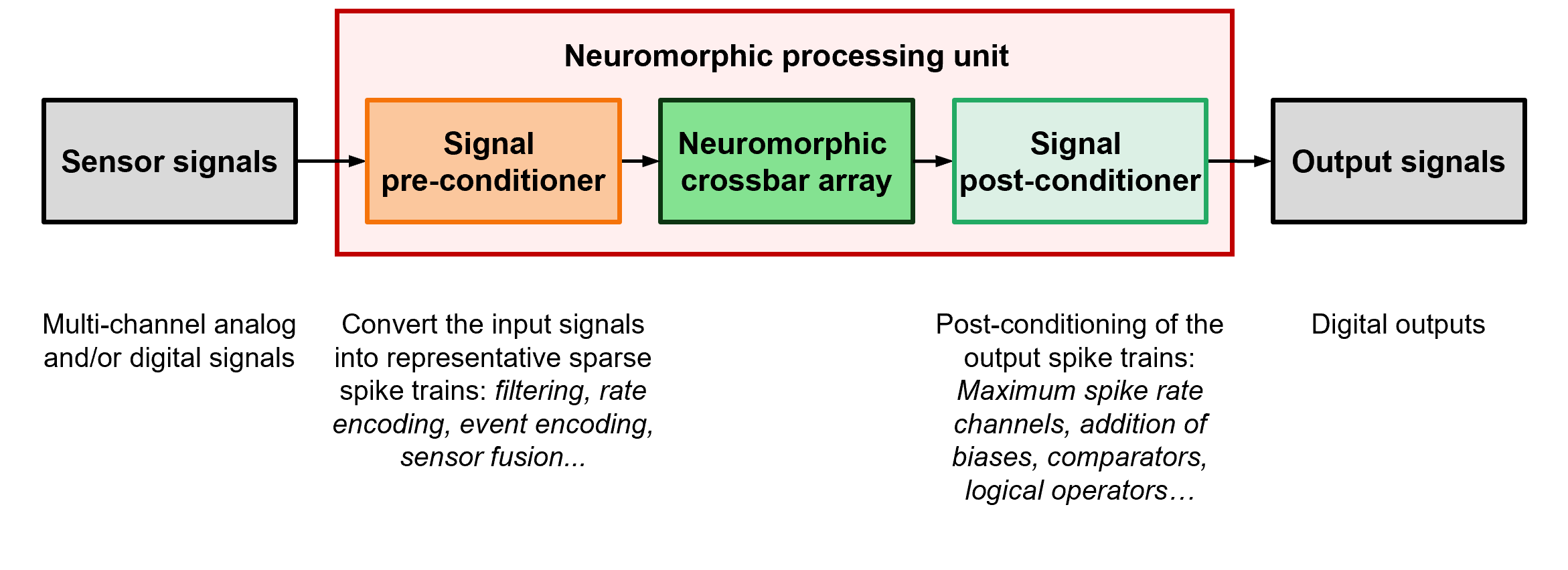

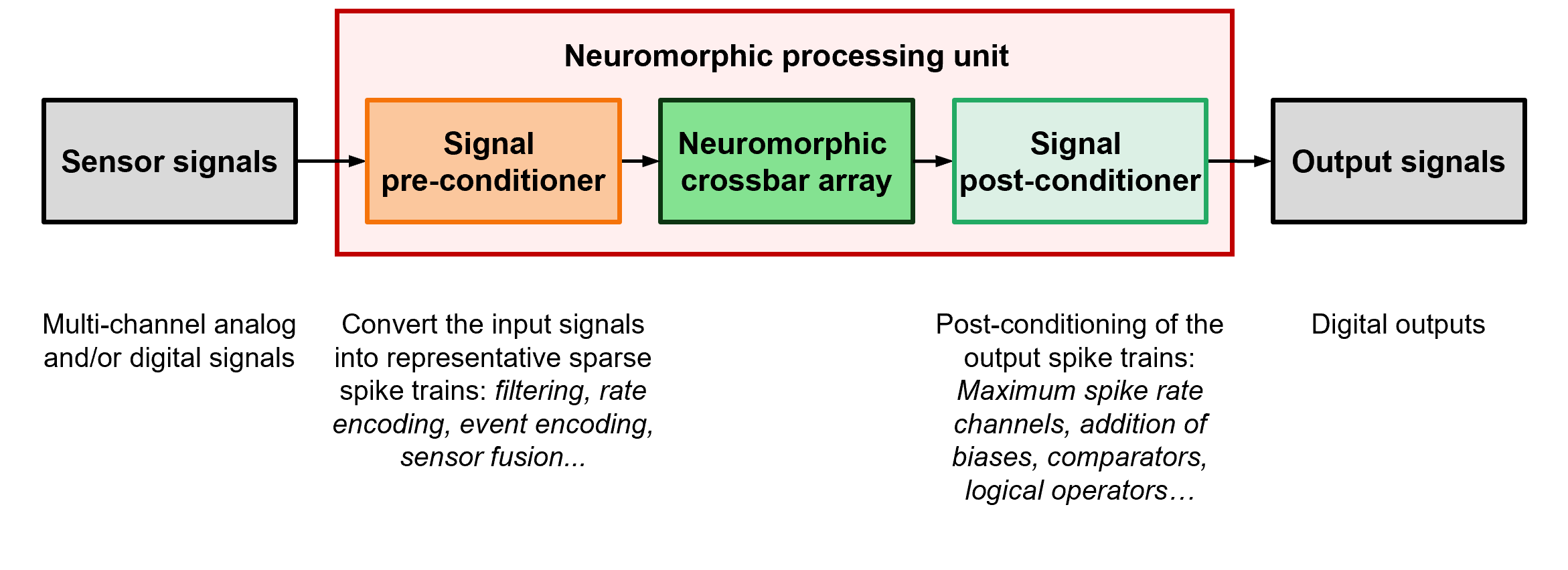

Neuromorphic elements need to be integrated into devices

In a practical neuromorphic device, the CBA is integrated into a neuromorphic processing unit (NPU) with pre- and post-signal conditioners to encode the input spikes and decode the output spikes, respectively (see Figure 4). Since the nature of these signal conditioners greatly affects the overall performance of the system and can eliminate any energy or latency benefits gained from the use of a neuromorphic computation core, their choice is critical to the overall comparative effectiveness of a neuromorphic solution. For example, the energy consumption and latency of typical microcontrollers are much higher than for a CBA, thus limiting their suitability. Ideally, input spike generation should be purely analog or performed on the sensors before application to the input word lines of the CBA. An interesting solution is the implementation of sensor fusion during pre-conditioning to reduce the dimensionality of the combined multi-sensor inputs, thus only processing features of relevance to the application. This is particularly beneficial when the number of input word lines of the CBA is limited.

Figure 4: Neuromorphic processing unit components. Figure: Helbling.
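The article does not say how the sensor-fusion step would be implemented. One common way to reduce the dimensionality of correlated multi-sensor data is principal component analysis (PCA); the sketch below uses it purely as a stand-in, in plain Python with power iteration and deflation, to map several redundant sensor channels onto fewer values that could then be spike-encoded and applied to a limited number of word lines. The choice of PCA, the function names and the example data are all assumptions for illustration.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def fit_pca(samples: List[Vector], k: int, iters: int = 200, seed: int = 0) -> Tuple[Vector, List[Vector]]:
    """Return per-channel means and the top-k principal directions.

    Builds the sample covariance matrix, finds its dominant eigenvector by power
    iteration, then removes that direction (deflation) to find the next one.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    d = len(samples[0])
    if not 1 <= k <= d:
        raise ValueError("k must be between 1 and the number of channels")
    means = [sum(s[c] for s in samples) / n for c in range(d)]
    centered = [[s[c] - means[c] for c in range(d)] for s in samples]
    cov = [[sum(x[a] * x[b] for x in centered) / (n - 1) for b in range(d)] for a in range(d)]
    rng = random.Random(seed)
    components: List[Vector] = []
    for _ in range(k):
        v = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break  # no variance left; keep the current unit vector
            v = [x / norm for x in w]
        # Rayleigh quotient gives the eigenvalue; subtract this direction from cov
        lam = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d)) for a in range(d))
        cov = [[cov[a][b] - lam * v[a] * v[b] for b in range(d)] for a in range(d)]
        components.append(v)
    return means, components


def fuse(sample: Vector, means: Vector, components: List[Vector]) -> Vector:
    """Project one multi-sensor sample onto the k retained directions."""
    centered = [x - m for x, m in zip(sample, means)]
    return [sum(c * x for c, x in zip(comp, centered)) for comp in components]


# Hypothetical example: three redundant sensor channels reduced to one input
readings = [[t, 2.0 * t, -t] for t in (-2.0, -1.0, 0.0, 1.0, 2.0)]
means, comps = fit_pca(readings, k=1)
print(fuse([2.0, 4.0, -2.0], means, comps))  # about +/-4.899 (2 * sqrt(6)); the sign is arbitrary
```

Here three input word lines' worth of data collapse to one fused value with no information lost, because the example channels are perfectly correlated; with real sensors the retained components would capture only part of the variance.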


Summary: Neuromorphic computing has untapped potential for future technologies

Due to its inherent features, neuromorphic computing offers major advantages over traditional digital electronic devices with von Neumann architectures, in particular very low computation latencies and ultra-low energy requirements. However, because approaching problems requires a new engineering mindset and the community lacks knowledge of the relevant technologies, the full potential of neuromorphic computing has yet to be leveraged. Helbling experts from various disciplines have therefore studied the topic intensively and believe that it will be a decisive factor in future MedTech and system monitoring applications. With this expertise and its intensive partnership with Abacus neo, Helbling is positioning itself as an important industry partner and trailblazer.

Authors: Navid Borhani, Matthias Pfister


Our projects | Abacus neo in Frankenthal

Abacus neo turns business ideas into reality: We are a young company and our portfolio is growing. Take a look at our examples!


Neuromorphic computing enables ultra-low power edge devices



Options for the central component of the crossbar arrays

Currently, the CBA synapses are fabricated either from analog memristors, whose conductance can only be programmed approximately within a limited continuous range, or from a collection of CMOS transistors that can be set to provide constant, quantized conductance values. The critical shortcoming of the former is that the set conductance values drift over time. In both cases, since the conductance matrix can only be defined approximately, crossbar arrays are limited to approximate computation tasks such as qualitative classification, lossy compression, and convolutional filtering (see Figure 2).
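To see why only approximate tasks are a good fit, the sketch below compares an exact VMM with one computed from quantized conductances (a stand-in for CMOS synapses with a fixed number of levels) and from uniformly drifted conductances (a stand-in for memristor drift). Both models are deliberately simple assumptions: real drift is neither uniform nor a single scale factor, and the conductance values, level counts and function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def vmm(u: List[float], g: Matrix) -> List[float]:
    """Ideal crossbar output: I[j] = sum_i U[i] * G[i][j]."""
    return [sum(u[i] * g[i][j] for i in range(len(u))) for j in range(len(g[0]))]


def quantize(g: Matrix, g_max: float, levels: int) -> Matrix:
    """Snap each conductance to the nearest of `levels` evenly spaced values in
    [0, g_max]. Python's round() sends exact halves to the even neighbour."""
    if levels < 2:
        raise ValueError("need at least two conductance levels")
    step = g_max / (levels - 1)
    return [[min(levels - 1, max(0, round(x / step))) * step for x in row] for row in g]


def drift(g: Matrix, factor: float) -> Matrix:
    """Hypothetical drift model: every conductance scaled by the same factor."""
    return [[x * factor for x in row] for row in g]


def max_rel_error(exact: List[float], approx: List[float]) -> float:
    """Largest relative deviation over the outputs with a nonzero exact value."""
    return max(abs(a - e) / abs(e) for e, a in zip(exact, approx) if e != 0.0)


# Hypothetical target conductances (normalized so g_max = 1) and input voltages
G = [[0.3, 0.7], [0.55, 0.1]]
U = [1.0, 1.0]
exact = vmm(U, G)
for levels in (3, 16, 256):
    err = max_rel_error(exact, vmm(U, quantize(G, 1.0, levels)))
    print(f"{levels:>3} levels: max relative error {err:.3f}")
print(f"10% drift: max relative error {max_rel_error(exact, vmm(U, drift(G, 0.9))):.3f}")
```

More levels shrink the quantization error but never remove it, and drift adds an error that grows over time, so the outputs are best treated as approximations, which suits classification or lossy compression better than exact arithmetic.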

