Hi

@Investig8ed

This previous post of mine gives a pretty good rundown on the wafer / chip process and costs.

Great point Excellent point, So I'll sit back and wait for future positive announcements to roll thru 24

thestockexchange.com.au

This post from Sept 2023, from a Chinese tech company by the looks, gives a pretty good rundown of the process and approx. costs.

www.linkedin.com

Talk about Chip Design, Tape-out, Verification, Manufacturing, and Cost

YM Innovation Technolgy (Shenzhen)Co.,Ltd

Published Sep 22, 2023

Let’s talk about chip design, tape-out, verification, manufacturing, and cost.

Wafer Terminology

1. Chip, die, device, circuit, microchip, or bar: all of these terms refer to the microchip patterns that cover most of the wafer surface;

2. Scribe line (saw line) or street (avenue): the spaces that separate the chips on the wafer. Scribe lines are usually blank, but some companies place alignment marks or test structures in them;

3. Engineering die and test die: these differ from the regular product or circuit die. They contain special devices and circuit blocks used for electrical testing of the wafer fabrication process;

4. Edge die: partial die at the edge of the wafer, where the pattern is incomplete, are wasted area. Larger die sizes increase this edge waste, which is offset by using larger-diameter wafers. One of the forces driving the semiconductor industry toward larger-diameter wafers is reducing the share of area taken up by edge die;

5. Wafer crystal plane: the cross-section in the figure shows the crystal lattice under the devices. The die edges are aligned to the lattice orientation, as shown in the figure;

6. Wafer flats/notches: the wafer shown in the figure has a major flat and a minor flat, indicating a P-type <100> crystal orientation. Both 300mm and 450mm diameter wafers use a notch as the crystal orientation mark. These flats and notches also assist wafer alignment in some fabrication steps.
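The edge-die point in item 4 can be put in rough numbers. This is a minimal sketch, assuming the common gross-die-per-wafer approximation (wafer area divided by die area, minus a perimeter correction). The formula and the 10 mm x 10 mm die are assumptions for illustration and are not from the article; real counts also depend on scribe width, edge exclusion, and die aspect ratio.

```python
import math


def _terms(wafer_diameter_mm: float, die_area_mm2: float) -> tuple[float, float]:
    """Return (area term, edge-loss term) of the approximation, both in dies."""
    area_term = math.pi * (wafer_diameter_mm / 2) ** 2 / die_area_mm2
    edge_term = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return area_term, edge_term


def gross_die_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Approximate whole dies per wafer (ignores scribe lines and edge exclusion)."""
    area_term, edge_term = _terms(wafer_diameter_mm, die_area_mm2)
    return int(area_term - edge_term)


def edge_loss_fraction(wafer_diameter_mm: float, die_area_mm2: float) -> float:
    """Share of the ideal die count that is lost to partial die at the edge."""
    area_term, edge_term = _terms(wafer_diameter_mm, die_area_mm2)
    return edge_term / area_term


# Hypothetical 100 mm^2 die on 200 mm and 300 mm wafers.
for diameter in (200, 300):
    dies = gross_die_per_wafer(diameter, 100.0)
    loss = edge_loss_fraction(diameter, 100.0)
    print(f"{diameter} mm wafer: ~{dies} dies, edge loss ~{loss:.1%}")
```

Under this approximation the edge-loss share falls as the wafer diameter grows, which is the effect the article describes.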

Chip Tape-out Method (Full Mask, MPW)

Full Mask and MPW are both tape-out methods for integrated circuits (tape-out means handing the finished design over for manufacturing). Full Mask means that every mask in the manufacturing process serves a single design. MPW stands for Multi Project Wafer: multiple projects share one wafer, so a single manufacturing run carries several IC designs.

1. Full Mask: one wafer can yield thousands of dies, which are then packaged into chips, so Full Mask supports large-volume customer demand.

2. MPW: multiple integrated circuit designs that use the same process are taped out on the same wafer. After manufacturing, each design gets dozens of chip samples, which is enough for experiments and testing at the prototype stage. This approach can reduce tape-out fees by 90%-95%, which greatly reduces the cost of chip development.
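To get a feel for the 90%-95% saving, here is a minimal sketch. The US$900,000 input is borrowed from the 40nm mask figure quoted later in the article and is used only as an illustrative assumption; the function name is hypothetical.

```python
def mpw_cost_range(full_mask_cost: float,
                   saving_pct: tuple[int, int] = (90, 95)) -> tuple[float, float]:
    """Return (low, high) MPW tape-out cost for the quoted 90%-95% saving."""
    low_saving, high_saving = saving_pct
    high_cost = full_mask_cost * (100 - low_saving) / 100   # only 90% saved
    low_cost = full_mask_cost * (100 - high_saving) / 100   # 95% saved
    return low_cost, high_cost


# Assumption: a full mask set at US$900,000 (the article's 40nm upper figure).
low, high = mpw_cost_range(900_000)
print(f"MPW estimate: US${low:,.0f} to US${high:,.0f}")
```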

The wafer fab offers several fixed MPW slots every year, called Shuttles, which depart on schedule (a fitting name). Since different companies share the wafer, there have to be rules. MPW area is booked by SEAT, and a SEAT is generally a 3mm * 4mm area. To make sure different chip companies can all take part, fabs generally limit the number of SEATs each company can reserve (in any case, booking many SEATs pushes the cost up and defeats the purpose of MPW). The advantage of MPW is the low production cost, usually only a few hundred thousand, which greatly reduces risk. Note that from a production perspective MPW is still a complete manufacturing run, so it is time-consuming: one MPW generally takes 6 to 9 months, which delays chip delivery.
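As a rough illustration of SEAT booking, the sketch below counts how many 3mm * 4mm SEATs a die would need by area alone. This is an assumption for illustration: the article does not say how fabs tile SEATs, and the die sizes are made up.

```python
import math

SEAT_AREA_MM2 = 3 * 4  # one SEAT, 3 mm x 4 mm as described above


def seats_needed(die_width_mm: float, die_height_mm: float) -> int:
    """Area-based lower bound on SEATs; ignores how the fab actually tiles them."""
    return math.ceil(die_width_mm * die_height_mm / SEAT_AREA_MM2)


# Hypothetical die sizes.
for width, height in ((2, 2), (3, 4), (5, 5)):
    print(f"{width} mm x {height} mm die -> {seats_needed(width, height)} SEAT(s)")
```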

Because the wafer is shared, the number of chips obtained through MPW is very limited. They are mainly used for the chip company's internal verification and testing, and may also be given to a very small number of key customers. By now it should be clear that MPW is not a full production run and cannot be used for mass production.

Chip ECO Process

ECO stands for Engineering Change Order. An ECO can happen before, during, or after tape-out. For an ECO after tape-out, small changes may need only a few metal layers changed, while large changes may touch more than a dozen metal layers or even require a new tape-out. The implementation process of ECO is shown in the figure.

If an MPW or Full Mask chip is found to have functional or performance defects, ECO is used to make small-scale adjustments to the circuit and the standard cell placement. The optimization is kept small so that the original placement and routing results stay basically unchanged, the remaining violations are repaired, and the chip finally reaches the sign-off standard. These violations are not fixed by re-running back-end placement and routing (going through that flow again is too time-consuming); instead, timing, DRC, DRV, and power consumption are optimized through the ECO process.

Tape-out Corner

1. Corner

Chip manufacturing is a physical process with process deviations (doping concentration, diffusion depth, etching degree, and so on), so results differ between batches, between wafers in the same batch, and between chips on the same wafer.

The average carrier drift velocity cannot be identical at every point on a wafer, and device characteristics also change with voltage and temperature. To classify this PVT (Process, Voltage, Temperature) variation, the process is divided into corners:

TT: Typical N Typical P

FF: Fast N Fast P

SS: Slow N Slow P

FS: Fast N Slow P

SF: Slow N Fast P

The first letter represents NMOS and the second letter represents PMOS, reflecting different N-type and P-type doping concentrations. NMOS and PMOS are made independently in the process and do not affect each other, but in a circuit they work at the same time. When NMOS is fast or slow, PMOS can also be fast or slow, which gives four cases: FF, SS, FS, and SF. Device speed is set by adjusting the process implant, and different levels of FF and SS are defined according to the size of the deviation. Under normal circumstances most devices are TT, and the five corners above cover about 99.73% of the range at +/-3 sigma. This randomness follows a normal distribution.
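The 99.73% figure is just the share of a normal distribution that lies within +/-3 sigma. A minimal sketch to check it, with the corner table included for reference (the dictionary layout and function name are my own, not from the article):

```python
import math

# Corner name -> (NMOS speed, PMOS speed), as listed above.
CORNERS = {
    "TT": ("typical", "typical"),
    "FF": ("fast", "fast"),
    "SS": ("slow", "slow"),
    "FS": ("fast", "slow"),
    "SF": ("slow", "fast"),
}


def coverage_within(k_sigma: float) -> float:
    """Fraction of a normal distribution within +/- k_sigma of the mean."""
    return math.erf(k_sigma / math.sqrt(2))


for name, (nmos, pmos) in CORNERS.items():
    print(f"{name}: NMOS {nmos}, PMOS {pmos}")

print(f"Coverage at +/-3 sigma: {coverage_within(3):.2%}")
```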

2. The significance of corner wafers

During an engineering tape-out, the FAB will pilot-run (pi-run) key layers to adjust for inline variation, and some will also run backup wafers to make sure the devices on the shipped wafers are on target, that is, near the TT corner. If you only want some samples from an engineering tape-out, you do not need to verify the corners, but if you are preparing for mass production you must consider them. Because the process deviates during production and the corners are an estimate of the line's normal fluctuation, the FAB will also require corner verification for mass-produced chips. Corners must therefore be met at the design stage: the circuit is simulated at each corner and at the temperature extremes so that it works normally in all of them, which is what gives the final chip a high yield.
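Simulating "under various corners and extreme temperature conditions" usually means sweeping a full PVT matrix. A minimal sketch of building that list is below; the supply voltages and temperatures are hypothetical placeholders, not values from the article, and the real set comes from the process design kit and the product spec.

```python
from itertools import product

PROCESS_CORNERS = ("TT", "FF", "SS", "FS", "SF")
SUPPLY_VOLTAGES = (1.08, 1.20, 1.32)  # hypothetical: 1.2 V nominal, +/-10%
TEMPERATURES_C = (-40, 25, 125)       # hypothetical temperature extremes


def pvt_matrix() -> list[dict]:
    """Return every process/voltage/temperature combination to simulate."""
    return [
        {"process": p, "vdd": v, "temp_c": t}
        for p, v, t in product(PROCESS_CORNERS, SUPPLY_VOLTAGES, TEMPERATURES_C)
    ]


runs = pvt_matrix()
print(f"{len(runs)} simulation runs, first: {runs[0]}")
```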

3. Corner split table strategy for products

The corners usually sit on the spec limits. Under normal circumstances the spec window spans 6 sigma. For example, FF2 (or 2FF) means 2 sigma in the fast direction, and SS3 (or 3SS) means 3 sigma in the slow direction. Sigma here mainly represents the fluctuation of Vt: the larger the fluctuation, the larger the sigma. The 3-sigma points lie on the spec limits of the process device. Exceeding them slightly is acceptable, because inline variation cannot land exactly on the spec.
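The FF2 / 2FF / SS3 / 3SS naming is easy to decode mechanically. A minimal sketch of a parser, assuming single-digit sigma values and only the FF and SS labels mentioned above (the function name is hypothetical):

```python
import re

# Accepts either "<digit><corner>" (e.g. 3SS) or "<corner><digit>" (e.g. FF2).
_LABEL = re.compile(r"^(?:(\d)(FF|SS)|(FF|SS)(\d))$")


def parse_split_label(label: str) -> tuple[str, int]:
    """Return (direction, sigma) for a split label such as 'FF2' or '3SS'."""
    match = _LABEL.match(label.strip().upper())
    if match is None:
        raise ValueError(f"unrecognised split label: {label!r}")
    sigma = int(match.group(1) or match.group(4))
    corner = match.group(2) or match.group(3)
    direction = "fast" if corner == "FF" else "slow"
    return direction, sigma


for label in ("FF2", "2FF", "SS3", "3SS"):
    print(label, "->", parse_split_label(label))
```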

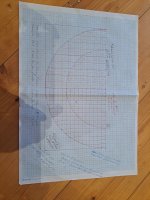

The following is an example of a 55nm Logic process chip and the proposed corner split table:

①#1 & #2: two pilot wafers, one for blind packaging and one for CP measurement;

②#3 & #4: two wafers held at Contact, reserved as engineering wafers for later revisions, which saves ECO tape-out time;

③#5~#12: eight wafers held at Poly, waiting for the pilot results to decide whether device speed needs adjusting, and used to verify the corners;

④Besides leaving enough chips for testing, verification, and metal fix, reserve as many wafers as possible for mass production and shipment, according to project requirements.
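The split table above can be written down as data, which makes it easy to look up what a given wafer is for. A minimal sketch covering items ① to ③ only; the field names are my own, and the article does not give a lot size, so wafers beyond #12 are left out.

```python
# Wafer split plan for the 55nm example: wafer ranges use Python's half-open range().
SPLIT_PLAN = [
    {"wafers": range(1, 3), "hold_at": None,
     "purpose": "pilot: one for blind packaging, one for CP measurement"},
    {"wafers": range(3, 5), "hold_at": "Contact",
     "purpose": "engineering wafers reserved for later revisions (ECO)"},
    {"wafers": range(5, 13), "hold_at": "Poly",
     "purpose": "wait for pilot result, adjust device speed, verify corners"},
]


def plan_for(wafer_id: int):
    """Return the plan entry for a wafer number, or None if it is not listed."""
    for entry in SPLIT_PLAN:
        if wafer_id in entry["wafers"]:
            return entry
    return None


for wafer_id in (1, 4, 9):
    entry = plan_for(wafer_id)
    print(f"#{wafer_id}: hold at {entry['hold_at']}, {entry['purpose']}")
```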

4. Confirm the Corner result

First, most results should fall inside the window defined by the four corners. A large deviation may indicate a process shift. If the yield at every corner is unaffected and meets expectations, the process window is sufficient. If yield is low under particular conditions, the process window needs adjusting. The purpose of corner wafers is to verify the design margin and check for yield loss. In general, chips outside the performance range bounded by the corners are scrapped.

Corner verification is benchmarked against WAT test results and is generally led by the FAB, but the cost of the corner wafers is borne by the design company. With a mature and stable process, the parameters of chips on the same wafer, in the same batch, and even across batches are generally very close, and the range of deviation is relatively small. Process corners (PVT: Process, Voltage, Temperature): unlike bipolar transistors, MOSFET parameters vary considerably between wafers and between batches.

To ease the circuit designer's task to some extent, process engineers must keep device performance within a certain range. In general, they control the expected parameter variation strictly by scrapping chips that fall outside this range.

①MOS transistor speed tracks the threshold voltage: fast corresponds to a low threshold and slow to a high threshold. GBW = gm/Cc. All else being equal, the lower the Vth, the higher the gm, so the larger the GBW and the faster the device. (Specific cases need their own analysis.)

②Resistor speed: fast corresponds to a small sheet resistance, and slow to a large sheet resistance.

③Capacitor speed: fast corresponds to the smallest capacitance, and slow to the largest capacitance.
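To see how the GBW = gm/Cc relation in ① plays out, here is a minimal sketch. The gm and Cc values are hypothetical, picked only to show the direction of the effect; gm/Cc gives GBW in rad/s, so the sketch also divides by 2*pi to report Hz.

```python
import math


def gbw_rad_per_s(gm_siemens: float, cc_farads: float) -> float:
    """Gain-bandwidth product GBW = gm / Cc, in rad/s."""
    return gm_siemens / cc_farads


def gbw_hz(gm_siemens: float, cc_farads: float) -> float:
    """Same quantity expressed in Hz."""
    return gbw_rad_per_s(gm_siemens, cc_farads) / (2 * math.pi)


CC = 1e-12  # hypothetical 1 pF compensation capacitor

# Hypothetical gm values: lower Vth (fast corner) gives higher gm.
for corner, gm in (("slow", 0.8e-3), ("typical", 1.0e-3), ("fast", 1.2e-3)):
    print(f"{corner}: GBW ~ {gbw_hz(gm, CC) / 1e6:.0f} MHz")
```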

Tape-out Cost and Wafer Price

A 40nm tape-out mask set costs about US$800,000-900,000, and wafers cost about US$3,000-4,000 each. Including IP merge, the total is at least seven to eight million yuan.

A tape-out on the 28nm process costs US$2 million;

A tape-out on the 14nm process costs US$5 million;

A tape-out on the 7nm process costs US$15 million;

A tape-out on the 5nm process costs US$47.25 million each time;

A tape-out on the 3nm process may cost hundreds of millions of dollars.

Of the two main tape-out costs, masks and wafers, masks are the more expensive.

The more advanced the process node, the more mask layers are required. Each mask layer corresponds to one round of photoresist coating, exposure, development, etching, and other steps, with material costs and equipment depreciation, and these costs are paid by the fabless customer!

The 28nm process requires about 40 masks;

The 14nm process requires 60 masks;

The 7nm process requires 80 or even hundreds of masks.

One mask layer costs US$80,000, so the chip must be mass-produced to bring the cost down!
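Multiplying the layer counts by the flat US$80,000 per layer gives a quick mask-set estimate. A minimal sketch, applying that one figure uniformly to every node, which is a simplification on my part; the results do not line up exactly with the per-node tape-out totals quoted above, so treat the flat figure as a rough rule of thumb.

```python
COST_PER_MASK_USD = 80_000  # flat per-layer figure quoted in the article

# Layer counts quoted in the article (lower bound for 7nm).
MASK_LAYERS = {"28nm": 40, "14nm": 60, "7nm": 80}


def mask_set_cost(layers: int, cost_per_mask: int = COST_PER_MASK_USD) -> int:
    """Estimated mask-set cost in US dollars: layers times cost per layer."""
    return layers * cost_per_mask


for node, layers in MASK_LAYERS.items():
    print(f"{node}: {layers} layers -> US${mask_set_cost(layers):,}")
```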

Take the 40nm MCU process as an example: if 10 wafers are produced, the cost per wafer is (900,000 + 4,000 * 10) / 10 = US$94,000; if 10,000 wafers are produced, the cost per wafer is (900,000 + 4,000 * 10,000) / 10,000 = US$4,090. The larger the wafer volume, the cheaper each wafer becomes, and different manufacturers give different quotations.
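The amortisation in the 40nm example is simple to reproduce for any volume. A minimal sketch using the article's figures (US$900,000 mask set, US$4,000 per wafer); the function name and the extra volumes in the loop are my own.

```python
def cost_per_wafer(mask_cost: float, wafer_cost: float, wafers: int) -> float:
    """Mask cost spread over the run, plus the per-wafer cost."""
    if wafers <= 0:
        raise ValueError("wafers must be a positive integer")
    return (mask_cost + wafer_cost * wafers) / wafers


# 40nm example figures from the article, at a few volumes.
for volume in (10, 100, 1_000, 10_000):
    per_wafer = cost_per_wafer(900_000, 4_000, volume)
    print(f"{volume:>6} wafers: US${per_wafer:,.0f} per wafer")
```

As volume grows, the per-wafer figure approaches the bare US$4,000 wafer cost, since the mask set is a one-off expense.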

The latest quotation from TSMC this year: US$19,865 per wafer for the most advanced 3nm process, equivalent to about RMB 142,000.