Learning

Learning to the Top 🕵️‍♂️

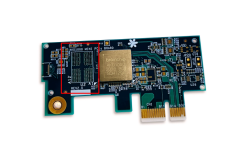

That looks like the Akida 1000 PCIe Board to me?

Does anyone have zooming software?

EDGX | Home

We build the world’s fastest edge computers for satellites, empowering constellations to process data onboard and act instantly. No downlink delays. No wasted bandwidth. Just pure performance in orbit.

#edgx #space #spacetech #innovation #ai #neuromorphic #dpu #obdp #edhpc | Nick Destrycker

🚀 Exciting News 🚀 EDGX emerging from the shadows to join the SpaceTech Revolution! 🛰️ Thrilled to announce the official launch of EDGX, a brand-new spacetech company pioneering the future of onboard AI technology in space. 🛰️ At EDGX, we envision a world where intelligent space systems play a...
