rgupta

Regular

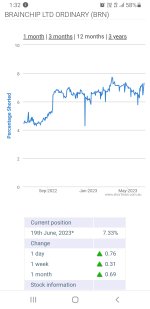

To me nothing is changing but the style of management.

I've read lots of posts over the last couple of weeks about the VERY depressed share price. Some posters are well underwater, at least one has had to postpone planned retirement and others have lamented the SP fall since we have been in the ASX200, presumably because it exposed us to the machinations of the large institutions (aka the shorters).

I guess I'm one of the lucky ones who bought in early - I'm still 200+% up on my average purchase price. Does that give me any consolation? Frankly no. I've made it clear before that I am very disappointed in the way management has communicated with shareholders and the market in general. If I was a suspicious person I'd probably be thinking by now that they are hiding something.

That said, I am still a firm believer in the technology created by Peter and Anil and therefore willing to hold until the end of this year. By then I will have been on board for over 7 years and believe I should be seeing some solid progress towards a sustainable enterprise. The sort of progress that the market is looking for - more genuine customers (not just "partners") and some real royalty revenue. Having been in the IT world for a fair portion of my working life I understand that the introduction of radical new technologies takes time. I'm just wondering now if we got ahead of ourselves a bit here. Would we have been better remaining private for longer and developing a solution that a customer was actually ready for? Guess we'll never know...

FWIW and DYOR

While LDN was interested in playing into the hands of big players, Sean wants to create big players.

If we understand the technology, we should also understand the value of an ecosystem.

Before Sean we had two IP contracts, but where was the ecosystem? So to me Sean wants to prove himself by the work he is doing rather than just give a benefit to day traders.

If you believe in the technology, then hold; if you don't believe in the technology, then go.

To me the message is loud and clear from Sean. No more artificial pumps or dumps, but stick to the basics and have patience.

DYOR