Check out this article dated 18 September 2022, entitled "Mercedes-Benz Boasts Automated Driving Redundancy Strategy, Elon Should See It".

Here are two extracts I found particularly interesting, with the entire article following.

Elon should see it, he-he-he!!!

Extract 1

Extract 2

Earlier this year, in March, Mercedes-Benz announced that it will accept liability if the new S-Class crashes while driving on the company’s Drive Pilot. The system was demonstrated in its automated valet parking mode during the same month and later offered in production cars starting in May 2022. Now, the brand underlined its redundancy strategy.

Mercedes-Benz Boasts Automated Driving Redundancy Strategy, Elon Should See It


18 Sep 2022, 20:04 UTC · by Sebastian Toma

Since December 2021, Mercedes-Benz's Drive Pilot has been the world’s first internationally certified SAE (Society of Automotive Engineers) Level 3 driving system. Initially, it was only available as an option for the EQS and the S-Class in Germany, but its availability was extended to other markets later this year, including the U.S., and its certification level has yet to be matched by any automaker.

Mercedes-Benz is proud of this, as one could imagine, and the company states that it has been possible thanks to its safety-focused approach to system design.

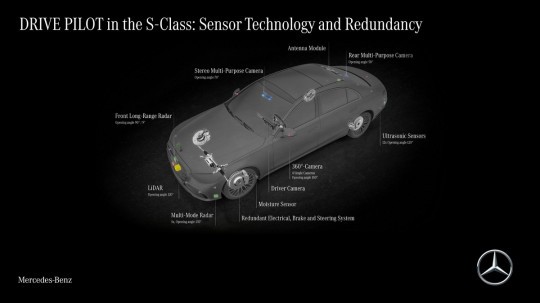

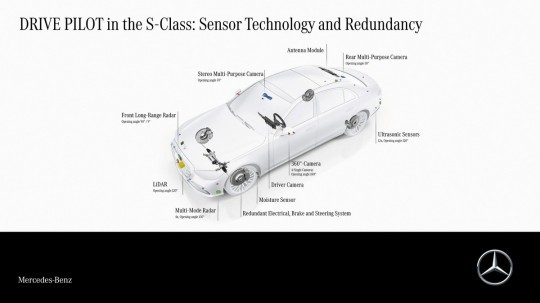

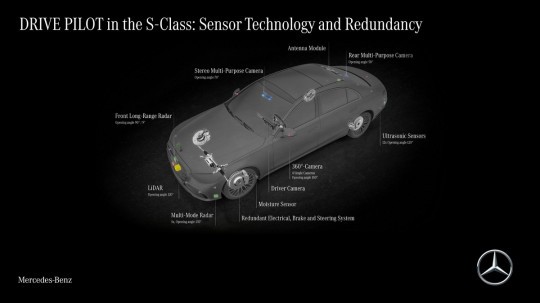

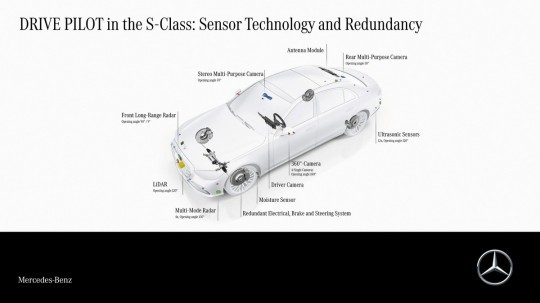

The German marque underlines the use of physical and functional redundancy for steering, braking, power supply, and some parts of the sensor system. In other words, the vehicles that have the system come with backup solutions that work seamlessly when something goes wrong.

For example, all the sensors used for the steering motor, battery, and wheel speed are duplicated for good measure, and the algorithms that make them work have been adapted accordingly. Redundancies in the sensor system have been achieved by blending optical sensors, referred to as cameras, with radio sensors and LiDAR.

There are even moisture sensors, microphones, and ultrasound sensors that deliver data that even human drivers might not have access to. After all, you have no idea what the outside humidity is like when you drive, but these sensors will know all about it.

Thanks to a suite of computers, the system will never be tired and will always score maximum points at its reaction times, unlike a human driver.

The entire system involves over 30 sensors to make sure that reliable driving is possible with the setup, and we cannot wait to test it out in the real world against other systems that claim to do something similar. Who knows, maybe a large technology supplier might organize a face-off with, say, Tesla’s Autopilot.

Yes, we did it, we mentioned Tesla, and we did that because the American company cannot pride itself on the same certification as Mercedes-Benz painstakingly obtained for its system.

Moreover, Tesla vehicles do not come with LiDAR sensors, so they rely on optical ones, along with a few other sensors, to keep the vehicle on the road. We have seen teardowns of Tesla vehicles, and there is no sign of sensor redundancy strategies.

Coming back to the ‘Benz, the Drive Pilot system has a few limitations, such as velocity, as well as the kind of roads that it can operate on. For example, a Mercedes-Benz with Drive Pilot can have its driver switch the system on to drive the vehicle on highways at speeds of up to 37 mph (ca. 60 kph) when heavy traffic or traffic jams are observed.

As Mercedes-Benz explains, if one part of the system fails, the rest are prepared to help the driver make a safe handover. If the driver does not respond to the handover request, the vehicle is capable of safely coming to a slow and steady stop that does not pose a risk to traffic following behind. The vehicle’s systems then presume that a medical emergency has occurred and notify the driver that an emergency call will be made.

Having experienced an advanced Level 2 system in a Mercedes-Benz, I can say that the vehicle can figure out where it cannot stop and that it will continue to drive with its hazard lights on at a slow pace until it finds a place that it considers suitable to stop.

I did not let the vehicle call the authorities, but it did find a straight piece of road that was far enough from a corner to allow the drivers behind to avoid the vehicle that I was driving.

Returning to the Drive Pilot system, a company official noted that “the use of multiple sensors is particularly indispensable, as it helps them compensate for the situation-dependent deficits of one sensor with the characteristics of another.”

Markus Schafer, Member of the Board of Management of Mercedes-Benz Group AG and Chief Technology Officer, who is also responsible for Development and Procurement, has also noted that “relying on just one type of sensor would not meet Mercedes-Benz's high safety standards.” One can only wonder if the official was thinking of another brand in particular when he made that statement.

For example, the automated parking valet mode, which Mercedes-Benz calls Intelligent Park Pilot (written in FULL CAPS, no less), is a splendid example of SAE Level 4 autonomy, as the company describes, while the Drive Pilot system is Level 3 autonomy according to SAE.

The difference is that Level 4 can operate without a driver behind the wheel in certain conditions. For now, those are limited to parking lots with dedicated sensors, but V2X tech will improve, and the vehicles of the future will be able to park themselves while you are already in line to pick up your latte.

Mercedes-Benz prides itself on installing the technology and sensors for its Intelligent Park Pilot, or pre-installation as the company describes it, for the moment when the technology will be approved in multiple markets.

The activation might happen Over-The-Air, Tesla-style, and we must hand it to Tesla for proving that this can be done and that a manufacturer can survive by selling only electric vehicles. The latter two are things that nobody can take away from Tesla, just as nobody can take away Mercedes-Benz's title of having the world's first internationally certified SAE Level 3 system.