BRN Discussion Ongoing

- Thread starter TechGirl

Fastback6666

Regular

Interestingly, there are no large sell walls on BRN stock until around $1.195/$1.20 this morning.

With only 10 minutes to go until the open, you would expect to see some closer to the opening price.

Today may just be a softer day in the market with US futures a little red.

This is only a daily observation and does not mean much for us long-term investors.

Izzzzzzzzzzy

Regular

Announcements???

Izzzzzzzzzzy

Regular

Why tf did trading freeze for a second and then resume? ASX corruption.

Good Morning Chippers,

US Senate passed the Climate Change Bill over the weekend.

It includes US$369 billion to expedite green technologies to meet greenhouse environmental targets.

It should be a shot in the arm for companies involved in earth-saving technologies.

GO BRAINCHIP.

Wooo Hooo.

Smiling

Regards,

Esq.

Lots of smart people here; thanks for helping us newbies at the code level. I have been trying to wrap my brain around the Renesas 2-node license. Dio might have mentioned 2 nodes and 8 NPUs. Perhaps the term "node" is not working for me. The 8 NPUs are Neural Processing Units, I assume. The nodes, layers and NPUs kind of get foggy for those of us who are JavaScript/SQL programmers!

BrainChip's big advantage is tied up in the SNN architecture. I also realize that the output of Akida is often passed to another receiving function, meaning we are part of a solution, one link in a chain leading to the final message to the user. Take NASA, for example: Akida, after lying dormant (non-spiked! non-threshold!) for months, awakes to send a signal that an asteroid is approaching and might fire up the next, more energy-hungry step.

The more everyone talks about the architecture details the better in my opinion. Layers, nodes, activation functions, all that. Keep it coming, very helpful.
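For anyone coming from JavaScript/SQL, the "non-spiked, non-threshold" idea can be sketched with a generic leaky integrate-and-fire neuron. This is a textbook SNN toy model, assumed here purely for illustration; it is not Akida's actual neuron model, and the function name and the `threshold` and `leak` values are made up. It shows input being accumulated, with a spike emitted only when the potential crosses the threshold; otherwise nothing is passed downstream.

```python
def integrate_and_fire(inputs, threshold=1.0, leak=0.9):
    """Generic leaky integrate-and-fire neuron (illustrative only, not Akida's model).

    inputs: sequence of input values, one per time step.
    Returns the list of time steps at which the neuron spiked.
    """
    potential = 0.0
    spike_times = []
    for t, x in enumerate(inputs):
        potential = potential * leak + x  # leak a little, then integrate the new input
        if potential >= threshold:        # threshold crossed: emit a spike...
            spike_times.append(t)
            potential = 0.0               # ...and reset the membrane potential
        # below threshold: no spike, so nothing downstream has any work to do
    return spike_times


quiet = [0.0] * 1000                 # a long stretch with nothing happening
event = [0.0] * 1000 + [0.6, 0.6]    # then a short burst of input

print(integrate_and_fire(quiet))  # []
print(integrate_and_fire(event))  # [1001]
```

A long run of silence produces no spikes at all, which is the intuition behind event-based hardware idling at very low power until something actually happens.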

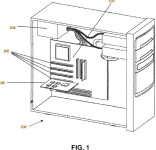

Hi Stuart,

This shows an array of 16 nodes, each with 4 NPUs.

The matrix is a representation of the layout of the nodes/NPUs in silicon.

The layers are the electrical interconnections of the NPUs.

https://www.bing.com/images/search?...tedindex=12&ajaxhist=0&ajaxserp=0&vt=0&sim=11
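To put numbers on the nodes vs NPUs question, here is a small Python sketch that treats the array as a grid of nodes with 4 NPUs each, as described for the image. The 4 x 4 arrangement of the 16 nodes, the 1 x 2 shape used for a 2-node licence, and the `NodeMesh` class are all hypothetical, chosen for illustration; they are not BrainChip's actual layout or any real API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeMesh:
    """Hypothetical model of the layout: a rows x cols grid of nodes,
    each node holding a fixed number of NPUs."""
    rows: int
    cols: int
    npus_per_node: int = 4

    @property
    def nodes(self) -> int:
        return self.rows * self.cols

    @property
    def npus(self) -> int:
        return self.nodes * self.npus_per_node

    def npu_coordinates(self):
        """Yield (row, col, npu_index) for every NPU, i.e. its place in the matrix."""
        for r in range(self.rows):
            for c in range(self.cols):
                for n in range(self.npus_per_node):
                    yield (r, c, n)


full_array = NodeMesh(rows=4, cols=4)  # 16 nodes (4 x 4 shape is an assumption)
licensed = NodeMesh(rows=1, cols=2)    # a 2-node licence (1 x 2 shape is an assumption)

print(full_array.nodes, full_array.npus)  # 16 64
print(licensed.nodes, licensed.npus)      # 2 8
```

On this reading, a 2-node licence works out to 2 x 4 = 8 NPUs, which lines up with the "2 nodes and 8 NPUs" figure mentioned earlier in the thread.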

equanimous

Norse clairvoyant shapeshifter goddess

We are in the right place at the right time.

You want to save on power and increase performance? Companies just select BRN and add it to cart.

Steve10

Regular

ARM Holdings built a name for itself via power savings. Saving power is a huge benefit.

ARM’s secret recipe for power efficient processing

There are several different companies that design microprocessors. There is Intel, AMD, Imagination (MIPS), and Oracle (Sun SPARC) to name a few. However, none of these companies is known exclusively for their power efficiency. That isn’t to say they don’t have designs aimed at power efficiency, but this isn’t their specialty. One company that does specialize in energy efficient processors is ARM. While Intel might be making chips needed to break the next speed barrier, ARM has never designed a chip that doesn’t fit into a predefined energy budget. As a result, all of ARM’s designs are energy efficient and ideal for running in smartphones, tablets and other embedded devices. But what is ARM’s secret? What is the magic ingredient that helps ARM to produce continually high performance processor designs with low power consumption?

In a nutshell, the ARM architecture. Based on RISC (Reduced Instruction Set Computing), the ARM architecture doesn’t need to carry a lot of the baggage that CISC (Complex Instruction Set Computing) processors include to perform their complex instructions. Although companies like Intel have invested heavily in the design of their processors so that today they include advanced superscalar instruction pipelines, all that logic means more transistors on the chip, and more transistors means more energy usage. The performance of an Intel i7 chip is very impressive, but here is the thing: a high-end i7 processor has a maximum TDP (Thermal Design Power) of 130 watts. The highest performance ARM-based mobile chip consumes less than four watts, oftentimes much less.

And this is why ARM is so special: it doesn’t try to create 130W processors, not even 60W or 20W. The company is only interested in designing low-power processors. Over the years, ARM has increased the performance of its processors by improving the micro-architecture design, but the target power budget has remained basically the same. In very general terms, you can break down the TDP of an ARM SoC (System on a Chip, which includes the CPU, the GPU and the MMU, etc.) as follows: two watts max budget for the multi-core CPU cluster, two watts for the GPU and maybe 0.5 watts for the MMU and the rest of the SoC. If the CPU is a multi-core design, then each core will likely use between 600 and 750 milliwatts.
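The article's rough power split can be tallied in a few lines of Python. The wattages and the per-core range are the article's approximate maximums; the dictionary keys and variable names are just labels chosen for this sketch, and the core count assumes every core runs at the quoted draw at the same time.

```python
# Rough upper-bound power budget for an ARM mobile SoC, using the article's figures.
budget_w = {
    "CPU cluster": 2.0,
    "GPU": 2.0,
    "MMU and rest of SoC": 0.5,
}
total_w = sum(budget_w.values())

# Per-core draw quoted in the article, in milliwatts.
core_mw_range = (600, 750)

# How many cores fit inside the 2 W CPU budget if all run at that draw simultaneously?
cores_fit = [int(budget_w["CPU cluster"] * 1000 // mw) for mw in core_mw_range]

i7_tdp_w = 130  # high-end i7 maximum TDP quoted in the article

print(f"SoC total: {total_w} W")                          # SoC total: 4.5 W
print(f"Cores within 2 W: {cores_fit[1]}-{cores_fit[0]}")  # Cores within 2 W: 2-3
print(f"i7 TDP / SoC total: {i7_tdp_w / total_w:.0f}x")    # i7 TDP / SoC total: 29x
```

Even at its upper bound of about 4.5 W, the whole SoC budget is roughly 1/29th of the 130 W i7 TDP quoted in the article.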

Full article here:

ARM’s secret recipe for power efficient processing

www.androidauthority.com

Stable Genius

Regular

No one here this morning

I think most are content and confident with their knowledge of the company called BrainChip and are patiently waiting for success!

A quote from the great man Warren Buffett:

“It's far better to buy a wonderful company at a fair price, than a fair company at a wonderful price.”

My opinion is the company is wonderful and the price is very fair. Can’t wait to see where we are in 2025!

A very interesting article. Not sure how relevant to BRN.

www.techradar.com

Intel is working on a new type of processor you've never heard of

Meet the versatile processing unit (VPU)

uiux

Regular

Interesting!

Intel confirms 14th Gen Meteor Lake has 'Versatile Processing Unit' for AI/Deep Learning applications - VideoCardz.com

Versatile Processing Unit for deep learning and AI inference

Intel is adding VPU to Meteor Lake and newer. A new commit to Linux VPU driver today confirms that the company has plans to introduce a new processing unit into consumer 14th Gen Core processors, a Versatile Processing Unit. The VPU...

Would like to see the stats

M_C

Founding Member

Benefits and Limitations of AI Training in an IMU

A significant challenge with incorporating AI training into IMUs is the larger footprint of these products. However, ST claims to have mitigated this challenge by incorporating a small ISPU that eliminates the need for additional external MCUs, reducing the footprint and power consumption of the module.

Incorporating AI training into the sensor:

- Increases efficiency and performance for pattern recognition and anomaly detection

- Offers an ultra-low-power programmable core for signal processing execution

- Leverages high-performance AI algorithms on the edge

Bravo

Meow Meow 🐾

Press Releases Archive

A global media and thought leadership platform that elevates voices in the news cycle. Online since 1998.

www.digitaljournal.com

uiux

Regular

Afternoon Uiux and Medina.

Cheers for posting, Medina.

Uiux, the NEURAL COMPUTE SUBSYSTEM (NCS) sounds promising.

Regards,

Esq.

Esq, we know Intel have heaps of options for neural compute, not just Loihi.

It's interesting that Intel are adding it into desktop processors, though. How does it relate to BrainChip? Well, we are targeting the edge, but personally I would be quite fond of having a neuromorphic chip in my PC.

We do have patents that cover this setup, I think (@Diogenese can probably explain better if I'm wrong):

US11157800B2 - Neural processor based accelerator system and method - Google Patents

A configurable spiking neural network based accelerator system is provided. The accelerator system may be executed on an expansion card which may be a printed circuit board. The system includes one or more application specific integrated circuits comprising at least one spiking neural processing...

patents.google.com

Either way, it's interesting and a story to watch.

krugerrands

Regular

At the beginning it was: shorts go up, our price goes down. Now we are seeing an increase in shorts and our price is also going up. Looks like they are well and truly buggered and can’t get the price down.

It is starting to look that way.

Shorts have stabilised at ~4.5% for a while now and price is creeping up, faster on days with a bit more volume.

~2.5% of them shorted ~$0.90-$1.10

It is a bit hard to get a read on what is happening (dodgy data contributes to this).

For example here is a graph for the shorts on VUL.

It is almost symmetrical in the shorts vs price action (not accounting for volume).

BRN doesn't have such a strong correlation.

Look at 17/06/2022, for example (it is hard to align the graph to a date, but it is the clear spike in volume and shorts between 14-20 Jun):

58m shares traded.

Shorts increased by 23m (1.35%).

And yet almost no movement on the price.

May they run out of road... and soon.
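The 17/06/2022 figures can be cross-checked with some quick arithmetic. This Python sketch assumes the 1.35% is measured against total shares on issue (the usual basis for short-position percentages) and takes the 58m and 23m figures as read from the graph, which the post itself warns may rest on dodgy data.

```python
# Figures quoted in the post for 17/06/2022, as read from the graph.
volume_traded = 58_000_000   # shares traded on the day
short_increase = 23_000_000  # increase in reported short positions
short_increase_pct = 1.35    # that increase as a percentage (assumed: of shares on issue)

# If 23m shares equal 1.35% of the register, the implied shares on issue are:
implied_shares_on_issue = short_increase / (short_increase_pct / 100)

# New short positions as a fraction of that day's traded volume.
short_share_of_volume = short_increase / volume_traded

print(f"Implied shares on issue: {implied_shares_on_issue / 1e6:,.0f}m")  # 1,704m
print(f"New shorts vs volume: {short_share_of_volume:.1%}")               # 39.7%
```

On those assumptions, 23m shares at 1.35% implies roughly 1.7bn shares on issue, and the new shorts are equivalent to about 40% of that day's volume.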
