Fullmoonfever


This upcoming conference could be interesting.

Wonder if the workshop on Cortex-M etc. might get us a mention. Would be nice.

Arm MCU conference by Hitex - New Electronics

www.newelectronics.co.uk

Join us on the 24th of November in Warwickshire for the 18th Arm MCU Conference by Hitex. This year we are pleased to welcome keynote speakers from Arm, Keil and Linaro. The conference is accompanied by a tabletop exhibition and training workshops to help you get the best from the day.

Hitex Arm Microcontroller Conference

The 2022 conference will bring together experts from Arm and its partners to present new and emerging technologies for Cortex-M microcontrollers, with some exciting new speakers as well.

18 years of partnership, collaboration, and innovation

Since the first Cortex-M-based microcontroller was launched in 2006, the family has gone from strength to strength to become today's standard microcontroller processor. Now, with the Armv8.x architectural revision, silicon vendors can release the next generation of Cortex-M processors with enhanced hardware extensions for today's critical applications such as the IoT, Machine Learning and Functional Safety.

On the 24th of November 2022, we are pleased to present a diverse range of experts from the UK embedded community gathered under one roof to discuss all the latest developments in microcontroller silicon, software and design techniques.

The day will include:

- Technical conference
- Industry-leading keynote speakers
- Latest technology round-up
- Exhibition
- Full conference content to take home

URL: https://www.hitexarmconference.co.uk

Training courses

In the run-up to the conference, we will be running our most popular training courses. If you are starting with Cortex-M processors, these courses are a great springboard for your first project.

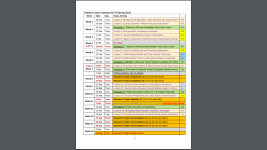

Cortex-M MCU Workshop: 22 November 2022

A one-day introduction to the Cortex-M processor family, development tools, software standards and key programming techniques.

Using a Real-Time Operating System: 23 November 2022

This course provides a complete introduction to RTOS-based development for developers currently working "bare metal". It covers RTOS concepts, an introduction to the CMSIS-RTOSv2 API, how to design application code with an RTOS, and how to adopt a layered software architecture for productivity and code reuse.

URL: https://www.hitexarmconference.co.uk/training-courses

Not forgetting the important bits...

The conference opens at 8.30 on 24th November at the Delta Hotel Warwick. With parking, breakfast, lunch and the infamous goody bag all included free of charge, make sure you secure your seat today.

www.hitexarmconference.co.uk

