What does everyone think of SiMa.ai? There have been previous discussions about whether or not they could be a potential licensee. Plus, I know that various SiMa.ai posts have been "liked" by both LDN and Anil in the past.

Race to the gen AI edge heats up as Dell invests in SiMa.ai

James Thomason (@jathomason)

April 5, 2024 1:22 PM



SiMa.ai, a chip startup developing a software-centric edge AI solution, yesterday announced a $70 million funding round that highlights the growing interest and investment in edge AI. But it’s the participation of Dell Technologies Capital, the technology titan’s strategic investment arm, that signals a major vote of confidence in SiMa.ai’s approach and a shared vision for the future of AI at the edge.

The investment stands out as the only “hard tech” deal in Dell Technologies Capital’s portfolio in the past 12 months, according to data from PitchBook. This underscores the potential of edge AI to drive new use cases for Dell’s products and unlock value for enterprises. SiMa.ai’s approach to simplifying AI deployment and management at the edge aligns closely with Dell’s product portfolio and go-to-market strategy, positioning the tech giant to capitalize on the growing demand for AI at the edge.

Edge computing is so back

Over the last decade, the use of edge computing focused primarily on industrial deployments, connecting machines and harvesting data from sensors, all of which are use cases with relatively low computational requirements.

IoT was a major driver of edge computing in retail, heavy industry, logistics and supply chain services. However, despite significant investments in IoT projects over the past decade, many enterprises struggled to derive clear business value from these initiatives.

Today, the rise of AI is breathing new life into the edge computing market. Over the past three years, IT solution providers like Dell and HPE have been quickly transforming their edge offerings, evolving from simple gateway devices to powerful, ruggedized servers capable of handling more computationally intensive workloads like AI. According to analysts at Fortune Business Insights and MarketsandMarkets, the global edge computing market is expected to at least double in the next few years.


SiMa.ai’s machine learning system-on-chip (MLSoC) technology could integrate with Dell’s edge computing offerings such as the PowerEdge XR series of ruggedized servers, enabling the company to deliver generative AI use cases to the edge.


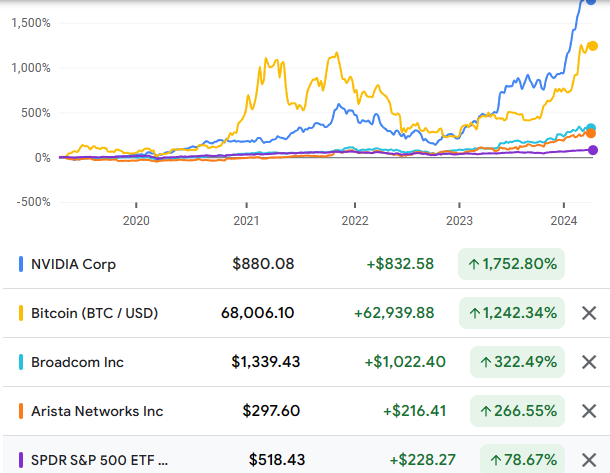

Credit: Koyfin

Generative AI at the edge

“AI — particularly the rapid rise of generative AI — is fundamentally reshaping the way that humans and machines work together,” said Krishna Rangasayee, founder and CEO at SiMa.ai. “Our customers are poised to benefit from giving sight, sound and speech to their edge devices, which is exactly what our next-generation MLSoC is designed to do.”


In the last year, we have all witnessed the emergence of generative AI in chatbots and virtual assistants, but the potential applications of generative AI at the edge are enormous. According to IDC’s Future Enterprise Resiliency and Spending Survey, 38% of enterprises expect to see improved personalization of employee experiences in areas like call centers and customer interaction through the use of AI at the edge.


For example, in retail, voice-assisted shopping experiences could revolutionize customer engagement, with AI-powered systems offering personalized product recommendations, answering queries and even guiding customers through virtual try-ons. Restaurants could leverage interactive AI-driven menus and ordering systems, enhancing the dining experience while optimizing kitchen operations based on real-time demand forecasting.

Beyond consumer-facing applications, generative AI at the edge could transform industrial operations and supply chain management. Autonomous quality control systems could identify defects and anomalies in real time, learning from past data to continuously improve their accuracy. Predictive maintenance models could analyze sensor data and generate proactive alerts, minimizing downtime and optimizing resource allocation. In logistics, AI-powered demand forecasting and route optimization could streamline operations, reducing costs and improving delivery times.

Generative AI is also poised to transform industrial operations and supply chain management across multiple fronts, according to the World Economic Forum (WEF). Large language models (LLMs) could automatically generate maintenance instructions, standard operating procedures and other textual assets, driving process automation. Additionally, deploying LLMs could enable robots and machines to comprehend and act upon voice commands without task-specific training or frequent retraining. Autonomous quality control powered by generative AI could identify defects and anomalies in real time, continuously learning from past data to improve accuracy, while predictive maintenance models analyzing sensor information could generate proactive alerts, minimizing downtime and optimizing resource allocation.

An analysis from McKinsey suggests the healthcare sector could also benefit greatly from generative AI at the edge. Real-time patient monitoring systems could analyze vital signs, generate early warning alerts, and provide personalized treatment recommendations. AI-assisted diagnostic tools could help healthcare professionals make more accurate and timely decisions, improving patient outcomes and reducing the burden on overworked medical staff.


The challenge of generative AI edge deployments

Deploying generative AI models at the edge is particularly challenging because it requires balancing the need for fast, real-time responses with the ability to leverage local data for personalization, as highlighted in a recent IEEE working paper. These models must quickly adapt to new information and user behaviors directly at the edge, where computational resources are more limited than in centralized cloud environments. This requires AI models that are not only efficient and responsive but also capable of learning and evolving from localized datasets to provide tailored services. The dual demands of speed and personalization, all within the resource-constrained context of edge computing, underscore the complexity of deploying generative AI in such settings.

SiMa.ai claims to overcome these hurdles with its machine learning system-on-chip (MLSoC), designed specifically for edge AI use cases. Unlike other solutions that often require combining a machine learning (ML) accelerator with a separate processor, SiMa.ai claims MLSoC integrates everything needed for edge AI into a single chip. This includes specialized processors for computer vision and machine learning, a high-performance ARM processor and efficient memory and interfaces. The result is a compact, power-efficient solution that simplifies the deployment of AI at the edge. This combination of features could make SiMa.ai’s platform attractive for infrastructure providers like Dell looking to bring powerful AI capabilities to edge devices.

The race to the AI edge is on

As enterprises increasingly seek to harness the power of AI at the edge, Dell’s strategic investment in SiMa.ai suggests that edge computing may have finally found its killer use case in AI. With SiMa.ai’s platform and Dell’s edge computing strategy aligned, the future of edge AI looks brighter than ever, promising to transform the way businesses operate and interact with their customers.

The market has already picked who it thinks the winners in AI will be, with Dell stock up 70.83% YTD, HPE up 7.08% and Cisco down 2.49%. Meanwhile, Supermicro has seen its stock soar a staggering 232% YTD, largely due to expectations of its increased data center sales.

However, as Dell’s investment in SiMa.ai suggests, the edge could be the next critical lap in the race.

Of course, this is just the beginning. Over the next few years, we can expect to see a flurry of strategic investments and acquisitions as major tech companies race to stake their claim in the edge AI market. The fight to bring powerful AI capabilities to the enterprise edge could put a strain on existing partnerships, reminiscent of the virtualization era when we saw the disruptive VCE alliance between VMware, Cisco and EMC, which ultimately sparked the enormous merger between Dell and EMC.