No matter what… yes, competition stimulates business… and this way the technology also finds broader application and acceptance. But it doesn’t make me happy if the competitors manage to offer market-ready products to their customers faster while we’re still talking about test phases, studies, and demos and trials…it’s almost midnight…we’re on the brink…


BRN Discussion Ongoing

- Thread starter TechGirl


HopalongPetrovski

I'm Spartacus!

It's that good old .1975 bot back in business. May work in our favour today on what is predicted to be a bloody Monday.

Every one of these trades is exactly 15 seconds apart. Nothing to see here. Carry on.

View attachment 89277

At least the condition of the perpetrator is clear. They are a complete CXXT.


Bravo

Meow Meow 🐾

My Question:

Can you please describe the significance of this research in layman's terms and explain what this means for companies like BrainChip?

ChatGPT's Response:

Sure — here’s a layman’s summary of the significance of this research:

What It’s About (Simple Terms)


Scientists and engineers often need to predict and control complex, unpredictable systems — like how a drone balances itself in the wind, or how a robot adjusts to tricky terrain. One powerful mathematical tool for doing this is called Koopman operator theory, which helps them track and forecast how these dynamic systems evolve over time.

Problem? The math and AI models normally used to do this — like traditional neural networks — are heavy, power-hungry, and slow, especially when you want to run them in real time on small devices (like drones, robots, or cars).
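To make the Koopman idea a bit more concrete, here is a minimal sketch of dynamic mode decomposition (DMD), the simplest data-driven way to approximate a Koopman operator. This is not the method from the research being discussed (which uses spiking neural networks); the toy system, the function name `fit_linear_operator`, and all the numbers are assumptions picked purely for illustration.

```python
import numpy as np

def fit_linear_operator(snapshots: np.ndarray) -> np.ndarray:
    """Least-squares fit of K such that x[t+1] is approximately K @ x[t].

    snapshots has shape (state_dim, n_steps); columns are consecutive states.
    """
    X = snapshots[:, :-1]   # states at time t
    Y = snapshots[:, 1:]    # states at time t+1
    return Y @ np.linalg.pinv(X)

# Toy system (assumed for illustration): a slowly decaying rotation.
theta, decay = 0.1, 0.99
A_true = decay * np.array([[np.cos(theta), -np.sin(theta)],
                           [np.sin(theta),  np.cos(theta)]])

# Record a short trajectory, as a sensor log would.
n_steps = 50
states = np.empty((2, n_steps))
states[:, 0] = [1.0, 0.0]
for t in range(1, n_steps):
    states[:, t] = A_true @ states[:, t - 1]

# Learn the operator from data alone, then forecast 10 steps ahead.
K = fit_linear_operator(states)
forecast = np.linalg.matrix_power(K, 10) @ states[:, -1]
expected = np.linalg.matrix_power(A_true, 10) @ states[:, -1]
```

For a system that is already linear, like this one, the fit recovers the dynamics directly. For nonlinear systems the usual trick is to first map the state through a set of observables (often learned by a neural network) and fit the linear operator in that lifted space.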

What They Did


This study shows that spiking neural networks (SNNs) — the kind used in neuromorphic chips that mimic how real brains work — can do the same job with way less power and complexity.

Specifically:

- They needed 43× fewer multiplications and 4× fewer additions than traditional AI models.

- They only needed 20% of the input data to make predictions.

- The system could still analyze, learn, and predict the behavior of complex systems — just much more efficiently.
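One common intuition for where savings like these come from (not necessarily the accounting used in the study) is that spikes are binary: a layer fed with 0/1 spikes never needs to multiply, it only adds up the weights of the inputs that fired. The sketch below compares the two on a made-up layer; the layer size and the 10% firing rate are assumptions, not figures from the research.

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, n_out = 256, 64
weights = rng.normal(size=(n_out, n_in))

# Binary spike vector: roughly 10% of inputs fire (assumed sparsity level).
spikes = rng.random(n_in) < 0.1

# Conventional dense layer: one multiply-accumulate per weight.
dense_out = weights @ spikes.astype(float)
dense_multiplies = n_out * n_in

# Spike-driven layer: add up the weight columns of the inputs that fired.
spike_out = weights[:, spikes].sum(axis=1)
spike_multiplies = 0
# At most one accumulate per active input per output neuron.
spike_additions = n_out * int(spikes.sum())
```

Both paths give the same output, but the spike-driven one does no multiplications at all, and the number of additions shrinks with the fraction of inputs that are silent.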

Why It Matters


- Smarter, Greener AI at the Edge: This could make it possible to run advanced predictive models directly on low-power edge devices (like autonomous vehicles, wearables, or even satellites) without needing a big server or draining batteries.

- Huge Power Savings for Real-Time Systems: Drones that stabilize themselves, robots that adapt to the environment, or cars that need to make split-second decisions could all benefit from this kind of low-energy, high-speed computing.

- Pushes Neuromorphic AI Forward: It proves that brain-inspired computing isn’t just cool; it’s practical, and in many cases better than traditional AI for real-world applications.

What This Means for Companies Like BrainChip


- Validation: It reinforces the value of spiking neural networks and neuromorphic hardware like BrainChip’s Akida.

- Opportunity: More demand for these kinds of ultra-efficient chips in robotics, autonomous vehicles, aerospace, and IoT.

- Differentiation: Shows how neuromorphic tech can do things that even large neural networks struggle with — in a faster, leaner, and more power-conscious way.

What Brainchip is selling:

First we have silicon and/or silicon IP for the following:

Akida 1 SoC/IP

Akida 1500 SoC/IP

Pico IP

Akida/TENNs 2 FPGA/IP Developer Platform access

Akida GenAI FPGA in Development

Akida 3 SoC in development

TENNs is a special case: it is part of the Akida 2 SoC, but it is also available as an algorithm, which seems to be usable on a CPU or GPU?

TENNs Bing Videos

(TENNs is an) algorithm (not only to run in our hardware but a very efficient edge-based algorithm that allows you to do LLMs at the edge). SSM ~ S4/S5 (Tod Vierra 20240105)

Then we have the "off-the-shelf" models and the POA advanced TENNs models to be run on the on-line Akida 2 FPGA.

Ready to use:

AkidaNet Object Detection/Recognition

AkidaNet Regression (Facial Age prediction)

AkidaNet/KWS

AkidaNet/Point Cloud Classification

AkidaNet/TENN Gesture Recognition (DVS)

AkidaNet/TENN Eye Tracking (90% activation sparsity)

ADVANCED TENNS MODELS – Available to run on Akida 2 FPGA Developer Platform – By Request

AkidaNet/TENN Audio Denoising

AkidaNet/TENN Automatic Speech Recognition (ASR)

AkidaNet/TENN LLM

The advanced TENNs models can only be tested on the on-line Akida 2 FPGA Developer Platform by prior arrangement. I guess this is to control access so the system does not get overloaded.

The use of TENNs with LLMs and with ASR opens the door for spoken LLM queries at the edge.


Hi Bravo,

View attachment 89278


I see our friend Dylan Muir from Synsense is in there pitching.

That's me

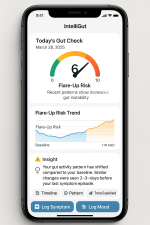

Gas Sensors in the Toilet? — Intelligut.ai

Why the Future of Gut Health Is Passive and Personal

www.intelligut.ai

I am currently lobbying the wife to let me put in a camera too, combined with the gas sensors I would get a lot more useful data.

It does not (yet) use BrainChip Akida, but that is on the roadmap: I want to process the data on the edge in the future so that users' embarrassing toilet data is not hackable. But for now I am collecting data, analysing it, and building algorithms on top of models to measure & monitor gut health.

For those wondering why I started this project, I have diverticulitis (see attached infographic for those who don't know). I'm 54 and that condition started 4 years ago for me; it is really really really unpleasant - so I want an early warning. I want AI to predict that a flare-up is coming so I can start mitigation procedures (no solids, liquids only) and avoid the worst of its symptoms. I think of how my business monitors motors/engines with vibration sensors & predicts future maintenance activities - I want the same for my colon.

Still very early stages as I am currently testing the market; would people want to buy such a product & can I raise $'s from investors to fund the many clinical trials I need to run?

It's actually a really interesting project for me as it is pushing me hard. I know nothing about biology & I am leveraging AI a lot; it is incredibly impressive (I am an engineer).

Right now I am looking for:

- gut health experts: gastroenterologists looking to improve gut health with AI, I need some on my advisory board and to help with clinical trials

- potential investors: rich people & venture capitalists looking to invest in healthtech & AI

- people keen to put a prototype sensor in their toilet and help me collect more data from as many individuals as possible

So if you lot know of any and think they might be interested in this project, tell them about it.

Attachments

What Brainchip is selling:


Hmm… selling? At the moment we are more in borrowing mode, I would say… I would be happy to see us sell that stuff.

Thanks for sharing Stable Genius!

I'd be very appreciative of any support, even if you just register for our newsletter - that helps me convince investors that there is a big market for this product!

Ketauk , if you’re looking for -

Right now I am looking for:

- gut health experts: gastroenterologists looking to improve gut health with AI, I need some on my advisory board and to help with clinical trials

Maybe reach out to Professor Barry Marshall, ex-BrainChip Advisory Board member. He knows a thing or two about gut health.

smoothsailing18

Regular

What a great project..... How do I register for your newsletter?

View attachment 89287

Thanks for the tip - I will try to connect with him on Linkedin - https://www.linkedin.com/in/barry-marshall-3241b756/

What a great project..... How do I register for your newsletter?

Newsletter — Intelligut.ai

Or if you want to be part of the future beta program and get a sensor in your toilet - https://www.intelligut.ai/sign-up-for-beta-program

DingoBorat

Slim

Only a couple of minutes to, by my reckoning.

No matter what… yes, competition stimulates business… and this way the technology also finds broader application and acceptance. But it doesn’t make me happy if the competitors manage to offer market-ready products to their customers faster while we’re still talking about test phases, studies, and demos and trials…it’s almost midnight…we’re on the brink…

Pocketchange321

Member

What the actual fuck, that's so blatant, how is this not immediately punished? Like I would think they had software to track that easily? Whaaaat?

Every one of these trades is exactly 15 seconds apart. Nothing to see here. Carry on.

View attachment 89277

You must be new to BRN

Baneino

Regular

That's exactly what you see when the market is not allowed to decide anything. Micro-trades every 15 seconds, always 1 share, always the same price - that's not an investor, that's a bot making its rounds.

Every one of these trades is exactly 15 seconds apart. Nothing to see here. Carry on.

View attachment 89277

Such behavior is often seen when either:

- the order book is tested for liquidity

- artificial volume is to be generated (keyword: wash trading)

- the price is to be kept within a narrow range in a controlled manner

Is this illegal? Not necessarily. Is it transparent? Not necessarily.

But it is a clear indication that the 'free market' does not rule here, but that an algorithm is "playing for time."

Such patterns are nothing new at BRN - especially in phases in which the news situation is calm, but movement is being prepared under the surface. You don't have to be a tinfoil-hat wearer to recognize this: It's not a coincidence, it's a system.

As always, only the next major movement will decide whether this is good or bad. Until then, don't get nervous - just recognize the game.
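For anyone who wants to check a trade tape for this kind of pattern themselves, here is a rough sketch. It only flags the three symptoms described above (regular gaps, identical price, identical size) and says nothing about intent or legality. The function name, the thresholds, the date, and both sample tapes are made up for illustration; only the 15-second gap, the 1-share size, and the .1975 price come from the posts above.

```python
from datetime import datetime, timedelta
from statistics import pstdev

def looks_like_fixed_interval_bot(trades, max_jitter_s=0.5, min_trades=5):
    """trades: list of (timestamp, price, volume) tuples, sorted by time."""
    if len(trades) < min_trades:
        return False
    times = [t for t, _, _ in trades]
    gaps = [(b - a).total_seconds() for a, b in zip(times, times[1:])]
    same_price = len({p for _, p, _ in trades}) == 1
    same_volume = len({v for _, _, v in trades}) == 1
    # Near-zero spread in the gaps means the trades arrive like clockwork.
    regular = pstdev(gaps) <= max_jitter_s
    return regular and same_price and same_volume

# Hypothetical tape resembling the screenshot: 1 share at 0.1975 every 15 s.
start = datetime(2025, 1, 6, 10, 0, 0)  # placeholder date
bot_tape = [(start + timedelta(seconds=15 * i), 0.1975, 1) for i in range(10)]

# Hypothetical "organic" tape with irregular gaps, prices, and sizes.
organic_offsets = [0, 3, 41, 44, 120, 121, 300]
organic_tape = [
    (start + timedelta(seconds=s), 0.1975 + 0.0005 * (i % 3), 100 * (i + 1))
    for i, s in enumerate(organic_offsets)
]

print(looks_like_fixed_interval_bot(bot_tape))
print(looks_like_fixed_interval_bot(organic_tape))
```

The jitter threshold matters: real feeds have timestamp noise, so an exact-equality check on the gaps would miss a bot that is a few milliseconds off.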

The Pope

Regular

Anil mankar likes this.

www.linkedin.com


Ever seen a 'Bread Sandwich'? It’s exactly what it sounds like, just bread. No filling. And just like some bids, it ticks the boxes but leaves you wondering… where’s the flavour? More often than not… | Joanne Tryner | 117 comments


Pom down under

Top 20

TENNs is a NN model, so it can be used on any NN-capable processor.

In Akida 2, the neuromorphic engines (NE) are arranged in groups of 4 to form nodes. One of the NEs is used for the TENNs model.

TENNs can operate in recurrence mode or in convolution mode.

TENNs performs inference in recurrence mode. It can be trained in convolution mode.
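The recurrence/convolution duality can be illustrated with a generic linear state-space layer of the S4 family mentioned earlier in the thread. This is only a sketch of the general principle, not BrainChip's TENNs implementation; the matrices `A`, `B`, `C`, the sizes, and the function names are all assumptions made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
state_dim, seq_len = 4, 32

# Hypothetical stable linear layer: h[t] = A h[t-1] + B u[t], y[t] = C h[t].
A = np.diag(rng.uniform(0.5, 0.95, size=state_dim))
B = rng.normal(size=state_dim)
C = rng.normal(size=state_dim)
u = rng.normal(size=seq_len)

def run_recurrent(A, B, C, u):
    """Step through the sequence one sample at a time (streaming inference)."""
    h = np.zeros(A.shape[0])
    y = np.empty(len(u))
    for t, u_t in enumerate(u):
        h = A @ h + B * u_t
        y[t] = C @ h
    return y

def run_convolutional(A, B, C, u):
    """Process the whole sequence at once with the kernel k[j] = C A^j B."""
    kernel = np.empty(len(u))
    A_power_B = B.copy()
    for j in range(len(u)):
        kernel[j] = C @ A_power_B
        A_power_B = A @ A_power_B
    # Causal convolution: y[t] = sum over j of k[j] * u[t - j]
    return np.convolve(u, kernel)[: len(u)]

y_rec = run_recurrent(A, B, C, u)
y_conv = run_convolutional(A, B, C, u)
```

The two functions produce the same outputs. The convolutional form sees the whole sequence at once, which parallelises well for training, while the recurrent form only needs the current state and the newest sample, which suits low-memory streaming inference at the edge.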

Had a quick scan couldn't see this posted as yet?

www.linkedin.com


Why On-Device AI Matters? | Sk Afridi Rahaman

Why On-Device AI Matters? Over the past year, BrainChip has made major strides—securing new patents and expanding its suite of Akida neuromorphic processors. Their partnerships with leading organizations like the Air Force Research Labs, Frontgrade Gaisler, and others signal strong market...
