Can we get Elon to block @Bravo from seeing this?

BRN Discussion Ongoing

- Thread starter TechGirl

- Start date

If there are no peaks, what's the point in peeking?

Yeah, but no more free peaks under the shirt without at least buying us dinner!

MadMayHam | 合氣道

Regular

"We were told BRN pulled out of producing an Akida 2 SoC ..."

Apologies, I've not been here for a while... can anyone tell me where this statement is from?

-MMH

We were told BRN pulled out of producing an Akida 2 SoC because we didn't want to step on "someone's" toes.

Now we are doing a pas de deux with Nviso.

Out of 17 business-related items on Nviso's News page, 4 relate to BRN, starting from 19/4/2022:

https://nviso.ai/news/

NVISO and BrainChip partner on Human Behavioral Analytics in automotive and edge AI devices

April 19, 2022

Lausanne, Switzerland & Laguna Hills, Calif. – April 19, 2022 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of neuromorphic AI

NVISO advances its Human Behaviour AI SDK for neuromorphic computing using the BrainChip Akida platform

May 11, 2022

Lausanne, Switzerland – May 11, 2022 – nViso SA (NVISO), the leading Human Behavioural Analytics AI company, today announced at the AI Expo Japan new capabilities

NVISO announces it has reached a key interoperability milestone with BrainChip Akida Neuromorphic IP

July 18, 2022

To learn more about the BrainChip Akida interoperability results please click here to register for the results presentation on 20th July 2022 at 7pm AEST. NVISO has

NVISO’s latest Neuro SDK to be demonstrated running on the BrainChip Akida fully digital neuromorphic processor platform at CES 2023

January 2, 2023

Following the recent NVISO Neuro SDK milestone release, including two new high performance AI Apps from its Human Behavior AI App catalogue, Gaze and Action

The key interoperability milestone was announced 3 months after the Nviso/Brainchip partnership was announced. That is an indication that the technologies fit like a hand in a glove.

There are several other Nviso partnerships mentioned:

Panasonic, Interior Monitoring Systems, Siemens, Unith, Privately SA.

I'm guessing Nviso is handling these partnerships with kid gloves.

Nviso lists Healthcare, Consumer Robots, Automotive Interiors, and Gaming as its fields of interest.

Nviso's patent relates to a software-implemented CNN method, so Akida would have been a revelation.

US11048921B2 Image processing system for extracting a behavioral profile from images of an individual specific to an event 20180509

View attachment 61211

So did BRN hold off on Akida2 SoC at Nviso's behest? - Highly improbable because Nviso is software-based.

So who(m?)?

Frangipani

Top 20

AKD 1000 AKD 1500 AKD 2000 and moving ever so closer...AKD 3000...and 3 of which are 100% available now and setting the benchmark

which the company believe their IP will end up in everything, everywhere...(that I'd suggest is the target, NOT a proven fact to date).

Hi TECH,

just an attempt to sort out the terminology…

The following is how I understand BrainChip’s nomenclature, but I could be wrong, so everybody please feel free to brainstorm and chip in…

In early 2022, shortly after Sean Hehir had joined BrainChip as CEO, “AKD1000” was still used as an umbrella term to describe everything our company had for sale at the time (chip, IP, PCIe board), as evidenced by the following investor conference presentation slides:

View attachment 61365

These days, however, a distinction appears to be made between the generational iterations of the Akida processor technology platform (categorised as akida, akida 2.0…) as opposed to the physical reference chips (so far AKD1000 and AKD1500).

The AKD1000 and AKD1500 SoCs are both silicon implementations of the Akida technology embodied in BrainChip’s 1st Generation Edge AI neuromorphic processor platform akida (technically speaking akida 1.0).

AKD1000 was implemented with TSMC at 28nm, whereas AKD1500 was taped out on GlobalFoundries’ MCU-friendly 22nm fully depleted silicon-on-insulator (FD-SOI) process, aka GF’s 22FDX technology. As reference chips, they were primarily meant to target prospective IP licensees (as a proof of concept). At the same time, the AKD1000 (on a PCIe board or inside a Dev Kit) benefitted individual developers (professional hardware engineers in companies or academic settings as well as advanced hobbyists) who were not interested in mass production of edge devices and the signing of an IP licence, but may have only required a single PCIe Board or Dev Kit for their projects or research (take note that it says on the BrainChip shop website in bold capital letters that development kits are not intended to be used for production purposes). Meanwhile, AKD1000 chips have also been integrated into the VVDN Edge AI Boxes, and the Unigen Cupcake Edge AI Server will soon be offered with a new configuration based on the AKD1500 (?) as an AI option.

Akida 2.0, the neural processing system’s enhanced 2nd Generation, was announced and introduced last year, but purely as an IP offering, productised in three different variations: akida-E, akida-S and akida-P, depending on where in the Edge AI spectrum (sensor edge < server edge) its prowess is required.

AKD2000, however, doesn’t exist - at least not yet.

The way I understand it, AKD2000 would be the name of BrainChip’s (hypothetical) reference chip based on Akida Gen 2, which may or may not materialise.

Let’s recall what was said earlier this year:

In an interview with Jim McGregor from TIRIAS Research during CES 2024 (January 9-12), Todd Vierra replied the following to his interview partner’s comment “And correct me if I’m wrong, but this is the Akida 2?”

Todd: “This is actually all ran [sic] on Akida 1 hardware. Akida 2, erm - we are in the process of taping out and we’ll get that silicon back a little bit later, but these are all just Gen 1...” (from 9:26 min). His statement about an imminent tapeout seemed to confirm what (according to FF) Sean Hehir had told select shareholders in the November 2023 Sydney “secret meeting”.

Surprisingly, a mere seven weeks later, during the Virtual Investor Roadshow (February 27), neither Sean Hehir nor Tony Lewis mentioned anything at all about a tape-out in progress, but instead argued a second generation reference chip as proof of concept was unnecessary, while at the same time not totally excluding a potential future tape-out complementing their core IP business. However, our CTO made it clear that it is definitely not their intention to manufacture chips on a large scale:

From 43:17

Roger Manning’s question: “Given BrainChip’s business model is to largely sell its akida IP, will it be necessary to prove each new version of akida in silicon?”

Tony: “So I think the big question was, will this event-based paradigm yield, erm can it be done and will it yield some benefits to customers, and I think we achieved that by taping out our earlier generation of products, and so we’ve already achieved that. And it’s my belief that there is only marginal benefit in taping out the next generation, we already proved the main points of it. And clearly we don’t want to start to manufacture chips on a large scale. We’d be competing with our customers and that would really break our business model right now.”

Sean: “Yeah, and to be clear, a lot of work has gone on with our ability to simulate workloads in Generation 2 as well. As Tony said, we certainly have reference chips in Generation 1, and the typical engagement course that we work with IP license prospects is we allow them to run models on there and/or simulate them in our simulation tools that we have for Generation 2, so Roger, I would say stay tuned, we may or may not, erm, but right now, there is no need for us to do that.”

Now the way I understood the “We’d be competing with our customers” comment is not for fear of treading on their customers’ or a specific customer’s toes (as other TSE users have interpreted it), but because they would shoot themselves in the foot by doing that: after all, it would be less profitable for our company when customers could just buy those chips off the shelves to utilise them in their in-house development (and hence save a lot of money, as there won’t be any follow-up costs for them) rather than having to pay an initial IP license fee and future royalties.

Does my interpretation make sense or am I overlooking something here?

And as for the next iteration of the Akida processor family, we don’t even know yet whether it will be called akida 3.0 or akida 2.X…

From 47:41 min:

Sean Hehir: “… but round Generation 3, if you will: You know, I mentioned earlier in my slide that when I talked about our product planning and our execution cycle, if you will, you could see we are always planning on this product and we are always looking for improvements on our IP offering right there. Now whether we call it formally “Generation 3” or “2 something x” - but yes, we are in the middle of a planning cycle right now to make some changes and we’ll make announcements over time.”

So what we can say with confidence (provided we believe our CEO’s words) is that the advent of the Akida neural processor family’s 3rd generation is approaching… Whether there will ever be an AKD3000, though, is uncertain.

Regards

Frangipani

P.S.: I still can’t get my head around Todd Vierra’s statement, though. Would they really have backed out at the last minute, e.g. due to financial constraints?

Or was he possibly referring to a tapeout not of AKD2000 as a reference chip, but to a tape-out of a SoC by a specific customer that includes Akida 2.0 IP, along the lines of what DingoBorat said?

To me, it's saying there's an in the flesh, integrated circuit, customer custom SOC, with our IP in it...

But then again, would he really say “…we’ll get that silicon back a little later”?

I suppose only if it was a joint development, such as possibly one with Socionext or Tata Elxsi (which could explain the different colour of the mysterious Custom SoC on that presentation slide)? As otherwise, wouldn’t such a SoC be taped out by the customer itself rather than by / in collaboration with BrainChip? I am a bit confused here.

It seems too early as a mere placeholder for the planned integration of Akida IP into the Frontgrade Gaisler SoC, about which ESA’s Laurent Hili said “We aim to tape out ideally before the end of the year, beginning of next year” (from 47:25 min, shortly before the end of the mid-March BrainChip podcast Episode 31). After all, they could have labelled it “prospective Customer Custom SoC”, but the way it is presented on the slide, I agree with DingoBorat that it does appear to be an already existing physical implementation. Mmmmh…


Pom down under

Top 20

Nothing to see here as they have similar events which are normally just gaming.

View attachment 61438

Nintendo Live 2024 Sydney

Fans are invited to register for a chance to join us at the International Convention Centre Sydney on Saturday 31st August and Sunday 1st September for in-person Nintendo fun!

www.nintendo.com.au

I wonder why it’s in Australia ....

From their newsletter (small part of it) sent to my email:

April has been buzzing with innovation and discovery here at BrainChip. We recently spent a whirlwind week at Embedded World 2024, where the team demonstrated Akida in real-life use cases and engaged in extensive conversations around AI, Edge processing, and Akida neuromorphic technology.

And it was about gen2 if I am correct so they must have some physical Gen2 chips around to build the models.

You can't really simulate using somebody else's hardware, can you? Well, I guess you can, but it's not very professional and won't impress anybody.

Hi Frangi,

I will try to answer you tomorrow to the best of my understanding....regards Chris (Tech)

Frangipani

Top 20

I assume Akida 2.0 was merely presented in simulation and possibly per brochure.

According to the BrainChip website (https://brainchip.com/embeddedworld/), the demo partnerships at embedded world 2024 were with NVISO and VVDN and thus based on Gen 1:

The BrainChip presentation area within the tinyML Foundation pavilion wasn’t exactly gigantic - strangely, we can’t even spot one of the VVDN Edge AI Boxes in the pictures…

A tapeout is an essential part of the IP, whether or not we were to progress to SoC.

Does my interpretation make sense or am I overlooking something here?

And as for the next iteration of the Akida processor family, we don’t even know, yet, whether it will be called akida 3.0 or akida 2.X…

From 47:41 min:

Sean Hehir: “… but round Generation 3, if you will: You know, I mentioned earlier in my slide that when I talked about our product planning and our execution cycle, if you will, you could see we are always planning on this product and we are always looking for improvements on our IP offering right there. Now whether we call it formally “Generation 3” or “2 something x” - but yes, we are in the middle of a planning cycle right now to make some changes and we’ll make announcements over time.”

So what we can say with confidence (provided we believe our CEO’s words) is that the advent of the Akida neural processor family’s 3rd generation is approaching… Whether there will ever be an AKD3000, though, is uncertain.

Regards

Frangipani

P.S.: I still can’t get my head around Todd Vierra’s statement, though. Would they really have backed out at the last minute, eg due to financial constraints?

Or was he possibly referring not to a tape-out of AKD2000 as a reference chip, but to a tape-out of a SoC by a specific customer that includes Akida 2.0 IP, along the lines of what DingoBorat said?

But then again, would he really say “…we’ll get that silicon back a little later”?

I suppose only if it was a joint development, such as possibly one with Socionext or Tata Elxsi (which could explain the different colour of the mysterious Custom SoC on that presentation slide)? As otherwise, wouldn’t such a SoC be taped out by the customer itself rather than by / in collaboration with BrainChip? I am a bit confused here.

It seems too early as a mere placeholder for the planned integration of Akida IP into the Frontgrade Gaisler SoC, about which ESA’s Laurent Hili said “We aim to tape out ideally before the end of the year, beginning of next year” (from 47:25 min, shortly before the end of the mid-March BrainChip podcast Episode 31). After all, they could have labelled it “prospective Customer Custom SoC”, but the way it is presented on the slide, I agree with DingoBorat that it does appear to be an already existing physical implementation, indeed. Mmmmh…

Frangipani

Top 20

View attachment 61438

Nintendo Live 2024 Sydney

Fans are invited to register for a chance to join us at the International Convention Centre Sydney on Saturday 31st August and Sunday 1st September for in-person Nintendo fun!

www.nintendo.com.au

I wonder why it's in Australia ....

I wouldn’t read anything into it.

Looks like they moved it to Australia, after having had to cancel Nintendo Live 2024 Tokyo (planned for January 2024) at short notice for safety fears…

Suspect behind Nintendo Live Tokyo 2024 threats arrested

nintendoeverything.com

“April 3: According to the Kyoto Shimbun, police have made an arrest followings [sic] threats tied to the cancelled Nintendo Live Tokyo 2024 event.

Nintendo Live 2024 Tokyo, which would have taken place this past January, was cancelled at the start of December. The suspect made “persistent threats” against employees as well as participants ahead of the Splatoon Koshien 2023 finals. Nintendo Live Tokyo 2024 was cancelled as a precautionary measure.

The man who has been arrested was not named. However, it’s been reported that he’s in his twenties and is a local civil servant from the Ibaraki Prefecture.

The suspect was arrested after sending death threats through Nintendo’s enquiry form on its website. Because of repeated threats sent through that form, he’s been accused of obstructing the company’s business. As of now it’s unclear what his motives were.

April 24: Kyoto Shimbun has followed up with another report and the 27 year-old man has been formally charged. He sent messages to Nintendo between August 22 and November 29 of last year with 39 threats. The man said things such as “I’m going to make you regret releasing such a crappy game to the world” and threatened to “kill everyone involved.” Messages also warned Nintendo about events with fans.”

chapman89

Founding Member

Can somebody help me try to understand something please.

For those that haven’t seen the post by Markus Schafer from Mercedes which was posted an hour or so ago, here it is below, followed by a screenshot of my comment and the reply to me from the Advanced UX Director of Mercedes.

“Hello Beijing and Auto China 2024! Where better to present our Concept CLA Class to Chinese audiences for the first time.

It was a huge pleasure to see it receive such a positive reaction in the world’s biggest single automotive market. This close-to-production insight into the vehicle family based on our Mercedes-Benz Modular Architecture (MMA) offers digital-savvy Chinese customers a feel for the hyper-personalised user experience based on our Mercedes-Benz Operating System (MB.OS).

Our software experts in China have been closely involved in the development of MB.OS from the very start and pay close attention to Chinese feedback. That’s why we brought with us to Auto China demos of some great new digital features and functions of the user interface in the Concept CLA Class.

We want MB.OS to provide our customers in China with an intelligent digital experience based on their own preferences as well as their favourite apps.

And of course, automated driving is another important domain, where the specifics of Chinese roads, traffic and driving styles have a clear impact on how we develop and adapt assistance systems such as our Automatic Lane Change (ALC) function for customers in China.

Furthermore, our MMA vehicles will be equipped with a comprehensive sensor set that feeds our neural network, which enables continuous learning capabilities. This will result in a steady stream of new functions, all provided over-the-air of course. One example will be our point-to-point urban navigation feature. This system can handle challenging urban driving scenarios and supports a fast city rollout due to its “map-less” nature.

But that’s not all I presented in Beijing. Stay “tuned” for more soon…”

So below here is my comment on the post, followed by the reply to me by the Advanced UX Director at Mercedes, and take note, the post itself & my comment didn’t say anything about neuromorphic, so my question is, why the reply from the gentleman at Mercedes with a link to the “In The Loop” post over a year ago??

Frank Zappa

Regular

Quarterly Report out tomorrow? I hope so. With everyone working so hard for success, one would feel that we all deserve something in it to quietly celebrate. If there is good news in it, I will also be happy for a Monday or Tuesday release.

A tapeout is an essential part of the IP, whether or not we were to progress to SoC.

Damo4

Regular

View attachment 61539

Another stellar post Chapman!

All but confirms it.

For those wanting to save a couple of seconds, see the below link for what Zane Amiralis posted:

https://www.linkedin.com/posts/mark...ity-7021055729805467648-WQHK?utm_source=share

Evermont

Stealth Mode

Hi @chapman89,

Nothing I have read previously from MB suggests we are integrated within the MMA or Concept CLA at this time.

MB have referenced 'neural' previously in the context of ChatGPT for Hey Mercedes and the personality traits for the MBUX Virtual Assistant.

Still doesn't explain why you were pointed to an article which is solely neuromorphic related.

Cheers, I got nothing.

Damo4

Regular

Interesting, I've just had a look at your post on LinkedIn and the link that was provided isn't there, can you still see it Jesse, perhaps it's only available to you as it's a reply to you or it's been taken down?

Still there for me.

Oh brilliant, it's obviously me then, thanks for confirming, I deleted my post, I wasn't signed in that's why I couldn't see it, pillock I am!

View attachment 61541

From the Edge Impulse April newsletter

...

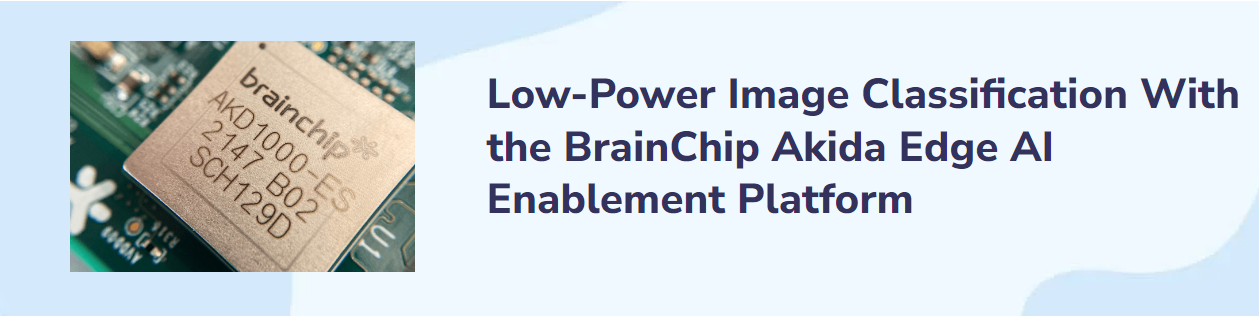

Webinar: From Prototype to Finished Product with BrainChip

Join Edge Impulse on May 6th for a demonstration of using the BrainChip Akida™ neuromorphic processor to classify images for edge AI workloads. In this webinar, Low-Power Image Classification With the BrainChip Akida Edge AI Enablement Platform, we will cover X-ray image classification running on an edge device. You'll also learn machine learning model creation, deployment to the BrainChip Akida Enablement Platform, and the path from prototype to product.

https://events.edgeimpulse.com/brai...693&utm_content=304317693&utm_source=hs_email

...

New from the Edge Impulse Blog

Tech for Good: Using AI to Track Rhinos in Africa

Making Strides in Personalized Healthcare

Classifying Medical Imaging On-Device with Edge Impulse and BrainChip

Picking the Brain of AI: BrainChip on the State of Neuromorphic Processing

ML on the Edge with Zach Shelby Ep. 7 — EE Time's Sally Ward-Foxton on the Evolution of AI

Pump Up the Predictions: Prototyping Predictive Maintenance with RASynBoard

Edge Impulse Insights newsletter@edgeimpulse.com

26/4/24 01:13

Launching May 6, 2024

Join Edge Impulse as we demonstrate using the BrainChip Akida™ neuromorphic processor to classify images for Edge AI workloads. This webinar will dive into X-ray image classification running on an Edge device. We’ll cover machine learning model creation, deployment to the BrainChip Akida Enablement Platform, and the path from prototype to product.

Renesas: You make the Akida2000 chip.

BRN: No! You make the Akida2000 chip.

Renesas: No, You make the Akida2000 chip.

BRN: No! You make the Akida2000 chip.

Renesas: No, You make the Akida2000 chip.

BRN: But you told us what changes you wanted in the chip!

Renesas: We never said we'd manufacture the thing.

BRN: You make the Akida2000 chip.

Renesas: We're selling our own.

BRN: You make the Akida2000 chip.

Renesas: No, You make the Akida2000 chip.

BRN: But we held off because we thought you were. We didn't want to tread on your toes.

Renesas: No. We have to protect our shareholders.

BRN: Who?

Renesas: Good bye.

Morning Chippers,

Apparently a new release on Twitter.

brainchip.com

A Lightweight Spatiotemporal Network for Online Eye Tracking

Learn about a lightweight spatiotemporal network by BrainChip for online eye-tracking—efficient, adaptive AI optimized for edge deployment.

Regards,

Esq.
