I’d suggest

Armakida.

The term works especially well in Germanic languages such as German, Dutch, Danish or Norwegian. It combines not only ARM and Akida, but also ARMada with an integrated KI (= Künstliche Intelligenz / kunstmatige intelligentie / kunstig intelligens, the equivalent of AI).

Some interesting history trivia to keep those of you Down Under awake for the ARM tech talk…

In 1588, the so-called Invincible Armada - the imposing Spanish war fleet - was famously defeated by the English naval forces. They were helped by Dutch vessels blocking the Flemish ports where contingents of Spanish infantry under the Duke of Parma were gathered at the time, by the incompetence of the Spanish commanders (some of whom had no naval experience at all) and by unusually bad weather. The weather favoured the English, who in turn interpreted the heavy storms that ravaged the Catholic Spanish Crown’s Armada as divine intervention and thus as God’s approval of the Protestant cause.

Much less known than the nation-defining David vs Goliath myth (that underdog England, by the grace of God, triumphantly sank the mighty Spanish Armada and that Spain never recovered from that fiasco) is the fact that in 1589 the English Counter Armada, aka the Drake-Norris Expedition, suffered catastrophic losses double those of the Spanish Armada the previous year - the greatest naval disaster in English history. However, Elizabeth I largely succeeded in concealing the truth thanks to a skilful propaganda strategy: she banned publications about the crushing defeat and instead had pamphlets printed depicting an alternative reality. In the 16th century, “alternative facts” were of course much harder to fact-check than today, but then again, people didn’t have to deal with online misinformation, Telegram echo chambers, deepfakes and generative AI blurring the line between real and fake content, a technology that can be and already has been misused, raising urgent questions about how to regulate it.

Which brings us back to the soon-to-be-launched Armakida (and by this I don’t mean an actual off-the-shelf product, but the demonstration of its compatibility for custom-made applications).

Fortunately, it is highly unlikely that our state-of-the-art Armakida with its technological supremacy will suffer a similar fate on the economic edge AI battleground as the Spanish and English Armadas in 1588/89 - on the contrary, I foresee a glorious victory by unhindered landfall on all continents resulting in a ubiquitous, yet peaceful invasion, benefitting mankind. As for when exactly this will happen, I’m afraid none of us can access the digital history book files of the future.

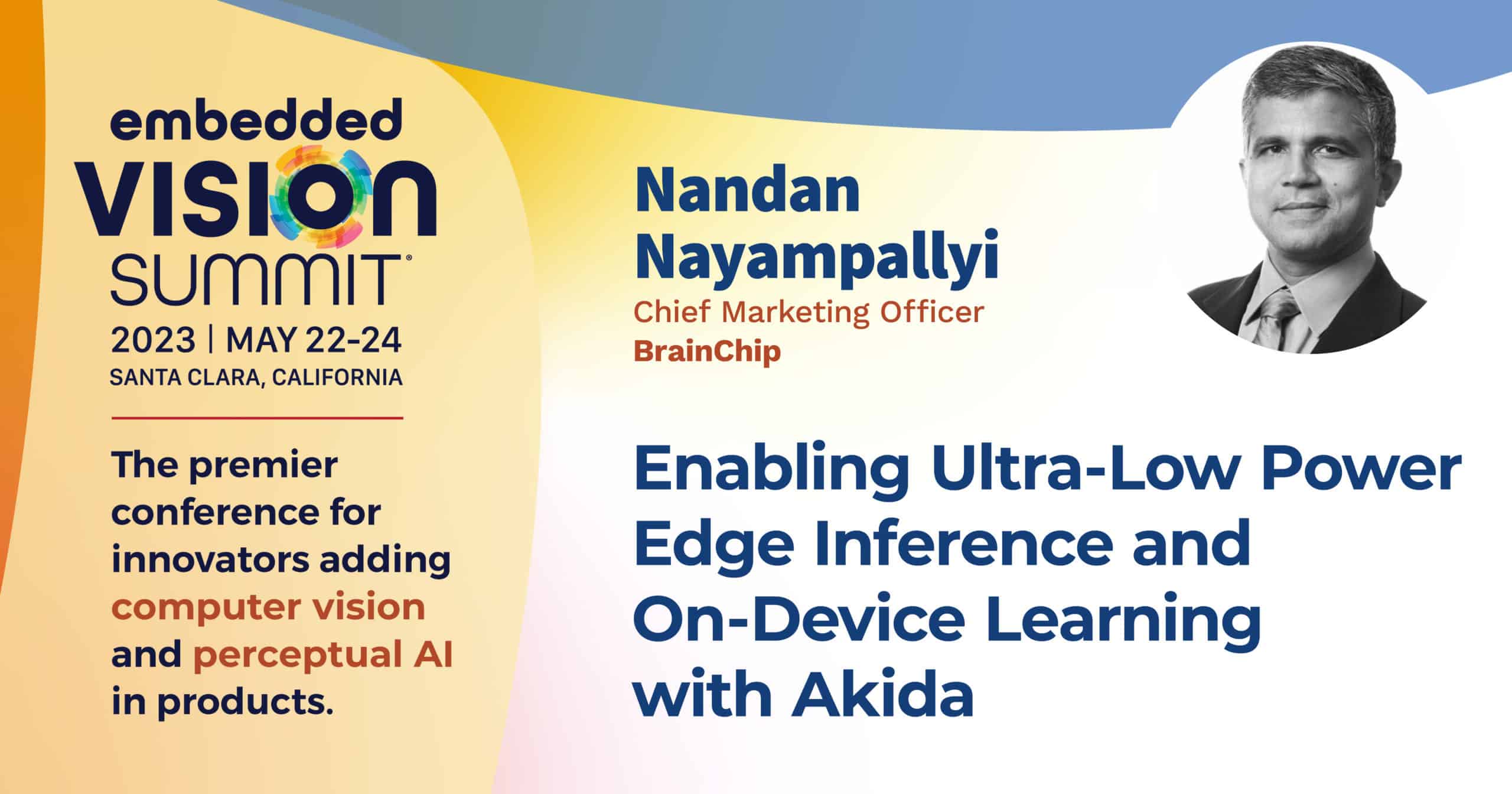

Personally, I don’t expect today’s ARM tech talk to affect the share price much, unless it goes hand-in-hand (or rather arm-in-arm) with a price-sensitive announcement (which I think is rather unlikely). However, the target audience (which is obviously not us retail shareholders) will hopefully prick up their ears and then act in their (and our) best interest. I believe most potential customers are still in the reconnaissance phase and would like to see real-life applications before committing, so this event is undoubtedly great exposure for Brainchip, but it won’t send the share price sky-rocketing like the Mercedes announcement early last year. Of course, I am happy to be proven wrong.