
BRN Discussion Ongoing

- Thread starter TechGirl


Adam82

Member

Just came across this on FB; does anyone have any insight into the new EV with Kia Connect?

Fullmoonfever

Top 20

Skimming around Google & came across Steve Blank again.

I've previously posted an article of his that included a reference to BRN; he has quite a background.

Refresher from May.


Steve Blank Artificial Intelligence and Machine Learning– Explained

Artificial Intelligence is a once-in-a-lifetime commercial and defense game changer (download a PDF of this article here) Hundreds of billions in public and private capital is being invested in Art…

steveblank.com


Read his recent blog post & felt like posting it, as it sounded so much like the dot-joining process of the 1000 eyes, or, in his quote... 1000 miles.

Steve Blank Mapping the Unknown – The Ten Steps to Map Any Industry

A journey of a thousand miles begins with a single step Lǎozi 老子 I just had lunch with Shenwei, one of my ex-students who had just taken a job in a mid-sized consulting firm. After a bit of catch…

steveblank.com


Posted on September 20, 2022 by steve blank

A journey of a thousand miles begins with a single step

Lǎozi 老子

I just had lunch with Shenwei, one of my ex-students who had just taken a job in a mid-sized consulting firm. After a bit of catching up I observed that he was looking a bit lost. “I just got handed a project to help our firm enter a new industry – semiconductors. They want me to map out the space so we can figure out where we can add value.

When I asked what they already knew about it, they tossed me a tall stack of industry and stock analyst reports, company names, web sites, blogs. I started reading through a bunch of it and I’m drowning in data but don’t know where to start. I feel like I don’t know a thing.”

I told Shenwei I was happy for him because he had just been handed an awesome learning opportunity – how to rapidly understand and then map any new market. He gave me an “easy for you to say” look, but before he could object I handed him a pen and a napkin and asked him to write down the names of companies and concepts he read about that have anything to do with the semiconductor business – in 30 seconds. He quickly came up with a list with 9 names/terms. (See Mapping – First Pass)

“Great, now we have a start. Now give me a few words that describe what they do, or mean, or what you don’t know about them.”

Don’t let the enormity of unknowns frighten you. Start with what you do know.

After a few minutes he came up with a napkin sketch that looked like the picture in Mapping – Second Pass.

https://steveblank.com/wp-content/uploads/2022/09/Mapping-2nd-pass.jpg

Now we had some progress.

I pointed out he now had a starter list that not only contained companies but the beginning of a map of the relationships between those companies. And while some of what he had were facts, others were hypotheses and concepts. And he had a ton of unanswered questions.

We spent the next 20 minutes deconstructing that sketch and mapping out the Second Pass list as a diagram (see Mapping – Third Pass).

As you keep reading more materials, you’ll have more questions than facts. Your goal is to first turn the questions into testable hypotheses (guesses). Then see if you can find data that turns the hypotheses into facts. For a while the questions will start accumulating faster than the facts. That’s OK.

Note that even with just the sparse set of information Shenwei had, in the bottom right-hand corner of his third mapping pass, a relationship diagram of the semiconductor industry was beginning to emerge.

Drawing a diagram of the relationships of companies in an industry can help you deeply understand how the industry works and who the key players are. Start building one immediately. As you find you can’t fill in all the relationships, the gaps outlining what you need to learn will become immediately visible.
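For anyone keeping this map digitally rather than on a wall chart, one way to hold the relationship diagram is a small directed graph in which any player with no known links shows up as a gap. This is a minimal Python sketch under that assumption; the class `IndustryMap`, its methods, and the generic segment names are hypothetical illustrations, not part of Blank's post.

```python
from collections import defaultdict


class IndustryMap:
    """Hypothetical directed graph of industry players; an edge src -> dst means src supplies dst."""

    def __init__(self):
        self.nodes = set()
        # edges[src] is a set of (dst, relation) pairs, so rereading a source adds no duplicates
        self.edges = defaultdict(set)

    def add_player(self, name):
        self.nodes.add(name)

    def add_relation(self, src, dst, relation):
        # Adding a relation also registers both players
        self.nodes.update((src, dst))
        self.edges[src].add((dst, relation))

    def suppliers_of(self, name):
        # Every player with an outbound edge pointing at `name`
        return sorted(src for src, targets in self.edges.items()
                      if any(dst == name for dst, _ in targets))

    def gaps(self):
        # Players with no known relationship in either direction:
        # the holes in the map that still need research
        linked = set()
        for src, targets in self.edges.items():
            for dst, _ in targets:
                linked.update((src, dst))
        return sorted(self.nodes - linked)


# Illustrative starter map using generic segment names (placeholders, not Shenwei's list)
m = IndustryMap()
for player in ["Equipment maker", "Foundry", "Chip designer",
               "EDA tools", "Device maker", "Memory maker"]:
    m.add_player(player)
m.add_relation("Equipment maker", "Foundry", "sells tools to")
m.add_relation("Foundry", "Chip designer", "manufactures for")
m.add_relation("EDA tools", "Chip designer", "licenses software to")
m.add_relation("Chip designer", "Device maker", "sells chips to")

print(m.suppliers_of("Chip designer"))
print(m.gaps())
```

Each relationship you learn shrinks the `gaps()` list, which mirrors the point that the relationships you can't yet fill in show you what to learn next.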

As the information fog was beginning to lift, I could see Shenwei’s confidence returning. I pointed out that he had a real advantage in that his assignment was in a known industry with lots of available information. He quickly realized that he could keep adding information to the columns in the third mapping pass as he read through the reports and web sites.

Google and Google Scholar are your best friends. As you discover new information, expand your search terms.

My suggestion was to use the diagram in the third mapping pass as the beginning of a wall chart – either physically (or virtually if he could keep it all in his head) – and, every time he learned more about the industry, to update the relationship diagram of the industry and its segments. (When he pointed out that there were existing diagrams of the semiconductor industry he could copy, I suggested that he ignore them. The goal was for him to understand the industry well enough that he could draw his own map ab initio – from the beginning. And if he did so, he might create a much better one.)

When lunch was over Shenwei asked if it was OK if he checked in with me as he learned new things and I agreed. What he didn’t know was that this was only the first step in a ten-step industry mapping process.

Epilog

Over the next few weeks Shenwei shared what he had learned and sent me his increasingly refined and updated industry relationship map. (The 4th mapping pass showed up 48 hours later.)

In exchange I shared with him the news that he was on step one of a ten-step industry mapping program. Over the next few weeks he quickly built on the industry map to answer questions 2 through 10 below.

Two weeks later he handed his leadership an industry report that covered the ten steps below and contained a sophisticated industry diagram he created from scratch. A far cry from his original napkin sketch!

Six months later his work on this project convinced his company that there was a large opportunity in the semiconductor space, and they started a new practice with him in it. His work won him the “best new employee” award.

The Ten Steps to Map Any Industry

Start by continuously refining your understanding of the industry by diagramming it. List all the new words you encounter and create a glossary in your own words. Start collecting the best sources of information you’ve read.

Basic Industry Understanding

- Diagram the industry and its segments
  - Start with anything
  - Build your learning by successive iteration
  - Who are the key suppliers to each segment?
  - How does this industry feed into the larger economy?
- Create a glossary of industry-unique terms
  - Can you explain them to others? Are there analogies to other markets?
- Who are the industry experts in each segment? For the entire industry?
  - Economic experts? E.g. industry analysts, universities, think tanks
  - Technology experts? E.g. universities, think tanks
  - Geographic experts?
- Key conferences, blogs, web sites, etc.
  - What are the best open-source data feeds?
  - What are the best paid resources?

Detailed Industry Understanding

- Who are the market leaders? New entrants? By revenue, market share and growth rate
  - In the U.S.
  - Western countries
  - China
- Understand the technology flows
  - Who builds on top of whom
  - Who is critical versus who can be substituted
- Understand the economic flows
  - Who buys from whom in this industry
  - Who buys the output from this industry
  - How cyclical is demand?
  - What are the demand drivers?
  - How do companies inside each segment get funded? Any differences in capital requirements? Ease of starting, etc.
- If applicable, understand the personnel flow for each segment
  - Do people move just between their segments or up and down through the entire industry?
  - Where do they get trained?

Forecasting

- What’s changed in the last 10 years? 5 years?
  - Diagram the past incarnations of the industry
- What’s going to change in the next 5 years?
  - Any big insight on disruption?
  - New entrants?
  - New technology?
  - New foreign suppliers?
  - Diagram your model of the industry in 5 years


Straw

Guest

Ok, they are theoretical investors, interesting. Well, how about that now *laughs*: BrainChip was never among his tips.

oh how fooooooooool.... if only you had known that sooner

"Good investment?

Let’s imagine I had invested $1,000 in BrainChip shares after market close on 25 October 2021. I would have walked away with 2,020 shares with ten cents left over.

Today, these shares are fetching 89 cents a share, based on the share price at the time of writing. So this investment would now be worth $1,797.80.

If I’d invested $10,000 in BrainChip shares a year ago, my investment would now be fetching $17,978.

Looking at the bigger picture for BrainChip shares, on 19 January 2022, the company’s share price hit a high of $2.13.

At that time, my $1,000 investment would have been worth a mammoth $4,302.60. If I had cashed in, I could have walked away with a profit of more than $3,300 on a $1,000 investment.

Overall, if I had invested in BrainChip shares a year ago, I would certainly be happy with my investment.

Currently, BrainChip does not pay any dividends and has never done so in the past. As my Foolish colleague James reported last week, short sellers have also been targeting BrainChip shares of late."

If I'd invested $1,000 in BrainChip shares a year ago, would I be happy right now?

BrainChip Holdings Ltd (ASX: BRN) shares have rocketed ahead in the past year, making them a fine investment over that time.

www.fool.com.au
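The article's figures can be checked with a few lines of arithmetic. This Python sketch uses only the numbers quoted above; the implied purchase price (about 49.5 cents a share) is derived from those numbers and is not stated in the article, and the $10,000 case assumes whole shares bought at that same implied price.

```python
from decimal import Decimal

INVESTED = Decimal("1000")
SHARES = 2020
LEFT_OVER = Decimal("0.10")

# Implied entry price, derived from the quoted figures (an assumption, not stated in the article)
buy_price = (INVESTED - LEFT_OVER) / SHARES

value_now = SHARES * Decimal("0.89")        # at 89 cents a share
value_at_high = SHARES * Decimal("2.13")    # at the $2.13 high of 19 January 2022
profit_at_high = value_at_high - INVESTED

# The $10,000 case, buying whole shares at the same implied price
shares_10k = int(Decimal("10000") / buy_price)
value_10k = shares_10k * Decimal("0.89")

print(f"implied buy price: ${buy_price:.4f}")
print(f"$1,000 at $0.89:   ${value_now:,.2f}")
print(f"$1,000 at $2.13:   ${value_at_high:,.2f} (profit ${profit_at_high:,.2f})")
print(f"$10,000 at $0.89:  {shares_10k:,} shares, ${value_10k:,.2f}")
```

On these assumptions the $10,000 line comes out at about $17,980 rather than the article's $17,978, which looks like the $1,000 result multiplied by ten; the small difference comes from buying whole shares.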

Let's imagine I had invested 7 years ago, taken a genuine interest in the activities of the company and what they were trying to create, and not listened to people peddling investment services, or to shorter thugs/high-volume trader thugs trying to screw me over through a combination of psychological/algorithmic thuggery and the (not at all plausibly deniable, but ingrained) self-interest of the market operator/organised legal crime syndicate (offering zero to negative support to the company or the investors they intended to make money off, by creating synthetic volatility and taking from both ends). Instead I did my own research, accepted a certain level of risk, made my own decisions and held my shares through good and bad periods, both in my life and in the company's fortunes. Then I continued to accumulate, reaching well over a million shares by the time a huge company like Mercedes (just as a possible example amongst others) decided to publicly validate their work as being commercial. Oh, gee, well, maybe ARM listing the company prominently on their website as a partner might have been a good thing as well. Of course this is all purely a case of hindsight.

Let's imagine also that I sold some at a great price around that point, changing my life (having felt I'd done my bit of risk and personal pain), and still held 2/3 of those shares in the expectation of the company increasing revenue over time while massively increasing its talent base and profile amongst potential partners in order to become cash flow positive. And then imagine that the revenue did increase in a non-linear manner over time. Would I panic and dump the rest, or would I then have begun to consider the possibility of significant growth of the company and its activities, possibly leading to greater potential dividends given the very high margins provided by their business model and the ridiculously enormous and varied markets for their patented IP?

Gosh I don't know. Just as well it's all hypothetical. My head hurts so I'll just have to hypothetically pay some benevolent advisor to talk me out of it.

Fullmoonfever

Top 20

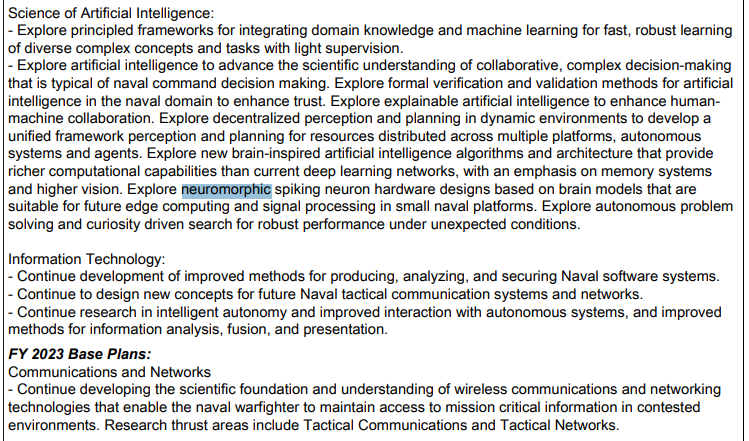

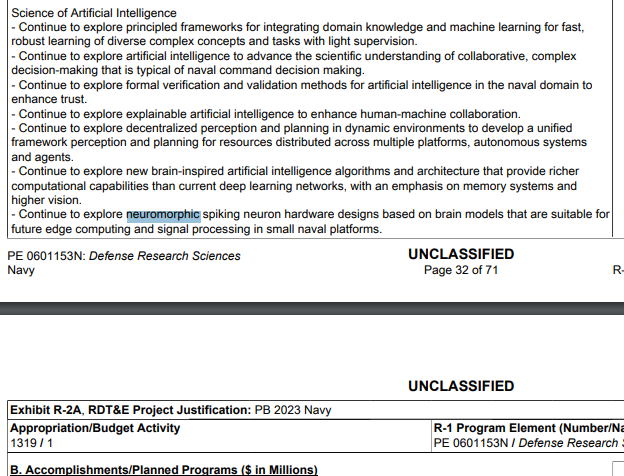

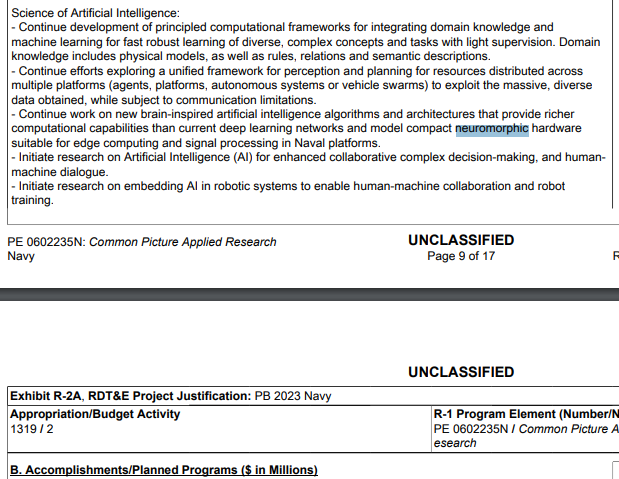

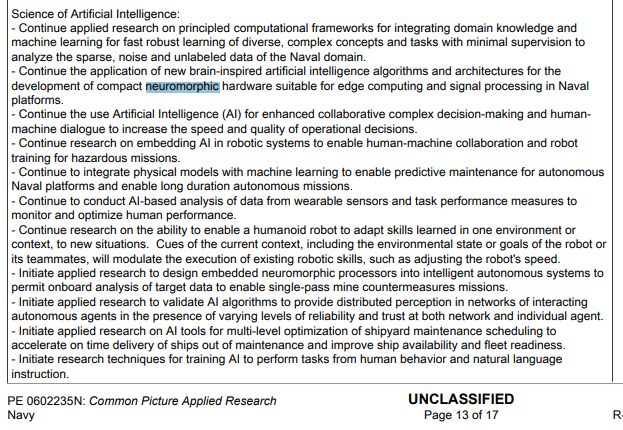

Having a look through to see what they're thinking on neuromorphic.

Looks like they have a few upcoming projects, amongst the multitudes, that they want to explore neuromorphic in, & hopefully we get a look-in with some partners.

Department of Defense

Fiscal Year (FY) 2023 Budget Estimates

April 2022

PDF Book HERE

warning - 684 pages


Fullmoonfever

Top 20

Learning How to Learn:

Neuromorphic AI Inference at the Edge

Q&A with Peter van der Made, BrainChip Founder and Chief Technology Officer

Don't recall seeing this before; I checked the BRN website and couldn't see it uploaded, so correct me if I missed it.

Thought it may have been from a while ago, but the colours and style of the presentation PDF match our current new branding.

A search indicates it's a late-September interview with PVDM by the Global Semiconductor Alliance.

Worth a read. Below is a snip of a couple of points PVDM made that I really liked, around use cases / customers, and the tense: current / happening, not... might or potentially.

Q: BrainChip customers are already deploying smarter endpoints with independent learning and inference capabilities, faster response times, and a lower power budget. Can you give us some real-world examples of how AKIDA is revolutionizing AI inference at the edge?

Peter: Automotive is a good place to start. Using AKIDA-powered sensors and accelerators, automotive companies can now design lighter, faster, and more energy efficient in-cabin systems that enable advanced driver verification and customization, sophisticated voice control technology, and next-level gaze estimation and emotion classification capabilities.

In addition to redefining the automotive in-cabin experience, AKIDA is helping enable new computer vision and LiDAR systems to detect vehicles, pedestrians, bicyclists, street signs, and objects with incredibly high levels of precision. We’re looking forward to seeing how these fast and energy efficient ADAS systems help automotive companies accelerate the rollout of increasingly advanced assisted driving capabilities.

In the future, we’d also like to power self-driving cars and trucks. But we don’t want to program these vehicles. To achieve true autonomy, cars and trucks must independently learn how to drive in different environmental and topographical conditions such as icy mountain roads with limited visibility, crowded streets, and fast-moving highways.

Q: Aside from automotive, what are some other multimodal edge use cases AKIDA enables?

Peter: Smart homes, automated factories and warehouses, vibration monitoring and analysis of industrial equipment, as well as advanced speech and facial recognition applications. AKIDA is also accelerating the design of robots using sophisticated sensors to see, hear, smell, touch, and even taste.

The simple layered structure of today’s AI networks is limiting the ability of AI to progress beyond basic classification. BrainChip’s advanced research is pushing the boundaries of artificial intelligence by researching neuromorphic models of the human cortex, the seat of intelligence. The frontal lobes of the cortex are significantly associated with intelligence. The aim of our research is to construct in hardware a temporal neuromorphic cortical network that is as modular and flexible as the human brain.

Advanced artificial intelligence systems that result from this research must be capable of recognizing partially obscured objects, anticipating expected outcomes, and recognizing behavior. This is the next generation of neuromorphic computing, and I hope to continue contributing to the research and science that will make this possible.



Fullmoonfever

Top 20

Well, these newly accepted standards around privacy, security etc. should be a good thing for Akida imo.

Given the inbuilt privacy and security we get from being cloud independent, Akida should give manufacturers, engineers and designers a great, easy option in some use cases.

www.eenewsembedded.com


Matter 1.0 release brings interoperability and security to smart home IoT

Technology News | October 6, 2022

By Jean-Pierre Joosting


The Connectivity Standards Alliance, the international community of more than 550 technology companies committed to open standards for the Internet of Things, has announced the release of the Matter 1.0 specification and the opening of the Matter certification program. Member companies who make up all facets of the IoT now have a complete program for bringing the next generation of interoperable products that work across brands and platforms to market with greater privacy, security, and simplicity for consumers.

As part of the Matter 1.0 release, authorized test labs are open for product certification, the test harnesses and tools are available, and the open-source reference design software development kit (SDK) is complete — all to bring new, innovative products to market. Further, Alliance members with devices already deployed and with plans to update their products to support Matter can now do so, once their products are certified.

“What started as a mission to unravel the complexities of connectivity has resulted in Matter, a single, global IP-based protocol that will fundamentally change the IoT,” said Tobin Richardson, President and CEO of the Connectivity Standards Alliance. “This release is the first step on a journey our community and the industry are taking to make the IoT more simple, secure, and valuable no matter who you are or where you live.”

Over 280 member companies — including Amazon, Apple, Comcast, Google, Signify and SmartThings — have brought their technologies, experience, and innovations together to ensure Matter met the needs of all stakeholders including users, product makers, and platforms. Collectively, these companies led the way through requirements and specification development, reference design, multiple test events and final specification validation to reach this industry milestone.

“We would not be where we are today without the strength and dedication of the Alliance members who have provided thousands of engineers, intellectual property, software accelerators, security protocols, and the financial resources to accomplish what no single company could ever do on their own,” said Bruno Vulcano, Chair of the Alliance Board and R&D Manager for Legrand Digital Infrastructure. “With members equally distributed throughout the world, Matter is the realization of a truly global effort that will benefit manufacturers, customers and consumers alike, not just in a single region or continent.”

More than just a specification, the Matter 1.0 standard launches with test cases and comprehensive test tools for Alliance members and a global certification program including eight authorized test labs who are primed to test not only Matter, but also Matter’s underlying network technologies, Wi-Fi and Thread. Wi-Fi enables Matter devices to interact over a high-bandwidth local network and allows smart home devices to communicate with the cloud. Thread provides an energy efficient and highly reliable mesh network within the home. Both the Wi-Fi Alliance and Thread Group partnered with the Connectivity Standards Alliance to help realize the complete vision of Matter.

“Matter and Thread resolve interoperability and connectivity issues in smart homes so manufacturers can focus on other value-adding innovations,” Thread Group president Vividh Siddha said. “Thread creates a self-healing mesh network which grows more responsive and reliable with each added device, and its ultra-lower power architecture extends battery life. Combined, Thread with Matter is a powerful choice for product companies and a great value for consumers.”

“Matter leverages Wi-Fi’s sophisticated network efficiency, global pervasiveness of more than 18 billion devices in use today, and robust standards-based foundation to help deliver the IoT vision,” said Edgar Figueroa, president and CEO, Wi-Fi Alliance®. “Together, Wi-Fi CERTIFIED and Matter bring simple and secure interoperability for a better user experience with a wide range of IoT devices.”

Matter is also striking new ground with security policies and processes using distributed ledger technology and Public Key Infrastructure to validate device certification and provenance. This will help to ensure users are connecting authentic, certified, and up-to-date devices to their homes and networks.

This initial release of Matter, running over Ethernet, Wi-Fi, and Thread, and using Bluetooth Low Energy for device commissioning, will support a variety of common smart home products, including lighting and electrical, HVAC controls, window coverings and shades, safety and security sensors, door locks, media devices including TVs, controllers as both devices and applications, and bridges.

www.csa-iot.org

www.buildwithmatter.com

Given our inbuilt ability around privacy and security by being cloud independent should give manufacturers, engineers, designers a great easy option in some use cases.

Matter 1.0 release brings interoperability and security to smart home IoT

The Connectivity Standards Alliance and its members have released the Matter 1.0 standard and certification program.

Matter 1.0 release brings interoperability and security to smart home IoT

Technology News | October 6, 2022

By Jean-Pierre Joosting

COMCAST IOT APPLE AMAZON SECURITY

The Connectivity Standards Alliance, the international community of more than 550 technology companies committed to open standards for the Internet of Things, has announced the release of the Matter 1.0 specification and the opening of the Matter certification program. Member companies who make up all facets of the IoT now have a complete program for bringing the next generation of interoperable products that work across brands and platforms to market with greater privacy, security, and simplicity for consumers.

As part of the Matter 1.0 release, authorized test labs are open for product certification, the test harnesses and tools are available, and the open-source reference design software development kit (SDK) is complete — all to bring new, innovative products to market. Further, Alliance members with devices already deployed and with plans to update their products to support Matter can now do so, once their products are certified.

“What started as a mission to unravel the complexities of connectivity has resulted in Matter, a single, global IP-based protocol that will fundamentally change the IoT,” said Tobin Richardson, President and CEO of the Connectivity Standards Alliance. “This release is the first step on a journey our community and the industry are taking to make the IoT more simple, secure, and valuable no matter who you are or where you live.”

Over 280 member companies — including Amazon, Apple, Comcast, Google, Signify and SmartThings — have brought their technologies, experience, and innovations together to ensure Matter met the needs of all stakeholders including users, product makers, and platforms. Collectively, these companies led the way through requirements and specification development, reference design, multiple test events and final specification validation to reach this industry milestone.

“We would not be where we are today without the strength and dedication of the Alliance members who have provided thousands of engineers, intellectual property, software accelerators, security protocols, and the financial resources to accomplish what no single company could ever do on their own,” said Bruno Vulcano, Chair of the Alliance Board and R&D Manager for Legrand Digital Infrastructure. “With members equally distributed throughout the world, Matter is the realization of a truly global effort that will benefit manufacturers, customers and consumers alike, not just in a single region or continent.”

More than just a specification, the Matter 1.0 standard launches with test cases and comprehensive test tools for Alliance members and a global certification program including eight authorized test labs that are primed to test not only Matter, but also Matter’s underlying network technologies, Wi-Fi and Thread. Wi-Fi enables Matter devices to interact over a high-bandwidth local network and allows smart home devices to communicate with the cloud. Thread provides an energy efficient and highly reliable mesh network within the home. Both the Wi-Fi Alliance and Thread Group partnered with the Connectivity Standards Alliance to help realize the complete vision of Matter.

“Matter and Thread resolve interoperability and connectivity issues in smart homes so manufacturers can focus on other value-adding innovations,” Thread Group president Vividh Siddha said. “Thread creates a self-healing mesh network which grows more responsive and reliable with each added device, and its ultra-low-power architecture extends battery life. Combined, Thread with Matter is a powerful choice for product companies and a great value for consumers.”

“Matter leverages Wi-Fi’s sophisticated network efficiency, global pervasiveness of more than 18 billion devices in use today, and robust standards-based foundation to help deliver the IoT vision,” said Edgar Figueroa, president and CEO, Wi-Fi Alliance®. “Together, Wi-Fi CERTIFIED and Matter bring simple and secure interoperability for a better user experience with a wide range of IoT devices.”

Matter is also breaking new ground with security policies and processes using distributed ledger technology and Public Key Infrastructure to validate device certification and provenance. This will help to ensure users are connecting authentic, certified, and up-to-date devices to their homes and networks.

This initial release of Matter, running over Ethernet, Wi-Fi, and Thread, and using Bluetooth Low Energy for device commissioning, will support a variety of common smart home products, including lighting and electrical, HVAC controls, window coverings and shades, safety and security sensors, door locks, media devices including TVs, controllers as both devices and applications, and bridges.

www.csa-iot.org

www.buildwithmatter.com

Fullmoonfever

Top 20

US ARL hitting neuromorphic as well.

Think someone stuffed up with dates though unless running for 5 yrs haha



Deleted member 118

Guest

Go and whinge to the company, flood Tony with useless, time-wasting emails that he feels obliged to answer.

I couldn't care less if zero posters liked, loved, on fire or laughed at my posts.

Listen to the presentation yourself, rather than being lazy, and then form your own opinion.

OR....you can email me directly at graphitearms@gmail.com

Then you can reveal yourself, rather than hiding behind your keyboard and whining like a spoilt little brat.

The only positive thing that I can say is that you have invested in a great company.

Goodnight from Perth xxx

thelittleshort

Top Bloke

Not sure of relevance, but new from SiFive

Izzzzzzzzzzy

Regular

Morning, I’m on the way to raid the Sydney office of BRN to get first eyes on the 4C today and I won’t even tell any of you

The Pope

Regular

Gees it looks like you have thrown all the toys out of the cot on this occasion BUT sounds like you feel a lot better for doing it. Hope you are ok.

Go and whinge to the company, flood Tony with useless, time-wasting emails that he feels obliged to answer.

I couldn't care less if zero posters liked, loved, on fire or laughed at my posts.

Listen to the presentation yourself, rather than being lazy, and then form your own opinion.

OR....you can email me directly at graphitearms@gmail.com

Then you can reveal yourself, rather than hiding behind your keyboard and whining like a spoilt little brat.

The only positive thing that I can say is that you have invested in a great company.

Goodnight from Perth xxx

Good morning from east coast of Australia. Xxx

wilzy123

Founding Member

Gees it looks like you have thrown all the toys out of the cot on this occasion BUT sounds like you feel a lot better for doing it. Hope you are ok.

Good morning from east coast of Australia. Xxx

Dozzaman1977

Regular

What a complete waste of time reading shit from these fools

well how that now, *laughs, Brainchip was never with his tips

oh how fooooooooool.... if only you had known that sooner

"Good investment?

Let’s imagine I had invested $1,000 in BrainChip shares after market close on 25 October 2021. I would have walked away with 2,020 shares with ten cents left over.

Today, these shares are fetching 89 cents a share, based on the share price at the time of writing. So this investment would now be worth $1,797.80.

If I’d invested $10,000 in BrainChip shares a year ago, my investment would now be fetching $17,978.

Looking at the bigger picture for BrainChip shares, on 19 January 2022, the company’s share price hit a high of $2.13.

At that time, my $1,000 investment would have been worth a mammoth $4,302.60. If I had cashed in, I could have walked away with more than $3,300 in profit from a $1,000 investment.

Overall, if I had invested in BrainChip shares a year ago, I would certainly be happy with my investment.

Currently, BrainChip does not pay any dividends and has never done so in the past. As my Foolish colleague James reported last week, short sellers have also been targeting BrainChip shares of late."

If I'd invested $1,000 in BrainChip shares a year ago, would I be happy right now?

BrainChip Holdings Ltd (ASX: BRN) shares have rocketed ahead in the past year, making them a fine investment over that time.

www.fool.com.au

It's not worth the paper it's printed on.

So these fools get paid $50 to $100 per bullshit article they get approved by Fool management.

You too

Gees it looks like you have thrown all the toys out of the cot on this occasion BUT sounds like you feel a lot better for doing it. Hope you are ok.

Good morning from east coast of Australia. Xxx

Don’t be a dick.

show some respect….

Nan. xxx

TheUnfairAdvantage

Regular

Gee Tech

Go and whinge to the company, flood Tony with useless, time-wasting emails that he feels obliged to answer.

I couldn't care less if zero posters liked, loved, on fire or laughed at my posts.

Listen to the presentation yourself, rather than being lazy, and then form your own opinion.

OR....you can email me directly at graphitearms@gmail.com

Then you can reveal yourself, rather than hiding behind your keyboard and whining like a spoilt little brat.

The only positive thing that I can say is that you have invested in a great company.

Goodnight from Perth xxx

That’s a little OTT. Whaaaat. Spoilt little brat. MD is a valuable contributor.

Being Lazy was not the point. It’s just another example of your pompous “I know something but I can’t tell you” suggestive posts.

Plus. No one is suggesting emailing Tony.

Prnewy Poor Tech. Tech seems like a sensitive soul.

Gees it looks like you have thrown all the toys out of the cot on this occasion BUT sounds like you feel a lot better for doing it. Hope you are ok.

Good morning from east coast of Australia. Xxx

Gee Nanna. Prnewy was just concerned about Tech’s welfare.

You too

Don’t be a dick.

show some respect….

Nan. xxx


Deleted member 118

Guest

I apologise, but sometimes I just can’t help myself.

Don’t be a dick….

show some respect….

nan. xxx

Izzzzzzzzzzy

Regular

We don’t need the other forum; we’ve got the stock exchange, where BRN lovers attack BRN lovers in a massive orgy.
