Part 2

Design and operation of a Prophesee event-based GenX320 Camera.

The event-based camera selected for the technical analysis is Prophesee's GenX320 Metavision sensor, launched on 16 October 2023 [9]. The GenX320 design is inspired by the human eye, as shown in Figure 5. The illustration depicts light passing through the eye's lens, striking individual photoreceptors, and being converted into electrical signals for processing by the cortex [10].

With the human eye as the inspiration for the Prophesee GenX320 design, Table 2 lists the key specifications of the sensor. This section aims to demystify the hardware that drives an event camera and the specifications associated with it.

As previously discussed, event cameras produce a continuous data stream with a time resolution equivalent to more than 10,000 FPS and sub-microsecond latency. Continuously sampling a dynamic scene helps reveal detail in very fast motion without motion blur, a common issue with standard frame-based cameras [25]. Figure 12 and Figure 13 display the raw output of an event camera replotted in X-Y time space. Figure 13 also shows a rotating disc sampled by both an event camera and a standard camera, comparing the two output streams over time to emphasise the difference highlighted in the previous section.
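As a rough illustration of what a "10,000 FPS time equivalent" means, the sketch below groups events into 100 µs slices (1 s / 10,000). It is a minimal sketch under stated assumptions rather than the Metavision implementation: the tuple layout `(x, y, p, t_us)`, the function names, and the sample events are all hypothetical.

```python
from collections import defaultdict

def window_length_us(fps_equivalent):
    """Length of one time slice in microseconds for a given FPS equivalent."""
    return 1_000_000 // fps_equivalent

def bin_events(events, fps_equivalent=10_000):
    """Group (x, y, p, t_us) events into time slices.

    Each slice plays the role of one 'frame' in an X-Y time-space view.
    Slices with no events are simply absent, unlike frames from a
    frame-based camera, which are always produced.
    """
    window_us = window_length_us(fps_equivalent)
    slices = defaultdict(list)
    for x, y, p, t_us in events:
        slices[t_us // window_us].append((x, y, p))
    return dict(slices)

# Hypothetical events: (x, y, polarity, timestamp in microseconds)
events = [(10, 20, 1, 5), (11, 20, 1, 95), (12, 21, 0, 130), (13, 22, 1, 310)]
slices = bin_events(events)
for index in sorted(slices):
    print(index, slices[index])
```

In this example no events fall in slice 2, so nothing is stored or transmitted for that interval.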

Standard cameras read out pixels sequentially and synchronously at a fixed FPS, on request from a processing unit. An event camera transfers information with significantly lower latency because each pixel operates asynchronously and redundant data is not transmitted. As shown in Figure 6, synchronous transmission sends data packets continuously, so it carries both relevant and redundant information. The asynchronous transmission of an event camera sends only useful information, and only when an event triggers it.
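The difference in transmitted data can be sketched with simple counting. The example below is illustrative only: the resolution is taken from the GenX320 figures in this article, while the per-step counts of changed pixels are invented for the example and the function names are hypothetical.

```python
WIDTH, HEIGHT = 320, 320  # GenX320 resolution quoted in this article

def frame_based_samples(num_frames):
    """A frame-based camera sends every pixel in every frame, changed or not."""
    return WIDTH * HEIGHT * num_frames

def event_based_samples(changed_pixels_per_step):
    """An event camera sends one event per pixel that actually changed."""
    return sum(changed_pixels_per_step)

# Invented example: number of pixels that changed in each of 10 time steps
changed = [0, 12, 0, 0, 40, 3, 0, 0, 0, 5]

frame_total = frame_based_samples(len(changed))
event_total = event_based_samples(changed)
print("frame-based pixel samples:", frame_total)
print("event-based events:", event_total)
```

For a mostly static scene the event count stays small, whereas the frame-based count grows with every frame regardless of scene activity.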

With a core understanding of how an event camera operates, we can now look deeper into the hardware design that makes the technology possible. The processing flow shown in Figure 6 includes a magnified view of the GenX320 sensor and shows a portion of the 102,400 (320 × 320) pixels and internal circuit tracks within the 3 × 4 mm sensor [2]. Each pixel comprises a photodiode, the equivalent of a photoreceptor in the human eye, and a contrast Change Detector (CD) unit. An individual pixel receives light via the photodiode, which generates a current that flows to a logarithmic converter; once an event is triggered, a voltage is output for processing into meaningful data.

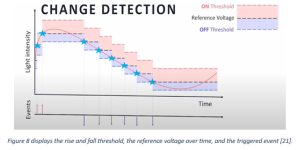

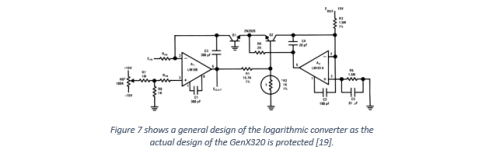

The role of the CD is to convert a linear current into a logarithmic voltage, so the event camera perceives light intensity much as the human eye does, on a logarithmic scale [17]. The output response, measured in decibels (dB), is a logarithmic measure of energy and quantifies the dynamic range of light intensity over which the GenX320 can operate [18]. To give context to the complexity and miniaturisation of the CD unit depicted in Figure 7, consider the AN-30 log converter produced by Texas Instruments [19]. The circuit itself does not need to be understood. The operation of the CD unit is not covered in detail here because the actual design of the GenX320 is commercially sensitive and little detail has been published. In summary, a small change at low light levels and a large change at high light levels produce similar changes in voltage, which allows a pixel to respond effectively over a broad range of light intensities.
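The logarithmic behaviour summarised above can be checked numerically. This is a minimal sketch, not the GenX320 circuit: the natural-log model, the unit scale factor, and the example current values are assumptions, and the decibel calculation uses the 20·log10 ratio convention commonly quoted for image-sensor dynamic range.

```python
import math

def log_voltage(photocurrent, scale=1.0):
    """Idealised log converter: output voltage proportional to ln(current)."""
    return scale * math.log(photocurrent)

def dynamic_range_db(i_max, i_min):
    """Dynamic range in dB, using the 20*log10 convention (an assumption here)."""
    return 20 * math.log10(i_max / i_min)

# A 10% increase at a low light level and at a high light level
# (arbitrary units) gives the same change in output voltage.
delta_low = log_voltage(1.1) - log_voltage(1.0)
delta_high = log_voltage(1100.0) - log_voltage(1000.0)
print(delta_low, delta_high)

# A 1,000,000:1 ratio between brightest and darkest usable intensity
range_db = dynamic_range_db(1_000_000, 1)
print(range_db, "dB")
```

Because the output depends on the ratio of intensities rather than their difference, the same relative change registers equally in dim and bright parts of a scene.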

When an event is triggered, the pixel requests a readout. The row arbiter selects the row (Y) on a first-come-first-served basis. The triggered pixels on the selected row are then enabled for transmission to the readout unit depicted in Figure 9. The readout unit stores the active pixels as an array of X coordinates and assigns them all the same timestamp. At this point, each retrieved pixel is separated into a unique event. Each event is a data packet containing the X-Y coordinate (X, Y), the increase or decrease in intensity (polarity, P), and the timestamp in microseconds (T), in the format [X, Y, P, T]. The recorded event data is transmitted from the readout unit to a central, graphics, or neuromorphic processing unit for use by the wider application, as shown in Figure 10.
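The readout sequence described above can be sketched in software. This is a simplified model under stated assumptions, not Prophesee's hardware logic: the function name, the 1/0 polarity encoding, and the `row_period_us` parameter (the time assumed to service one row) are hypothetical.

```python
def read_out(triggered, start_time_us=0, row_period_us=1):
    """Turn triggered pixels into [X, Y, P, T] events.

    triggered: list of (x, y, polarity) in the order the pixels fired.
    Rows are served first come, first served; every triggered pixel on
    the selected row shares one timestamp.
    """
    # Row arbiter: remember rows in the order they first requested readout.
    rows = {}
    for x, y, p in triggered:
        rows.setdefault(y, []).append((x, p))

    events = []
    t = start_time_us
    for y, pixels in rows.items():
        # Readout unit: collect the active X coordinates of this row,
        # stamp them with the same time, and split them into events.
        for x, p in sorted(pixels):
            events.append([x, y, p, t])
        t += row_period_us  # assumed time taken before the next row is served
    return events

# Hypothetical triggers: two pixels on row 10, one on row 3
triggered = [(5, 10, 1), (7, 3, 0), (2, 10, 0)]
events = read_out(triggered)
for event in events:
    print(event)
```

Row 10 requested readout first, so both of its pixels are emitted with the same timestamp before row 3 is served.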

To summarise sections one and two, we have explained the fundamentals of each camera type and its associated characteristics, benefits, and shortcomings. We have examined how an event camera mimics the human eye, what drives the critical specifications detailed in Table 2, and the hardware-level design and operation of the Prophesee GenX320 event-based camera. The following section covers the software configuration of the camera and the simulation of recorded RAW data in Metavision Studio.

References

[9] Prophesee Releases Fifth-Generation GenX320 Event-based Metavision Sensor (ts2.pl)

[10] Prophesee's Metavision Training Video

[11] What is Event-Based Vision | Metavision by Prophesee 00:30

[12] What is Event-Based Vision | Metavision by Prophesee 00:40

[13] What is Event-Based Vision | Metavision by Prophesee 01:00

[14] GenX320_Sensor_Size_Branded_CES_Web_3-1.jpg (1440×1440) (prophesee.ai)

[15] What is Event-Based Vision | Metavision by Prophesee 01:31

[16] What is Event-Based Vision | Metavision by Prophesee 01:31

[17] logs and the human eye

[18] Log dB of the eye

[19] Texas Instruments AN-Log Converter 100 dB

[20] Event-Based Metavision® Sensor GENX320 | PROPHESEE

[21] What is Event-Based Vision | Metavision by Prophesee 06:13

[22] Event-Based Metavision® Sensor GENX320 | PROPHESEE

[23] What is Event-Based Vision | Metavision by Prophesee 07:08

[24] What is Event-Based Vision | Metavision by Prophesee 04:34

[25] Event-Based Metavision® Sensor GENX320 | PROPHESEE

[26] Metavision-XY Time Space Plot
