
BRN Discussion Ongoing

- Thread starter TechGirl


That's how the Sami reindeer herders distinguish their livestock.

Any thoughts as to why this Pokemon is making a comeback?


FJ-215

Regular

G'day sleepy,

With due respect, no offense intended:

1: Really? You believe a former NED venting his frustration is unprofessional? He obviously has skin in the game with our beloved BRN. That is why he has every right to express his own opinion, with or without inside info.

2: Our director Pia sold her shares right after Sean expressed his confidence and our chairman stated at the AGM that he can't wait to join her on any boards. Don't forget, she sold before the CR. I, SPEAKING FOR MYSELF ONLY, DO NOT BELIEVE THIS IS RIGHT.

3: For reference, the CR, which was denied at our last AGM, came as a surprise (at least to me) at an SP of 19.3c, close to the lowest. DOES THIS RING A BELL?

4: I am absolutely shocked and embarrassed that someone would even dare to claim he built a better team and ecosystem compared with a less competitive team with a not-so-competitive product line that nonetheless landed 2, yes two, IP LICENCES. THIS IS BEYOND MY DOT LINE.

IN SHORT, I DO NOT BELIEVE SEAN IS CAPABLE OF DOING HIS JOB AT ALL.

Back in April, Rinaldi was a top 50 holder (50th spot), with just under 2.2 million shares. Still some skin in the game there. I think his comments will ring true for many of us.

BRN Top 50

Don't get me started on the CR that came from nowhere......

Good news is the SP is showing signs of life so hopefully signed deals are imminent. (As in next week)

FJ-215

Regular

Would have recommended working on the red nose but........

That's how the Sami reindeer herders distinguish their livestock.

All the other reindeer just seem to have been caught in the headlights.

Frangipani

Regular

Haven’t come across any concrete links, but here is an observation that may be of interest:

A couple of months ago, I noticed that Steve Harbour had left Southwest Research Institute aka SwRI (which has been closely collaborating with Intel on neuromorphic research for years) to become Director of AI Hardware Research at Parallax Advanced Research, a non-profit research institute headquartered in Dayton, OH that describes its purpose as “Solving critical challenges for the Nation's security and prosperity.”

He has since liked a few LinkedIn posts by BrainChip, if I remember correctly (sorry, don’t have the time right now to search for them).

This may not mean anything (especially given he seems to be quite a prolific liker in general), but who knows…

Definitely good to know that he is aware of our company and its technology.

Home | Parallax Research

We are a 501(c)(3) nonprofit research institute. We solve critical challenges for the Nation's security and prosperity. We do this by focusing on creating impact by accelerating investments in the U.S. science and technology enterprise.

parallaxresearch.org

Some of them do two at once.

Would have recommended working on the red nose but........

All the other reindeer just seem to have been caught in the headlights.

Pom down under

Top 20

Frangipani

Regular

The University of Washington in Seattle, interesting…

UW’s Department of Electrical & Computer Engineering had already spread the word about a summer internship opportunity at BrainChip last May. In fact, it was one of their graduating Master’s students, who was a BrainChip intern himself at the time (April 2023 - July 2023), promoting said opportunity.

I wonder what exactly Rishabh Gupta is up to these days, hiding in stealth mode ever since graduating from UW and simultaneously wrapping up his internship at BrainChip. What he has chosen to reveal on LinkedIn is that he now resides in San Jose, CA and is “Building next-gen infrastructure and aligned services optimized for multi-modal Generative AI”, or rather that his start-up intends to build said infrastructure and services “to democratise AI”… He certainly has a very impressive CV so far, as well as first-hand experience with Akida 2.0, given the time frame of his internship and his mention of vision transformers.

Brainchip Inc: Summer Internship Program 2023

I am Rishabh Gupta, I am a 2nd year ECE PMP masters student and currently working at Brainchip.

advisingblog.ece.uw.edu


Meanwhile, yet another university is encouraging their students to apply for a summer internship at BrainChip:

BrainChip– Internship Program 2024 - USC Viterbi | Career Services

BrainChip– Internship Opportunity! Apply Today! About BrainChip: BrainChip develops technology that brings commonsense to the processing of sensor data, allowing efficient use for AI inferencing enabling one to do more with less. Accurately. Elegantly. Meaningfully. They call this Essential AI...

viterbicareers.usc.edu


I guess it is just a question of time before USC will be added to the BrainChip University AI Accelerator Program, although Nandan Nayampally is sadly no longer with our company…

IMO there is a good chance that Akida will be utilised in future versions of that UW prototype @cosors referred to.

AI headphones let wearer listen to a single person in a crowd, by looking at them just once

A University of Washington team has developed an artificial intelligence system that lets someone wearing headphones look at a person speaking for three to five seconds to “enroll” them. The...

www.washington.edu

“A University of Washington team has developed an artificial intelligence system that lets a user wearing headphones look at a person speaking for three to five seconds to “enroll” them. The system, called “Target Speech Hearing,” then cancels all other sounds in the environment and plays just the enrolled speaker’s voice in real time even as the listener moves around in noisy places and no longer faces the speaker.

The team presented its findings May 14 in Honolulu at the ACM CHI Conference on Human Factors in Computing Systems. The code for the proof-of-concept device is available for others to build on. The system is not commercially available.”

While the paper shared by @cosors (https://homes.cs.washington.edu/~gshyam/Papers/tsh.pdf) indicates that the on-device processing of the end-to-end hardware system UW professor Shyam Gollakota and his team built as a proof-of-concept device is not based on neuromorphic technology, the paper’s future outlook (see below), combined with the fact that UW’s Paul G. Allen School of Computer Science & Engineering* has been encouraging students to apply for the BrainChip Summer Internship Program for the second year in a row, is reason enough for me to speculate those UW researchers could well be playing around with Akida at some point to minimise their prototype’s power consumption and latency.

*(named after the late Paul Gardner Allen who co-founded Microsoft in 1975 with his childhood - and lifelong - friend Bill Gates and donated millions of dollars to UW over the years)


The paper was co-authored by Shyam Gollakota (https://homes.cs.washington.edu/~gshyam) and three of his UW PhD students as well as by AssemblyAI’s Director of Research, Takuya Yoshioka (ex-Microsoft).

AssemblyAI (www.AssemblyAI.com) sounds like an interesting company to keep an eye on:


FNU Sidharth, a Graduate Student Researcher from the University of Washington in Seattle, will be spending the summer as a Machine Learning Engineering Research Intern at BrainChip:


Bingo!

https://thestockexchange.com.au/threads/brn-discussion-ongoing.1/post-425543


One of BrainChip’s 2024 summer interns was FNU Sidharth, a Graduate Student Researcher from the University of Washington in Seattle (see the last of my posts above). According to his LinkedIn profile he actually continues to work for our company and will graduate with an M.Sc. in March 2025.

His research is focused on speech & audio processing, and this is what he says about himself: “My ultimate aspiration is to harness the knowledge and skills I gain in these domains to engineer cutting-edge technologies with transformative applications in the medical realm. By amalgamating my expertise, I aim to contribute to advancements that will revolutionise healthcare technology.”

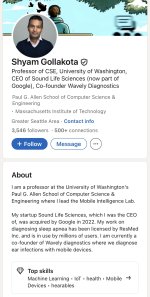

As also mentioned in my previous post on FNU Sidharth, his advisor for his UW spring and summer projects (the last one largely overlapping with his time at BrainChip) was Shyam Gollakota, Professor of Computer Science & Engineering at the University of Washington (where he leads the Mobile Intelligence Lab) and serial entrepreneur (one of his start-ups was acquired by Google in 2022).

Here is a podcast with Shyam Gollakota on “The Future of Intelligent Hearables” that is quite intriguing when you watch it in the above context (especially from 6:15 min onwards) and when you find out that he and his fellow researchers are planning on setting up a company in the field of intelligent hearables…

We should definitely keep an eye on them!



Pom down under

Top 20

As the $1.8 trillion space economy takes off, here are 3 ASX stocks to watch - Stockhead

McKinsey highlights vast opportunities for investors in the space sector. On the ASX, Brainchip is one stock for potential investors.

stockhead.com.au

Pom down under

Top 20

Even a little positivity from the fools

www.fool.com.au


Why is the BrainChip share price surging 17% today?

The BrainChip share price has surged today as investors pile into the ASX AI stock. What's behind the jump? Let's see.

Edward Lien, Executive MBA on LinkedIn: #akida #tenns #tinymltaipeiday2024 #edgeaifoundation

We were thrilled to participate in this incredible event. Shared how we bring LLMs to the edge and the unique value in using a new model architecture based on…

KiKi

Regular

This is the most boring video I have seen for a long time. I almost fell asleep.

I did not even finish it.

Pom down under

Top 20

I might watch it tonight then just before I go to bed.

This is the most boring video I have seen for a long time. I almost fell asleep.

I did not even finish it.

Now I am concerned this is another pump n dump.

Even a little positivity from the fools

Why is the BrainChip share price surging 17% today?

The BrainChip share price has surged today as investors pile into the ASX AI stock. What's behind the jump? Let's see.

www.fool.com.au

Tothemoon24

Top 20

AI-Driven SDV Rapidly Emerging As Next Big Step

The vision laid out by Qualcomm and other tech executives calls for the AI-driven SDV to lead to a critical shift in computing away from the cloud to directly onboard the vehicle as the industry moves to its next stage with what some are calling the Intelligent SDV.

www.wardsauto.com


David Zoia, Senior Contributing Editor

November 8, 2024


Qualcomm’s Snapdragon-enabled vehicle already looking beyond SDV.

MAUI, HI – It’s time to turn the page on the software-defined vehicle – rapidly becoming basic table stakes in next-gen vehicle development – toward a new chapter: the AI-defined vehicle.

That’s probably the biggest takeaway at Qualcomm’s recent Snapdragon Symposium here, where the San Diego-based chipmaker and technology company unleashed its new Snapdragon Elite line of digital cockpit and automated-driving processors powerful enough to take advantage of what fast-developing artificial-intelligence technology can bring to the automobile.

Of course, it’s hard to say how quickly the industry will move toward SDVs now that many automakers are pulling back on planned battery-electric vehicles based on new platforms that were expected to lead the transition.

However, at least some suppliers say the shift will continue with or without a broad migration to BEVs, and for its part, Qualcomm predicts the movement remains on pace to create a market worth nearly $650 billion annually by 2030. The forecast includes hardware and software demand from automakers rising to $248 billion per year from $87 billion today and a doubling in demand from suppliers to $411 billion.

The vision laid out by Qualcomm and executives of other tech developers and enablers on hand here calls for the AI-driven SDV to lead a critical migration in computing away from the cloud to directly onboard the vehicle as the industry moves to its next stage toward what some are calling the Intelligent SDV.

“The shift of AI processing toward the edge is happening,” declares Durga Malladi, senior vice president and general manager of tech planning for edge solutions at Qualcomm. “It is inevitable.”

From Cloud To Car

Two things are making possible this movement away from the cloud and toward so-called edge processing that takes place within the vehicle itself: the rapid and seismic leaps in AI coding and data efficiency and capability, and the increasingly powerful and energy-efficient computers needed to process the information.

Answering the call for greater computing capability is Qualcomm’s new Elite line of Snapdragon Cockpit and Snapdragon Drive platforms, set to launch on production vehicles in 2026, including upcoming models featuring Mercedes-Benz’s next-gen MB-OS centralized electronics architecture and operating system and vehicles from China’s Li Auto.

Current-generation Snapdragon chips also will underpin new Level 3 ADAS technology and central-compute architecture Qualcomm is developing with BMW that will be deployed in BMW’s Neue Klasse BEVs expected to roll out in 2026. That ADAS technology will be offered to other OEMs through Qualcomm as well.

These new top-of-the-line Elite processors are many times more powerful than current-generation Snapdragon platforms, already with a strong foothold in automotive. Qualcomm says that as of second-quarter 2024, it had a new business pipeline for current products totaling more than $45 billion.

Moving computing away from interactions with the cloud and onboard the vehicle as the AI-driven architecture takes hold will result in several advantages, experts here say, including development of safe and reliable self-driving technology.

For automated driving, “you need more serious edge intelligence to map the environment in real time, predict the trajectory of every vehicle on the road and decide what action to take,” notes Nakul Duggal, group manager, automotive, industrial and cloud for Qualcomm Technologies.

And for cockpit operation, relying less on the cloud and more on the vehicle’s computers provides faster response and greater security and privacy. It also ratchets up the ability of the vehicle’s AI-based systems to adapt to the occupant’s preferences.

Qualcomm Snapdragon Elite Brings New Capabilities

In promoting the new Elite line of Snapdragon systems-on-a-chip for automotive, Qualcomm presents a future in which the onboard AI assistant better recognizes natural-speech commands, anticipates needs of the occupants, is capable of providing predictive maintenance alerts and does things like buy tickets to events and make reservations. The vehicle will be able to drop occupants off at their destination and then locate and drive to an open parking spot – and pay if necessary – all on its own. Not sure what that road sign indicated that you just passed? The AI assistant will be able to fill you in. If the scene ahead would make a good picture, the virtual assistant can use the vehicle’s onboard cameras to take a digital photo.

The AI assistant also will have contextual awareness, meaning it might decide it’s better not to play sensitive messages if other occupants are in the vehicle.

“Automakers are looking for new ways to personalize the driving experience, improve automated driving features and deliver predictive maintenance notifications,” says Robert Boetticher, automotive and manufacturing global technology leader for Amazon Web Services. “Our customers want to use AI at the edge now, to enhance these experiences with custom solutions built on top of powerful models.”

Beyond virtual assistants, these new onboard computers will be there to support high-resolution 3D mapping, multiple infotainment screens, personalized audio zones that don’t interfere with what other passengers are listening to and sophisticated cabin-monitoring technology. They will be capable of fusing data from both the ADAS and infotainment systems to provide vehicle occupants with more granular information and intuitive driving assistance that acts more like a human would – guiding the vehicle around a known pothole on your daily commute, for instance.

And the human-machine interface promises to evolve as a result. With AI, the world is edging away from a tactile experience – such as pushing buttons on a screen to access data – to one where infotainment is voice-, video- and sensor- (lidar, radar, camera) driven, Malladi says.

“The bottom line is, the AI agent becomes the one starting point that puts it all together for you,” he says.

AI Landscape Evolving, Rapidly

AI interest was somewhat dormant in automotive until two years ago, when the release of ChatGPT caused a stir in the tech world.

“For a lot of us in the (chip) industry, we were working on AI for a long time,” Malladi says. “But for the rest of the world, it was an eye-opener. Everyone was talking about it.”

Within a year, he says, large-language-model technology took giant leaps in simplicity. While the model used to create ChatGPT was about 175 billion parameters (a measure of its complexity), that has shrunk considerably, Malladi says.

Large-language-model technology “went from 175 billion parameters to 8 billion in two years, and the quality has only increased,” he says. That translates into less required storage capacity, faster compute times, greater accuracy and fewer chances to introduce bugs into the code.
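To put the storage point in rough numbers: the article does not say what numeric precision these models use, so the sketch below simply assumes two common weight widths (16-bit and 4-bit) and computes the raw weight footprint as parameters × bits ÷ 8. It ignores activations, caches and runtime overhead, so treat the results as lower-bound estimates under those assumptions, not figures from Qualcomm.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Raw weight storage in gigabytes (1 GB = 1e9 bytes).

    Assumption: every parameter is stored at the same precision;
    activations, caches and runtime overhead are ignored.
    """
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # Parameter counts are the ones quoted in the article;
    # the bit widths are illustrative assumptions.
    for label, params in [("175B model", 175e9), ("8B model", 8e9)]:
        for bits in (16, 4):
            gb = weight_footprint_gb(params, bits)
            print(f"{label} at {bits}-bit: {gb:.1f} GB")
```

Under these assumptions the 175B-parameter model needs about 350 GB just for its weights at 16-bit, while the 8B-parameter model needs about 16 GB, roughly a 22× reduction that is what makes on-device storage plausible.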

A new AI law is emerging as a result, Malladi adds, in that “the quality of AI per parameter is constantly increasing. It means that the same experience you could get from a (large, cloud-based) data center yesterday you (now) can bring into devices that you and I have.”

Making it all possible inside the vehicle are the new-generation chips now emerging that easily can handle the AI workload.

“We can run with the next generation up to 20 billion parameter models at the edge,” Duggal says, adding that compares to about 7 billion with the current-generation processors. “Everything is in your environment (and processed) locally. This is the big advancement that has happened with the latest AI.”

The chips are also becoming more power efficient, a key factor in the evolution toward BEVs, where automakers are looking to squeeze as much driving range as possible from their lithium-ion batteries.

The new Snapdragon platform is said to be 20 times more efficient than what is required to generate AI from a data center today.

“The power draw of the devices we now use – that have the power of a mini-supercomputer of 25 years ago – is down to less than an LED lightbulb,” Malladi says.

Moving from the cloud to the edge onboard the vehicle also will save money – it’s closer to zero cost when accessing the data onboard the vehicle, and unlike operation of huge cloud servers, there’s no impact on the electrical grid, making it more environmentally favorable, Malladi points out.

Minimal reliance on vehicle-to-cloud computing also reduces the risk a cloud server won’t be available at a critical juncture, says Andrew Ng, founder and CEO of AI visual solutions provider Landing AI. “AI brings low latency, real-time processing, reduced bandwidth requirements and potentially advanced privacy and security,” he says.

It will take time for AI-based SDVs to begin penetrating the market in big numbers, and automakers will have to carefully determine what features customers will want and avoid packing vehicles with capabilities they won’t appreciate. But the general direction seems clear.

“Bringing AI in is no small task,” Duggal admits, but he says it offers limitless potential and notes nearly every major automaker has shown an interest in the new Snapdragon Elite platform that can help unleash the technology.

Sums up Qualcomm’s Anshuman Saxena, product management lead and business manager for automotive software and systems: “AI is definitely becoming a focal point for the whole industry – and for us too.”

Bravo

If ARM was an arm, BRN would be its biceps💪!

Neuromorphic Computing Market worth $1,325.2 Million by 2030, at a CAGR of 89.7%

08-11-2024 07:48 PM CET | Press release from: ABNewswire

Neuromorphic Computing Market

The global Neuromorphic Computing Market in terms of revenue is estimated to be worth $28.5 million in 2024 and is poised to reach $1,325.2 million by 2030, growing at a CAGR of 89.7% during the forecast period.

According to a research report "Neuromorphic Computing Market [https://www.marketsandmarkets.com/M...idPR&utm_campaign=neuromorphiccomputingmarket] by Offering (Processor, Sensor, Memory, Software), Deployment (Edge, Cloud), Application (Image & Video Processing, Natural Language Processing (NLP), Sensor Fusion, Reinforcement Learning) - Global Forecast to 2030", the neuromorphic computing industry is expected to grow from USD 28.5 million in 2024 to USD 1,325.2 million by 2030, a Compound Annual Growth Rate (CAGR) of 89.7% from 2024 to 2030.
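The headline growth rate can be sanity-checked from the two market sizes with the standard CAGR formula, (end ÷ start)^(1 ÷ years) − 1. This is a minimal sketch assuming that 2024 to 2030 counts as six annual compounding periods; the function names are illustrative, not taken from the report.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1.0 / years) - 1.0


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` periods."""
    return start * (1.0 + rate) ** years


if __name__ == "__main__":
    # Figures from the press release, in USD millions; treating
    # 2024 -> 2030 as six compounding periods is an assumption.
    rate = cagr(28.5, 1325.2, 6)
    print(f"implied CAGR: {rate:.1%}")
    print(f"28.5 compounded at 89.7% for 6 years: {project(28.5, 0.897, 6):,.1f}")
```

The implied rate comes out at about 89.6%, in line with the quoted 89.7% once rounding of the start and end figures is allowed for.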

Growth in the neuromorphic computing industry is driven by the integration of neuromorphic computing into automotive and space operations. In space, where bandwidth is limited and communication delays can be large, onboard processing capabilities are crucial. The neuromorphic processor analyzes and filters data at the point of collection, reducing the need to transmit large datasets back to Earth. In the automotive sector, by contrast, neuromorphic processors can make autonomous driving systems more responsive through onboard real-time processing with minimal latency, supporting both safety and efficiency.

Download PDF Brochure @ [https://www.marketsandmarkets.com/r...idPR&utm_campaign=neuromorphiccomputingmarket]

Browse 167 market data Tables and 69 Figures spread through 255 Pages and in-depth TOC on "Neuromorphic Computing Market"

View detailed Table of Content here -https://www.marketsandmarkets.com/Market-Reports/neuromorphic-chip-market-227703024.html [https://www.marketsandmarkets.com/M...idPR&utm_campaign=neuromorphiccomputingmarket]


By offering, the software segment is projected to grow at a high CAGR during the forecast period.

The software segment is expected to grow at a fast rate over the forecast period. Neuromorphic software has its roots in models of neural systems. Such systems include spiking neural networks (SNNs), which attempt to replicate the firing patterns of biological neurons. In contrast to typical artificial neural networks, which use continuous activation functions, SNNs communicate through discrete spikes, a feature also found in the brain. With intelligence embedded directly into edge devices and IoT sensors, neuromorphic systems have the potential to perform complex tasks such as pattern recognition and adaptive learning with considerably lower power consumption. This efficiency extends device operating life while reducing the overall energy footprint, spurring demand for neuromorphic software that can harness these benefits and optimize performance for real-world edge and IoT applications.
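The contrast between continuous activations and discrete spikes can be sketched with a leaky integrate-and-fire (LIF) neuron, one common textbook spiking model. This is a generic illustration with hypothetical parameters (`leak`, `threshold`), not the neuron model used by Akida, Loihi or any other product named in the report.

```python
from typing import List


def relu_unit(x: float) -> float:
    """Conventional ANN unit: emits a continuous activation value every step."""
    return max(0.0, x)


def lif_spikes(inputs: List[float], leak: float = 0.9, threshold: float = 1.0) -> List[int]:
    """Single leaky integrate-and-fire neuron driven by `inputs`.

    The membrane potential decays by `leak` each step, accumulates the input,
    and emits a discrete spike (1) when it reaches `threshold`, then resets.
    """
    v = 0.0
    spikes = []
    for x in inputs:
        v = leak * v + x  # leak, then integrate this step's input
        if v >= threshold:
            spikes.append(1)  # discrete event
            v = 0.0  # reset after firing
        else:
            spikes.append(0)  # silent step: nothing to communicate
    return spikes


if __name__ == "__main__":
    signal = [0.5, 0.5, 0.5, 0.0, 1.2]
    print("ReLU outputs:", [relu_unit(x) for x in signal])
    print("LIF spikes:  ", lif_spikes(signal))
```

The ReLU unit produces a value at every step, while the LIF neuron stays silent until its accumulated input crosses the threshold; for this input it fires only at the third and fifth steps. That sparsity of events is the property usually cited for the power savings described above.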

By deployment, the cloud segment will account for the highest CAGR during the forecast period.

The cloud segment will account for the highest CAGR over the forecast period. Cloud computing offers centralized processing power, making large-scale computational resources and storage capacity accessible from remote data centers. This is useful because neuromorphic computing has often been associated with complex algorithms and large-scale data processing. In the cloud, these resources can be used to train neuromorphic models, run large-scale simulations, and process enormous datasets. The scalable infrastructure of cloud platforms allows neuromorphic computing applications to adjust resources dynamically according to demand. This is a key factor in training and deploying large-scale neuromorphic networks, whose computational requirements are considerable, especially during peak loads, and it drives the segment's demand in the market.

The natural language processing (NLP) segment is projected to grow at a high CAGR during the forecast period.

Natural language processing (NLP) is a branch of artificial intelligence focused on giving computers the ability to understand text and spoken words in much the same way human beings can. NLP is a promising application of neuromorphic computing, leveraging the brain-inspired design of spiking neural networks (SNNs) to improve the efficiency and accuracy of language data processing. The growing demand for efficient, real-time language processing in devices, from smartphones to IoT devices, calls for low-power, high-performance solutions, and neuromorphic computing's energy-efficient architecture fits these requirements well. Ongoing improvements in SNNs are also advancing their ability to tackle complex NLP tasks.

The industrial vertical will account for a high CAGR through 2030.

The industrial segment will account for a high CAGR over the forecast period. In the industrial vertical, manufacturing companies use neuromorphic computing for developing and testing end products, manufacturing delicate electronic components, printing products, metal product finishing, machine testing, and security purposes. Neuromorphic computing can be used in these processes to store data on chips, and images can be extracted from the devices for further use. Neuromorphic computing also helps monitor machine condition by comparing current signals with previous ones. These advantages lead to high demand for neuromorphic processors and software in the industrial vertical.

Asia Pacific will account for the highest CAGR during the forecast period.

The neuromorphic computing industry in Asia Pacific is expected to grow at the highest CAGR due to a high adoption rate of new technologies in this region. High economic growth, witnessed by the major countries such as China and India, is also expected to drive the growth of the neuromorphic computing industry in APAC.

Key Players

Key companies operating in the neuromorphic computing industry are Intel Corporation (US), IBM (US), Qualcomm Technologies, Inc. (US), Samsung Electronics Co., Ltd. (South Korea), Sony Corporation (Japan), BrainChip, Inc. (Australia), SynSense (China), MediaTek Inc. (Taiwan), NXP Semiconductors (Netherlands), Advanced Micro Devices, Inc. (US), Hewlett Packard Enterprise Development LP (US), OMNIVISION (US), among others.

Neuromorphic Computing Market worth $1,325.2 Million by 2030, at a CAGR of 89.7%

The global Neuromorphic Computing Market in terms of revenue is estimated to be worth $28.5 million in 2024 and is poised to reach $1,325.2 million by 2030, growing at a CAGR of 89.7% during the forecast ...

www.openpr.com

Bravo

If ARM was an arm, BRN would be its biceps💪!

Hi Tech,

Well, it's 9:57am in Taipei and the tinyML Foundation event is underway.

Our very own KEN WU (Senior Design Engineer) at BrainChip will be manning the demo counter. This event, being held today at the Grand Hilai Taipei, is yet another great opportunity to demonstrate our Akida Pico and TENNs, and I personally think having Ken on the desk is a huge plus: he has worked very closely with Anil, has hands-on experience, and will be able to communicate with many of the local Taiwanese engineers in their own language, getting the message across on any detailed questions that may be posed.

I just wonder if Sean has handed him the company's big fancy pen, just in case someone walks up to the counter and wishes to sign on the dotted line... dreams are free.

Keep an eye out for any news grabs on this event.

Cheers....Tech.

I only just realised this morning that the TinyML Foundation is rebranding itself to the EDGE AI FOUNDATION!!! The new foundation’s mission is to ensure advancements in edge AI benefit society and the environment.

Check out the video below!! No one can deny that the edge is really starting to gain momentum!!

I think it's interesting that the video has been narrated by a woman with an Australian accent, despite the foundation being based in the US. I wonder if we can read anything into that?

This is the video link from the article above.

Here's a pic showing Ken Wu at the TinyML Foundation's (aka EDGE AI FOUNDATION) recent event.


Hi Bravo,

Yes, I noticed that the other day when I posted that short video.

The momentum continues to gather pace, and you, like me, can clearly see how Peter's early breakthrough with SNN architecture was, and still is, well ahead of the mob.

Sometimes when I read edge ai articles or view videos I'm thinking to myself, I could have told you that back in 2018/2019.

I will never, ever forget the night back in 2019 when, at Peter's lecture, he stated that Akida didn't need the internet to function, as all processing & intelligence was done on-chip. That really blew me away.

My prediction 2 years ago about reviewing my holding and my satisfaction with BrainChip's overall performance appears, at first glance, to be right on... but let's see.

Love our company...

Kind regards (Chris) Tech.


Conference Program

The CES® 2025 conference program will highlight advancements in digital health, AI, sustainability, gaming, vehicle tech, cybersecurity, beauty and fashion tech, space tech, and more. Register today for CES 2025.

Did somebody say Brainchip?!

Smells strong!
