Maybe you don’t understand that in order to gain market advantage, companies developing new technology like to keep things SECRETIVE.

Sure if that's how you want to internalise it


BRN Discussion Ongoing

- Thread starter TechGirl

- Start date

uiux

Regular

Maybe you don’t understand that in order to gain market advantage, companies developing new technology like to keep things secretive.

Maybe I understand it and think the notion of Peter van der Made being so careless as to let his IP slip away from him is ridiculous.

Further, the patent for this "new" chip is listed in Gert's Scholar profile from 2021, so it's not "new" or "secret".

Secondly, the innovations seem radically different - you are assuming stolen IP when the two innovations aren't close.

Please don’t put words in my mouth. I asked a question and never made a statement. If you don’t know the answer that’s fine. Today is the first we have heard of this chip that to many of us sounds very similar to Akida. You tell us that one of the developers was on BrainChip’s scientific board. The question that I asked is reasonable. The guy goes from our scientific board and later is part of a team that releases a neuromorphic chip. Unlike Akida, which we knew about for years before it was developed, this chip seems to have come out of the blue. With all your research you didn’t even know it was being developed.

uiux

Regular

I check Gert's profile every few weeks, so I would have seen the patent before.

So what exactly are you saying then, Slade?

Nothing, I’m taking a break.

gilti

Regular

I remember there being a video of the SAB outside, like on a bush walk, using an early version of the technology.

There exists a video of Jeff Krichmar, Gert Cauwenberghs and Nicholas Spitzer talking up BrainChip.

Maybe the really long, long termers remember it and can source it?

Anyone else?

uiux

Regular

Northrop Grumman + Neuromorphic

https://arxiv.org/pdf/1611.01235.pdf

A Self-Driving Robot Using Deep Convolutional Neural Networks on Neuromorphic Hardware. Tiffany Hwu, Jacob Isbell, Nicolas Oros, and Jeffrey Krichmar. Department of Cognitive Sciences, University of California, Irvine, Irvine, California, USA, 92697...

thestockexchange.com.au


They are using TrueNorth

By the sound of it, this is a student project which has been running for a few years, involving a couple of generations of students. Compute-in-memory and analog NPUs were around when PvdM started. His invention solves the problems with analog ReRAM.

"Compute-in-memory has been common practice in neuromorphic engineering since it was introduced more than 30 years ago," Cauwenberghs said. "What is new with NeuRRAM is that the extreme efficiency now goes together with great flexibility for diverse AI applications with almost no loss in accuracy over standard digital general-purpose compute platforms."

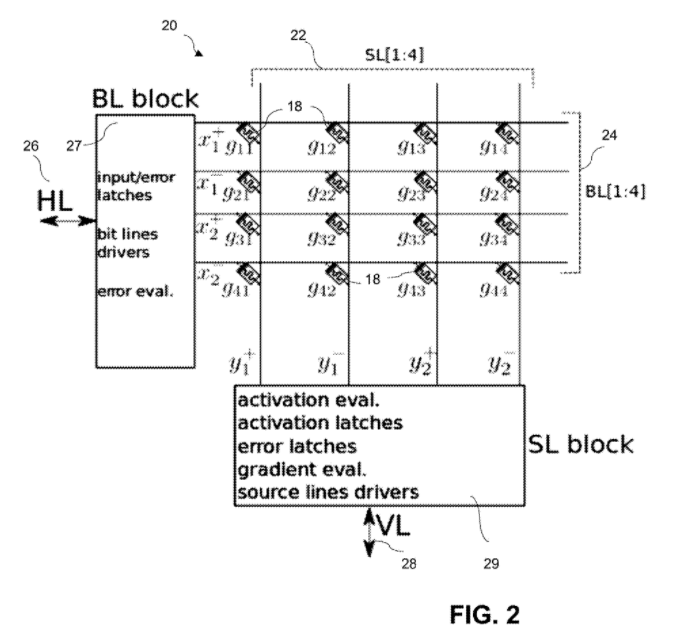

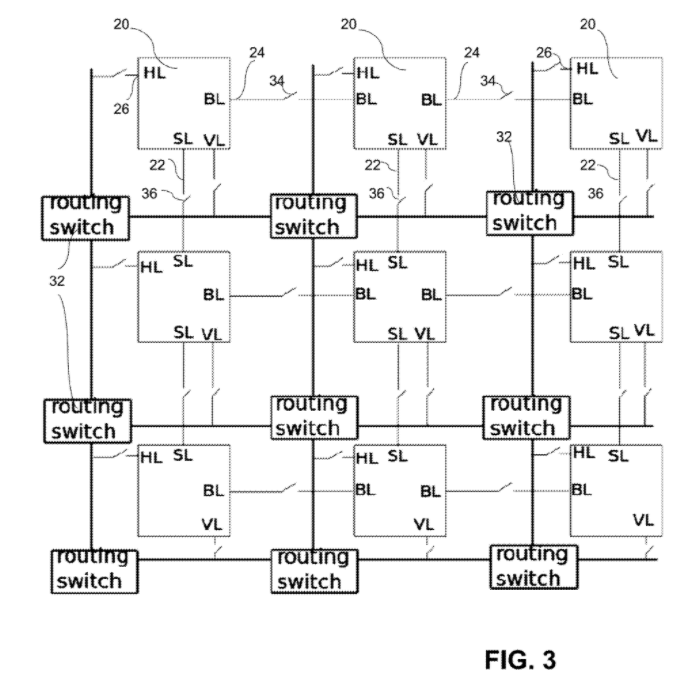

This is a patent application related to the compute-in-memory chip discussed:

US2021342678A1 COMPUTE-IN-MEMORY ARCHITECTURE FOR NEURAL NETWORKS

A neural network architecture for inference and learning comprising:

a plurality of network modules,

each network module comprising a combination of CMOS neural circuits and RRAM synaptic crossbar memory structures interconnected by bit lines and source lines,

each network module having an input port and an output port,

wherein weights are stored in the crossbar memory structures, and

wherein learning is effected using approximate backpropagation with ternary errors;

wherein the CMOS neural circuits include a source line block having dynamic comparators, and

wherein inference is effected by clamping pairs of bit lines in a differential manner and comparing within the dynamic comparator voltages on each differential bit line pair to obtain a binary output activation for output neurons.
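To make the claim language a bit more concrete, here is a rough numerical sketch of the two ideas it names: comparing the two lines of a differential pair to get a binary activation, and quantising an error signal to three levels. This is only an illustration under loud assumptions. The split into `w_pos` and `w_neg` arrays, the threshold value and all the numbers are made up, and nothing here reproduces the circuit described in the application.

```python
import numpy as np

def differential_binary_activation(x, w_pos, w_neg):
    """Binary activation from a differential pair (illustrative model only).

    Assumption: each output neuron has a 'positive' and a 'negative' line,
    modelled here as two weighted sums of the same input vector.
    """
    x = np.asarray(x, dtype=float)
    line_pos = x @ np.asarray(w_pos, dtype=float)
    line_neg = x @ np.asarray(w_neg, dtype=float)
    # The comparator step: output 1 where the positive line is larger, else 0.
    return (line_pos > line_neg).astype(int)

def ternarize_error(error, threshold=0.1):
    """Quantise an error vector to {-1, 0, +1}.

    Assumption: errors with magnitude at or below `threshold` are dropped
    to 0. The threshold is hypothetical, not taken from the patent.
    """
    e = np.asarray(error, dtype=float)
    return (np.sign(e) * (np.abs(e) > threshold)).astype(int)

# Made-up example: 3 inputs feeding 2 output neurons.
x = np.array([1.0, 0.0, 1.0])
w_pos = np.array([[0.9, 0.1], [0.2, 0.8], [0.4, 0.3]])
w_neg = np.array([[0.2, 0.6], [0.5, 0.1], [0.3, 0.5]])

activations = differential_binary_activation(x, w_pos, w_neg)
ternary = ternarize_error([0.3, -0.02, -0.7])
print(activations, ternary)
```

The point of the ternary step is that the learning signal only needs three levels, which is much cheaper to move around than a full-precision error.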

[0005] ... [Prior ReRAM systems] By storing the weights of the neural network as conductance values of the memory elements, and by arranging these elements in a crossbar configuration as shown in FIG. 1, the crossbar memory structure can be used to perform a matrix-vector product operation in the analog domain. In the illustrated example, the input layer 10 neural activity, yl-1 , is encoded as analog voltages. The output neurons 12 maintain a virtual ground at their input terminals and their input currents represent weighted sums of the activities of the neurons in the previous layer, where the weights are encoded in the memory-resistor, or “memristor”, conductances 14a-14n. The output neurons generate an output voltage proportional to their input currents. Additional details are provided by S. Hamdioui, et al., in “Memristor For Computing: Myth or Reality?”, Proceedings of the Conference on Design, Automation & Test in Europe (DATE), IEEE, pp. 722-731, 2017. This approach has two advantages: (1) weights do not need to be shuttled between memory and a compute device as computation is done directly within the memory structure; and (2) minimal computing hardware is needed around the crossbar array as most of the computation is done through Kirchhoff's current and voltage laws. A common issue with this type of memory structure is a data-dependent problem called “sneak paths”. This phenomenon occurs when a resistor in the high-resistance state is being read while a series of resistors in the low-resistance state exist parallel to it, causing it to be erroneously read as low-resistance. The “sneak path” problem in analog crossbar array architectures can be avoided by driving all input lines with voltages from the input neurons. Other approaches involve including diodes or transistors to isolate each device, which limits array density and increases cost.
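For anyone who wants to see the crossbar idea in numbers, a minimal sketch follows. It assumes ideal devices (no wire resistance, no sneak paths, no noise), and the array size, conductances and voltages are invented for illustration. Each device passes a current equal to conductance times voltage (Ohm's law), and the currents on a shared column line add up (Kirchhoff's current law), which is exactly a matrix-vector product.

```python
import numpy as np

def crossbar_mvm(conductances, input_voltages):
    """Ideal crossbar read: column current I[j] = sum_i G[i, j] * V[i]."""
    G = np.asarray(conductances, dtype=float)    # shape (rows, cols), siemens
    V = np.asarray(input_voltages, dtype=float)  # shape (rows,), volts
    # Ohm's law gives each device current G[i, j] * V[i];
    # Kirchhoff's current law sums them along each column line.
    return V @ G

# Hypothetical 3x2 array: weights stored as conductances.
G = np.array([[1e-6, 2e-6],
              [3e-6, 4e-6],
              [5e-6, 6e-6]])
V = np.array([0.1, 0.2, 0.3])  # input activity encoded as voltages

I = crossbar_mvm(G, V)  # column currents in amperes
print(I)
```

No weights are moved anywhere during the read, which is the first advantage the paragraph lists.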

[0006] Deep neural networks have demonstrated state-of-the-art performance on a variety of tasks such as image classification and automatic speech recognition. Before neural networks can be deployed, however, they must first be trained. The training phase for deep neural networks can be very power-hungry and is typically executed on centralized and powerful computing systems. The network is subsequently deployed and operated in the “inference mode” where the network becomes static and its parameters fixed. This use scenario is dictated by the prohibitively high power costs of the “learning mode” which makes it impractical for use on power-constrained deployment devices such as mobile phones or drones. This use scenario, in which the network does not change after deployment, is inadequate in situations where the network needs to adapt online to new stimuli, or to personalize its output to the characteristics of different environments or users.

Inventors of US2021342678A1: MOSTAFA HESHAM [US]; KUBENDRAN RAJKUMAR CHINNAKONDA [US]; CAUWENBERGHS GERT [US]

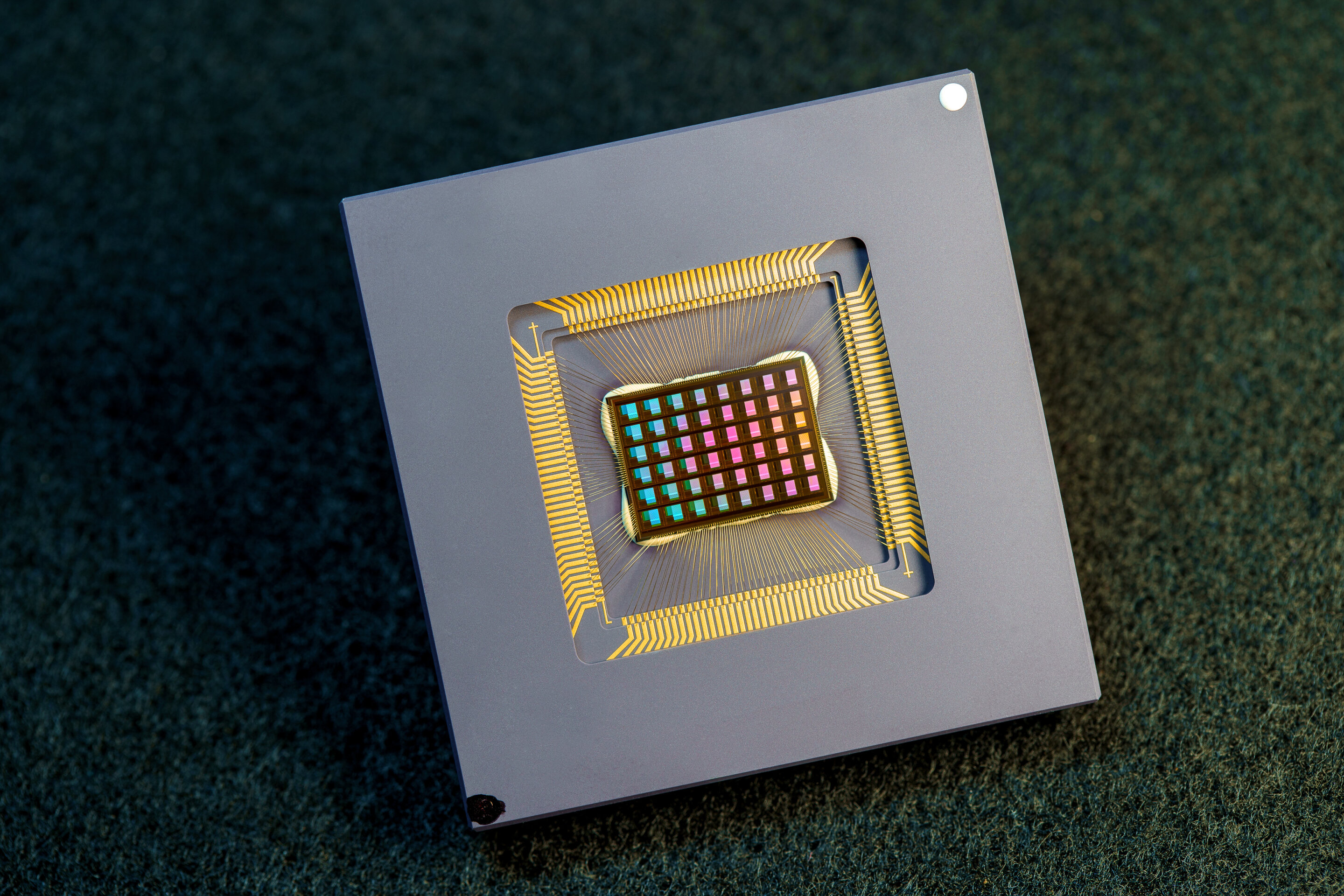

New neuromorphic chip for AI on the edge, at a small fraction of the energy and size of today's computing platforms

An international team of researchers has designed and built a chip that runs computations directly in memory and can run a wide variety of AI applications–all at a fraction of the energy consumed by computing platforms for general-purpose AI computing.

techxplore.com

This looks like genuine competition

Dang Son

Regular

IMO members on our scientific advisory board should have signed a Stat Dec on the subject of no conflict of interest to insure against sharing of our trade secrets with competition.

It seems very suss to me that Gert goes off and releases a competitive chip after being privy to AKIDA IP.

How else could he be an advisor without knowing intimate details of our chip?

uiux

Regular

His technology is compute-in-memory

It's completely different

Taproot

Regular

What is your take on "in-memory" vs "at-memory"?

At-memory compute is the sweet spot for AI acceleration

Interesting debate between Robert Beachler and GP Singh from Ambient Scientific from 9.30min

cosors

👀

Your understanding helps me understand better. Nevertheless, some things remain open for me. So far, I was used to scientific reports giving me a glimpse into the future of what might come. The main topic is where the world stands regarding neuromorphic computing. For example, if they only research with the Loihi, then scientifically only Loihi exists in the report? The question was also whether things have progressed far enough that there are applications and products. For me as a non-scientist this is clear, and it could have been clear to the moderator. Yes, there are applications and there are first products, even if it is very difficult to prove scientifically that there is a neuromorphic chip in the EQXX or Nicobo or, at first, our PCI board. But I hear you. Let's wait and see. Maybe their next scientific elaboration, with the following podcast, will give a more concrete outlook on where the world stands with neuromorphic computing. Now they don't need to research other neuromorphic chips besides the Loihi but can just contact BrainChip's sales team. But my statements are unfair because we don't have that podcast in English.

Hi cosors,

This is something which I have noted before - academics confine their research to peer-reviewed publications because anything that is not peer reviewed is not "proven" scientifically.

In fact, finding Akida in peer reviewed papers may be a benefit of the Carnegie Mellon University project, as the students and academics will be experimenting with Akida and producing peer reviewed papers.

___

To be fair I add that they clearly mentioned that there are start-ups.


alwaysgreen

Top 20

Plus some large after market trades...

So I noticed an after trade of over 100k shares at 1.07

I wonder why it's not registered as the official closing price?

I'm feeling warm and fuzzies for next week...

Was hoping to accumulate tomorrow afternoon but I fear I may not get the price I'm after...

AKIDA BALLISTA!

Makeme 2020

Regular

Buddy, I feel your pain.

Come back soon..

Quercuskid

Regular

I hope members leaving or taking a break have not been monstered out by the palpable aggression which has made its way onto the site.

cosors

👀

I'm not an IT tech head either and additionally a beginner. I have quickly skimmed the report and come to the same conclusion. It's CNN and in addition the chip doesn't seem to be ready or to have all parts integrated. I also read about the accuracy.

I'm no tech head but I can't see NeuRRAM being in the same century as Akida, much less the same market. My understanding is it uses convolution processing (analogue to digital). They are yet to tackle spiking architecture and their power saving is like 30 to 40 times less efficient than Akida. Furthermore, the chip achieved 87% accuracy on image classification - imagine using it in self driving cars, it would make Tesla look good.

"As a result, when performing multi-core parallel inference on a deep CNN, ResNet-20, the measured accuracy on CIFAR-10 classification (83.67%) is still 3.36% lower than that of a 4-bit-weight software model (87.03%)."

"The intermediate data buffers and partial-sum accumulators are implemented by a field-programmable gate array (FPGA) integrated on the same board as the NeuRRAM chip. Although these digital peripheral modules are not the focus of this study, they will eventually need to be integrated within the same chip in production-ready RRAM-CIM hardware." Of course I don't know what that means in detail. But for me it doesn't seem to be ready. Maybe one of you who knows about this will comment.

"We use CNN models for the CIFAR-10 and MNIST image classification tasks. The CIFAR-10 dataset consists of 50,000 training images and 10,000 testing images belonging to 10 object classes."

https://www.nature.com/articles/s41586-022-04992-8
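On the "partial-sum accumulators" in the passage quoted above, here is a rough sketch of why something like that is needed when a layer is spread over several cores. It is only an illustration under assumptions: the row-wise split, the `rows_per_core` parameter and the sizes are hypothetical and not taken from the paper. Each core only sees a slice of the inputs and weights, so it can only produce part of each output, and something outside the cores has to add the parts together.

```python
import numpy as np

def multicore_mvm(weights, x, rows_per_core):
    """Matrix-vector product with the weight matrix split row-wise across cores.

    Assumption: each hypothetical core holds at most `rows_per_core` rows of
    the weight matrix and sees only the matching slice of the input vector.
    """
    W = np.asarray(weights, dtype=float)
    x = np.asarray(x, dtype=float)
    accumulator = np.zeros(W.shape[1])  # stands in for the partial-sum accumulator
    for start in range(0, W.shape[0], rows_per_core):
        stop = start + rows_per_core
        partial = x[start:stop] @ W[start:stop]  # what one core contributes
        accumulator += partial                   # combined outside the cores
    return accumulator

# Made-up layer: 10 inputs, 4 outputs, at most 3 rows per core (4 cores).
rng = np.random.default_rng(0)
W = rng.normal(size=(10, 4))
x = rng.normal(size=10)

result = multicore_mvm(W, x, rows_per_core=3)
print(np.allclose(result, x @ W))
```

In the quoted setup that adding-up step runs on the FPGA next to the chip, which is why the authors say it would eventually need to move onto the same chip.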

@Slymeat I had the topic of our manufacturing technology (WBT) the other day so these details jumped out at me. They don't do what Weebit deliberately chose to do. You mentioned that this is exactly where the advantage of Weebit lies, far cheaper and easier to manufacture.

"The RRAM device stack consists of a titanium nitride (TiN) bottom-electrode layer, a hafnium oxide (HfOx) switching layer, a tantalum oxide (TaOx) thermal-enhancement layer and a TiN top-electrode layer."

"The current RRAM array density under a 1T1R configuration is limited not by the fabrication process but by the RRAM write current and voltage. The current NeuRRAM chip uses large thick-oxide I/O transistors as the ‘T’ to withstand >4-V RRAM forming voltage and provide enough write current. Only if we lower both the forming voltage and the write current can we obtain higher density and therefore lower parasitic capacitance for improved energy efficiency."

https://thestockexchange.com.au/threads/brn-discussion-2022.1/post-119627

https://thestockexchange.com.au/threads/brn-discussion-2022.1/post-119495

Normally, when big orders like these happen after market, an SP drop follows the next day. Fingers crossed!

Zedjack33

Regular

Agreed. Seems to be the norm atm.

cosors

👀

Perhaps it would be more pleasant in general to leave the ego aside and not offensively claim the right to be right. Don't get me wrong. I love DIScussions. They just have to lead to something and not apart, but further. That's only possible with some movement. My thought.

"Here, you will find everything from friendly discussion through to advanced research and analysis contributed freely by very engaged and supportive members." zeeb0t
