If you are new to Brainchip/Akida, or even if you have been following the company all along, this is an excellent write-up that gives an in-depth overview of where Brainchip has been and where it is headed.

Enjoy!

Authored by @Fact Finder - December 2020

Chapter One

The Brainchip story is not well known to the general public for a number of reasons, not least of which is that Brainchip, for obvious commercial reasons, has operated as far as possible under the radar to protect its commercial and intellectual advantage in the field of Spiking Neural Network technology.

Recently, however, with the signing of its first commercial Intellectual Property Licence agreement, it has moved out of the shadows of a start-up in self-imposed stealth mode to become a major international semiconductor provider, heralding a new era of artificial intelligence at the edge and far edge, both here on Earth and in Space.

The journey to this point began over twenty years ago, when Peter van der Made started to formalise his ideas for how to achieve artificial general intelligence. He continued to develop these ideas until 2008, when he filed the foundational Patent number 8250011 for what was to become Brainchip's spiking neural network technology, known as AKIDA.

Along the way other visionaries joined him, and in 2015, as a result of Peter van der Made's previous experiences with the venture capital model, Brainchip Technologies Ltd was born out of a reverse takeover of the dormant minerals explorer Aziana on the Australian Securities Exchange (ASX). Supported by Mick Bolto (Chairman), Adam Osseiran (Non-Executive Director), Anil Mankar (Chief Operating Officer and Senior Engineer) and Neil Rinaldi (Non-Executive Director), Peter van der Made set out on the path to commercialising his original idea for artificial general intelligence. This caused much excitement among investors on the ASX, and many, captivated by the idea of artificial general intelligence, bought shares priced purely on a collective vision of what the future could hold for Brainchip's technology.

In October 2015 an investor presentation by the company announced the introduction of SNAP, the Spiking Neuron Adaptive Processor, the first iteration of its SNN technology. The initial business model involved the sale of the intellectual property; however, the early high expectations were not met. There were many reasons, but suffice to say that having the most advanced, revolutionary technology in the world can be a hard sell to a market that is happy with the status quo, has no idea what you are talking about and has no interest in learning. In short, SNAP in all its variants was ahead of its time and not market ready, and Moore's Law had yet to reach breaking point.

On 1 September 2016 the company completed the acquisition of the French company Spikenet Technology, which specialised in AI computer vision technology, with a view to accelerating uptake of SNAP technology as a video processing solution. Spikenet had a relationship with the Cerco Research Centre, where Dr Simon Thorpe (now a member of the Brainchip Scientific Advisory Board) had conducted research with colleagues and developed the JAST learning rules.

The name JAST was formed from the initials of its four inventors. Recognising the benefit these rules would bring to the development of its technologies, Brainchip on 20 March 2017 entered into an agreement that gave it exclusive rights to the JAST learning rules.

Bringing together the expertise of Spikenet, Brainchip and the JAST learning rules, the company produced a new video processing product which it named Brainchip Studio. It was a very impressive product, unlike anything else available on the market, and on 21 August 2017 Brainchip announced that it had been awarded New Product of the Year for 2017 in Video Analytics by Security Today's panel of independent experts. Shortly afterwards, on 12 September 2017, the company announced an upgrade to Brainchip Studio called Brainchip Accelerator, which offered 16-channel simultaneous video processing at ultra-low power, a 6x speed boost to Brainchip Studio's CPU-based artificial intelligence software for object recognition, and performance levels 7 times more efficient than existing GPU-accelerated deep learning systems.

Brainchip Accelerator went on to win an industry award as well; however, the market into which it was released was already dominated by well-known global brands and proved impenetrable until the then new CEO, Lou DiNardo, announced the signing of what was in effect a Heads of Agreement with Gaming Partners International (GPI) to develop a system built on Studio technology for game table security in the casino industry. The agreement involved an upfront payment by GPI of $500,000 plus an additional $100,000 to cover engineering work. These payments were made and it was full steam ahead: the project was reportedly on track, and demonstrations of the prototype had been well received at a gaming industry conference in the USA.

The saying 'never seems to be able to catch a break' comes to mind, because not long into the process of designing and building this table gaming solution, GPI became the subject of a successful takeover bid by a Japanese gaming company. In the wash-up of that takeover the Japanese owners were not interested in pursuing this direction, as their principal reason for acquiring GPI was to add GPI's casino chip technology to their playing card business. After much negotiation it became obvious to Brainchip that trying to revive the project was futile, although both parties have agreed to revisit it in the future.

Chapter Two

Brainchip decided to go for the end goal in a make-or-break effort to achieve the company's 'holy grail', and Peter van der Made stepped aside from his position on the Board of Directors to work exclusively on the final stage of development of the AKIDA technology. The company made brutal cost savings and shed all staff not engaged in technology engineering.

All of the knowledge and research that had gone into the various attempts at commercialisation had also driven the advance of the AKIDA technology platform, so Peter van der Made and Anil Mankar already had the plan for what was required laid out. Now, however, it became a race not just to be first to market, which was a given, but to finalise the research and engineering in record time before the company was no longer financially viable.

The various decisions about how to make the final push to market with this revolutionary product were brilliant. They knew this would be make or break. They knew they had the technology, but they also knew from past failures that they had to pick a segment of the market where they would face no true competition. They also knew that, even then, they needed to find out before building the product what clients thought of it, what they liked and what they did not, all on a shoestring budget.

The idea of the Akida Development Environment (ADE) was conceived, and it was released to early access customers. Studio had been released to customers only after it was produced, so customer feedback came too late in the cycle; nonetheless, that feedback was available to inform Brainchip as to what the ADE should look like. By releasing the ADE in advance, the intention was that any further feedback would be taken on board and designed in, which is in fact what occurred. To accommodate these customer requests, however, the release of the IP and the production of the AKD1000 were delayed by twelve months while Peter van der Made and Anil Mankar went back to the drawing board.

The state of the Brainchip balance sheet and the delay this redesign caused did not go down well with many shareholders, and a great deal of angst and anger was directed at the company, in particular at the CEO. He fielded it with a dignified air, but to those who had been watching the company since he took up his role it clearly weighed heavily on his shoulders. In an extremely positive sign, on 14 December 2019 a joint presentation of the ADE performing hand gesture identification took place at a trade show with Tata Consultancy Services engineers, and to the delight of many shareholders one of the presentation slides stated that the Tata engineers were looking forward to building a robot based on an AKIDA platform.

Anil Mankar has a strong background in bringing IP to successful implementation on chip and reportedly has over 100 successful chip designs to his credit. As a result, despite the speed required, he ensured that the company took the time to implement the AKD1000 design in FPGA before taking it to Socionext for final engineering and design, prior to sending it to TSMC for wafer production. To contain the cost of producing the AKD1000 chip, the company decided to share a wafer, and on 8 April 2020 TSMC commenced production.

Going back to the drawing board after early access customer feedback, and implementing the design on FPGA, produced delays that clearly did not impress the market, as reflected in an ever-falling share price, but the company stuck with what had to be done to achieve the end result.

Chapter Three

While the engineering and technical staff were burning the midnight oil, the task of marketing the ADE, the IP and the eventual AKD1000 boards, and of raising capital, fell to the CEO and Mr Roger Levinson. Despite Covid-19, these two managed to leverage their contacts around the globe to the point where, despite significant shareholder criticism at the time, the company announced a $29 million funding arrangement with LDA Capital, ensuring the company's financial viability. Shareholders then waited to find out whether the AKD1000, which had been through testing and packaging by Socionext, would also pass the company's own validation tests before being installed on boards and shipped to customers. Everything seemed to be falling into place.

In a tribute to the chip design work of Anil Mankar, the CEO announced that final validation and testing showed the AKD1000 worked perfectly, matching and exceeding all predicted benchmarks, something he had not witnessed in all his years in the semiconductor industry.

While all these milestones were being achieved, the CEO kept shareholders up to date with progress on the marketing and engagement side of the company, announcing over time that Brainchip had well over 100 non-disclosure agreements in place with interested companies, and that it had selected from them about two dozen that stood out as household names, Fortune 500 companies and those most likely to bring commercial benefit. It is difficult to know when the CEO found the time: after Peter van der Made stepped down from the Board to concentrate all his efforts on finalising the AKD1000 design, all administrative and sales staff had been let go. Yet he was able to finalise and announce early access and development agreements with Ford, a major automaker, and Valeo, a Tier 1 automotive supplier, under which these companies paid Brainchip for the privilege of early access to the AKD1000 test boards.

In a further coup, the CEO announced the appointment of Mr Rob Telson as Vice President of Worldwide Sales. Rob Telson had been at ARM, where he served as Vice President of Worldwide Foundry Sales and also as Vice President of Sales for the Americas. It is interesting to note that with this appointment Brainchip seemed to be confirming that its stated intention in 2015 to use ARM as its model for building a global footprint was still in play.

The CEO, not one to let the grass grow, also jointly announced with Socionext an agreement to include the AKD1000 chip in the Socionext SynQuacer product offering, as well as a joint development agreement with Magik-Eye, inventor of a revolutionary 3D point sensor capable of depth perception. Then, out of left field, the CEO announced an Early Access Customer engagement with Vorago, under which Vorago would harden the AKD1000 chip for space deployment in a fully funded Phase 1 program with NASA. Not long after this announcement, the CEO announced that NASA itself had been admitted directly to the Early Access Program.

Chapter Four

While all this has been taking place, Peter van der Made, having completed the AKD1000 design, has moved on with Anil Mankar to the designs for AKD2000 and AKD3000, while at the same time working with Adam Osseiran and others on the AERO project. The AERO project started life as a way to leverage the ADE to identify gases, and in the process produced a design for a scientific instrument powered by the ADE, using only 200 AKIDA neurons, that achieved state-of-the-art identification on the 20 gas data set (of ten gases) with a latency of only 3 seconds. This breakthrough research has led to trials of the ADE as the processor for identifying the volatile organic compounds (VOCs) associated with various diseases in samples of exhaled human breath.

As luck would have it, Adam Osseiran's work with Nobel Laureate Professor Barry Marshall on the invention of the Noisy Gut Belt led to Professor Marshall becoming aware of the AKIDA technology and accepting an invitation to join the BrainChip Scientific Advisory Board to develop medical diagnostic applications based on AERO and its proven capacity to identify gases, with the aim of identifying various medical conditions in real time from samples of exhaled human breath.

The Brainchip Scientific Advisory Board is presently chaired by Adam Osseiran, with Professor Barry Marshall and Dr Simon Thorpe as members. In a presentation to the Brain Inspired Computing Congress in November 2020, Peter van der Made confirmed that AKIDA had been successfully implemented as the processor for identifying Covid-19, with a success rate of 94%. It is well recognised that various diseases, including some cancers, diabetes and Covid-19, express themselves as identifiable patterns in a patient's exhaled breath.

The potentially low-cost, portable and unconnected (not cloud-dependent) nature of an AKIDA medical instrument for this purpose could be life changing for remote populations and people in developing countries. The ability to incorporate this technology into telehealth applications, which have grown rapidly in developed countries as a result of Covid-19, promises huge commercial opportunities, and together these factors could provide greater equality of access to modern medical services than at any time in the world's history. Indeed, researchers at Australia's own Flinders University have in recent months announced that they have identified VOC markers in exhaled human breath for head and neck cancer.

Chapter Five

On more than one occasion during the second half of 2020, the CEO of Brainchip told shareholders in presentations that the company was very close to announcing its first commercial engagement, and that the announcement might come in late 2020 but certainly in early 2021. To the amazement of shareholders, as Christmas approached and the markets began to wind down, the CEO proved true to his word: a trading halt was called on 22 December 2020, and after the close of trade on 23 December 2020 the CEO and the Board of Brainchip released two announcements.

The first confirmed that Brainchip had been awarded a sole source contract with NASA for the AKIDA early access development kit. The second, and pivotal, announcement was that the first Intellectual Property licence had been entered into with Renesas, with the CEO commenting: "This is an exciting and significant milestone in obtaining the Company's first IP licensing agreement. Furthermore, this is a market validation of our technology."

The announcement set out the salient terms of the agreement:

Aliso Viejo, Calif. – 23 December 2020 – BrainChip Holdings Ltd (ASX: BRN), a leading provider of ultra-low power, high-performance AI technology, today announced the signing of an intellectual property license agreement with Renesas Electronics America Inc., a subsidiary of Japan-based Renesas Electronics Corp., a tier-one semiconductor manufacturer that specializes in microcontroller and automotive SoC products.

The unconditional agreement provides for:

So after this announcement the next trading day being 24 December, 2020 trading resumed and the market closed early because it was Christmas Eve. The next chapter in the Brainchip story will now unfold with expectations of further IP agreements, mass production of the AKD1000 and many exciting new ventures being anticipated. The rest of the Brainchip story is likely to be written in front of an increasingly attentive market both here in Australia and around the world and is likely not to require someone to put it in writing as AKIDA technology becomes ubiquitous both on Earth and in Space.

Chapter Six

In finalising this first book of Brainchip I have included below some of the patents and published research to complete the historical picture noting that there are still a number of patents in progress:

Patents:

Published Research:

The End.

Enjoy!

Authored by @Fact Finder - December 2020

Chapter One

The Brainchip story is not one which is well known by the general public for a number of reasons not the least of which is the fact that Brainchip for obvious commercial reasons has been operating as much as possible under the radar to protect its commercial and intellectual advantage in the field of Spiking Neural Network technology.

Recently however with the signing of its first commercial Intellectual Property Licence agreement it has moved out of the shadows of being a start up in self-imposed stealth mode to becoming a major international semiconductor provider heralding a new era of artificial intelligence at the edge and far edge both here on Earth and in Space.

The journey to this point commenced over twenty years ago when Peter van der Made commenced to formalise his ideas for how to conceive artificial general intelligence. These ideas were developed by him until in 2008, when he filed the foundational Patent number 8250011 for what was to become Brainchip’s spiking neural network technology known as AKIDA.

Along this journey other visionaries joined him and in 2015 as a result of Peter van der Made’s previous experiences with the venture capital model Brainchip Technologies Ltd was born out of a reverse takeover of the dormant minerals explorer Aziana on the Australian Stock Exchange. Supported by Mick Bolto Chairman, Adam Osserian Non Executive Director, Anil Mankar Chief Operating Officer and Senior Engineer and Neil Rinaldi Non Executive Director, Peter van der Made commenced the journey towards the commercialisation of his original idea for artificial general intelligence. This caused much excitement among investors on the ASX and many captivated by the idea of artificial general intelligence bought shares priced purely on a collective vision of what the future could hold for Brainchip’s technology.

In October, 2015 an investor presentation by the company announced the introduction of SNAP. SNAP was the first iteration of SNN technology and stood for Spiking Neuron Adaptive Processor. The business model involved initially the sale of the intellectual property however the early high expectations were not to be delivered. There are lots of reasons but suffice to say having the most advanced revolutionary technology in the world can be a hard sell to a world which is happy with the status quo and who have no idea what you are talking about and no interest in learning. In short SNAP in all its variants was ahead of its time and not market ready and Moore’s Law had yet to reach breaking point.

On 1 September, 2016 the company completed the acquisition of a French based company Spikenet Technology which specialised in Ai computer vision technology with a view to accelerating up take of SNAP technology as a video processing solution. Spikenet had a relationship with Cerco Research Centre where Dr Simon Thorpe (now a member of the Brainchip Scientific Advisory Board) undertook research with others and in that position had developed the JAST learning rules.

The term JAST was made up from the first initials of the four inventors and Brainchip saw the benefit these rules would bring to the development of its technologies and on 20 March, 2017 entered an agreement which gave them exclusive rights to the JAST learning rules.

Bringing together the expertise of Spikenet, Brainchip and the JAST learning rules on 12 September, the company produced a new video processing product which it named Brainchip Studio. It was a very impressive product and was unlike anything else available on the market and on 21 August 2017 Brainchip announced that it had been awarded New Product of the Year for 2017 for Video Analytics by Security Today’s panel of independent experts. Shortly after this on 12 September, 2017 the company announced an upgrade to Brainchip Studio called Brainchip Accelerator which was capable of 16 channel simultaneous video processing, with ultra low power and 6x speed boost to Brainchip Studios CPU-based Artificial Intelligence Software for Object Recognition and performance levels 7 times more efficient than existing GPU accelerated deep learning systems.

Brainchip Accelerator went on to also win an industry award however the market place in which it was released was already dominated by well known global brands and proved to be impenetrable until the then new CEO Lou Dinardo announced the signing of what was in effect a Heads of Agreement with Gaming Partners International to develop a system built on Studio technology for game table security in the Casino industry. The agreement involved the upfront payment by Gaming Partners International of $500,000 as well as an additional payment of $100,000 to cover engineering works. These payments were made and it was full steam ahead and the project was reportedly on track and successful demonstrations of the prototype had gone down well at a Gaming Industry conference in the USA.

The saying ‘never seems to be able to catch a break’ comes to mind as not long into the process of designing and building this table gaming solution Gaming Partners International became the subject of a successful takeover bid by a Japanese gaming company and in the wash up of that takeover the Japanese owners were not interested in pursuing this direction as their principle reason for acquiring GPI was to add GPI’s chip technology to their playing card business. After much negotiation it became obvious to Brainchip that it was a zero sum game trying to revive the project although both parties have agreed to revisit it in the future.

Chapter Two

The decision was made by Brainchip to go for the end goal in a make or break effort to achieve the companies ‘holy grail’ and Peter van der Made stepped aside from his position on the Board of Directors to work exclusively on the final stage of the development of the AKIDA technology. The company engaged in brutal cost savings and shedding of all staff not engaged in the technology engineering side of the company.

All of the knowledge and research that had gone into the various attempts at commercialisation had also driven the advance of the AKIDA technology platform so Peter van der Made and Anil Mankar had the plan already laid out as to what was required, but now it became a race not just to be the first to market that would be a given but a race to finalise the research and engineering in record time before the company was no longer financially viable.

The various decisions as to how to make the final push to market of this revolutionary product were brilliant. They knew that this would be make or break. They knew they had the technology but they knew from the past failures that they had to pick an area or segment of the market where they would not have any true competition. They also knew that even then they needed to have found out before they built the product what clients thought of it and what they liked and did not like about it all on a shoestring budget.

The idea of the Akida Development Environment (ADE) was conceived and it was released to early access customers. Studio had been released to customers after being produced and as a result feedback from customers came too late in the cycle but nonetheless this feedback was available to inform Brainchip as to what the ADE should look like. However by releasing the ADE in advance it was intended that any further feedback would be taken on board and designed in which in fact occurred however to accommodate these customer requests the release date of the IP and the production of AKIDA1000 was delayed by twelve months as Peter van der Made and Anil Mankar went back to the drawing board.

The state of the Brainchip balance sheet and the delay that this redesign caused did not go down well with many shareholders and a great deal of angst and anger were directed at the company in particular the CEO which suffice to say he fielded with a dignified air but which to those who had been involved watching the company since he took up his role clearly weighed heavily on his shoulders. In an extremely positive sign on 14 December, 2019 a joint presentation of the ADE performing hand gesture identification took place at a trade show in combination with Tata Consulting Service engineers and to the delight of many shareholders one of the presentation slides stated that the Tata engineers were looking forward to building a robot based upon an AKIDA platform.

Anil Mankar has a strong background in bringing IP to successful implementation on chip and it is reported that he has over 100 successful chip designs to his credit. As a result despite the speed which was required he ensured that the company took the time to implement the AKD1000 design in FPGA before taking it to Socionext for final engineering and design prior to sending it to TSMC for production of the wafer. In an effort to contain the costs of producing the AKD1000 chip the company decided that it would share a wafer and on 8 April, 2020 TSMC commenced production.

These actions of going back to the drawing board following feedback from early access customers and implementing the design on FPGA produced delays which clearly did not impress the market and it was reflected in an ever falling share price but the company stuck with what had to be done to achieve the end result.

Chapter Three

While the engineering and tech people were burning the midnight oil the task of marketing the ADE the IP and the eventual AKD1000 boards and raising capital fell to the CEO and Mr Roger Levinson. Despite Covid-19 these two individuals managed to leverage their contacts around the globe to the extent that despite significant shareholder criticism at the time the company announced a 29 million dollar funding arrangement with LDA Capital ensuring the companies financial viability. Shareholders waited to find out if the AKD1000 which had been through testing and packaging by Socionext would also pass validation tests conducted by the company before installing them on Boards and shipping them to customers. Everything seemed to be falling into place.

In a tribute to the chip design work of Anil Mankar the CEO announced that final validation and testing proved the AKD1000 worked perfectly matching and exceeding all predicted benchmarks something which he had not witnessed in all his years in the semiconductor industry.

While all these milestones were being achieved the CEO was keeping shareholders up to date with progress on the marketing and engagement side of the company and over time announced that Brainchip had well north of 100 Non Disclosure Agreements in place with various interested companies and that they had selected from the interested companies about two dozen which stood out as household names, Fortune 500 companies and most likely to bring commercial benefit. It is indeed difficult to know when the CEO found the time, given that after Peter van der Made stepped down from the Board to concentrate all his efforts on finalising the design of AKD1000 all administrative and sales staff were let go, yet he was able to finalise and announce early access and development agreements with Ford and Valeo both well known Tier 1 companies in the automotive industry where such agreements involved these companies paying Brainchip for the privilege of receiving early access to the AKD1000 test boards.

In a further coup the CEO announced the appointment of Mr Rob Telson as Vice President of World Wide Sales. Rob Telson had been at ARM and had filled the roles of Vice President of Foundry Sales World wide and also Vice President of Sales for the Americas. It is interesting to note that with this appointment Brainchip seemed to be confirming that its stated intention in 2015 to use ARM as its model for building its global footprint still was in play.

The CEO not one to let grass grow also jointly announced with Socionext an agreement to include the AKD1000 chip as part of the Socionext SynQuacer product offering and also a joint development agreement with Magik-Eye the inventor of a revolutionary 3D point sensor capable of depth perception. Then out of left field the CEO announced an Early Access Customer engagement with Vorago for the purpose of Vorago hardening the AKD1000 chip for space deployments in a fully funded Phase 1 program with NASA. Not long after this announcement the CEO announced that NASA had directly been admitted to the Early Access Program.

Chapter Four

While all this has been taking place Peter van der Made having completed the AKD1000 design has with Anil Mankar moved on to the designs for AKD2000 and AKD3000 while at the same time working with Adam Osseiran and others on the AERO project. The AERO project started life as a way to leverage the ADE to identify gases and in the process created a design for a scientific instrument powered by the ADE using only 200 AKIDA neurons which was capable of state of the art identification of the 20 gas data set (of ten gases) with latency of only 3 seconds. This breakthrough research has led to trialling the ADE as the (processor) for identifying Volatile Organic Compounds of various diseases from samples of exhaled human breath.

As luck would have it Adam Osseiran’s work with Noble Laureate Professor Barry Marshall on the invention of the Noisy Gut Belt has led to Professor Marshall becoming aware of the AKIDA technology and accepting an invitation to join the BrainChip Scientific Advisory Board for the purpose of developing medical diagnostic applications relating to AERO and its proven capacity to identify gases for the purpose of identify various medical conditions in real time from sampling exhaled human breath.

The Brainchip Scientific Advisory Board is presently chaired by Adam Osseiran with Professor Barry Marshall and Dr Simon Thorpe. In a presentation by Peter van der Made to the Brain Inspired Computing Congress in November, 2020 he confirmed that AKIDA had been successfully implemented as the processor for the purpose of identifying Covid-19 with a success rate of 94%. It is well recognised that various diseases including some cancers, diabetes and Covid-19 express themselves in identifiable patterns when exhaled on an infected person’s breath.

The potential low cost, unconnected, portable nature of an AKIDA medical scientific instrument for this purpose could be life changing for remote populations and people of developing countries. The ability to incorporate this technology into telehealth applications which have grown exponentially in developed countries as a result of Covid-19 infection promises huge commercial opportunities and together these factors could provide greater equality of access to modern medical services than at any time in history of the world. In fact researchers at Australia’s own Flinders’ University have in recent months announced that they have identified VOC markers in exhaled human breath for head and neck cancer.

Chapter Five

On more than one occasion during the second half of 2020, the CEO of Brainchip told shareholders in presentations that the company was very close to announcing its first commercial engagement, and that the announcement might come in late 2020 but certainly by early 2021. To the amazement of shareholders, as Christmas approached and the markets began to wind down, the CEO proved true to his word: a trading halt was called on 22 December 2020, and after the close of trade on 23 December 2020 the CEO and the Board of Brainchip released two announcements.

The first confirmed that Brainchip had been awarded a sole source contract with NASA for the AKIDA early access development kit. The second, pivotal announcement was that the first Intellectual Property licence had been entered into with Renesas, with the CEO commenting: “This is an exciting and significant milestone in obtaining the Company’s first IP licensing agreement. Furthermore, this is a market validation of our technology.”

The salient terms of this agreement, as announced, are as follows:

Aliso Viejo, Calif. – 23 December 2020 – BrainChip Holdings Ltd (ASX: BRN), a leading provider of ultra-low power, high-performance AI technology, today announced the signing of an intellectual property license agreement with Renesas Electronics America Inc., a subsidiary of Japan-based Renesas Electronics Corp., a tier-one semiconductor manufacturer that specializes in microcontroller and automotive SoC products.

The unconditional agreement provides for:

- A single-use, royalty-bearing, worldwide IP design license for the rights to use the Akida™ IP in the customer’s SoC products, which continues while the customer continues to use the Akida IP in its products. The parties agreed to customary termination terms;

- BrainChip to provide implementation support services (at an agreed fee to cover costs) aimed at facilitating the customer’s adoption and commercialization of the Akida-licensed product during the first year of the license agreement.

- The agreement provides for various payment terms including the payment of ongoing royalties based on the volume of units sold, commencing at a certain agreed volume threshold, and the net sale price of the customer’s products. The royalties remain in effect throughout the life of the licensed product.

- Brainchip to provide software maintenance services, which attract a separate fee if the customer elects to continue to use these services after the first two years of the agreement.

After these announcements, trading resumed on the next trading day, 24 December 2020, and the market closed early because it was Christmas Eve. The next chapter in the Brainchip story will now unfold, with further IP agreements, mass production of the AKD1000 and many exciting new ventures anticipated. The rest of the Brainchip story is likely to be written in front of an increasingly attentive market, both here in Australia and around the world, and will probably not need anyone to put it in writing as AKIDA technology becomes ubiquitous both on Earth and in Space.

Chapter Six

In finalising this first book of the Brainchip story, I have included below some of the patents and published research to complete the historical picture, noting that a number of patents are still in progress:

Patents:

- Autonomous learning dynamic artificial neural computing device and brain inspired system

Patent number 8250011 was filed by Peter van der Made in September 2008.

It outlines a digital simulation of the biological process known as Synaptic Time Dependent Plasticity (STDP), which can enable an information processing system to perform approximation, autonomous learning and the strengthening of formerly learned input patterns. The information processing system consists of artificial neurons (composed of binary logic gates), which are interconnected via dynamic artificial synapses. Each artificial neuron consists of a soma circuit and a plurality of synapse circuits, whereby the soma membrane potential, the soma threshold value, the synapse strength and the Post Synaptic Potential at each synapse are expressed as values in binary registers, which are dynamically determined from certain aspects of input pulse timing, previous strength value and output pulse feedback.
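
To make the register-level description concrete, here is a minimal Python sketch of one digital neuron whose soma membrane potential, soma threshold and synapse strengths are plain integers, updated from input pulse timing and output pulse feedback. The pair-based timing rule, every constant (`threshold`, `w_max`, `ltp_step`, `ltd_step`, `window`) and the class name are assumptions for illustration only, not the circuit claimed in patent 8250011. For brevity the synapse strength doubles as the Post Synaptic Potential, whereas the abstract describes them as separate registers.

```python
from dataclasses import dataclass, field


@dataclass
class DigitalNeuron:
    """Hypothetical integer-register neuron; all constants are illustrative."""
    n_synapses: int
    threshold: int = 10          # soma threshold register
    w_init: int = 4              # initial synapse strength register
    w_max: int = 15              # strengths saturate at 4 bits (assumption)
    ltp_step: int = 2            # strengthening step: input pulse before output pulse
    ltd_step: int = 1            # weakening step: input pulse after output pulse
    window: int = 5              # timing window in clock ticks (assumption)
    potential: int = 0           # soma membrane potential register
    weights: list = field(default_factory=list)
    last_pre: list = field(default_factory=list)
    last_post: int = -10**9      # time of the most recent output pulse

    def __post_init__(self):
        self.weights = [self.w_init] * self.n_synapses
        self.last_pre = [-10**9] * self.n_synapses

    def step(self, t, input_pulses):
        """Process one clock tick; input_pulses is a set of synapse indices."""
        for i in input_pulses:
            self.last_pre[i] = t
            # Simplification: the synapse strength is added as its PSP.
            self.potential += self.weights[i]
            # Input pulse shortly after an output pulse: weaken this synapse.
            if 0 < t - self.last_post <= self.window:
                self.weights[i] = max(0, self.weights[i] - self.ltd_step)
        if self.potential >= self.threshold:
            self.potential = 0   # reset the soma after firing
            self.last_post = t
            # Output pulse feedback: strengthen synapses that were recently active.
            for i, tp in enumerate(self.last_pre):
                if 0 <= t - tp <= self.window:
                    self.weights[i] = min(self.w_max, self.weights[i] + self.ltp_step)
            return True
        return False
```

Feeding pulses `{0}`, `{0, 1}`, nothing, then `{2}` makes the neuron fire on the second tick, strengthens synapses 0 and 1, and weakens synapse 2 because its pulse arrives after the output pulse.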

- Method and A System for Creating Dynamic Neural Function Libraries

US20190012597A1 - Method and A System for Creating Dynamic Neural Function Libraries

Abstract:

A method for creating a dynamic neural function library that relates to Artificial Intelligence systems and devices is provided. Within a dynamic neural network (artificial intelligent device), a plurality of control values are autonomously generated during a learning process and thus stored in synaptic registers of the artificial intelligent device that represent a training model of a task or a function learned by the artificial intelligent device.

Control Values include, but are not limited to, values that indicate the neurotransmitter level that is present in the synapse, the neurotransmitter type, the connectome, the neuromodulator sensitivity, and other synaptic, dendritic delay and axonal delay parameters. Collectively, these values form a training model. Training models are stored in the dynamic neural function library of the artificial intelligent device. The artificial intelligent device copies the function library to an electronic data processing device memory that is reusable to train another artificial intelligent device.
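
A rough sketch of the library idea: control values learned on one device are gathered into a training model, stored in a library under a task name, serialised, and loaded into a second device. The field names, the `Device` class, the synapse-id strings and the JSON format are all hypothetical stand-ins chosen for illustration; the patent abstract does not specify any storage format.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class SynapseControlValues:
    """A subset of the control values named in the abstract (hypothetical fields)."""
    neurotransmitter_level: int
    neurotransmitter_type: str
    neuromodulator_sensitivity: float
    dendritic_delay: int
    axonal_delay: int


@dataclass
class Device:
    """Hypothetical artificial intelligent device holding synaptic registers."""
    # synapse id such as "n0->n1" (the connectome entry) -> learned control values
    registers: dict = field(default_factory=dict)


def export_model(device: Device) -> dict:
    """Collect a device's learned control values into one training model."""
    return {syn: asdict(cv) for syn, cv in device.registers.items()}


def to_json(library: dict) -> str:
    """Serialise a function library (task name -> training model)."""
    return json.dumps(library)


def from_json(text: str) -> dict:
    return json.loads(text)


def load_into(device: Device, model: dict) -> None:
    """Copy a stored training model into another device's registers."""
    device.registers = {syn: SynapseControlValues(**cv) for syn, cv in model.items()}
```

In use, a model exported from a trained device is stored under a task name, round-tripped through JSON, and loaded into a fresh device, which then holds identical control values without retraining.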

- Spiking neural network

US20200143229A1 - Spiking neural network

Abstract:

Disclosed herein are system, method, and computer program product embodiments for an improved spiking neural network (SNN) configured to learn and perform unsupervised extraction of features from an input stream.

An embodiment operates by receiving a set of spike bits corresponding to a set of synapses associated with a spiking neuron circuit.

The embodiment applies a first logical AND function to a first spike bit in the set of spike bits and a first synaptic weight of a first synapse in the set of synapses.

The embodiment increments a membrane potential value associated with the spiking neuron circuit based on the applying.

The embodiment determines that the membrane potential value associated with the spiking neuron circuit reached a learning threshold value.

The embodiment then performs a Spike Time Dependent Plasticity (STDP) learning function based on the determination that the membrane potential value of the spiking neuron circuit reached the learning threshold value.
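
The steps above can be sketched in a few lines of Python: AND each spike bit with a binary synaptic weight, add the matches to the membrane potential, and run a learning step once the potential reaches the learning threshold. The abstract does not say what the STDP learning function does, so the rule used here (switch on one weight whose input spiked, switch off one weight whose input was silent, keeping the number of active weights constant) is a hypothetical stand-in, as are the function names.

```python
def integrate(spike_bits, weights):
    """Membrane potential increment: number of positions where spike AND weight is 1."""
    return sum(s & w for s, w in zip(spike_bits, weights))


def stdp_update(spike_bits, weights):
    """Hypothetical binary STDP: move one active weight from a silent input to a spiking one."""
    new = list(weights)
    # Inputs that spiked but have weight 0: candidates to strengthen.
    gain = [i for i, (s, w) in enumerate(zip(spike_bits, weights)) if s and not w]
    # Inputs that were silent but have weight 1: candidates to weaken.
    lose = [i for i, (s, w) in enumerate(zip(spike_bits, weights)) if w and not s]
    if gain and lose:
        new[gain[0]], new[lose[0]] = 1, 0
    return new


def process(spike_bits, weights, potential, learning_threshold):
    """Increment the membrane potential, then learn if the learning threshold is reached."""
    potential += integrate(spike_bits, weights)
    if potential >= learning_threshold:
        weights = stdp_update(spike_bits, weights)
    return potential, weights
```

With spike bits `[1, 1, 0, 1, 0]` and weights `[1, 0, 1, 1, 0]`, two inputs match, so a learning threshold of 2 triggers learning while a threshold of 3 leaves the weights untouched.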

- Low power neuromorphic voice activation system and method

US10157629B2 - Low power neuromorphic voice activation system and method

Abstract:

The present invention provides a system and method for controlling a device by recognizing voice commands through a spiking neural network. The system comprises a spiking neural adaptive processor receiving an input stream that is being forwarded from a microphone, a decimation filter and then an artificial cochlea. The spiking neural adaptive processor further comprises a first spiking neural network and a second spiking neural network. The first spiking neural network checks for voice activities in output spikes received from artificial cochlea. If any voice activity is detected, it activates the second spiking neural network and passes the output spike of the artificial cochlea to the second spiking neural network that is further configured to recognize spike patterns indicative of specific voice commands. If the first spiking neural network does not detect any voice activity, it halts the second spiking neural network.
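
The gating arrangement, where a first network decides whether the second network runs at all, can be sketched as control flow. In this hypothetical Python sketch both spiking networks are replaced by placeholder callables passed in by the caller; only the idea of keeping the second stage halted when no voice activity is detected comes from the abstract.

```python
from typing import Callable, Optional, Sequence

SpikeFrame = Sequence[int]   # spike counts per artificial-cochlea channel in one window


class VoiceActivationPipeline:
    """Hypothetical two-stage gate: detector first, recogniser only on voice activity."""

    def __init__(self, detect_voice: Callable[[SpikeFrame], bool],
                 recognise: Callable[[SpikeFrame], Optional[str]]):
        self.detect_voice = detect_voice   # stand-in for the first spiking network
        self.recognise = recognise         # stand-in for the second spiking network
        self.second_active = False
        self.second_calls = 0              # how often the larger stage actually ran

    def on_frame(self, frame: SpikeFrame) -> Optional[str]:
        # The first network always sees the artificial cochlea output.
        self.second_active = self.detect_voice(frame)
        if not self.second_active:
            return None                    # second network stays halted
        self.second_calls += 1
        # The same cochlea spikes are passed on for command recognition.
        return self.recognise(frame)
```

With a toy detector that requires at least five spikes per frame, a near-silent frame returns `None` without ever invoking the recogniser, which is where the power saving comes from.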

- Neural processor based accelerator system and method

US20170024644A1 - Neural processor based accelerator system and method

Abstract:

A configurable spiking neural network based accelerator system is provided. The accelerator system may be executed on an expansion card, which may be a printed circuit board.

The system includes one or more application specific integrated circuits comprising at least one spiking neural processing unit and a programmable logic device mounted on the printed circuit board. The spiking neural processing unit includes digital neuron circuits and digital, dynamic synaptic circuits.

The programmable logic device is compatible with a local system bus.

The spiking neural processing units contain digital circuits comprising a Spiking Neural Network that handles all of the neural processing.

The Spiking Neural Network requires no software programming, but can be configured to perform a specific task via the Signal Coupling device and software executing on the host computer.

Configuration parameters include the connections between synapses and neurons, neuron types, neurotransmitter types, and neuromodulation sensitivities of specific neurons.
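
Because the network is configured rather than programmed, its "program" is essentially a data structure. The sketch below is a hypothetical Python representation of the configuration parameters listed above, with a simple validation pass a host might run before sending a configuration to the card. Every field name, allowed type and the [0, 1] sensitivity range is an assumption for illustration, not the format used by the patented device.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

NEURON_TYPES = {"excitatory", "inhibitory"}      # assumption
TRANSMITTER_TYPES = {"glutamate", "gaba"}        # assumption


@dataclass
class NetworkConfig:
    """Hypothetical configuration of neuron types, synapses and neuromodulation."""
    neuron_types: Dict[int, str] = field(default_factory=dict)
    # (pre neuron, post neuron, neurotransmitter type) for each synapse
    connections: List[Tuple[int, int, str]] = field(default_factory=list)
    neuromodulation_sensitivity: Dict[int, float] = field(default_factory=dict)

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the config looks usable."""
        errors = []
        for n, kind in self.neuron_types.items():
            if kind not in NEURON_TYPES:
                errors.append(f"neuron {n}: unknown type {kind!r}")
        for pre, post, nt in self.connections:
            if pre not in self.neuron_types or post not in self.neuron_types:
                errors.append(f"synapse {pre}->{post}: undefined neuron")
            if nt not in TRANSMITTER_TYPES:
                errors.append(f"synapse {pre}->{post}: unknown transmitter {nt!r}")
        for n, s in self.neuromodulation_sensitivity.items():
            if not 0.0 <= s <= 1.0:
                errors.append(f"neuron {n}: sensitivity {s} outside [0, 1]")
        return errors
```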

- Intelligent biomorphic system for pattern recognition with autonomous visual feature extraction

US20170236027A1 - Intelligent biomorphic system for pattern recognition with autonomous visual feature extraction

Abstract:

Embodiments of the present invention provide a hierarchical arrangement of one or more artificial neural networks for recognizing visual feature pattern extraction and output labelling.

The system comprises a first spiking neural network and a second spiking neural network. The first spiking neural network is configured to autonomously learn complex, temporally overlapping visual features arising in an input pattern stream. Competitive learning is implemented as spike time dependent plasticity with lateral inhibition in the first spiking neural network.

The second spiking neural network is connected by means of dynamic synapses with the first spiking neural network, and is trained for interpreting and labelling output data of the first spiking neural network. Additionally, the output of the second spiking neural network is transmitted to a computing device, such as a CPU for post processing.
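
Competitive learning implemented as STDP with lateral inhibition is often approximated in software as winner-take-all: the neuron that responds most strongly to an input pattern fires, silences the others, and is the only one whose weights move toward that pattern. The Python sketch below uses that generic textbook simplification on binary patterns and ignores spike timing entirely, so it illustrates the principle rather than the circuit in US20170236027A1; the learning rate and starting weights are arbitrary.

```python
def wta_stdp_step(weights, pattern, lr=0.25):
    """One competitive-learning step on a binary input pattern.

    weights: list of per-neuron weight lists (floats in [0, 1]), updated in place.
    pattern: list of 0/1 input spikes.
    Returns the index of the winning neuron (ties go to the lower index).
    """
    responses = [sum(w * x for w, x in zip(row, pattern)) for row in weights]
    winner = max(range(len(weights)), key=responses.__getitem__)
    # Lateral inhibition: only the winner learns; all other neurons are left unchanged.
    row = weights[winner]
    for i, x in enumerate(pattern):
        # STDP-like update: inputs that spiked with the winner are potentiated,
        # inputs that stayed silent are depressed.
        target = 1.0 if x else 0.0
        row[i] += lr * (target - row[i])
    return winner
```

Alternating two patterns, each neuron quickly specialises on one of them: starting from nearly uniform weights, neuron 0 keeps winning the first pattern and neuron 1 the second, and their weights converge toward those patterns.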

- Secure Voice Communications System

US20190188600A1 - Secure Voice Communications System

Abstract:

Disclosed herein are system and method embodiments for establishing secure communication with a remote artificial intelligent device.

An embodiment operates by capturing an auditory signal from an auditory source. The embodiment converts the auditory signal into a plurality of pulses having a spatio-temporal distribution.

The embodiment identifies an acoustic signature in the auditory signal based on the plurality of pulses using a spatio-temporal neural network. The embodiment modifies synaptic strengths in the spatio-temporal neural network in response to the identifying thereby causing the spatio-temporal neural network to learn to respond to the acoustic signature in the acoustic signal.

The embodiment transmits the plurality of pulses to the remote artificial intelligent device over a communications channel thereby causing the remote artificial intelligent device to learn to respond to the acoustic signature, and thereby allowing secure communication to be established with the remote artificial intelligent device based on the auditory signature.

- Intelligent Autonomous Feature Extraction System Using Two Hardware Spiking Neutral Networks with Spike Timing Dependent Plasticity

US20170236051A1 - Intelligent Autonomous Feature Extraction System Using Two Hardware Spiking Neutral Networks with Spike Timing Dependent Plasticity

Abstract:

Embodiments of the present invention provide an artificial neural network system for feature pattern extraction and output labelling. The system comprises a first spiking neural network and a second spiking neural network.

The first spiking neural network is configured to autonomously learn complex, temporally overlapping features arising in an input pattern stream. Competitive learning is implemented as spike timing dependent plasticity with lateral inhibition in the first spiking neural network. The second spiking neural network is connected with the first spiking neural network through dynamic synapses, and is trained to interpret and label the output data of the first spiking neural network.

Additionally, the labelled output of the second spiking neural network is transmitted to a computing device, such as a central processing unit for post processing.
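
The second network's role, interpreting and labelling what the first network has learned, can be approximated by a small supervised mapping from first-layer winners to labels. In this hypothetical Python sketch each first-layer neuron simply takes the label it fired with most often during training; the patent's second network uses dynamic synapses, so treat this majority vote as a stand-in rather than the described mechanism.

```python
from collections import Counter, defaultdict


def train_labeller(winner_ids, labels):
    """Map each first-layer neuron to the label it fired with most often."""
    votes = defaultdict(Counter)
    for neuron, label in zip(winner_ids, labels):
        votes[neuron][label] += 1
    return {neuron: c.most_common(1)[0][0] for neuron, c in votes.items()}


def label_output(labeller, winner_id, default="unknown"):
    """Label that would be passed on to the host CPU for post processing."""
    return labeller.get(winner_id, default)
```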

- System and Method for Spontaneous Machine Learning and Feature Extraction

US20180225562A1 - System and Method for Spontaneous Machine Learning and Feature Extraction

Abstract:

Embodiments of the present invention provide an artificial neural network system for improved machine learning, feature pattern extraction and output labelling. The system comprises a first spiking neural network and a second spiking neural network. The first spiking neural network is configured to spontaneously learn complex, temporally overlapping features arising in an input pattern stream.

Competitive learning is implemented as Spike Timing Dependent Plasticity with lateral inhibition in the first spiking neural network. The second spiking neural network is connected with the first spiking neural network through dynamic synapses, and is trained to interpret and label the output data of the first spiking neural network.

Additionally, the output of the second spiking neural network is transmitted to a computing device, such as a CPU for post processing.

- Method, digital electronic circuit and system for unsupervised detection of repeating patterns in a series of events

US20190286944A1 - Method, digital electronic circuit and system for unsupervised detection of repeating patterns in a series of events

Abstract:

A method of performing unsupervised detection of repeating patterns in a series of events includes:

a) Providing a plurality of neurons, each neuron being representative of W event types;

b) Acquiring an input packet comprising N successive events of the series;

c) Attributing to at least some neurons a potential value, representative of the number of common events between the input packet and the neuron;

d) Modifying the event types of neurons having a potential value exceeding a first threshold TL; and

e) Generating a first output signal for all neurons having a potential value exceeding a second threshold TF, and a second output signal, different from the first one, for all other neurons.

A digital electronic circuit and system configured for carrying out such a method is also provided.
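
The method maps onto a short loop. The Python sketch below is a hypothetical illustration rather than the patented circuit: each neuron is a set of W event types and each input packet is a list of N event types. The abstract only says the event types of neurons above TL are "modified", so the single swap used here (drop one event type absent from the packet, adopt one packet event the neuron lacks) is an assumption that keeps every neuron at W event types.

```python
def detect_repeating_patterns(neurons, packet, t_learn, t_fire):
    """Process one input packet; neurons (a list of sets) are modified in place.

    Returns one boolean per neuron: True = first output signal, False = second.
    """
    events = set(packet)
    outputs = []
    for neuron in neurons:
        # Potential value = number of events common to the packet and the neuron.
        potential = len(neuron & events)
        # Learning above the first threshold TL (hypothetical swap rule).
        if potential > t_learn:
            missing = sorted(events - neuron)   # packet events the neuron lacks
            unused = sorted(neuron - events)    # neuron event types absent from packet
            if missing and unused:
                neuron.remove(unused[0])
                neuron.add(missing[0])
        # First output signal above the second threshold TF, second signal otherwise.
        outputs.append(potential > t_fire)
    return outputs
```

Presenting a packet that shares three events with the first neuron and none with the second produces the first output signal only for the first neuron, which also drifts one event type toward the packet; repeated presentations of the same pattern would keep pulling it closer.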

Published Research:

- Unsupervised learning of repeating patterns using a novel STDP based algorithm

Abstract:

Computational vision systems that are trained with deep learning have recently matched human performance (Hinton et al). However, while deep learning typically requires tens or hundreds of thousands of labelled examples, humans can learn a task or stimulus with only a few repetitions. For example, a 2015 study by Andrillon et al. showed that human listeners can learn complicated random auditory noises after only a few repetitions, with each repetition invoking a larger and larger EEG activity than the previous. In addition, a 2015 study by Martin et al. showed that only 10 minutes of visual experience of a novel object class was required to change early EEG potentials, improve saccadic reaction times, and increase saccade accuracies for the particular object trained. How might such ultra-rapid learning actually be accomplished by the cortex? Here, we propose a simple unsupervised neural model based on spike timing dependent plasticity, which learns spatiotemporal patterns in visual or auditory stimuli with only a few repetitions. The model is attractive for applications because it is simple enough to allow the simulation of very large numbers of cortical neurons in real time. Theoretically, the model provides a plausible example of how the brain may accomplish rapid learning of repeating visual or auditory patterns using only a few examples.

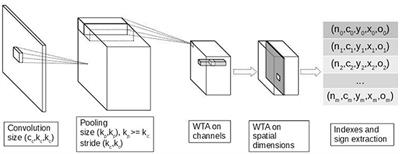

- Unsupervised Feature Learning With Winner-Takes-All Based STDP

Abstract:

We present a novel strategy for unsupervised feature learning in image applications inspired by the Spike-Timing-Dependent-Plasticity (STDP) biological learning rule. We show equivalence between rank order coding Leaky-Integrate-and-Fire neurons and ReLU artificial neurons when applied to non-temporal data. We apply this to images using rank-order coding, which allows us to perform a full network simulation with a single feed-forward pass using GPU hardware. Next we introduce a binary STDP learning rule compatible with training on batches of images. Two mechanisms to stabilize the training are also presented: a Winner-Takes-All (WTA) framework which selects the most relevant patches to learn from along the spatial dimensions, and a simple feature-wise normalization as homeostatic process. This learning process allows us to train multi-layer architectures of convolutional sparse features. We apply our method to extract features from the MNIST, ETH80, CIFAR-10, and STL-10 datasets and show that these features are relevant for classification. We finally compare these results with several other state of the art unsupervised learning methods.

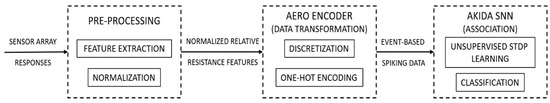

- A Hardware-Deployable Neuromorphic Solution for Encoding and Classification of Electronic Nose Data

Abstract:

In several application domains, electronic nose systems employing conventional data processing approaches incur substantial power and computational costs and limitations, such as significant latency and poor accuracy for classification. Recent developments in spike-based bio-inspired approaches have delivered solutions for the highly accurate classification of multivariate sensor data with minimized computational and power requirements. Although these methods have addressed issues related to efficient data processing and classification accuracy, other areas, such as reducing the processing latency to support real-time application and deploying spike-based solutions on supported hardware, have yet to be studied in detail. Through this investigation, we proposed a spiking neural network (SNN)-based classifier, implemented in a chip-emulation-based development environment, that can be seamlessly deployed on a neuromorphic system-on-a-chip (NSoC). Under three different scenarios of increasing complexity, the SNN was determined to be able to classify real-valued sensor data with greater than 90% accuracy and with a maximum latency of 3 s on the software-based platform. Highlights of this work included the design and implementation of a novel encoder for artificial olfactory systems, implementation of unsupervised spike-timing-dependent plasticity (STDP) for learning, and a foundational study on early classification capability using the SNN-based classifier.

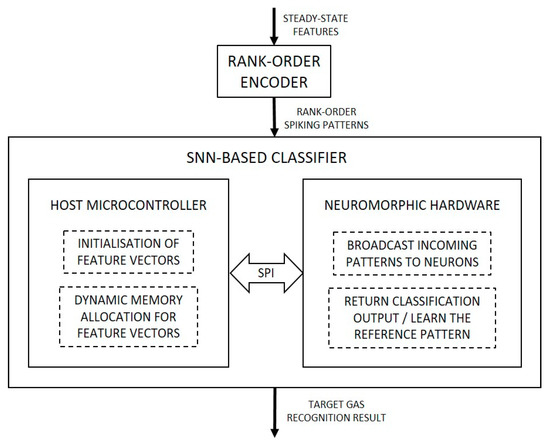

- Real-Time Classification of Multivariate Olfaction Data Using Spiking Neural Networks

Abstract:

Recent studies in bio-inspired artificial olfaction, especially those detailing the application of spike-based neuromorphic methods, have led to promising developments towards overcoming the limitations of traditional approaches, such as complexity in handling multivariate data, computational and power requirements, poor accuracy, and substantial delay for processing and classification of odours. Rank-order-based olfactory systems provide an interesting approach for detection of target gases by encoding multivariate data generated by artificial olfactory systems into temporal signatures. However, the utilization of traditional pattern-matching methods and unpredictable shuffling of spikes in the rank-order impedes the performance of the system. In this paper, we present an SNN-based solution for the classification of rank-order spiking patterns to provide continuous recognition results in real-time. The SNN classifier is deployed on a neuromorphic hardware system that enables massively parallel and low-power processing on incoming rank-order patterns.

Offline learning is used to store the reference rank-order patterns, and an inbuilt nearest neighbour classification logic is applied by the neurons to provide recognition results. The proposed system was evaluated using two different datasets including rank-order spiking data from previously established olfactory systems. The continuous classification that was achieved required a maximum of 12.82% of the total pattern frame to provide 96.5% accuracy in identifying corresponding target gases. Recognition results were obtained at a nominal processing latency of 16 ms for each incoming spike. In addition to the clear advantages in terms of real-time operation and robustness to inconsistent rank-orders, the SNN classifier can also detect anomalies in rank-order patterns arising due to drift in sensing arrays.
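
The combination of rank-order encoding and nearest-neighbour matching against stored references can be illustrated in a few lines of Python. Here a multivariate sensor reading is encoded as the order in which channels "fire" (strongest response first), and a partial order observed so far is compared with stored reference orders. The similarity score (number of ranks at which the prefix agrees with a reference), the gas names and the sensor values are assumptions for illustration, not the metric or data used in the paper.

```python
def rank_order(reading):
    """Channel indices sorted by decreasing response: the strongest channel spikes first."""
    return sorted(range(len(reading)), key=lambda ch: -reading[ch])


def classify_prefix(prefix, references):
    """Nearest-neighbour match of a partial rank order against stored reference orders.

    Similarity = number of positions in the prefix that match the reference at the same rank.
    """
    def score(ref):
        return sum(a == b for a, b in zip(prefix, ref))
    return max(references, key=lambda name: score(references[name]))
```

Classifying from only the first two spikes of a new reading mirrors the paper's point that a decision can be reached from a fraction of the total pattern frame.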

- A Review of Current Neuromorphic Approaches for Vision, Auditory, and Olfactory Sensors

Abstract:

Conventional vision, auditory, and olfactory sensors generate large volumes of redundant data and as a result tend to consume excessive power. To address these shortcomings, neuromorphic sensors have been developed. These sensors mimic the neuro-biological architecture of sensory organs using aVLSI (analog Very Large Scale Integration) and generate asynchronous spiking output that represents sensing information in ways that are similar to neural signals. This allows for much lower power consumption due to an ability to extract useful sensory information from sparse captured data. The foundation for research in neuromorphic sensors was laid more than two decades ago, but recent developments in understanding of biological sensing and advanced electronics, have stimulated research on sophisticated neuromorphic sensors that provide numerous advantages over conventional sensors. In this paper, we review the current state-of-the-art in neuromorphic implementation of vision, auditory, and olfactory sensors and identify key contributions across these fields. Bringing together these key contributions we suggest a future research direction for further development of the neuromorphic sensing field.

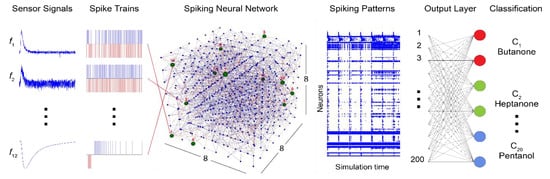

- Application of a Brain-Inspired Spiking Neural Network Architecture to Odour Data Classification

Abstract:

Existing methods in neuromorphic olfaction mainly focus on implementing the data transformation based on the neurobiological architecture of the olfactory pathway. While the transformation is pivotal for the sparse spike-based representation of odour data, classification techniques based on the bio-computations of the higher brain areas, which process the spiking data for identification of odour, remain largely unexplored. This paper argues that brain-inspired spiking neural networks constitute a promising approach for the next generation of machine intelligence for odour data processing. Inspired by principles of brain information processing, here we propose the first spiking neural network method and associated deep machine learning system for classification of odour data. The paper demonstrates that the proposed approach has several advantages when compared to the current state-of-the-art methods. Based on results obtained using a benchmark dataset, the model achieved a high classification accuracy for a large number of odours and has the capacity for incremental learning on new data. The paper explores different spike encoding algorithms and finds that the most suitable for the task is the step-wise encoding function. Further directions in the brain-inspired study of odour machine classification include investigation of more biologically plausible algorithms for mapping, learning, and interpretation of odour data along with the realization of these algorithms on some highly parallel and low power consuming neuromorphic hardware devices for real-world applications.
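
The abstract does not define the "step-wise encoding function". Assuming it resembles step-forward encoding, a common scheme for turning sampled sensor time series into spikes, the idea is to keep a moving baseline, emit a positive spike whenever the signal rises more than a threshold above it, a negative spike when it falls more than the threshold below it, and move the baseline by one threshold step each time. The Python sketch below follows that assumption; the threshold value in the example is arbitrary.

```python
def step_forward_encode(signal, threshold):
    """Encode a sampled signal as +1 / -1 / 0 spikes using a moving baseline."""
    if not signal:
        return []
    baseline = signal[0]
    spikes = [0]                      # no spike at the first sample
    for x in signal[1:]:
        if x > baseline + threshold:
            spikes.append(1)          # rise: positive spike, step baseline up
            baseline += threshold
        elif x < baseline - threshold:
            spikes.append(-1)         # fall: negative spike, step baseline down
            baseline -= threshold
        else:
            spikes.append(0)          # change within threshold: no spike
    return spikes
```

Because the baseline moves by at most one threshold step per sample, a sudden large jump produces a run of consecutive spikes rather than a single one, which keeps the spike train sparse but lets it track slow drifts in sensor response.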

- Neuromorphic engineering — A paradigm shift for future IM technologies

Abstract:

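Recent developments in measurement science have mainly focused on enhancing the quality of measurement procedures, improving their efficiency, and introducing processes that can increase accuracy [1]. Simultaneously, the implementation of intelligent and adaptive distributed systems using the…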

The End.