Recent article outlining Renesas "honing in on AI" for their PMIC.

Highlighted red down bottom of article & last line.

Getting closer...n...closer hopefully.

A Glimpse at a New Wave of Smaller PMICs: AI, Automotive, Wearables, and More

February 26, 2022 by Antonio Anzaldua Jr.

Power management ICs (PMICs) are continually scaling down. Here's how a few companies are packing functionality in these devices in the face of miniaturization.

By 2027, the market for power management integrated circuits (PMICs) is expected to grow to $51.04 billion, making the most impact in industries including automotive, consumer, industrial, and telecommunications.

PMICs control voltage, current, and heat dissipation in a system, so the device can function efficiently without consuming too much power. The PMIC manages battery charging and monitors sleep modes, DC-to-DC conversions, and voltage and current setting adjustments in real time.

Renesas' new PMIC complements two of its microprocessors. Image used courtesy of Renesas

In the past six months, a number of developers have designed compact single-chip PMICs to meet the growing demand for smaller, more power-efficient electronics. Here are a few PMICs designed with automotive, wearables/hearables, and artificial intelligence (AI) in mind.

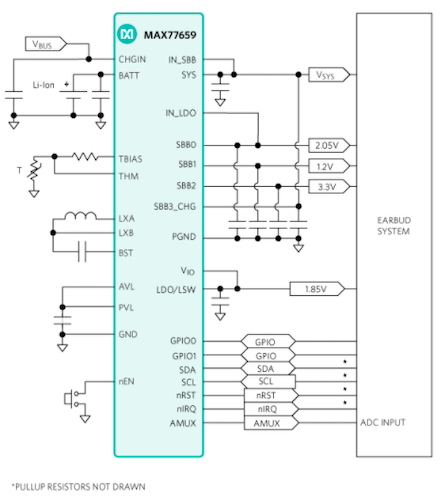

Maxim/ADI's PMIC Integrates Switching Charger for Wearables

Maxim Integrated (now part of Analog Devices) is known for its analog and mixed-signal integrated circuits for the automotive, industrial, communications, consumer, and computing markets. Maxim has an established portfolio of PMICs for both high-performing and low-power solutions.

Maxim Integrated’s MAX77659 SIMO PMIC comes with a built-in 300 mA switching charger to help extend battery lifespan. Image used courtesy of Maxim Integrated

The high-performance PMICs are said to maximize tasks per watt while increasing system efficiency for complex systems-on-chip (SoCs), FPGAs, and application processors. Maxim says the low-power PMICs pack multiple power rails and power management features in a small footprint.

The company recently released a tiny PMIC designed to charge wearables and listening devices four times faster than conventional chargers. This device, the MAX77659, is a single-inductor multiple-output (SIMO) PMIC equipped with Analog Devices’ switch-mode buck-boost charger. Geared for wearables, hearables, and IoT designs, this device is said to provide over four hours of battery life with one 10-minute charge.
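As a rough sanity check on those figures, the sketch below works out how much charge a 300 mA charger could deliver in 10 minutes and what average load that would sustain for four hours. It assumes the charger runs at its full 300 mA for the whole 10 minutes with no losses, which the article does not state, so the results are upper bounds rather than datasheet values. The function names are made up for illustration.

```python
# Back-of-envelope estimate; constant-current, lossless charging is an assumption.
CHARGE_CURRENT_MA = 300.0   # charger rating quoted in the article
CHARGE_TIME_MIN = 10.0      # charge duration quoted in the article
RUNTIME_H = 4.0             # claimed battery life from that charge


def charge_delivered_mah(current_ma: float, minutes: float) -> float:
    """Charge delivered in mAh at a constant current."""
    return current_ma * minutes / 60.0


def average_load_ma(charge_mah: float, runtime_h: float) -> float:
    """Average load current that would drain the charge in runtime_h hours."""
    return charge_mah / runtime_h


charge = charge_delivered_mah(CHARGE_CURRENT_MA, CHARGE_TIME_MIN)
load = average_load_ma(charge, RUNTIME_H)
print(f"Charge delivered: at most {charge:.1f} mAh")
print(f"Implied average load: at most {load:.1f} mA")
```

Under these assumptions the claim implies a device drawing about 12.5 mA or less on average, which is plausible for earbuds or a small wearable.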

This PMIC includes low-dropout (LDO) regulators that provide noise mitigation and help derive voltage from the battery when lighter loads are occurring. Like a load switch, these LDOs disconnect external blocks not in use to decrease power consumption. Two GPIOs and an analog multiplexer are incorporated in the MAX77659 to allow the PMIC to switch between several internal voltages and current signals to an external node for monitoring.

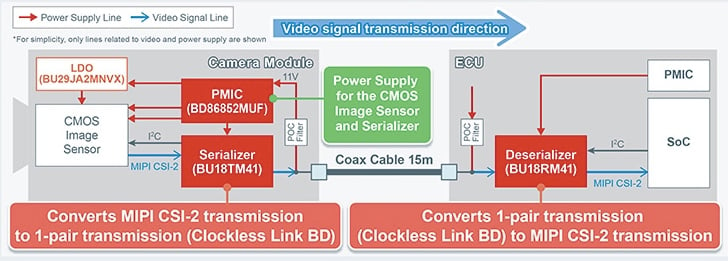

ROHM’s PMIC Aims to Improve ADAS Camera Modules

ROHM Semiconductor believes it is addressing the demand for smaller, more compact PMICs in the automotive realm. Now, the company is targeting this market with PMIC solutions for tiny satellite camera modules. To do this, the company plans on combining its existing SerDes ICs with a new PMIC.

This combo was designed to solve issues revolving around the compact footprints and low power consumption of new camera modules. It also features a new element: low electromagnetic noise (EMI) cancellation. These cameras assist with object detection to ensure the driver’s safety from potential risks around the vehicle.

Through a single IC, the voltage settings and sequence controls can be performed to reduce the mounting area by 41%, according to ROHM. Image used courtesy of ROHM Semiconductor

ROHM says the SerDes IC optimizes the transmission rate based on video resolution and reduces power consumption by approximately 27%. The device is also equipped with a spread-spectrum function that mitigates noise by nearly 20 dB while improving the reliability of images taken by the ADAS cameras.
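To put the 20 dB figure in linear terms, here is a minimal sketch of the standard decibel conversions. The article does not say whether the figure refers to noise power or noise amplitude, so both are shown. The helper names are hypothetical.

```python
# Standard decibel-to-ratio conversions; helper names are illustrative only.
def db_to_power_ratio(db: float) -> float:
    """Linear power ratio for a given decibel value."""
    return 10 ** (db / 10)


def db_to_amplitude_ratio(db: float) -> float:
    """Linear amplitude (voltage) ratio for a given decibel value."""
    return 10 ** (db / 20)


reduction_db = 20.0  # "nearly 20 dB" per ROHM, rounded up here
power_ratio = db_to_power_ratio(reduction_db)
amplitude_ratio = db_to_amplitude_ratio(reduction_db)
print(f"{reduction_db:.0f} dB = {power_ratio:.0f}x in power, "
      f"{amplitude_ratio:.0f}x in amplitude")
```

A 20 dB reduction therefore means the noise power drops to roughly one hundredth, or the noise amplitude to roughly one tenth, of its original level.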

The PMIC is designed to manage the power supply systems of any CMOS image sensor. Using a camera-specific PMIC can help deliver high conversion efficiency that leads to low power consumption. The SerDes IC dissipates heat concentration with an external LDO to supply power to the CMOS image sensor. The close proximity of the image sensor and the LDO decreases the disturbance noise in the power supply.

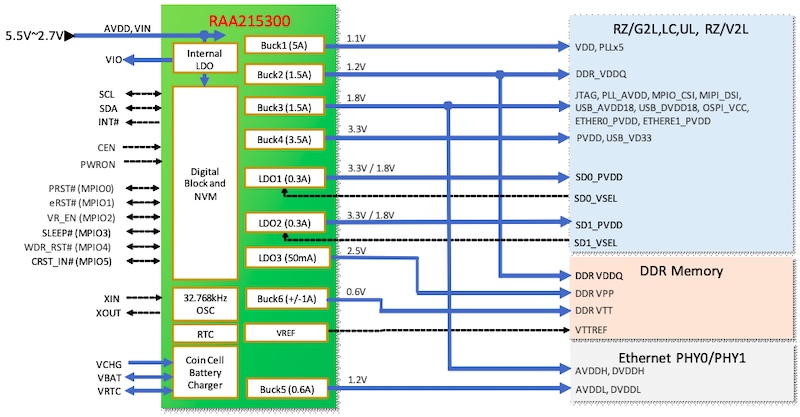

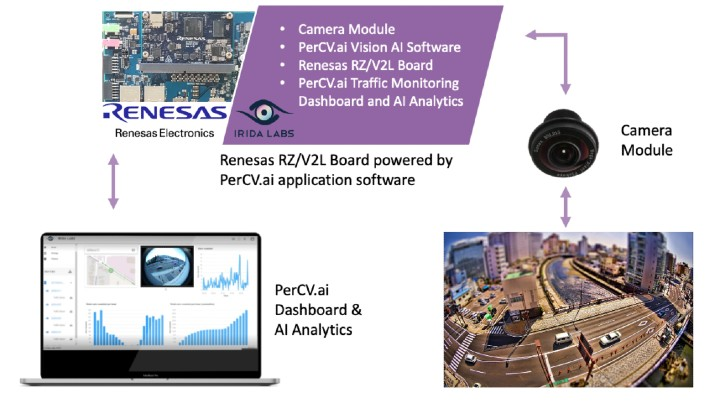

Renesas Hones in on AI With MPU-focused PMIC

Renesas has also announced a new PMIC in the past few months, this one for MPUs. The company says the new PMIC, RAA215300, complements two of Renesas’ 32-bit and 64-bit microprocessors (MPUs) built for AI applications.

The new RAA215300 PMIC enables four-layer PCBs, which is a cost-effective route for developers to take. This higher level of integration also increases system reliability because it doesn't need as many external components on the board.

A stacked solution for MPUs and FPGAs with six high-efficiency buck regulators and three LDOs to ensure complete system power. Image used courtesy of Renesas

Key features for Renesas’ PMIC solution are a built-in power sequencer, a real-time clock, a super cap charger, and an ultrasonic mode for eliminating unwanted audible noise interference found in microphones or speakers. A feature worth highlighting is the fact that this PMIC can support different memory power requirements, ranging from DDR3 to LPDDR4.

A PMIC for Any Application

Some characteristics of PMICs are valuable across the board—for instance, the ability to add separate input current limits and battery current settings. These features simplify monitoring since the current in the charger is independently regulated and not shared among the other system loads.

Each of these PMICs from Maxim, ROHM, and Renesas illustrates a different use case in which PMICs may aid designers.

Maxim Integrated's PMIC incorporates a smart power selector charging component for addressing smaller wearable technologies. This SIMO PMIC also has GPIOs that can communicate to the CPU with signals received from switches or sensors. ROHM targets automotive applications. With an increasing demand for solutions that occupy less space within the ADAS system, ROHM’s PMIC may be of use in small cameras.

Lastly, Renesas’ PMIC was built for AI and MPUs. This device is essentially built like a microscopic tank. The 9-channel PMIC has a larger footprint at 8 x 8 mm; however, it supports DDR memory power requirements and includes a built-in charger and RTC, along with six buck regulators and three LDOs.

www.micro.ai
