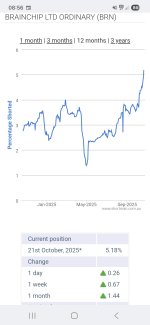

A new joint Arm/SCSP paper, published online a few hours ago, argues the next wave of AI must shift from cloud to edge to cut energy use and cost while improving latency and privacy. It claims edge AI can cut energy use by up to 60% for equivalent workloads.

The paper doesn’t mention “neuromorphic” or BrainChip by name, but it strongly backs the exact themes Akida benefits from - on-device AI, ultra-efficient inference, small language models, and heterogeneous accelerators at the edge.

Their 2025–2029 “Edge AI Evolution Matrix” explicitly calls for on-device generative AI and models that learn locally by 2026–2027, a direct tailwind for low-power, always-on inference like Akida’s (see Matrix below).

They emphasize small language models (SLMs) moving to devices within 12–18 months, and keeping sensitive data on-device for privacy.

IMO this is 100% aligned with BrainChip’s edge-SLM/LLM pitch and is right in Akida 3.0's wheelhouse!

Blog, October 27, 2025

How Efficient AI Can Power U.S. Competitiveness

New joint Arm-SCSP position paper outlines how policy and innovation can accelerate AI energy efficiency

By Vince Jesaitis, Head of Global Government Affairs, Arm

Artificial Intelligence (AI), Sustainability and Social Impact

Artificial intelligence (AI) is reshaping economies, industries and national priorities — and sparking a vital discussion about energy efficiency and infrastructure resilience.

This is the central theme of Smarter at the Edge: How Edge Computing Can Advance U.S. AI Leadership and Energy Security, a joint educational and position paper from Arm and the Special Competitive Studies Project (SCSP) that explores one of the defining challenges of our time: how to scale AI sustainably.

As AI adoption accelerates, the infrastructure that supports it is straining under growing power and resource demands. Without new approaches, the energy cost of progress risks becoming unsustainable. Investment and research into edge AI, alongside data centers, can enable faster, more secure, more cost-effective AI, while easing the pressure on national grids and cloud infrastructure.

The paper builds on Arm’s continued work to promote efficient-by-design IP and compute architecture as the defining measure of progress for the AI era.

AI’s Energy Inflection Point

Generative AI and large language models (LLMs) have transformed how we interact with technology. But each AI query, each model update, and each inference consumes compute power and therefore energy. While the training of massive models often captures headlines, inference (the process of generating outputs for users) now accounts for the majority of AI’s energy use.

Analysts estimate that inference workloads will make up more than 75% of U.S. compute demand in the coming years.

The efficiency gains from advanced processors and optimized workloads are real, but overall AI energy efficiency isn’t keeping pace with the explosive growth in AI activity. If left unchecked, AI’s power demands could outstrip available grid capacity, driving up costs and constraining innovation.

The good news: there are solutions. Edge computing – processing AI closer to where it’s used – offers a path to AI energy efficiency, easing pressure on power grids while strengthening U.S. competitiveness and energy security.

The Strategic Role of the Edge

Edge AI doesn’t replace the cloud; it complements it. Frontier models will still train in large data centers, but inference – where AI meets the real world – can increasingly happen on devices, in factories, hospitals, and local networks.

By combining specialized, energy-efficient hardware with optimized software architectures, edge computing can reduce energy consumption by up to 60% for equivalent workloads. It also provides tangible operational advantages: lower latency, enhanced privacy, and reduced network dependence. These advances make edge AI development and proliferation a strategic advantage.

Edge deployments already power a range of innovations: Autonomous vehicles, industrial robotics, wearable health monitors, smart infrastructure, and the consumer and mobile devices used by billions every day. Each one brings AI closer to the user while reducing the need to move vast amounts of data across energy-hungry networks.

The U.S. government has an essential role to play in accelerating this transition. The White House AI Action Plan, the CHIPS and Science Act, and the Department of Energy’s EES2 initiative and AI Science Cloud all lay groundwork for a more efficient AI ecosystem. But they must be matched with continued investment, smart procurement incentives, and edge AI testbeds for public and critical infrastructure-sector missions like wildfire monitoring and grid management.

Efficiency as a National Advantage

AI leadership will depend on how intelligently and efficiently we can deploy compute across the entire ecosystem – from hyperscale data centers to the billions of edge devices embedded in our daily lives.

Other countries have laid out ambitious programs, preparing for the next phase of AI competition. To stay competitive, the U.S. must pursue a similar strategy, linking innovation in hardware and software with deliberate policy support for AI energy-efficient computing.

About the SCSP

The SCSP is a nonprofit, nonpartisan initiative established to strengthen America’s long-term competitiveness in AI, biotechnology, and other emerging technologies. SCSP’s mission is to ensure that democratic nations lead the next wave of innovation while safeguarding shared values and national security.

Read the Full Paper

To learn more about how AI energy efficiency and edge computing can advance U.S. AI leadership and energy security, read the full Arm–SCSP position paper, Smarter at the Edge, here.

EXTRACT

www.executivebiz.com
