BRN Discussion Ongoing

- Thread starter TechGirl

- Start date

Something new or just a reminder?

Bravo

Meow Meow 🐾

Here's an extract from this article which shows some cybersecurity-related allocations from the One Big Beautiful Bill.

Extract from above article.

ChatGPT says:

Cybersecurity and the “One Big Beautiful Bill”?

- Passed July 4, 2025, it’s a massive reconciliation package with sweeping policy and budget provisions.

- Cybersecurity-related allocations include:

  - $90 million for APEX accelerators and cybersecurity support aimed at small/non-traditional defense contractors.

  - $20 million channelled to DARPA for defense cybersecurity programs.

  - Additional hundreds of millions in DoD IT modernization, including AI-driven threat detection funding.

Could this benefit CNRT (Quantum Ventura + BrainChip + Lockheed)?

- CNRT is a real-time, AI-driven cybersecurity tech using BrainChip’s Akida neuromorphic processor at the edge.

- The $90 million APEX funds are specifically intended to support small, non-traditional defense contractors, which is exactly CNRT’s fit in terms of size and innovation scope.

Will it funnel into CNRT?

- High potential:

  - CNRT aligns perfectly with APEX’s mission: edge-based, AI-powered cybersecurity for critical infrastructure.

  - With DARPA also receiving new funding, the broader cybersecurity ecosystem is stimulated.

- Getting there:

  - CNRT is still early-stage and developing; they’d need to apply via grants or government contracts.

  - Teams usually partner with agencies like DARPA or the DoD’s CISO office to access these pools.

Bottom line

Yes, CNRT is well-positioned to benefit from the bill’s funding framework, especially through APEX and DARPA channels. With the right applications and partnerships, they could substantially accelerate deployment of neuromorphic cybersecurity at the edge, potentially feeding into Dept of Homeland Security, border security, or defense sectors.

Mazewolf

Regular

Ambiq Micro is an IPO-stage US edge AI chip developer and is linked to BRN partner Edge Impulse, so Akida use is possible. They seem to have a strong public customer list according to the article...

Volume is better than yesterday… but still somewhat on the low side, I would say… maybe more gamblers than investors.

People are waiting to see the quarterly.

Yeah, but honestly… what do people expect?

Not a lot at this stage.

I bet all the bigger defense providers are at the least testing Akida. None of them would want to miss the bus.

Interesting... Our partners RTX and Lockheed get a mention here.

Gen. Michael Guetlein noted that he has 60 days to come up with a notional blueprint for Golden Dome. He said: “I owe that back to the deputy secretary of defense in 60 days. So, in 60 days I’ll be able to talk in depth about, hey, this is our vision for what we want to get after for Golden Dome.”

Space Force eyes ‘novel’ development tools for Golden Dome space-based interceptors

Gen. Michael Guetlein, the Pentagon Golden Dome czar, said on Tuesday that the "real technical challenge" for the effort will be building space-based interceptors to knock down enemy missiles in their boost phase.

By Theresa Hitchens on July 23, 2025 at 3:55 PM

An Air Force Global Strike Command unarmed Minuteman III intercontinental ballistic missile launches during an operational test Oct. 29, 2020, at Vandenberg AFB, Calif. (US Air Force photo by Michael Peterson)

WASHINGTON — The Defense Department is considering innovative methods — such as prize contests and industry cooperation — for kick starting development of space-based interceptors (SBIs) under the Trump administration’s ambitious Golden Dome initiative, according to a senior Space Force official.

Maj. Gen. Stephen Purdy, acting head of space acquisition for the Department of the Air Force, told the House Armed Services Committee today that “from initial discussions” with the Pentagon’s new Golden Dome czar, Gen. Michael Guetlein, and his team, it is clear that the primary goal for SBIs is speed.

“They plan to intend to be as fast as possible,” he said. “[T]hey’re looking at really additional novel ideas, like prize activities, [and] cooperative work with industry where they’re leveraging industry development.”

Speaking at a Space Foundation event on Tuesday, Guetlein acknowledged that SBIs are the long pole in the tent for Golden Dome, which is aimed at creating a comprehensive air and missile defense shield over the US homeland.

“I think the real technical challenge will be building of the space-based interceptor. That technology exists. I believe we have proven every element of the physics that we can make it work. What we have not proven is: First, can I do it economically; and then second, can I do it at scale? Can I build enough satellites to get after the threat? Can I expand the industrial base fast enough to build those satellites? Do I have enough raw materials, etc?” he said.

Guetlein further noted that he has 60 days to come up with a notional blueprint for Golden Dome.

“I’ve been given 60 days to come up with the objective architecture,” he said. “I owe that back to the deputy secretary of defense in 60 days. So, in 60 days I’ll be able to talk in depth about, hey, this is our vision for what we want to get after for Golden Dome.”

Air Warfare, Sponsored

Pioneering the future of airborne ISR: ASELSAN’s ASELFLIR 600

Blending superior optics, advanced stabilization and AI-powered autonomy in a compact and rugged system, ASELFLIR 600 delivers unmatched intelligence, surveillance and reconnaissance (ISR) capabilities.

From ASELSAN

As the Space Force moves forward with development of SBIs, Purdy said the service will apply “lessons learned” from recent efforts to shorten acquisition timelines for other space systems by using non-traditional contracting vehicles, such as Other Transaction Authority, Middle Tier Acquisition constructs and Commercial Solutions Openings.

Further, he stressed that in order to take advantage of commercial innovation, it is key that SBI development not be bogged down by “gold standard” requirements developed up front — instead defining necessary capabilities and asking industry how it can provide solutions.

“Broadly speaking, what we are trying to get after [is to] let industry bid to those pieces, and then we have the maturity and flexibility to pick different elements that might have different levels of capability, but each provide their own uniqueness and bring those forward,” Purdy said.

Another key to success that the Space Force has learned in its own acquisition reform efforts is the need to ensure multiple providers, he said.

“[T]he opportunity to have multiple winners in the end game, and not just a one and done, I think, is critical. It allows you to have continued to build that industrial base. It allows you extra resiliency.”

According to a report by Reuters, in particular the Pentagon is looking at how to move away from reliance on SpaceX for both launch services and communications satellites for Golden Dome in the wake of the ongoing feud between President Donald Trump and the company’s founder Elon Musk. Citing several government officials, the report noted that DoD is eyeing Amazon’s Project Kuiper to provide data relay services, and has initiated talks with launch providers Stoke Space and Rocket Lab regarding Golden Dome.

Purdy said that Guetlein and his team have “already hit commercial a couple of times” to discuss SBI development.

A number of companies already are advertising their interest, including big defense firms Lockheed Martin, RTX and Northrop Grumman. Indeed, Northrop Grumman CEO Kathy Warden said Tuesday during an earnings call that the company already is performing ground-based tests on an SBI design that she believes can be accelerated.

I think the biggest worry is that the tech grew without paying customers... all the advancement was pretty much funded by investors, not customers... I wouldn't have been this worried if we were continuously advancing our tech like Apple's or Samsung's new smartphones (where people actually pay money to buy them...), but we have never had anyone paying us significant $$$ or any loyal "paying" customers...

We've always been hearing about EAPs, lots of excitement and excellent feedback, and yet there's nothing in terms of $$$. Yes, it does look like we are finally getting some of the attention we deserve lately, and I do hope that it will lead us to $$$ this time... unlike Merc / NASA / Valeo / Ford / VW, etc...

Yes, we all know where you're coming from. It is frustrating: either we accept that this is how it's going to be for at least another 12 months, or we don't accept it and don't trust our Board to deliver on all the alleged interaction that is apparently taking place. It's not easy to process when your brain is continually throwing up negatives.

You can always sell your shares and accept a loss, or appreciate that the technology is top class and that the market will finally realize that BrainChip's Akida is market-changing, disruptive technology that will benefit millions of people worldwide, especially in the healthcare industry and through the breakthrough technology that Akida will bring to the space industry. We are fast approaching an intersection that nobody wants to enter, and once again it's referred to as change or, more to the point, disruptive technology. Our technology, which Peter first started researching more than 25 years ago, is finally approaching that point in time (within 5 years) where everything he told me and many others will be proven to be true.

Maybe IBM or Intel know something that our company doesn't know. Yes, quantum and its qubits may be a threat further down the road, but the next port of call for humanity is Spiking Neural Networks, and I'm referring to "native SNNs".

Finally, I personally believe that our current partners, like the AFRL and RTX know whose technology is respectfully termed SOTA.

Just my opinion...either way, it's not right nor wrong........take it easy.........Tech x

Frangipani

Top 20

Greetings friends 🙏🏻 While working with low-power neural AI models such as Graph Neural Networks and Spiking Neural Networks, I became curious about neuromorphic processors. These processors perform event-based computation by mimicking the neural networks of the human brain. And only sensor... | Alican Kiraz

www.linkedin.com

Hi @Humble Genius,

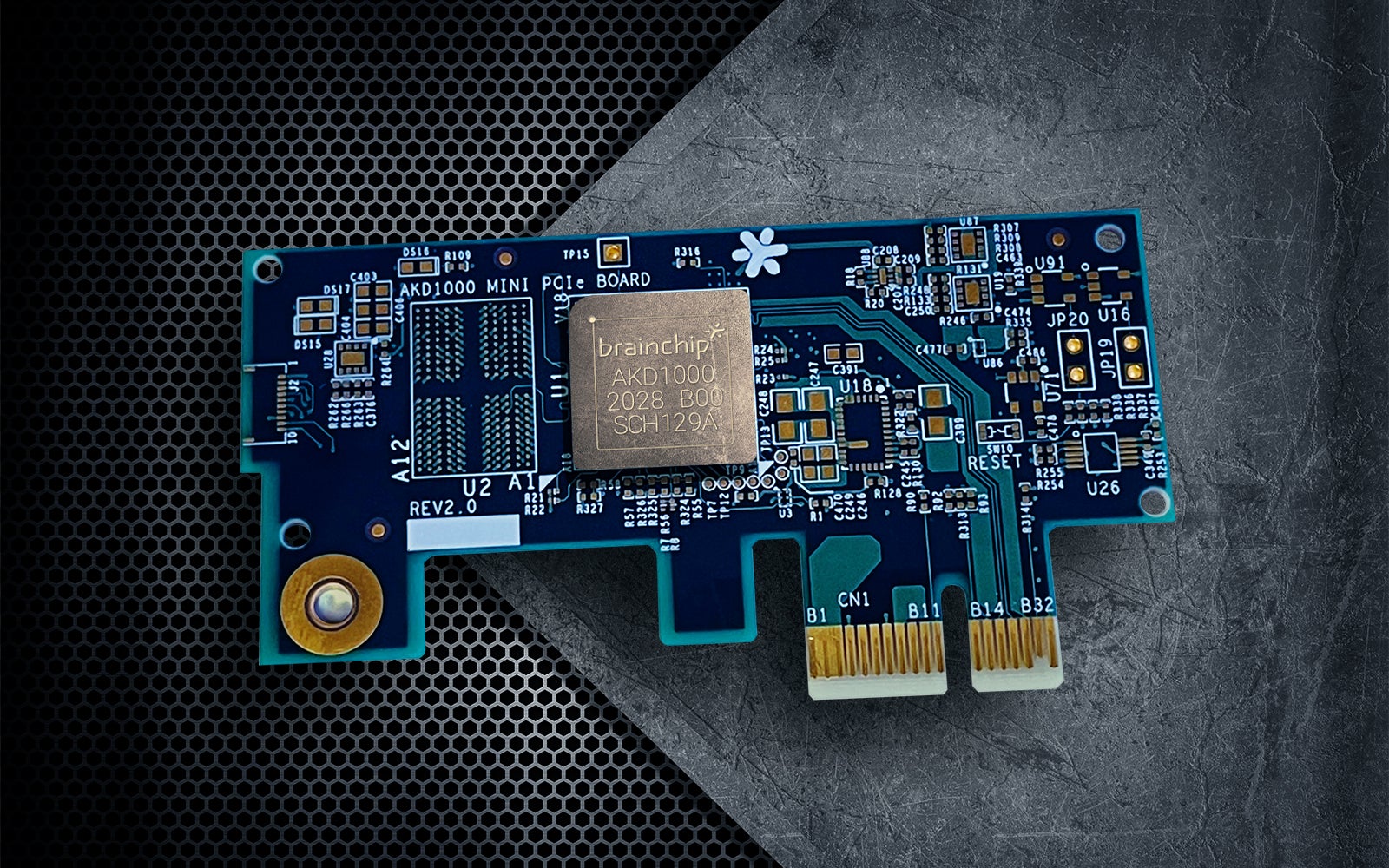

it’s a 2020 picture of an AKD1000 reference board, which must have been the evaluation board our EAP customers received:

BrainChip Confirms Validation of the Akida Neural Processor

Brainchip announces the Company has validated the Akida Neuromorphic System-on-Chip (NSoC) design with functional silicon.

brainchip.com

At the time, our company was planning on releasing AKD1000 both in an M.2 form factor (which eventually happened in early 2025) and in a USB form factor (which hasn’t happened so far).

But for some reason the plans changed:

In January 2022, BrainChip started taking orders for the AKD1000 Mini PCIe Board that we are so familiar with (and that is still being sold via https://shop.brainchipinc.com/ these days, although at a much discounted price: for US$ 289 instead of the original US$ 499).

Brainchip ships first mini PCIexpress board with spiking neural network chip

Brainchip is taking orders for its AIoT mini PCIe board, with a full design layout, using the neuromorphic Akida AKD1000 AI chip

www.eenewseurope.com

When I saw this Akida AKD1000 board I was surprised: It's historic! Go here https://lnkd.in/dxJfPs3b to buy latest AKD1000 eval boards in PCIe and M.2 form factors for below $300 + shipping. | Alf Kuchenbuch

www.linkedin.com

Alican Kiraz has meanwhile received the AKD1000 Mini PCIe Board he had ordered to adapt SNN and GNN (= graph neural network) models for use in robotic arm and autonomous drone projects.

I’m not sure why he calls this an “AKD1000 PCIeM.2 module”, though, as this is evidently not the AKD1000 M.2 form factor that came out in January.

Saying that, we wouldn’t mind him purchasing both form factors, would we?

Google’s translation from Turkish to English:

“Hello friends!

As you know, today's AI projects require high-parameter Transformer-based models and GPUs to reduce the latency between real-time perception and decision-making. However, achieving this with a milliwatt power budget poses a critical engineering challenge for these systems.

To this end, I integrated the BrainChip Akida AKD1000 PCIeM.2 module into the Raspberry Pi 5's PCIe Gen2 x1 lane with a Waveshare PCIe x1, creating a fully edge-driven, event-driven Spiking Neural Network accelerator. This reduces average power consumption by 10x compared to traditional models and accelerates perception-to-decision latency by 5x.

In my research plan, I hope to develop excellent examples of hybrid GNN and SNN architectures using Causal Reasoning in energy-constrained edge applications. Friends pursuing related academic research can easily connect with me on LinkedIn.”

Thanks Bravo, interesting.

What Akida Could Do in Golden Dome-Like Systems

| Capability | Akida’s Role |
|---|---|
| Sensor Fusion | Combine radar, EO/IR, and EW data at the edge for real-time threat analysis |
| Autonomous Launch Decision | Trigger intercepts based on local inference without human or cloud input |
| Threat Signature Adaptation | Learn new missile profiles on-device as adversaries evolve tactics |
| Cybersecurity Hardening | Eliminate cloud APIs and reduce I/O vectors for adversary exploitation |

So this post was 4 months ago. Should we be seeing any revenue from this yet? Or am I reading it wrong.

www.linkedin.com

AI Labs: How Minsky™ and Akida™ improve oil rig maintenance | AiLabs Inc | Ai Labs Inc posted on the topic | LinkedIn

At AI Labs, we implemented an advanced Predictive Maintenance solution for hydraulic oil rigs using our proprietary AI engine Minsky™ and BrainChip’s Akida™ platform. 📋 How It Works: ✅ Real-time sensor data integration (e.g., Pressure, Temperature, Motor Power) ✅ Predictive models using...

Also, this just in… A hospital is using Minsky medical imaging to cut times by a large margin.

www.linkedin.com

Case Study: Enhancing Radiology Diagnostics with AI-Powered Medical Imaging | AiLabs Inc | Ai Labs Inc

Transforming Medical Imaging with AI Radiology departments worldwide are under immense pressure—with increasing scan volumes and limited specialist availability. In our latest case study, discover how AI Labs Inc. partnered with a leading hospital network to revolutionize radiology diagnostics...

Hi Bravo,

This would suit Akida 3 down to the ground, or in orbit.

Blending superior optics, advanced stabilization and AI-powered autonomy in a compact and rugged system, ASELFLIR 600 delivers unmatched intelligence, surveillance and reconnaissance (ISR) capabilities.

This is the ASELFLIR 600 brochure:

https://www.aselsan.com/en/defence/product/2765/aselflir600

ELECTRO-OPTICAL RECONNAISSANCE, SURVEILLANCE AND TARGETING SYSTEM ASELFLIR-600

- COMMON APERTURE WITH PRIMARY MIRROR OF DIAMETER 325 MM
- SINGLE-LRU SYSTEM
- SUPERIOR RANGE PERFORMANCE
- HIGH DEFINITION (1280x1024) HD THERMAL CAMERA
- HIGH DEFINITION (1920x1080) HD DAY TV CAMERA
- HIGH DEFINITION (1280x1024) HD SWIR CAMERA
- MULTI TARGET TRACKING
- LASER TARGET DESIGNATION
- INTERNAL BORESIGHT SYSTEM (IBS)
- SUPER RESOLUTION
- AUTOMATIC TARGET RECOGNITION (ATR)
- HIGH PRECISION 4 AXIS MECHANICAL + 2 AXIS OPTICAL STABILIZATION
- OPERATION IN VERY LOW TEMPERATURES IN HIGH ALTITUDES

Applications

- Targeting
- Reconnaissance and Surveillance

Main Features

- 25-inch Gimbal Diameter
- Common Aperture with Diameter of 325mm, Very Large Aperture for Narrow FOVs and Very Narrow FOVs of HD IR, HD Day TV, HD SWIR Camera
- High definition HD IR Camera (MWIR)
- True “Full High Definition” (1920x1080) HD Day TV Camera with 1920x1080p Video Output
- High Definition HD SWIR Camera
- Laser Spot Tracker (LST)
- Laser Range Finder (LRF)
- Laser Pointer and Illuminator (LPI)
- Single-LRU System
- Superior Range Performance (Longer Range with Larger Optical Aperture)
- Internal Sensor Alignment Unit
- All-Digital Video Pipeline
- Advanced Image Processing
- Multi Target Tracking
- Accurate Target Geo-Location
- 4 Axis Mechanical + 2 Axis Optical Stabilization (Accurate Stabilization)
- Reinforced Mechanical Components
- Improved Thermal Performance
- Automatic Target Recognition (ATR)
- Real Time Target Tracking in HD IR, HD Day TV and HD SWIR Cameras
- Determination of Coarse and Speed of Moving Target
- Inertial Measurement Unit (IMU)
- Automatic Alignment with Platform
- Operation in Very Low Temperatures in High Altitudes

Well here we have high definition imaging, target recognition, AND real time target tracking.

It is worthwhile comparing the 500:

https://wwwcdn.aselsan.com/api/file/ASELFLIR500_ENG.pdf

ELECTRO-OPTICAL RECONNAISSANCE, SURVEILLANCE AND TARGETING SYSTEM ASELFLIR-500

- COMMON APERTURE WITH PRIMARY MIRROR OF DIAMETER 220 MM
- COMPACT AND LIGHT-WEIGHT SYSTEM
- SINGLE-LRU SYSTEM
- SUPERIOR RANGE PERFORMANCE
- HIGH RESOLUTION IR CAMERA
- TRUE FULL HIGH DEFINITION HD DAY TV CAMERA
- HIGH RESOLUTION SWIR NIR CAMERA
- LASER TARGET DESIGNATION
- INTERNAL BORESIGHT SYSTEM (IBS)
- HIGH PRECISION 4 AXIS MECHANICAL + 2 AXIS OPTICAL STABILIZATION
- OPERATION IN VERY LOW TEMPERATURES IN HIGH ALTITUDES
- INNOVATIVE ARTIFICIAL INTELLIGENCE-BASED IMAGE PROCESSING ALGORITHMS IN THERMAL AND DAY TV CHANNELS

Main Features

- Common Aperture with Diameter of 220 mm
- Very Large Aperture for Narrow FOVs and Very Narrow FOVs of HD IR, HD Day TV and HD-SWIR Cameras
- Larger Aperture Means Lighter and Therefore Better Image Quality and Better Range
- Compact and Light-weight system
- Artificial Intelligence Based image Processing Solutions for Thermal and Day TV Channels
- Single-LRU System
- Superior Range Performance
- High Performance HD IR Camera
- True Full High Definition (4096x2880 sensor resolution) HD Day TV Camera 1920x1080p Video Output Without Digital Upscaling
- High Definition SWIR Camera
- Common FOVs for HD IR, HD Day TV and HD SWIR cameras
- Laser Range Finder and Target Designator
- Laser Pointer
- Laser Spot Tracker
- Internal Boresight Unit
- All-Digital Video Pipeline
- Advanced Image Processing
- Multi Target Tracking
- Simultaneous Target Tracking on IR, Day TV and SWIR Videos
- Accurate Target Geo-Location
- Determination of Coarse and Speed of Moving Target
- Inertial Measurement Unit (IMU)
- Accurate Stabilization
- Automatic Alignment with Platform
- Operation in Very Low Temperatures in High Altitudes

The 500 uses AI based image processing algorithms.

The 600 brochure does not refer to algorithms, yet it adds target recognition and real time target tracking. The 500 does determine the "coarse*" and speed of a moving target, which the 600 also does. [*Rough trip?]

The brochure does not mention the CPU, so maybe it connects to a motherboard.

In any event, our concern is whether the improvements in the 600 are due to Akida 3. Certainly Gen. Purdy would have ordered the latest AI to be considered.

Real time tracking is a TENNs speciality.

Automatic target recognition from a satellite in real time is also an essential element of this satellite-based system, hence the high definition optics, and you'd want to be sure of target identification, hence Akida 3's INT16/FP3 to analyse the HD imagery.

FN: I like that they plan to intend to be as fast as possible. It's like hurry up and procrastinate.

PS: OOPS! ASELFLIR are Turkish ...

PPS: That does not rule out their use of Akida, but it rules out US Space Force.

The big question for us longs now has to be whether or not the company can keep going with the massive operating costs and cash burn without diluting our shares to water in the long run. They're probably going to need another capital raise to keep it going if contracts aren't knocking on the door. What is your perspective on this?

(I'm Danish, sorry for any wrong grammar)

Baneino

Regular

The crucial question for us long-term investors in BrainChip is whether the company can continue despite high operating costs and noticeable cash burn without diluting our shares unnecessarily. I am optimistic about it. Of course, a deep-tech company at this stage requires capital; this is completely normal in the commercialization of high technology. What matters is how it is managed. BrainChip is debt-free, which provides a lot of room for maneuver. At the same time, the management is very aware of the capital structure and has repeatedly emphasized that they aim to act strategically and in partnership, not blindly through dilution.

I find the integration of Akida into the Nvidia TAO Toolkit particularly positive. This is a strong signal. Developers who work with Nvidia, and there are many in the AI space, can now directly and easily port their models to Akida. This significantly lowers entry barriers and positions BrainChip in an excellent spot within the global AI developer ecosystem. Even though it's not a formal partnership, this visibility within the Nvidia environment is an important step towards market acceptance.

I am convinced that once the first major licensing or partnership deal comes through, the entire perception will change. The technological foundation is there; now it’s all about timing and execution. If I may say so as a German Hanoverian!

Pom down under

Top 20

Tomorrow will be the perfect moment for a nice, positive, price-sensitive announcement IMO!

Not today… tomorrow… yeah… it would be a nice start to the weekend

GStocks123

Regular

Worth the read!

Neuromorphic Computing Advantages

Moving to Spiking Neural Networks (SNNs) signifies a core alteration from standard neural processing. In contrast to standard neural networks that process continuous values, SNNs utilize discrete spike events for information encoding and transmission. This method yields sparse, event-driven computation that greatly lessens energy use while preserving diagnostic accuracy.

The deployment platform, BrainChip’s Akida (ASX:BRN;OTC:BRCHF) neuromorphic processor, natively supports SNN operations and enables on-chip incremental learning. This capability allows the system to adapt to new patient data without requiring complete model retraining, aligning with clinical workflows that continuously encounter new diagnostic cases.

Spike-Compatible Architecture Design

The architecture incorporates specific design elements that facilitate seamless conversion from standard neural networks to spike-based processing. The spike-compatible feature transformation module employs separable convolutions with quantization-aware normalization, ensuring all activations remain within bounds suitable for spike encoding while preserving diagnostic information.

The Squeeze-and-Excitation blocks implement adaptive channel weighting through a two-stage bottleneck mechanism, providing additional regularization particularly beneficial for small, imbalanced medical datasets. The quantized output projection produces SNN-ready outputs that can be directly processed by neuromorphic hardware without additional conversion steps.
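For anyone wondering what "adaptive channel weighting through a two-stage bottleneck" means in practice, here is a minimal pure-Python sketch of a generic Squeeze-and-Excitation block. It is not QANA's actual implementation: the article does not give layer sizes, so the function name `squeeze_excite`, the weights `w1`/`w2`, and the toy feature map are all hypothetical and chosen only for illustration.

```python
import math

def squeeze_excite(fmap, w1, w2):
    """Generic SE block (illustrative, not the QANA code).

    fmap: list of C channels, each a list of rows (H x W).
    w1:   reduce weights, shape (C/r) x C   -- hypothetical values
    w2:   expand weights, shape C x (C/r)   -- hypothetical values
    """
    # Squeeze: global average pool, one scalar per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    # Stage 1 of the bottleneck: reduce to C/r values, then ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    # Stage 2: expand back to C values, then sigmoid to get gates in (0, 1).
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Excite: rescale every value in a channel by that channel's gate.
    scaled = [[[v * g for v in row] for row in ch] for ch, g in zip(fmap, gates)]
    return scaled, gates

# Toy input: 4 channels of 2x2 values, reduction ratio r = 2 (assumed).
fmap = [[[1.0, 2.0], [3.0, 4.0]],
        [[0.5, 0.5], [0.5, 0.5]],
        [[-1.0, 0.0], [1.0, 2.0]],
        [[4.0, 4.0], [4.0, 4.0]]]
w1 = [[0.1, -0.2, 0.3, 0.05], [-0.1, 0.2, 0.1, 0.0]]
w2 = [[0.5, -0.5], [1.0, 0.2], [-0.3, 0.8], [0.0, 0.4]]

scaled, gates = squeeze_excite(fmap, w1, w2)
print([round(g, 3) for g in gates])
```

The point is that each channel gets a single learned gate between 0 and 1, so the network can turn down uninformative channels, which is where the regularization effect mentioned in the extract comes from.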

Comprehensive Performance Validation

Experimental validation was conducted on both the HAM10000 public benchmark dataset and a real-world clinical dataset from Hospital Sultanah Bahiyah, Malaysia. The clinical dataset comprised 3,162 dermatoscopic images from 1,235 patients, providing real-world validation beyond standard benchmarks.

On HAM10000, QANA achieved 91.6% Top-1 accuracy and 82.4% macro F1 score, maintaining comparable performance on the clinical dataset with 90.8% accuracy and 81.7% macro F1 score. These results demonstrate consistent performance across both standardized benchmarks and clinical practice conditions.

The system showed balanced performance across all seven diagnostic categories, including minority classes such as dermatofibroma and vascular lesions. Notably, melanoma detection achieved 95.6% precision and 93.3% recall, critical metrics for this potentially life-threatening condition.
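As a quick sanity check on the numbers above, the melanoma precision and recall can be combined into a per-class F1 score with the standard formula. The sketch below uses only the two melanoma figures from the extract; the helper names `f1_score` and `macro_f1` are my own, and the per-class scores for the other six categories are not given in the extract, so the macro average itself is not recomputed here.

```python
def f1_score(precision, recall):
    # Harmonic mean of precision and recall.
    return 2 * precision * recall / (precision + recall)

def macro_f1(per_class_f1):
    # Macro F1: unweighted mean of per-class F1, so rare classes
    # count as much as common ones.
    return sum(per_class_f1) / len(per_class_f1)

# Melanoma figures quoted in the extract.
melanoma_f1 = f1_score(0.956, 0.933)
print(round(melanoma_f1, 3))  # 0.944
```

Because macro F1 weights all seven categories equally, weaker scores on minority classes pull it down, which is consistent with the 82.4% macro F1 sitting below the 91.6% Top-1 accuracy.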

Hardware Performance and Energy Analysis

When deployed on Akida hardware, the system delivers 1.5 millisecond inference latency and consumes only 1.7 millijoules of energy per image. These figures represent reductions of over 94.6% in inference latency and 98.6% in energy consumption compared to GPU-based CNN implementations.

Comparative analysis against state-of-the-art architectures converted to SNNs showed QANA’s superior performance across all metrics. While conventional architectures experienced accuracy drops of 3–7% after SNN conversion, QANA maintained high accuracy through its quantization-aware design principles.
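The extract gives the Akida latency and energy figures plus the percentage reductions, but not the GPU numbers they were compared against. Those can be back-calculated, as a rough sketch: if the new value is a fraction (1 - reduction) of the baseline, then baseline = value / (1 - reduction). The function name `implied_baseline` is my own, and the results are inferred from the quoted percentages, not figures stated in the article.

```python
def implied_baseline(value, reduction):
    # If `value` is what remains after cutting the baseline by `reduction`
    # (a fraction, e.g. 0.946 for 94.6%), recover the baseline.
    return value / (1.0 - reduction)

# Akida figures and reductions quoted in the extract.
gpu_latency_ms = implied_baseline(1.5, 0.946)
gpu_energy_mj = implied_baseline(1.7, 0.986)

print(round(gpu_latency_ms, 1))  # 27.8
print(round(gpu_energy_mj, 1))   # 121.4
```

So the comparison implies a GPU baseline of roughly 28 ms and 121 mJ per image, and since the extract says "over" for the reduction, the real baselines would be at least that.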
