Frangipani


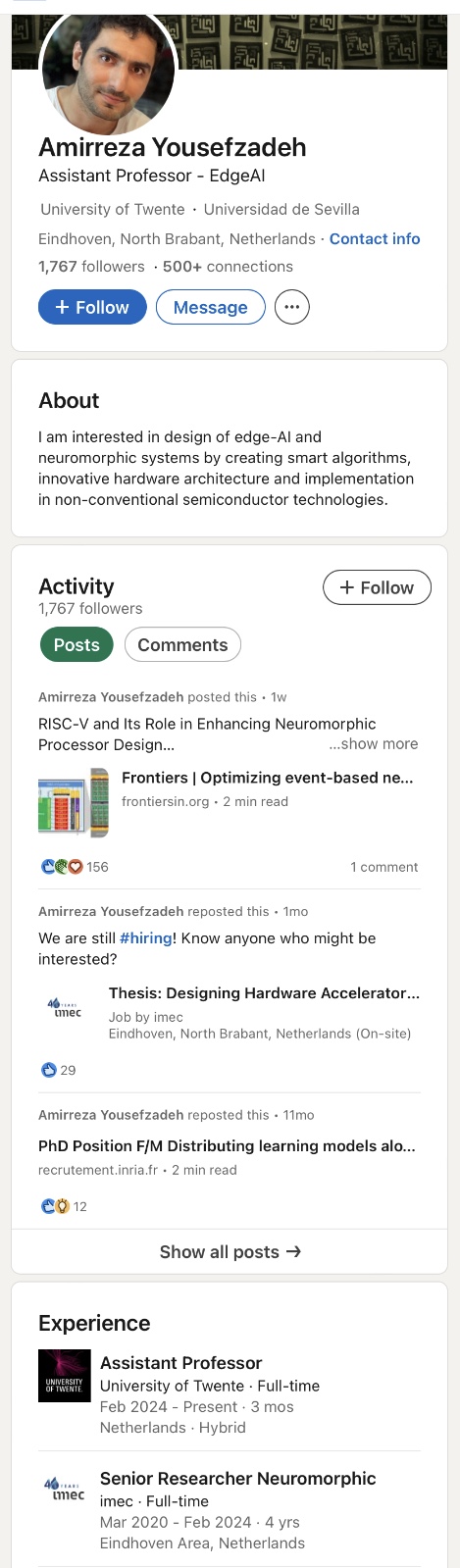

A new Brains & Machines podcast episode is out. The latest guest was Amirreza Yousefzadeh, who co-developed the digital neuromorphic processor SENECA at imec The Netherlands in Eindhoven before joining the University of Twente as assistant professor in February 2024.

Here is the link to the podcast and its transcript:

BrainChip is mentioned a couple of times.

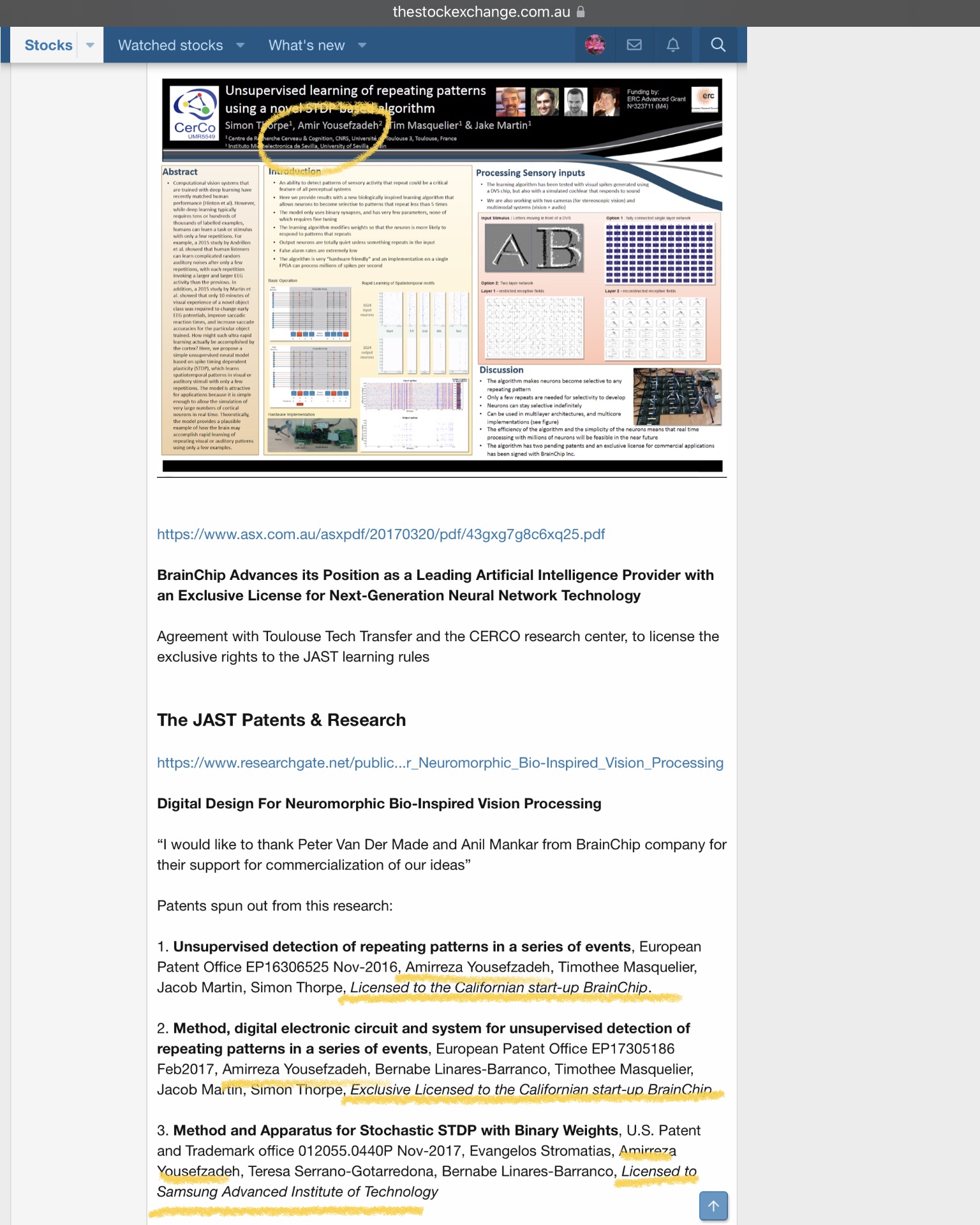

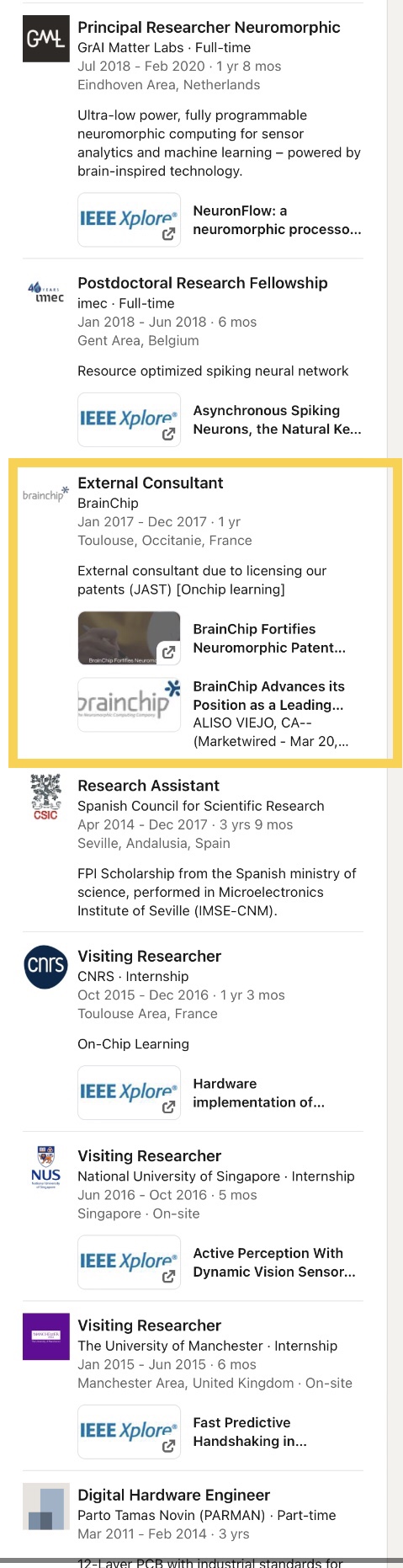

Throughout 2017, towards the end of his PhD, Yousefzadeh collaborated with our company as an external consultant. His PhD supervisor in Sevilla was Bernabé Linares-Barranco, who co-founded both GrAI Matter Labs and Prophesee. Yousefzadeh was one of the co-inventors (alongside Simon Thorpe) of the two JAST patents that were licensed to BrainChip (Bernabé Linares-Barranco was co-inventor of one of them, too).

https://thestockexchange.com.au/threads/all-roads-lead-to-jast.1098/

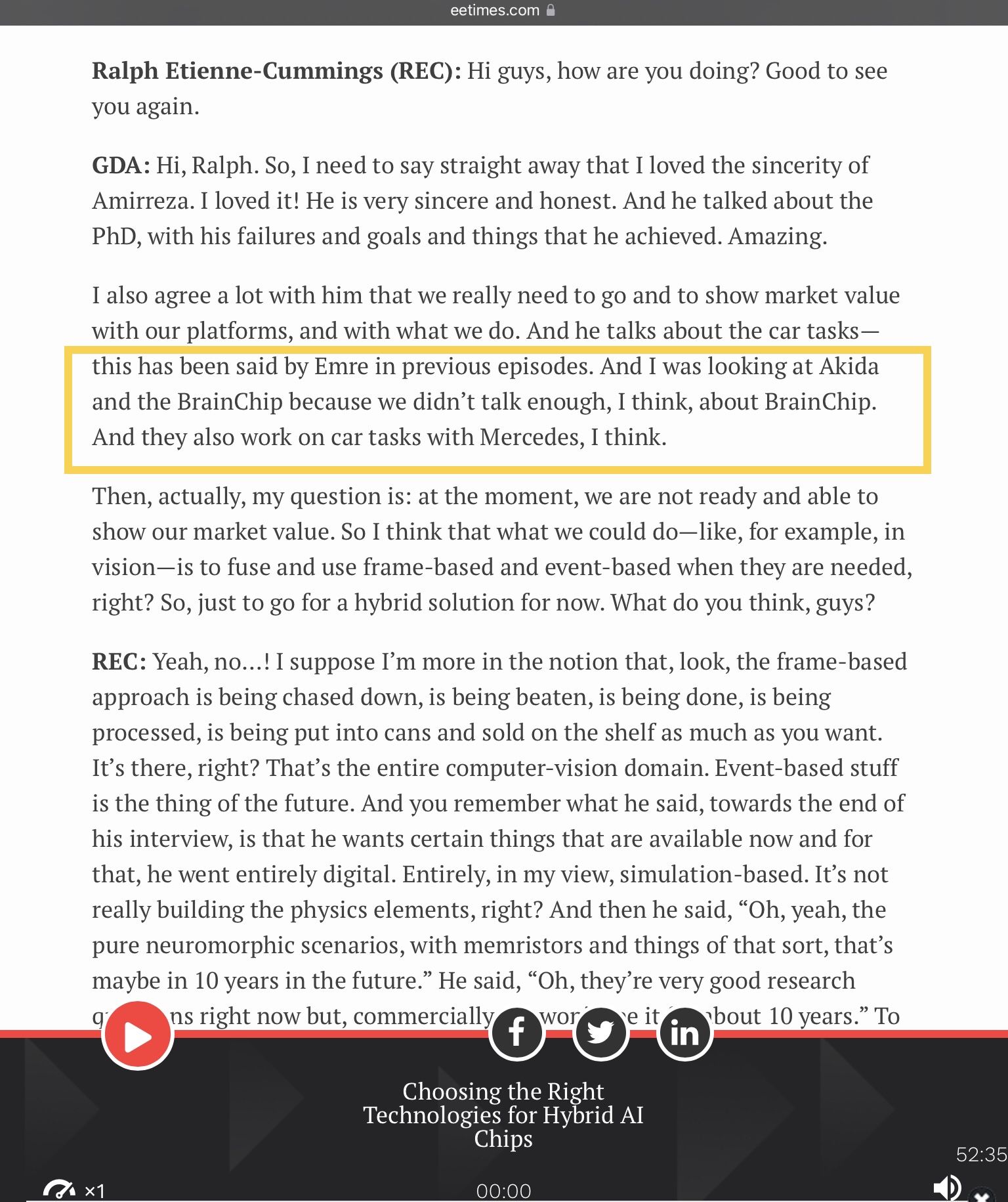

Here are some interesting excerpts from the podcast transcript, some of them mentioning BrainChip:

(Please note that the original interview took place some time ago, when Amirreza Yousefzadeh was still at imec full-time, i.e. before Feb 2024. Podcast host Sunny Bains mentions this at the beginning of the discussion with Giulia D’Angelo and Ralph Etienne-Cummings and again towards the end of the podcast, when there is another short interview update with him.)

Sounds like we can finally expect a future podcast episode featuring someone from BrainChip?

(As for the comment that “they also work on car tasks with Mercedes, I think”, I wouldn’t take that as confirmation of any present collaboration, though…)

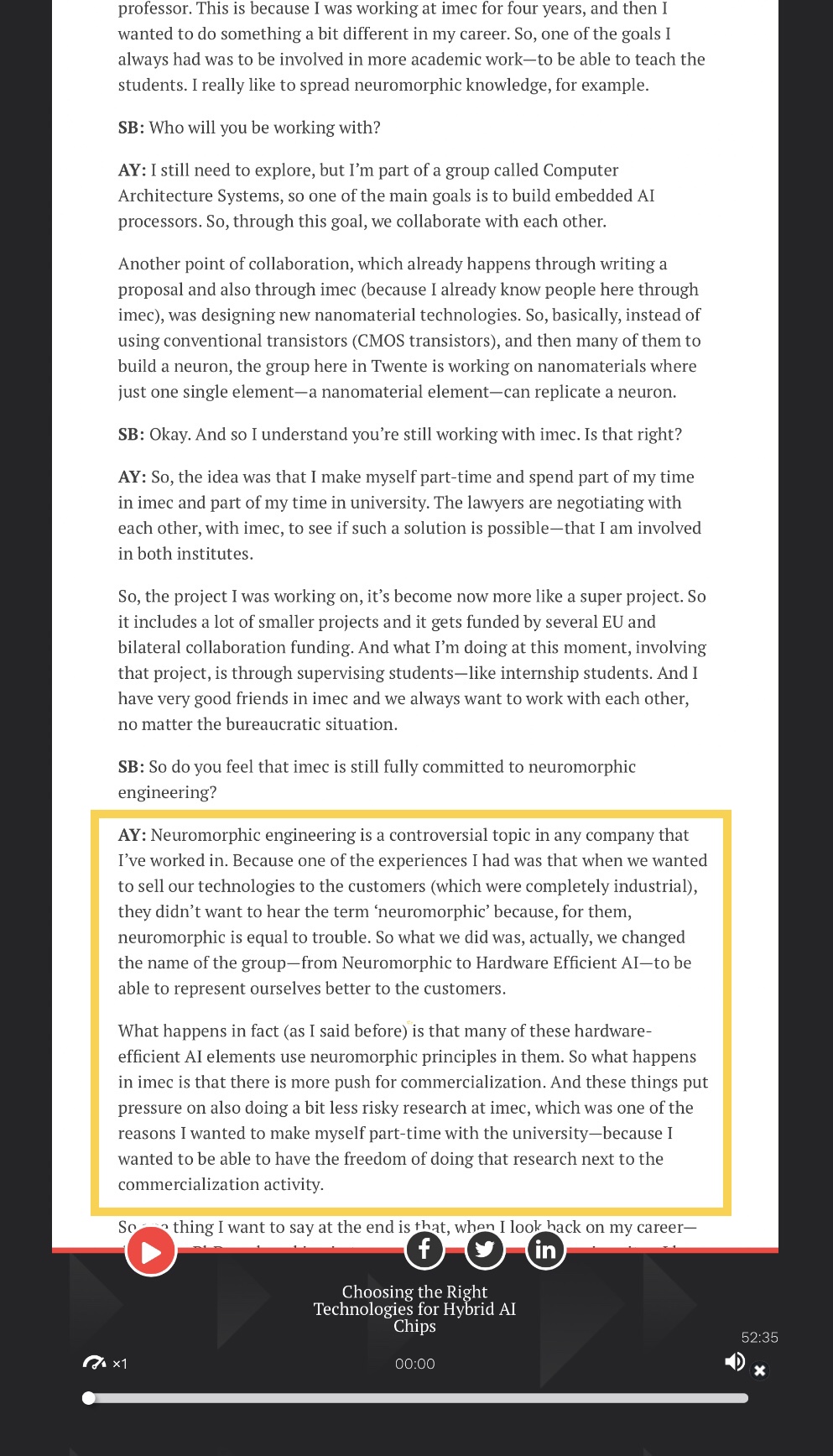

Interesting comment on prospective customers not enamoured of the term “neuromorphic”…
