Mt09


Apparently this is what will be incorporated, going by the podcast the other day.

Apple in talks with Google to bring Gemini AI to iPhones

Does anyone know anything about this?

It was reported on Bloomberg just now.

I knew the Unigen Cupcake AI Edge Server was in full production, but is it available yet? Not sure how long these things take to produce!

Cupcake Edge AI Server in Full Production - Unigen

Unigen Corporation proudly announces the successful production launch of its highly anticipated Cupcake Edge AI Server. The first units have been produced at our cutting-edge facilities in Hanoi, Vietnam, and Penang, Malaysia, marking a significant milestone in Unigen’s commitment to delivering... (unigen.com)

Cannot find any info other than this. I did email their sales team and was told it would more likely be available through their distributors. No timeframe was provided.

Hoping for a surprise in the next quarterly, as today marks 9 years since I purchased my first parcel of shares. I never expected to hold this long, nor for the share price to still be this low, but I am hoping to celebrate next year’s anniversary in style.


The silver lining is that I have been able to accumulate a lot more at very low prices over time.

A big shout out to all of the other patient holders who have been here just as long!

I’m wondering what the average number of shares people hold is.

I’m also wondering whether I hold enough.

What’s the ideal amount one should hold?

What’s the amount that will see amazing growth moving forward?

I concur: as long as one’s holding is below 5% of the floated stock, you’re laughing. Take the calculator and play with your figures: assume different share prices and do your calculations.

In my situation, I will have enough money to live very comfortably and stop working at a share price (SP) of about 20 EUR, but even 7 EUR would be enough for a nice place to live. To improve it further, I am still buying more shares.

When we run out, we'll refer them to MegaChips, who have a version in 22nm FDSOI (fully depleted silicon on insulator).

Something I don't really understand.

If a small electronics/space company wants to buy, say, 1,000 Akida 2 chips for its own in-house development, how does it do that?

I read somewhere that you can buy Akida 1.0 but not 2.0?

Most companies will not be interested in buying IP unless they sell/develop chips themselves, but I could be wrong.

Thanks, but still only 1.0 or 1500, right?

When we run out, we'll refer them to MegaChips, who have a version in 22nm FDSOI (fully depleted silicon on insulator).

FDSOI is more power-efficient than vanilla (bulk) CMOS because its insulating buried oxide layer reduces leakage current.

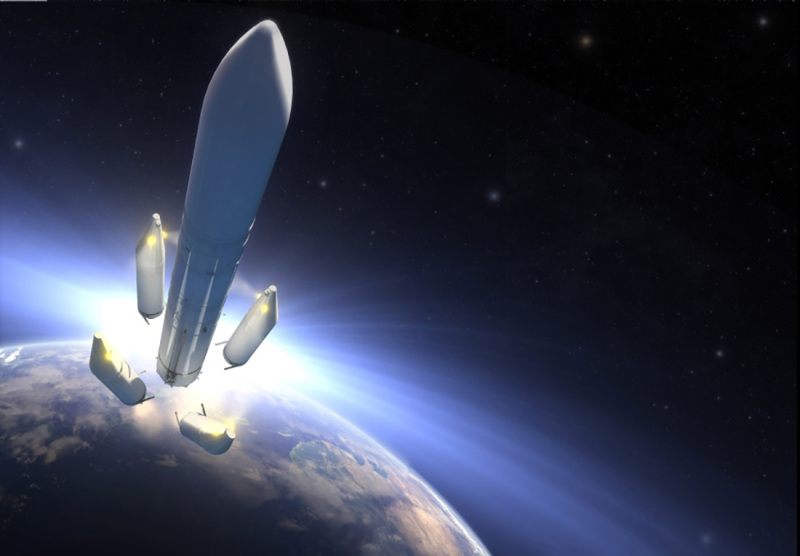

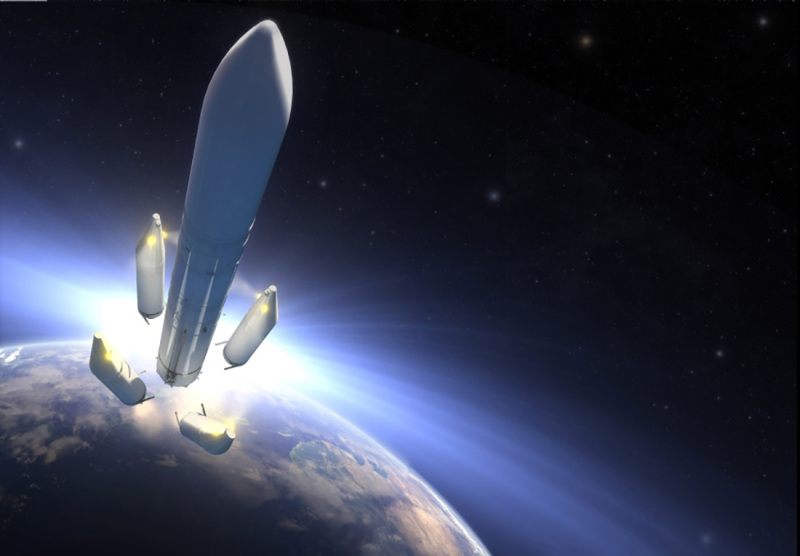

Was that graphic already commented on? OHB is not a household name … it might become one soon.

Yes, sorry, I was half asleep.

Thanks, but still only 1.0 or 1500, right?

Good morning Frangipani,

At least one forum member appears to have found @Berlinforever’s post so ridiculous that he/she/they felt the need to comment with an emoji?!


While OHB may not end up becoming a household name among the general public, the emoji poster(s) seem(s) to totally underestimate the parent company’s reputation and significance within the aerospace industry. OHB SE (the multinational space and technology group that wholly owns OHB Hellas) is actually one of Europe’s leading space tech companies. It is headquartered in Bremen, Germany, a city not only well known for the fairy tale The Town Musicians of Bremen, but also one of Europe’s hubs for the aeronautics and space industry.

The top five factors behind Bremen’s success as an aerospace hub

Aeroplane wings, Ariane rockets and Galileo satellites – Bremen is one of the leading locations in the international aerospace industry. Here are five factors behind B… (www.wfb-bremen.de)

I recommend that the Doubting Thomas(es) (and everyone else) have a look at OHB’s image brochure, their latest corporate report, and their website (https://www.ohb.de/en/corporate/milestones) to see how Christa Fuchs, her late husband Manfred Fuchs and their team “turned the small hydraulics company OHB into a global player in the international space industry.” The initialism OHB originally stood for “Otto Hydraulik Bremen”; in 1991 the company was officially renamed “Orbital- und Hydrotechnologie Bremen-System GmbH”.


They are right up there with Europe’s aerospace giants Airbus Defence & Space (formerly Astrium) and Thales Alenia Space, fiercely bidding to secure contracts for satellites etc. (OHB lost out to both Airbus D&S and Thales Alenia Space in securing the contract for the now ongoing construction of the second generation (G2) of Galileo satellites, after having been the main supplier for the first generation: https://www.reuters.com/technology/...about-galileo-satellites-contract-2023-04-26/).

At other times, however, they also collaborate with their main competitors (https://www.ohb.de/en/news/esas-pla...stem-ag-readies-for-integration-of-26-cameras).

In 2018, the Hanseatic city of Bremen even renamed the square in front of the company’s headquarters in honour of Manfred Fuchs (1938-2014), to commemorate the engineer and entrepreneur’s significant contribution to the development of the aerospace industry in Bremen.

Being a household name is not necessarily part of what defines a successful global player. Just think of Arm (especially before the IPO).

We as BRN shareholders should always keep that in mind…


Evening Diogenese,

Yes, sorry, I was half asleep.

That could be right - it seems there is a mysterious customer (or group of customers) upon whose toes we wish not to tread.

If we cancelled the tape-out of Akida 2, then it may be that someone quite large is doing it under NDA. Remember: "Loose lips sink ships".