
BRN Discussion Ongoing

- Thread starter TechGirl


wilzy123

Founding Member

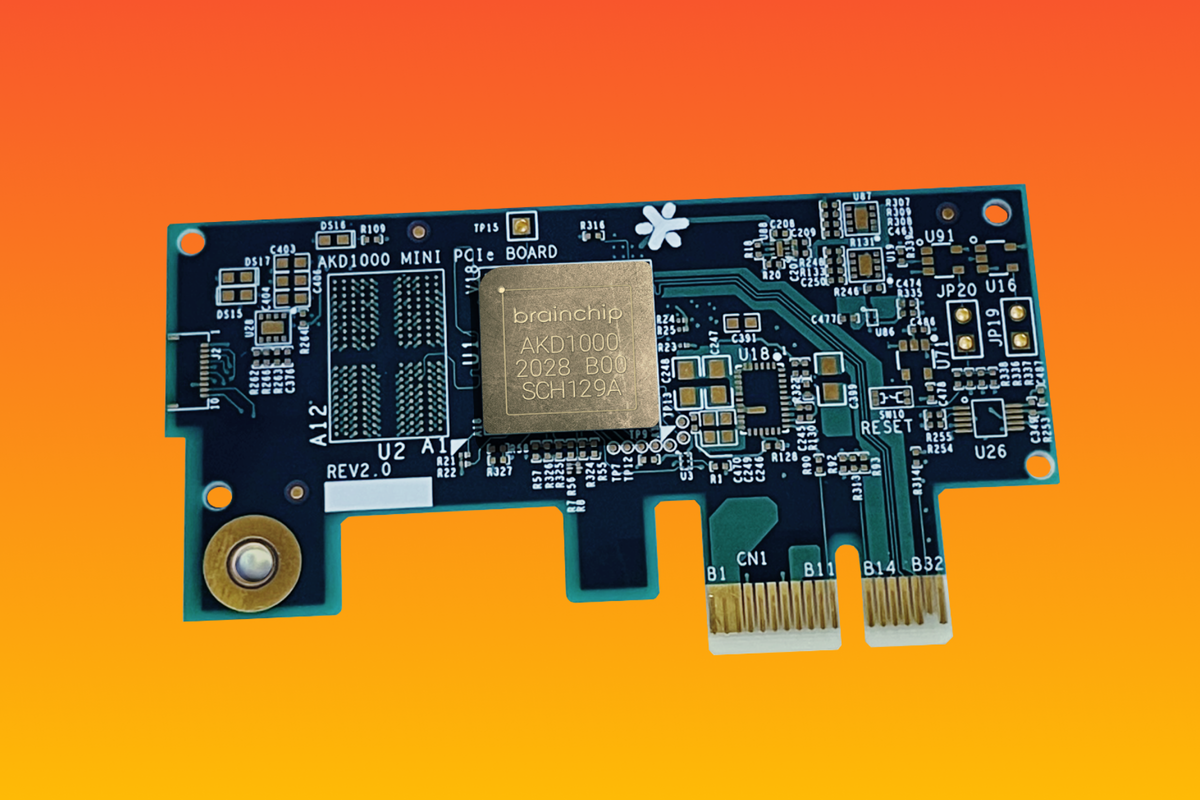

"All told I am very impressed with the BrainChip Akida neuromorphic processor. The performance of the networks implemented is very good, while the power used is also exceptionally low. These two parameters are critical parameters for embedded solutions deployed at the edge."

Benchmarking Akida with Edge Impulse: A Validation of Model Performance on BrainChip's Akida Platform

See how BrainChip's Akida and Edge Impulse perform when it comes to visual wake word detection, keyword spotting, anomaly detection, and CIFAR classification.

www.edgeimpulse.com


Top 200 and 300 are not just based on current market cap. It's an average over a longer period of time.

Something doesn't smell right as far as the short position is concerned..

By all accounts (just my opinion) we should have been kicked out of the ASX 300 in the June rebalancing.

Someone had said (which sounded reasonable) that once you are in an index, you are in for 12 months, no matter what, to reduce volatility.

That explains us surviving at least 2 rebalances, but the June rebalance is a bit of a mystery to me..

Unless there were no other companies that had grown significantly in market cap and trading volume at that time to warrant inclusion?..

With such a large short position, what would the effect have been on the shorters, who would have had to buy back shares as funds and institutions needed them returned to sell, since they no longer needed them for index weighting?

Yes, there would be selling pressure from the funds, but first there would be buying pressure from the shorters..

If that happened at a time of turnaround in the Company's "progress", as measured by the market, it could be a real spectacle to watch..


Are they sleeping well at night and being cool cucumbers?

Who knows, but it's a dance I'd want to sit out..

Many tech companies, especially in the US, where they "should" be better understood, owe their magnificent market capitalisations to the narrow-mindedness of shorting entities.

Something to keep in mind, as we wallow in the current share price predicament.

Also, liquidity is taken into account. BRN is certainly extremely liquid in terms of turnover. If we are below some companies in terms of market cap, we may still be included in the Top 300 above them due to greater liquidity.


Chiplets: Deep Dive Into Designing, Manufacturing, And Testing

EBook: Chiplets may be the semiconductor industry’s hardest challenge yet, but they are the best path forward.

semiengineering.com

AMD's Unannounced MI300C AI Accelerator Emerges

Third and unannounced variant of the MI300

supersonic001

Regular

Benchmarking Akida with Edge Impulse: A Validation of Model Performance on BrainChip's Akida Platform | Adam Taylor

A slightly different blog: with the Edge Impulse team, I have been looking at the BrainChip Akida platform and validating model performance across a range of applications, including Visual Wake Words, CIFAR10, Anomaly Detection and Keyword Detection. https://lnkd.in/eaAkAu9f

equanimous

Norse clairvoyant shapeshifter goddess

Just going to put this out there....

How many are sitting on a pile of cash just waiting for an uptick in the share price?

I for one am just sitting and waiting to see the manipulation stop so we can once again push to where BrainChip's real valuation should be. I know it's a bargain ATM, but with all that is going on and 133M of shorts, the big players surely want to make a pile on the way down and also on the way up.

Google Is Using AI to Make Hearing Aids More Personalized

A new partnership between Google and an Australian hearing coalition is using machine intelligence to improve the customizability of hearing aids and cochlear implants.

Falling Knife

Regular


7 August 2023

Exploring Neuromorphic Computing: The Next Big Thing in Telecommunications

Neuromorphic computing, a revolutionary technology that mimics the human brain’s neural architecture, is poised to become the next big thing in telecommunications. This innovative technology is set to transform the industry by enhancing data processing capabilities, improving network efficiency, and enabling advanced artificial intelligence (AI) applications.

Neuromorphic computing is a subset of AI that uses very large scale integration systems containing electronic analog circuits to mimic neuro-biological architectures present in the nervous system. In simpler terms, it’s a technology that replicates the human brain’s ability to respond and adapt to information. This technology’s potential to process vast amounts of data in real-time, with minimal power consumption, makes it a game-changer for the telecommunications industry.

The telecommunications industry is a data-intensive sector that requires high-speed processing and transmission of large volumes of data. Traditional computing systems, while powerful, often struggle to keep up with the increasing demand for real-time data processing and transmission. Neuromorphic computing, with its ability to process data in real-time, offers a solution to this challenge. It can significantly enhance the speed and efficiency of data processing and transmission, leading to improved network performance and customer experience.

Moreover, neuromorphic computing can also play a crucial role in advancing AI applications in telecommunications. AI is increasingly being used in the industry for various purposes, such as network optimization, predictive maintenance, customer service, and fraud detection. However, the effectiveness of these applications is often limited by the processing capabilities of traditional computing systems. Neuromorphic computing, with its superior processing capabilities, can enable more advanced and effective AI applications. For instance, it can facilitate real-time network optimization, leading to improved network performance and reliability.

Furthermore, neuromorphic computing can also contribute to energy efficiency in telecommunications. Traditional computing systems consume a significant amount of energy, which not only increases operational costs but also contributes to environmental pollution. Neuromorphic computing, on the other hand, requires minimal power to operate. This can significantly reduce energy consumption in the telecommunications industry, leading to cost savings and a smaller environmental footprint.

However, despite its potential, the adoption of neuromorphic computing in telecommunications is still in its early stages. There are several challenges that need to be addressed, such as the high cost of neuromorphic chips and the lack of skilled professionals in the field. Moreover, there are also concerns about the ethical implications of using technology that mimics the human brain.

Nevertheless, the potential benefits of neuromorphic computing for the telecommunications industry are too significant to ignore. As the technology matures and becomes more accessible, it is expected to play an increasingly important role in the industry. In fact, some industry experts predict that neuromorphic computing could become as fundamental to telecommunications as the internet itself.

In conclusion, neuromorphic computing represents a significant technological advancement with the potential to revolutionize the telecommunications industry. By enhancing data processing capabilities, improving network efficiency, and enabling advanced AI applications, it can significantly improve the performance and competitiveness of telecommunications companies. As such, it is indeed poised to become the next big thing in telecommunications.

The passage that screams "ubiquitous" is this:

Loving the build-up; so much is happening it's hard to keep up.


DingoBorat

Slim

Hey Manny, yeah, I know liquidity is a factor and I mentioned that in my post, but we are well, well below the Top 300 market cap, and it seems odd that none of those companies have greater turnover.


Maybe they haven't met the conditions, for the required time period?

Bravo

Meow Meow 🐾

Call me weird if you must, but I'm getting the feeling that Arm might leverage our partnership to help it achieve its IPO target valuation of $70 - 80 billion. Remember back in April we heard that Arm was planning to manufacture its own prototype chip, a more advanced processor not seen before (hint, hint, nudge, nudge = Arm Cortex + AKIDA). Well, imagine if Arm launches this new prototype (read Arm Cortex-M85 + AKIDA 2000, for example) at the roadshow, which is scheduled to start in the first week of September with pricing for the IPO to come the following week! The prototype was expected to be used only to showcase what is possible, since it was reported that Arm apparently had no plans to license or sell it as a product. So what better time to showcase this fancy, new, whiz-bang advanced processor than at the roadshow in the lead-up to an IPO that promises to be bigger than Ben-Hur's helmet!!!

I fully acknowledge that I'm in the habit of seeing AKIDA behind almost every pot plant but I'm hopeful that this prediction might not be completely outside the realms of possibility.

DYOR and don't forget to brush your hair and teeth.

B


mrgds

Regular

Yes @Bravo, I can fully see why the "hairs on your toes are standing up"! (Now that's "weird".)


In our upcoming livestream, Nikunj Kotecha and Joshua Buck will discuss BrainChip and Edge Impulse's collaborative effort in building an ecosystem for accelerated edge AI products. The session will… | Edge Impulse

In our upcoming livestream, Nikunj Kotecha and Joshua Buck will discuss BrainChip and Edge Impulse's collaborative effort in building an ecosystem for accelerated edge AI products. The session will take a deep dive into BrainChip’s Akida neuromorphic hardware and provide a framework for...

Cartagena

Regular

Good Morning Chippers,

Morning trade.. Someone just got their BUY order filled for 1,000,000 units @ $0.37

Wooo Hooo.... Another big wave rider (retail holder), hopefully.

Regards,

Esq.


Yes, someone is very confident.

Money well invested.

The race is on for AI.

We will prevail. Many companies are investing big money into edge AI to gain an advantage, and we have more validation from partners.

The release of Gen 2 Akida is coming soon. Hopefully we also get a big contract announced to add more spark and put serious dynamite under the share price.

We are nearing the middle of Qtr 3. Time is closing in.

Nvidia reveals new A.I. chip, says costs of running LLMs will 'drop significantly'

Currently, Nvidia dominates the market for AI chips, with over 80% market share, according to some estimates.

Nvidia's GH200 has the same GPU as the H100, Nvidia's current highest-end AI chip, but pairs it with 141 gigabytes of cutting-edge memory, as well as a 72-core ARM central processor.

SIGGRAPH Special Address: NVIDIA CEO Brings Generative AI to LA Show

As generative AI continues to sweep an increasingly digital, hyperconnected world, NVIDIA founder and CEO Jensen Huang made a thunderous return to SIGGRAPH, the world’s premier computer graphics conference. “The generative AI era is upon us, the iPhone moment if you will,” Huang told an audience...

blogs.nvidia.com
