JDelekto


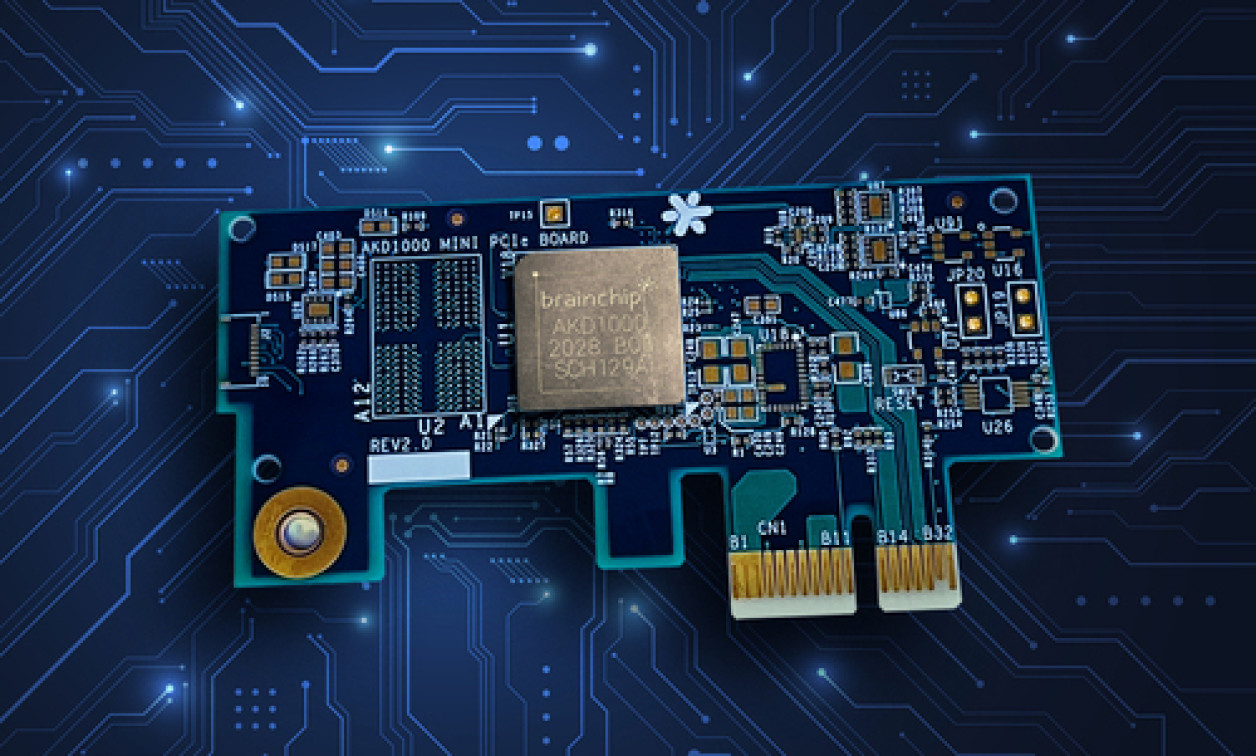

Something I've been thinking about is Neuralink. They made one of their rare updates on YouTube late last year. If ever there was a need for a cool-running, low-power edge chip, that would be it. BrainChip powering a human-computer interface device would make sense. I've heard Musk's Neuralink team develops its own hardware, but is there any inkling of interest from those quarters?

I was very interested in Neuralink; however, they seem to be getting a lot of negative press lately regarding the monkeys used in their experiments: "Elon Musk's brain chip firm denies animal cruelty claims."

While I haven't given much thought to any kind of Neuralink/BrainChip collaboration, what has interested me is research pairing BrainChip with existing BCI (brain-computer interface) devices, such as the Emotiv Insight, where BrainChip's on-chip learning identifies the various commands a wearer could issue to control IoT devices locally.

The BCI device is trained much like a speech-to-text (STT) engine: you "think" about certain commands, and the resulting EEG (electroencephalogram) readings, i.e. your brain activity, are recorded and used to "train" the software that does the control. You can then use the device to recognize those commands and issue them. It sounds like science fiction, but it's very impressive technology.
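To make the "record, then recognize" idea a bit more concrete, here's a minimal sketch in Python. It assumes each "thought" has already been reduced to a small numeric feature vector (the numbers below are made up), and it uses a simple nearest-centroid classifier as a stand-in. This is not the Emotiv SDK or BrainChip's Akida API, and the command names are hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical feature vectors: in a real system these might be derived from
# EEG channels (e.g. band power per electrode). Here they are plain float lists.

def train_centroids(samples):
    """Average the recorded examples for each command label into one centroid."""
    sums = {}
    counts = defaultdict(int)
    for label, features in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}

def classify(centroids, features):
    """Return the command whose centroid is closest by Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

# "Training" phase: the wearer thinks each command a few times (made-up data).
training = [
    ("lights_on", [0.9, 0.1, 0.2]),
    ("lights_on", [0.8, 0.2, 0.1]),
    ("lights_off", [0.1, 0.9, 0.3]),
    ("lights_off", [0.2, 0.8, 0.2]),
]
centroids = train_centroids(training)

# "Playback" phase: a new reading is matched to the nearest learned command.
print(classify(centroids, [0.85, 0.15, 0.2]))  # prints: lights_on
```

A real EEG pipeline would need filtering, feature extraction, and a far more robust model; nearest-centroid is used here only because it shows the train/recognize loop in a few lines.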

Imagine being able to put on a lightweight Bluetooth headset when you get home and use your Smart Home devices, just by thinking about it.

I think that in the future BrainChip will be very symbiotic with technologies like Neuralink, once those are more advanced and proven safe, as it could be a great solution for training artificial limbs, eyes, and ears while personalizing the experience to a particular individual's brain and nervous system.