uiux


https://arxiv.org/pdf/1611.01235.pdf

A Self-Driving Robot Using Deep Convolutional Neural Networks on Neuromorphic Hardware

Tiffany Hwu∗†, Jacob Isbell‡, Nicolas Oros§, and Jeffrey Krichmar∗¶

∗Department of Cognitive Sciences

University of California, Irvine

Irvine, California, USA, 92697

†Northrop Grumman

Redondo Beach, California, USA, 90278

‡Department of Electrical and Computer Engineering

University of Maryland

College Park, Maryland, USA, 20742

§BrainChip LLC

Aliso Viejo, California, USA, 92656

¶Department of Computer Sciences

University of California, Irvine

Irvine, California, USA, 92697

Abstract

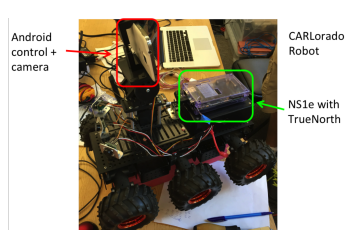

Neuromorphic computing is a promising solution for reducing the size, weight and power of mobile embedded systems. In this paper, we introduce a realization of such a system by creating the first closed-loop battery-powered communication system between an IBM TrueNorth NS1e and an autonomous Android-Based Robotics platform. Using this system, we constructed a dataset of path following behavior by manually driving the Android-Based robot along steep mountain trails and recording video frames from the camera mounted on the robot along with the corresponding motor commands. We used this dataset to train a deep convolutional neural network implemented on the TrueNorth NS1e. The NS1e, which was mounted on the robot and powered by the robot’s battery, resulted in a self-driving robot that could successfully traverse a steep mountain path in real time. To our knowledge, this represents the first time the TrueNorth NS1e neuromorphic chip has been embedded on a mobile platform under closed-loop control.
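The data-collection and training procedure in the abstract is a form of imitation learning (behavioral cloning): each recorded camera frame is labeled with the motor command the human driver issued, a classifier learns the frame-to-command mapping, and the trained classifier then closes the loop by issuing commands from live frames. The sketch below illustrates only that structure in plain Python. It swaps in a nearest-centroid classifier for the paper's deep convolutional network on TrueNorth, and the three command labels (`left`, `forward`, `right`), the flattened toy frames, and all function names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Sequence, Tuple

Frame = Sequence[float]           # flattened grayscale pixels (hypothetical format)
Centroids = Dict[str, List[float]]


def train_centroids(dataset: Iterable[Tuple[Frame, str]]) -> Centroids:
    """'Train' by averaging all frames recorded under each motor command."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for frame, command in dataset:
        total = sums.setdefault(command, [0.0] * len(frame))
        for i, px in enumerate(frame):
            total[i] += px
        counts[command] += 1
    return {cmd: [s / counts[cmd] for s in total] for cmd, total in sums.items()}


def predict(centroids: Centroids, frame: Frame) -> str:
    """Pick the command whose average frame is closest (squared Euclidean distance)."""
    def distance(cmd: str) -> float:
        return sum((c - px) ** 2 for c, px in zip(centroids[cmd], frame))
    return min(centroids, key=distance)


def drive(centroids: Centroids, camera: Iterable[Frame],
          send_motor_command: Callable[[str], None]) -> None:
    """Closed loop: classify each incoming frame and forward the command to the motors."""
    for frame in camera:
        send_motor_command(predict(centroids, frame))
```

For example, if each toy 3 x 3 frame has a bright trail column on the left, middle, or right, `predict` returns the command that was recorded with the most similar frames. Replacing the classifier (for instance with a CNN) would change only `train_centroids` and `predict`; the record-then-drive loop would stay the same.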

ACKNOWLEDGMENT

The authors would like to thank Andrew Cassidy and Rodrigo Alvarez-Icaza of IBM for their support. This work was supported by the National Science Foundation Award number 1302125 and Northrop Grumman Aerospace Systems. We also would like to thank the Telluride Neuromorphic Cognition Engineering Workshop, The Institute of Neuromorphic Engineering, and their National Science Foundation, DoD and Industrial Sponsors.

---

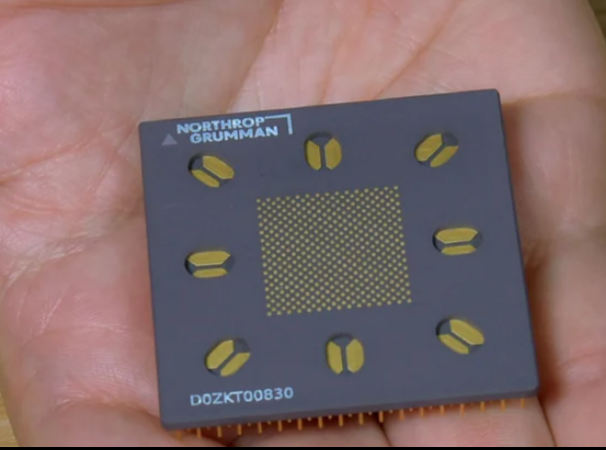

Superconducting neuromorphic core

NORTHROP GRUMMAN SYSTEMS CORPORATION

Abstract

A superconducting neuromorphic pipelined processor core can be used to build neural networks in hardware by providing the functionality of somas, axons, dendrites and synaptic connections. Each instance of the superconducting neuromorphic pipelined processor core can implement a programmable and scalable model of one or more biological neurons in superconducting hardware that is more efficient and biologically suggestive than existing designs. This core can be used to build a wide variety of large-scale neural networks in hardware. The biologically suggestive operation of the neuron core provides additional capabilities to the network that are difficult to implement in software-based neural networks and would be impractical using room-temperature semiconductor electronics. The superconductive electronics that make up the core enable it to perform more operations per second per watt than is possible in comparable state-of-the-art semiconductor-based designs.
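The abstract lists the biological functions the core provides (synaptic weighting, dendritic integration, somatic thresholding, and axonal spike output) without naming a neuron model. As a rough software analogue only, the sketch below implements a discrete-time leaky integrate-and-fire (LIF) neuron. The LIF model, the parameter names, and their default values are assumptions for illustration; the patent describes superconducting circuits, not this code.

```python
from typing import List, Sequence


def simulate_lif(
    spike_trains: Sequence[Sequence[int]],  # one 0/1 input train per synapse
    weights: Sequence[float],              # synaptic weight per input (hypothetical)
    leak: float = 0.9,                      # fraction of membrane potential kept each step
    threshold: float = 1.0,                 # soma firing threshold
    reset: float = 0.0,                     # membrane potential after an output spike
) -> List[int]:
    """Return the neuron's output (axon) spike train, one entry per time step."""
    if len(spike_trains) != len(weights):
        raise ValueError("need one weight per input spike train")
    steps = len(spike_trains[0]) if spike_trains else 0
    v = 0.0
    out: List[int] = []
    for t in range(steps):
        # Synapses and dendrites: sum the weighted spikes arriving at this step.
        current = sum(w * train[t] for w, train in zip(weights, spike_trains))
        # Soma: leaky integration of the input current.
        v = leak * v + current
        if v >= threshold:
            out.append(1)  # axon emits a spike
            v = reset
        else:
            out.append(0)
    return out
```

With two inputs weighted 0.6, one input alone needs two closely spaced spikes to reach threshold, while coincident spikes fire the neuron immediately; with `leak=1.0` the neuron becomes a perfect integrator and never forgets earlier input.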

www.frontiersin.org


BrainFreeze: Expanding the Capabilities of Neuromorphic Systems Using Mixed-Signal Superconducting Electronics

Superconducting electronics (SCE) is uniquely suited to implement neuromorphic systems. As a result, SCE has the potential to enable a new generation of neuromorphic architectures that can simultaneously provide scalability, programmability, biological fidelity, on-line learning support, efficiency and speed. Supporting all of these capabilities simultaneously has thus far proven to be difficult using existing semiconductor technologies. However, as the fields of computational neuroscience and artificial intelligence (AI) continue to advance, the need for architectures that can provide combinations of these capabilities will grow. In this paper, we will explain how superconducting electronics could be used to address this need by combining analog and digital SCE circuits to build large scale neuromorphic systems. In particular, we will show through detailed analysis that the available SCE technology is suitable for near term neuromorphic demonstrations. Furthermore, this analysis will establish that neuromorphic architectures built using SCE will have the potential to be significantly faster and more efficient than current approaches, all while supporting capabilities such as biologically suggestive neuron models and on-line learning. In the future, SCE-based neuromorphic systems could serve as experimental platforms supporting investigations that are not feasible with current approaches. Ultimately, these systems and the experiments that they support would enable the advancement of neuroscience and the development of more sophisticated AI.

The main contributions of this paper are:

• A description of a novel mixed-signal superconducting neuromorphic architecture

• A detailed analysis of the design trade-offs and feasibility of mixed-signal superconducting neuromorphic architectures

• A comparison of the proposed system with other state-of-the-art neuromorphic architectures

• A discussion of the potential of superconducting neuromorphic systems in terms of on-line learning support and large system scaling.

---

System and method for automating observe-orient-decide-act (OODA) loop enabling cognitive autonomous agent systems

NORTHROP GRUMMAN SYSTEMS CORPORATION

Abstract

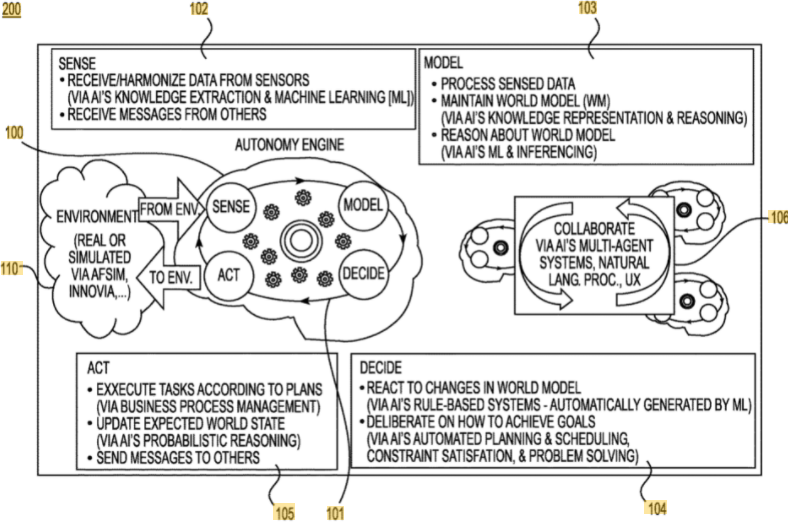

The disclosed invention provides a system and method for providing autonomous actions that are consistently applied to evolving missions in physical and virtual domains. The system includes an autonomy engine that is implanted in computing devices. The autonomy engine includes a sense component including one or more sensor drivers that are coupled to the one or more sensors, a model component including a world model, a decide component reacting to changes in the world model and generating a task based on the changes in the world model, and an act component receiving the task from the decide component and invoking actions based on the task. The sense component acquires data from the sensors and extracts knowledge from the acquired data. The model component receives the knowledge from the sense component, and creates or updates the world model based on the knowledge received from the sense component. The act component includes one or more actuator drivers to apply the invoked actions to the physical and virtual domains.

The sense component 102 includes sensor drivers 121 to control sensors 202 to monitor and acquire environmental data. The model component 103 includes domain-specific tools, lightweight, streaming, and neuromorphic tools for the Edge, common analytic toolkits, and a common open architecture. The model component 103 is configured to implement human interfaces and application programming interfaces. The model component 103 builds knowledge-based models (e.g., the World Model) that may include a mission model (goals, tasks, and plans), an environment model (including adversaries), a self-model (the agent's capabilities), and history. The model component 103 may also build machine-learning-based models that learn from experience.
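The sense, model, decide, act cycle described above corresponds to observe (sense), orient (model), decide, and act. The sketch below shows one cycle as a minimal Python loop, assuming that knowledge is a flat dictionary of sensor readings, that the world model simply stores the latest value per key, and that decide rules are plain functions returning a task name. All class, method, and parameter names are illustrative and are not taken from the patent.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Knowledge = Dict[str, object]


@dataclass
class WorldModel:
    facts: Knowledge = field(default_factory=dict)

    def update(self, knowledge: Knowledge) -> Knowledge:
        """Model step: merge new knowledge and return only the entries that changed."""
        changes = {k: v for k, v in knowledge.items() if self.facts.get(k) != v}
        self.facts.update(changes)
        return changes


Rule = Callable[[Knowledge, WorldModel], Optional[str]]


class AutonomyEngine:
    def __init__(self, sensor_drivers: Dict[str, Callable[[], object]],
                 rules: List[Rule],
                 actuator_drivers: Dict[str, Callable[[], None]]) -> None:
        self.sensor_drivers = sensor_drivers      # sensor name -> read function
        self.rules = rules                        # (changes, model) -> task name or None
        self.actuator_drivers = actuator_drivers  # task name -> action
        self.model = WorldModel()

    def sense(self) -> Knowledge:
        """Sense step: read every sensor (knowledge extraction is trivial here)."""
        return {name: read() for name, read in self.sensor_drivers.items()}

    def decide(self, changes: Knowledge) -> List[str]:
        """Decide step: react only to changes in the world model."""
        if not changes:
            return []
        tasks = (rule(changes, self.model) for rule in self.rules)
        return [task for task in tasks if task is not None]

    def act(self, tasks: List[str]) -> None:
        """Act step: hand each task to its actuator driver."""
        for task in tasks:
            self.actuator_drivers[task]()

    def step(self) -> List[str]:
        """Run one sense -> model -> decide -> act cycle and return the tasks issued."""
        changes = self.model.update(self.sense())
        tasks = self.decide(changes)
        self.act(tasks)
        return tasks
```

Because `decide` does nothing unless `WorldModel.update` reports a change, an unchanged world produces no new tasks, which mirrors the abstract's decide component "reacting to changes in the world model."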

---

Optical information collection system

NORTHROP GRUMMAN SYSTEMS CORPORATION

Abstract

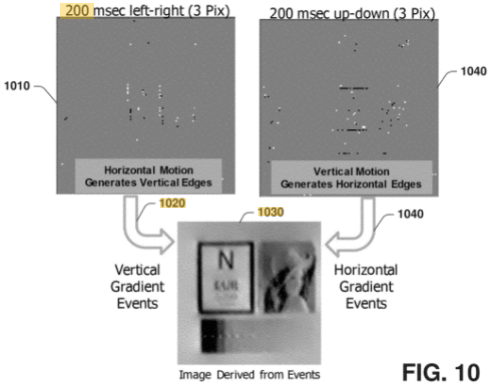

An optical information collection system includes a neuromorphic sensor to collect optical information from a scene in response to change in photon flux detected at a plurality of photoreceptors of the neuromorphic sensor. A sensor stimulator stimulates a subset of the plurality of photoreceptors according to an eye movement pattern in response to a control command. A controller generates the control command that includes instructions to execute the eye movement pattern.
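Because a neuromorphic sensor reports only changes in photon flux, a static scene viewed by a static sensor produces no output; moving the image across the photoreceptors, as the eye's small movements do, turns stationary edges into flux changes. The sketch below illustrates that idea in one dimension. It assumes an idealized sensor that emits an event wherever a pixel's intensity changes by more than a threshold between time steps, and it models the stimulation as shifting the scene by a hypothetical pattern of integer pixel offsets; it does not reproduce the patent's stimulator hardware.

```python
from typing import List, Sequence, Tuple

Event = Tuple[int, int]  # (pixel index, polarity: +1 brighter, -1 darker)


def shift(scene: Sequence[float], dx: int) -> List[float]:
    """Move the scene dx pixels across the sensor, repeating the border pixel."""
    n = len(scene)
    return [scene[min(max(i - dx, 0), n - 1)] for i in range(n)]


def events_between(prev: Sequence[float], curr: Sequence[float],
                   threshold: float = 0.1) -> List[Event]:
    """Idealized change detector: one event per pixel whose intensity changed enough."""
    events: List[Event] = []
    for i, (a, b) in enumerate(zip(prev, curr)):
        if b - a > threshold:
            events.append((i, +1))
        elif a - b > threshold:
            events.append((i, -1))
    return events


def observe(scene: Sequence[float], movement_pattern: Sequence[int],
            threshold: float = 0.1) -> List[List[Event]]:
    """Apply each offset of a (hypothetical) eye-movement pattern and collect events."""
    prev = list(scene)
    history: List[List[Event]] = []
    for dx in movement_pattern:
        curr = shift(scene, dx)  # offsets are relative to the scene's resting position
        history.append(events_between(prev, curr, threshold))
        prev = curr
    return history
```

For a dark-to-bright step edge, holding still yields no events, while a one-pixel jitter yields a darker event and then a brighter event at the edge pixel only; a uniform scene stays silent no matter how it is moved.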

---

DARPA Announces Research Teams to Develop Intelligent Event-Based Imagers

DARPA today announced that three teams of researchers led by Raytheon, BAE Systems, and Northrop Grumman have been selected to develop event-based infrared (IR) camera technologies under the Fast Event-based Neuromorphic Camera and Electronics (FENCE) program. Event-based – or neuromorphic – cameras are an emerging class of sensors with demonstrated advantages relative to traditional imagers. These advanced models operate asynchronously and only transmit information about pixels that have changed. This means they produce significantly less data and operate with much lower latency and power.
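To make the data-reduction claim concrete: a frame-based imager transmits every pixel on every frame, whereas an idealized event-based imager transmits only the pixels whose value changed. The sketch below counts both for one frame update, assuming a 1024 x 768 sensor and a hypothetical scene in which a small bright square on a dark background moves two pixels. Real event cameras also send a timestamp and polarity with each event, so bytes per event differ from bytes per pixel; this count ignores that.

```python
from typing import Set, Tuple

Pixel = Tuple[int, int]


def frame_values_sent(width: int, height: int, n_frames: int) -> int:
    """A conventional imager reads out every pixel on every frame."""
    return width * height * n_frames


def bright_square(x0: int, y0: int, size: int) -> Set[Pixel]:
    """Pixels lit by a square object on a dark background (hypothetical scene)."""
    return {(x, y) for x in range(x0, x0 + size) for y in range(y0, y0 + size)}


def events_sent(prev_lit: Set[Pixel], curr_lit: Set[Pixel]) -> int:
    """An idealized event camera reports only pixels that turned on or off."""
    return len(prev_lit ^ curr_lit)


if __name__ == "__main__":
    before = bright_square(100, 100, 10)
    after = bright_square(102, 100, 10)     # the object moved 2 pixels to the right
    print(frame_values_sent(1024, 768, 1))  # 786432 pixel values for the new frame
    print(events_sent(before, after))       # 40 events
```

Only the two columns the square vacated and the two it entered generate events, so the event count scales with how much of the scene changes rather than with sensor resolution.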

---

www.northropgrumman.com


Neuromorphic Cameras Provide a Vision of the Future

“Cameras we use today have an array of pixels: 1024 by 768,” explained Isidoros Doxas, an AI Systems Architect at Northrop Grumman. “And each pixel essentially measures the amount of light or number of photons falling on it. That number is called the flux. Now, if you display the same numbers on a screen, you will see the same image that fell on your camera.”

By contrast, neuromorphic cameras only report changes in flux. If the rate of photons falling on a pixel doesn’t change, they report nothing.

“If a constant 1,000 photons per second is falling on a pixel, it basically says, ‘I’m good, nothing happened.’ But, if at some point there are now 1,100 photons per second falling on the pixel, it will report that change in flux,” Doxas said.

“Surprisingly, this is exactly how the human eye works,” he added. “You may think that your eye reports the image that you see. But it doesn’t. All that stuff is in your head. All the eye reports are little blips saying ‘up’ or ‘down.’ The image we perceive is built by our brains.”
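Doxas's description matches the common idealized model of an event-camera pixel. The pixel remembers a reference level and emits an "up" (ON) or "down" (OFF) event when the logarithm of the incoming flux moves more than a contrast threshold away from that reference, then resets the reference to the new level. The sketch below implements that model for a single pixel. The 0.05 log-unit threshold is an arbitrary assumption, chosen so that his 1,000 to 1,100 photons-per-second example (a log change of about 0.095) produces an event.

```python
import math
from typing import List, Sequence, Tuple


def pixel_events(flux: Sequence[float], threshold: float = 0.05) -> List[Tuple[int, str]]:
    """Return (time_step, 'up' | 'down') events for one pixel's flux samples.

    flux: photons per second at each time step (must be positive).
    threshold: contrast threshold in natural-log units (assumed value).
    """
    if not flux:
        return []
    ref = math.log(flux[0])  # reference level, set from the first sample
    events: List[Tuple[int, str]] = []
    for t in range(1, len(flux)):
        level = math.log(flux[t])
        if level - ref > threshold:
            events.append((t, "up"))    # flux rose enough: report "up"
            ref = level
        elif ref - level > threshold:
            events.append((t, "down"))  # flux fell enough: report "down"
            ref = level
        # otherwise: "I'm good, nothing happened" (no output)
    return events
```

A constant 1,000 photons per second produces no events, a step to 1,100 produces one "up", and the return to 1,000 produces one "down". Using the logarithm makes the response depend on relative rather than absolute change, so the same 10% step triggers an event in dim and bright light alike.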

---

US11157804B2 - Superconducting neuromorphic core - Google Patents

Frontiers | BrainFreeze: Expanding the Capabilities of Neuromorphic Systems Using Mixed-Signal Superconducting Electronics

US20210390432A1 - System and method for automating observe-orient-decide-act (ooda) loop enabling cognitive autonomous agent systems - Google Patents

US20200249080A1 - Optical information collection system - Google Patents

Neuromorphic Cameras Provide a Vision of the Future | Northrop Grumman

From enhanced battlefield protection systems to maintaining aerial drone delivery fleets, neuromorphic cameras hold promise for the future.
