uiux

Regular

On the Two-View Geometry of Unsynchronized Cameras

Abstract

We present new methods for simultaneously estimating camera geometry and time shift from video sequences from multiple unsynchronized cameras. Algorithms for simultaneous computation of a fundamental matrix or a homography with unknown time shift between images are developed. Our methods use minimal correspondence sets (eight for fundamental matrix and four and a half for homography) and therefore are suitable for robust estimation using RANSAC. Furthermore, we present an iterative algorithm that extends the applicability to sequences which are significantly unsynchronized, finding the correct time shift up to several seconds. We evaluated the methods on synthetic data and a wide range of real-world datasets, and the results show a broad applicability to the problem of camera synchronization.
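To make the idea of an epipolar constraint with an unknown time shift concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the authors' algorithm: the abstract does not give the motion model, so the sketch assumes that a point in the second view moves with a constant image-plane velocity `v2` over the shift `beta`, and it assumes the fundamental matrix `F` is already known, whereas the paper estimates both jointly. The function names are hypothetical.

```python
def shifted_epipolar_residual(F, x1, x2, v2, beta):
    """Epipolar residual (x2 + beta * v2)^T F x1 in homogeneous coordinates.

    F    : 3x3 fundamental matrix as nested lists (assumed known here)
    x1   : (x, y) point in the first view
    x2   : (x, y) point observed in the second view at a shifted time
    v2   : (vx, vy) assumed constant image velocity of x2 per time unit
    beta : candidate time shift
    """
    x1h = (x1[0], x1[1], 1.0)
    # Move the second-view point along its assumed linear trajectory.
    x2h = (x2[0] + beta * v2[0], x2[1] + beta * v2[1], 1.0)
    Fx1 = [sum(F[r][c] * x1h[c] for c in range(3)) for r in range(3)]
    return sum(x2h[r] * Fx1[r] for r in range(3))


def solve_beta(F, x1, x2, v2):
    """Solve for beta from one correspondence when F is known.

    Under the linear motion assumption the residual is linear in beta,
    so two evaluations determine its root.
    """
    r0 = shifted_epipolar_residual(F, x1, x2, v2, 0.0)
    r1 = shifted_epipolar_residual(F, x1, x2, v2, 1.0)
    slope = r1 - r0
    if slope == 0:
        raise ValueError("motion is parallel to the epipolar line; beta is unobservable")
    return -r0 / slope
```

With the fundamental matrix of a rectified stereo pair, `F = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]`, the residual reduces to the row difference between the two points, so a point observed too early or too late violates the constraint until it is moved back along its trajectory by the correct shift.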

Authors and associations to note:

Jan Heller

CTO of Magik-Eye

Researcher in the field of artificial intelligence with expertise in computer vision, robotics, and camera calibration.

Tomas Pajdla

Scientific Advisor at Magik-Eye

Assistant Professor, Czech Technical University, Department of Cybernetics

Distinguished Researcher for Center of Machine Perception

Co-Founder, Neovision

Andrew Fitzgibbon

HoloLens, Microsoft

Cambridge

---

Magik Eye Inc.

The Magik Eye sensor is revolutionizing how machines see the world. We’ve put together a team of leading experts in optical science and computer vision to reinvent 3D vision, making it more performant, more reliable, and much less expensive.

Breakthrough of Optics and Mathematics

Invertible Light™ is in stark contrast to current 3D sensing methods. For example, Structured Light requires the projection of a specific or random pattern to measure distortions. The result is significant power, multiple components and complexity of production. In contrast to the Time of Flight (ToF) method that is good for longer distances, Invertible Light™ has greater visibility in shorter distances with similar advantages of size, speed and power-efficiency.

US10535151B2 - Depth map with structured and flood light - Google Patents

Current Assignee Microsoft Technology Licensing LLC

Abstract

A method including receiving an image of a scene illuminated by both a predetermined structured light pattern and a flood fill illumination, generating an active brightness image of the scene based on the received image of the scene including detecting a plurality of dots of the predetermined structured light pattern, and removing the plurality of dots of the predetermined structured light pattern from the active brightness image, and generating a depth map of the scene based on the received image and the active brightness image.
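The dot-removal step in this abstract can be pictured with a small Python sketch. This is only a naive illustration and not the patented method: it assumes the image is a grayscale grid stored as nested lists, treats any pixel that exceeds the median of its neighbours by more than a hypothetical `threshold` as a structured-light dot, and replaces it with that median.

```python
from statistics import median


def remove_dots(image, threshold):
    """Return a copy of `image` with bright isolated dots suppressed.

    A pixel counts as a dot when it is brighter than the median of its
    (up to eight) neighbours by more than `threshold`; such pixels are
    replaced by that neighbourhood median.
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(h):
        for x in range(w):
            neighbours = [
                image[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
                if (j, i) != (y, x)
            ]
            local = median(neighbours)
            if image[y][x] - local > threshold:
                out[y][x] = local
    return out
```

The output stands in for the dot-free active brightness image; how the depth map is then computed from the two images is not described in enough detail here to sketch.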

---

BrainChip on LinkedIn: The Akida NSoC represents a revolutionary new breed of Neural Processing…

The Akida NSoC represents a revolutionary new breed of Neural Processing computing devices for Edge AI devices and systems. Learn more: https://lnkd.in/gdmimtU

---

M'soft Gives Deeper Look into HoloLens - EPS News

by SANTA CLARA, Calif. — A computer vision specialist from Microsoft gave a deep dive into the challenges and opportunities of its HoloLens in a keynote at the Embedded Vision Summit here. His talk sketched out several areas where such augmented reality products still need work to live up to...

Sensor/processor integration needed

The HoloLens “will be the next generation of personal computing devices with more context” about its users and their environments than today’s PCs and smartphones, said Marc Pollefeys, an algorithm expert who runs a computer vision lab at ETH Zurich and joined the HoloLens project in July as director of science.

It could take a decade to get to the form factor of Google Glass, Pollefeys said. “There are new display and sensing technologies coming … and a lot of trade-offs in how much processing we ship off [the headset to the cloud, and] we are going from many components to SoCs.” One of the team’s biggest silicon challenges is to render “the full region and resolution that the eye can see in a lightweight platform.”

“Most of the energy is spent moving bits around … so it would seem natural that … the first layers of processing should happen in the sensor,” Pollefeys told EE Times in a brief interview after his talk. “I’m following the neuromorphic work that promises very power-efficient systems with layers of processing in the sensor — that’s a direction where we need a lot of innovation — it’s the only way to get a device that’s not heavier than glasses and can do tracking all day.”

---

Mercedes-Benz and Microsoft HoloLens 2 show off augmented reality's impact in the automotive space

For customers that bring in a hard-to-solve problem, it was common for Mercedes-Benz to call in a flying doctor, an expert from HQ that would fly in...

www.techspot.com

Mercedes-Benz is infusing its dealerships with AR technology to speed up the diagnosis and repair of tricky and complex issues with its Virtual Remote Support, powered by Microsoft's HoloLens 2 and Dynamics 365 Remote Assist

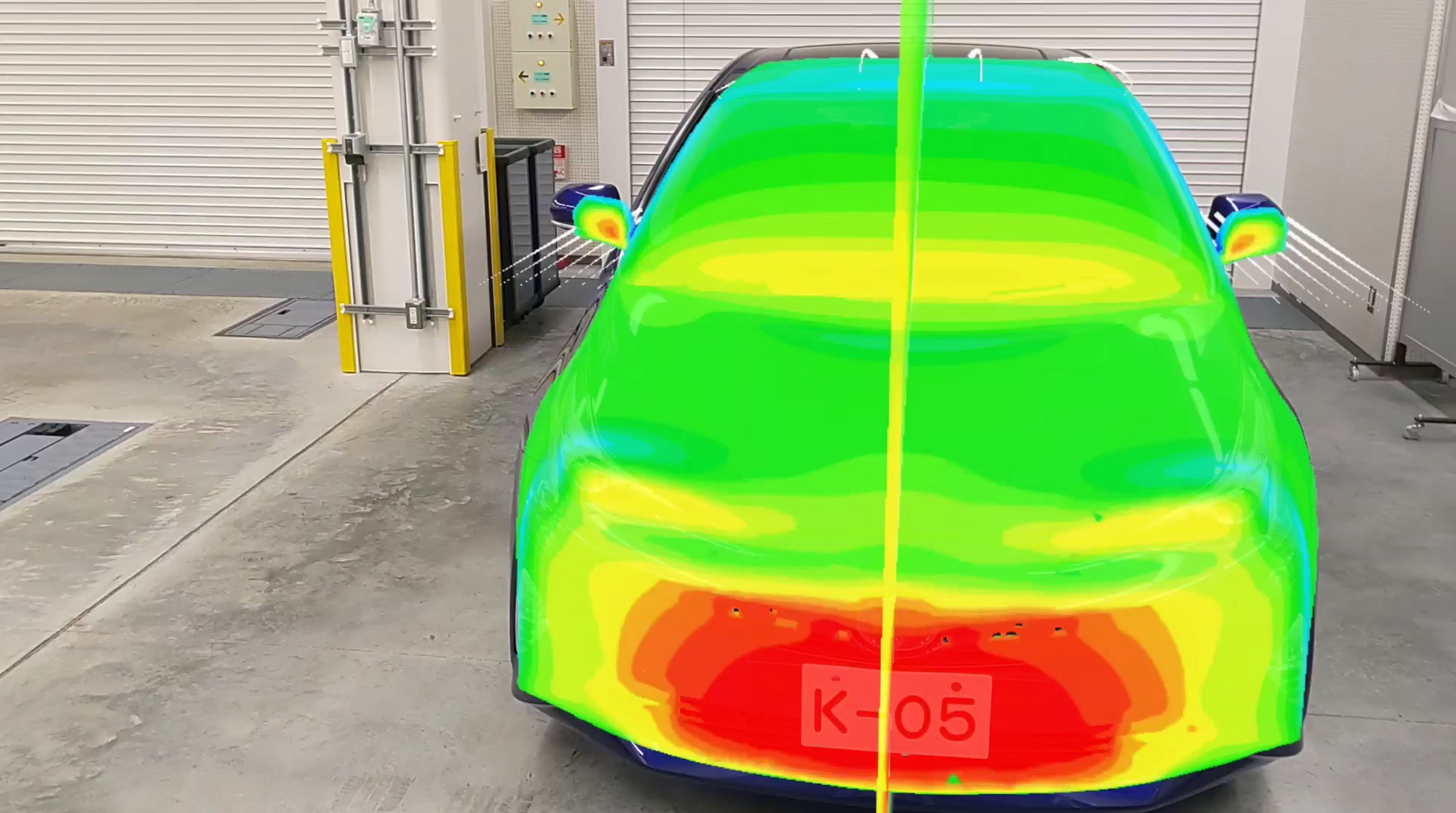

Toyota makes mixed reality magic with Unity and Microsoft HoloLens 2

Learn how Unity and HoloLens 2 have become essential tools at the world’s largest automaker to streamline processes, increase understanding, and save time.

Toyota has used Unity to create and deploy mixed reality applications to Microsoft’s revolutionary device across its automotive production process. Naturally, its team was eager to expand their mixed reality capabilities with HoloLens 2, the next generation of Microsoft’s wearable holographic computer.

---

Army moves Microsoft HoloLens-based headset from prototyping to production phase - The Official Microsoft Blog

Earlier today, the United States Army announced that it will work with Microsoft on the production phase of the Integrated Visual Augmentation System (IVAS) program as it moves from rapid prototyping to production and rapid fielding. The IVAS headset, based on HoloLens and augmented by Microsoft...

The IVAS headset, based on HoloLens and augmented by Microsoft Azure cloud services, delivers a platform that will keep Soldiers safer and make them more effective. The program delivers enhanced situational awareness, enabling information sharing and decision-making in a variety of scenarios. Microsoft has worked closely with the U.S. Army over the past two years, and together we pioneered Soldier Centered Design to enable rapid prototyping for a product to provide Soldiers with the tools and capabilities necessary to achieve their mission.

Samsung Working With US Military On 5G AR Testing

While 5G may be fast, unique applications for the technology have been relatively slow in coming. A new test bed that Samsung and GBL Systems are starting to deploy with the US DoD, however, is starting to illustrate the potential of the technology.

www.forbes.com

This week Samsung, both the Networks division and its Electronics group, along with defense contractor GBL Systems, announced the initial deployment of a new 5G-powered test bed for augmented reality (AR) applications. Part of a larger $600 million 5G testing-focused initiative that the Department of Defense announced in October of 2020, this test bed is one of the US military’s numerous efforts to leverage advanced connectivity technologies to improve its training efforts and operational effectiveness.

Microsoft's multi-billion dollar deal with US defense for HoloLens is still on

mspoweruser.com

Since 2018 Microsoft has been developing a version of the HoloLens 2 specifically for the US military. Called Integrated Visual Augmentation System (IVAS), the device replaced the Army’s own Heads-Up Display 3.0 effort to develop a sophisticated situational awareness tool soldiers can use to view key tactical information before their eyes. Early this year, Microsoft won a contract to deliver 120,000 military-adapted HoloLens augmented reality headsets worth as much as $21.88 billion over 10 years.

New US Army Military-Grade HoloLens 2 Imagery Gives Us Star Wars Stormtrooper Vibes

It's all fun and games until the technology is actually put into use and you realize augmented reality is now part of Death Star. That's what some AR insiders may be thinking once they get a look at the latest modified HoloLens 2 images released by the US Army.

Looking at some of these new images, the modded HoloLens 2 gives soldiers a bit of a Star Wars Stormtrooper look, which will be exciting for some, assuming you're not on the wrong end of the AR lens during battle. It's one thing to see a single soldier wearing the device, but being presented with an entire team of AR helmet-equipped flying soldiers begins to look a lot like science fiction.

Why Microsoft Won The $22 Billion Army Hololens 2 AR Deal

Analyst Anshel Sag analyzes Microsoft's $22 billion win with the U.S. Army for AR headsets and technology.

www.forbes.com

Since the first Hololens launched in 2016 with the Development Edition, Microsoft has been hard at work building out its Mixed Reality platform. That same year, Microsoft also announced its Mixed Reality VR headsets for Windows. As some may remember, 2016 was a peak hype year for VR. The momentum behind the technology allowed Microsoft to establish a comprehensive understanding of the needs of AR and VR, and develop APIs for developers accordingly. Last week, Microsoft announced the closure of a $22 billion deal with the Army for AR headsets, software and services. Let’s take a look at the deal and what it means for Microsoft and the industry at large.

US Army is deploying Microsoft HoloLens-based headsets in a $21.88 billion deal

The hardware is moving to production phase.

Microsoft and the US Army have announced that augmented reality headsets based on the HoloLens 2 will enter production, finalizing a prototype that has been in development since 2018. The new contract is significantly larger than the 2018 deal, providing for 120,000 headsets according to a CNBC report. The contract could be as large as $21.88 billion over 10 years.

---

Unrelated but interesting research:

(PDF) The Next SoC Design: Neuromorphic Smart Glasses

As Moore’s Law quickly approaches its physical limit, system-on-chip (SoC) architects are beginning to explore new design paradigms, such as the integration of various heterogeneous hardware accelerators that improve the energy efficiency of new SoCs. This is especially true for low-power spiking neural network (SNN) accelerators, which are beginning to see their debut in commercial neuromorphic SoCs. This paper overviews the progression of neuromorphic SoC hardware and wearable computing over the past 20 years. We argue that SNN accelerators will be an important aspect of emerging smartglasses hardware due to their energy efficiency and we propose a novel smartglasses SoC design.